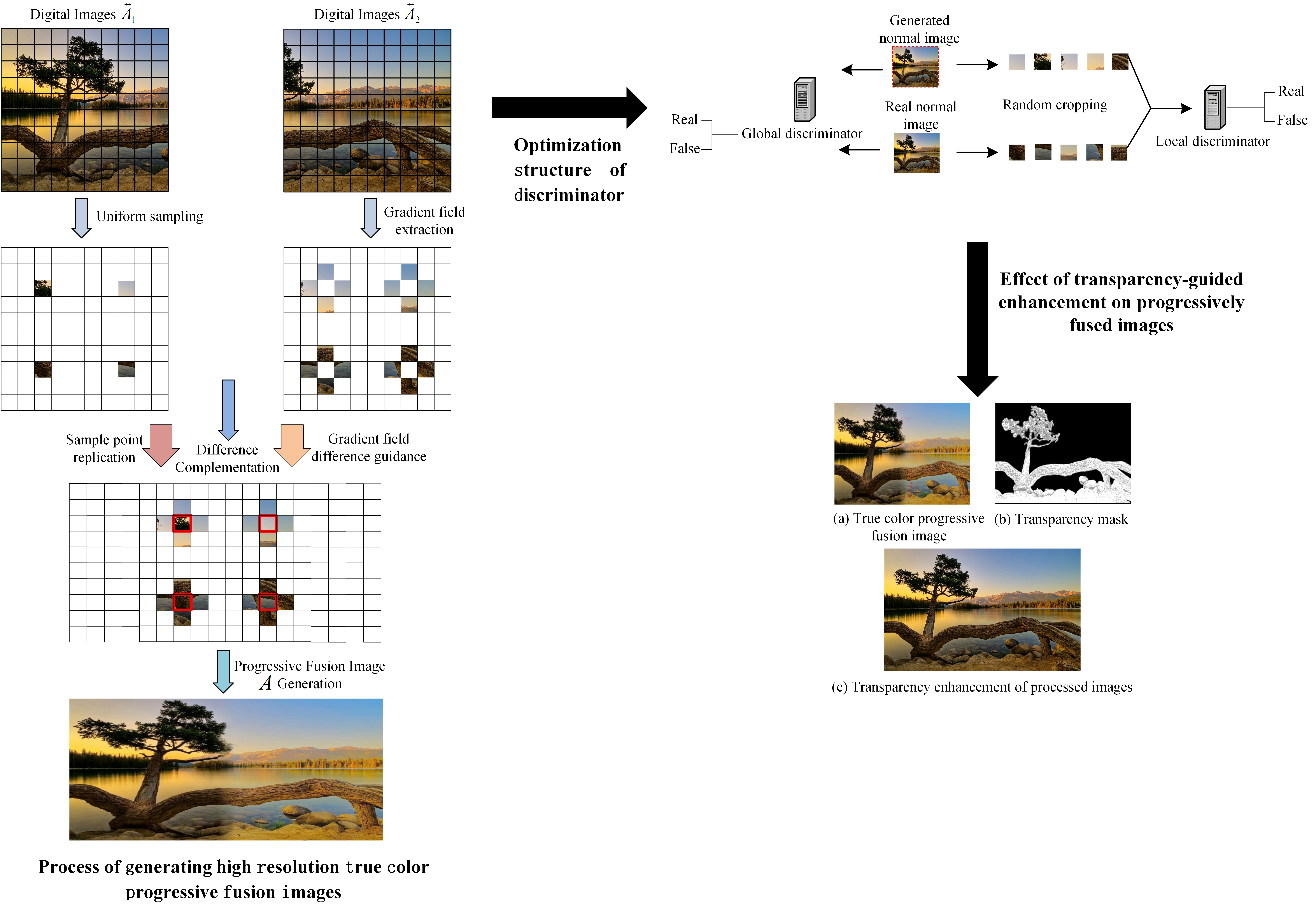

The progressive fusion algorithm enhances image boundary smoothness, preserves details, and improves visual harmony. However, problems with multi-scale fusion and improper color space conversion can blur details and distort colors, so the output falls short of modern image processing standards for high-quality results. Therefore, a transparency-guided enhancement algorithm for progressively fused images based on generative adversarial learning is proposed. The method combines the wavelet transform with gradient field fusion to enhance image details, preserve spectral features, and generate high-resolution true-color fused images. It extracts the mean, standard deviation, and smoothness features of the image and feeds them, together with the original image, into a generative adversarial network. The optimized network design introduces global context, transparency mask prediction, and a dual-discriminator structure to enhance the transparency of progressively fused images. In the experiments, the proposed method achieved an information entropy of 7.638, a blind image quality index of 24.331, a natural image quality evaluator (NIQE) value of 3.611, and a processing time of 0.036 s. The method performed well on all evaluation indices, effectively restoring detail information and spatial color while avoiding artifacts, and the processed images were of high quality with details fully preserved.
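The abstract names mean, standard deviation, and smoothness as input features and reports information entropy, but gives no formulas. The sketch below assumes the common textbook definitions for an 8-bit grayscale image: smoothness R = 1 - 1/(1 + σ²) with the variance normalized by (L - 1)², and Shannon entropy of the gray-level histogram in bits. The helper name `gray_stats` is hypothetical.

```python
import math
from collections import Counter


def gray_stats(pixels, levels=256):
    """Return (mean, std, smoothness, entropy) for a flat list of gray values.

    Hypothetical helper: smoothness and entropy follow common textbook
    definitions, not formulas confirmed by the paper.
    """
    n = len(pixels)
    if n == 0:
        raise ValueError("empty image")
    mean = sum(pixels) / n
    var = sum((p - mean) ** 2 for p in pixels) / n
    std = math.sqrt(var)
    # Smoothness R = 1 - 1/(1 + var_norm): 0 for a constant region and
    # increasing with the variance, normalized by (levels - 1)^2.
    var_norm = var / (levels - 1) ** 2
    smoothness = 1 - 1 / (1 + var_norm)
    # Shannon entropy (bits) of the normalized gray-level histogram.
    counts = Counter(pixels)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return mean, std, smoothness, entropy
```

For 8-bit images the entropy is bounded above by 8 bits (reached by a uniform histogram), which puts the reported value of 7.638 in context.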

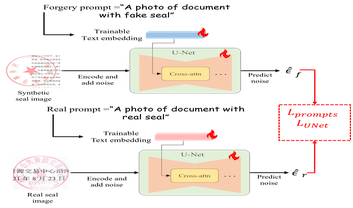

Seal-related tasks in document processing—such as seal segmentation, authenticity verification, seal removal, and text recognition under seals—hold substantial commercial importance. However, progress in these areas has been hindered by the scarcity of labeled document seal datasets, which are essential for supervised learning. To address this limitation, we propose Seal2Real, a novel generative framework designed to synthesize large-scale labeled document seal data. As part of this work, we also present Seal-DB, a comprehensive dataset containing 20,000 labeled images to support seal-related research. Seal2Real introduces a prompt prior learning architecture built upon a pretrained Stable Diffusion model, effectively transferring its generative capability to the unsupervised domain of seal image synthesis. By producing highly realistic synthetic seal images, Seal2Real significantly enhances the performance of downstream seal-related tasks on real-world data. Experimental evaluations on the Seal-DB dataset demonstrate the effectiveness and practical value of the proposed framework.

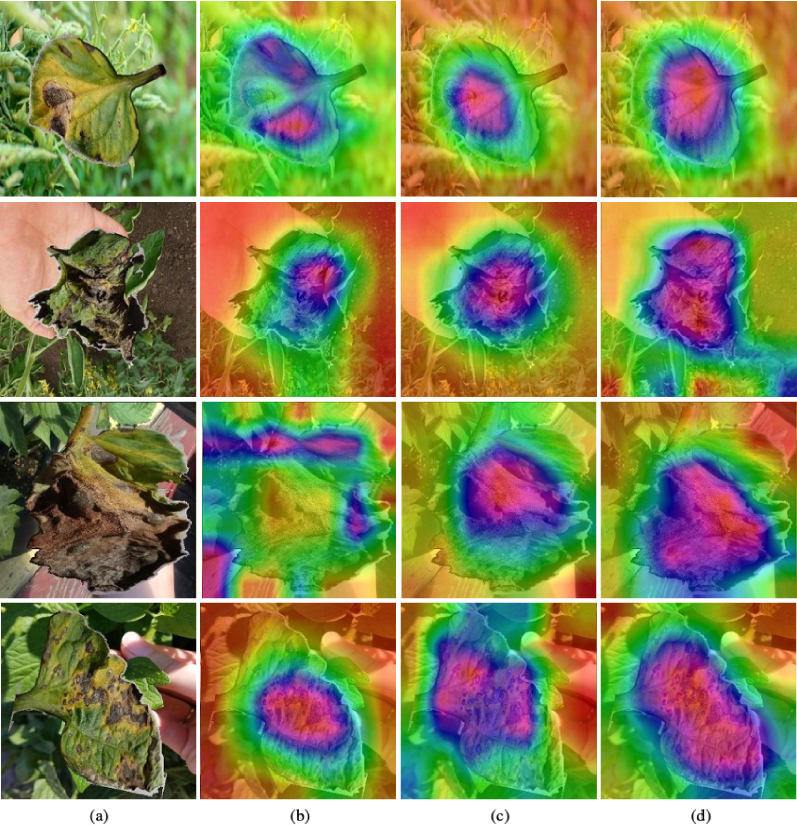

The tomato leaf is an important organ that reflects the health and growth of tomato plants, and early detection of leaf diseases is crucial to both crop yield and farmers' income. However, disease symptoms can be distributed over the whole leaf, and different diseases often differ only in fine-grained details, which makes accurate detection challenging. To tackle these obstacles, we propose an accurate tomato leaf disease identification method based on an improved Swin Transformer. The method consists of three parts: the Swin Transformer backbone, a Local Feature Perception (LFP) module, and a Spatial Texture Attention (STA) module. The backbone models long-range dependencies of leaf diseases to obtain representative features, while the LFP module adopts a multi-scale aggregation strategy to strengthen the local feature extraction of the Swin Transformer. Moreover, the STA module integrates hierarchical features from different stages of the Swin Transformer to capture fine-grained features for the classification head and boost overall performance. Extensive experiments on the public LBFtomato dataset demonstrate the superior performance of the proposed method, which achieves 99.28% accuracy, 99.07% precision, 99.36% recall, and a 99.24% F1-score.
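The abstract does not state how the multi-class precision, recall, and F1-score are averaged. The sketch below assumes macro averaging over a confusion matrix. Under that scheme the F1-score is the mean of per-class F1 values, so it need not equal 2PR/(P + R) computed from the averaged precision and recall. The helper name `macro_metrics` is hypothetical.

```python
def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall, and F1.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))
    precisions, recalls, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, truly other
        fn = sum(cm[c]) - tp                       # truly c, predicted other
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / k,
        "recall": sum(recalls) / k,
        "f1": sum(f1s) / k,  # mean of per-class F1, not F1 of the means
    }
```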

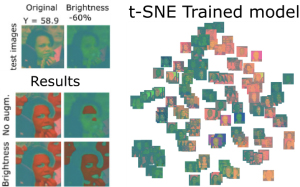

Recent advances in artificial intelligence have significantly impacted many color imaging applications, including skin segmentation and enhancement. Although state-of-the-art methods emphasize geometric image augmentations to improve model performance, the role of color-based augmentations and color spaces in skin segmentation accuracy remains underexplored. This study addresses this gap by systematically evaluating the impact of various color-based image augmentations and color spaces on skin segmentation models based on convolutional neural networks (CNNs). We investigate the effects of color transformations, including brightness, contrast, and saturation adjustments, in three color spaces: sRGB, YCbCr, and CIELab. As a representative CNN, an existing semantic segmentation model is trained with these color augmentations on a custom dataset of 900 images with annotated skin masks covering diverse skin tones and lighting conditions. Our findings reveal that current training practices, which focus mainly on geometric augmentations and rely primarily on a single color augmentation in the sRGB space, limit model generalization in color-related applications such as skin segmentation. Models trained with a greater variety of color augmentations segment skin better, particularly under overexposure and underexposure. Additionally, when combined with color augmentation, models trained in YCbCr outperform those trained in sRGB, while CIELab yields performance comparable to sRGB. We also observe significant performance discrepancies across skin tones, which points to the difficulty of achieving consistent segmentation under varying lighting. Overall, this study exposes gaps in existing image augmentation approaches and provides insights into how color augmentations and color spaces affect the accuracy and inclusivity of skin segmentation models.
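As a minimal sketch of the two ingredients studied here, the code below converts an 8-bit sRGB pixel to YCbCr and applies brightness, contrast, and saturation factors. It assumes the full-range BT.601 (JPEG/JFIF) YCbCr variant, since the abstract does not say which one was used, and the jitter semantics (a factor of 1.0 means no change, contrast relative to the image's mean luma, saturation relative to each pixel's luma) are in the spirit of common augmentation libraries rather than the paper's exact settings. All function names are hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JPEG/JFIF) conversion of one 8-bit sRGB pixel."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def _clamp(v):
    return max(0.0, min(255.0, v))


def _luma(p):
    return 0.299 * p[0] + 0.587 * p[1] + 0.114 * p[2]


def color_jitter(pixels, brightness=1.0, contrast=1.0, saturation=1.0):
    """Apply brightness, contrast, and saturation factors to (R, G, B) pixels.

    A factor of 1.0 leaves the image unchanged. Hypothetical helper.
    """
    # Brightness: scale every channel.
    out = [tuple(_clamp(c * brightness) for c in p) for p in pixels]
    # Contrast: push channels toward or away from the image's mean luma.
    m = sum(_luma(p) for p in out) / len(out)
    out = [tuple(_clamp(m + contrast * (c - m)) for c in p) for p in out]
    # Saturation: push channels toward or away from each pixel's own luma.
    out = [tuple(_clamp(_luma(p) + saturation * (c - _luma(p))) for c in p)
           for p in out]
    return out
```

To train "in YCbCr", the jittered pixels would then be passed through `rgb_to_ycbcr` before reaching the network.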

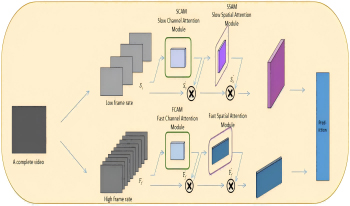

With the explosive growth of video, image, and other data in modern life, using computers to classify and analyze human actions automatically and efficiently has become increasingly important. Action recognition, the problem of perceiving and understanding the behavioral state of objects in a dynamic scene, is a fundamental and important task in computer vision. However, videos that contain multiple objects or are shot from irregular angles pose a significant challenge for existing action recognition algorithms. To address these problems, the authors propose a novel deep-learning-based method called SlowFast-Convolutional Block Attention Module (SlowFast-CBAM). Specifically, the training dataset is preprocessed with the YOLOX network, and individual frames of the action videos are fed separately into the slow and fast pathways. CBAM is then incorporated into both pathways to highlight features and dynamics in the surrounding environment. By linking the convolutional attention mechanism with the SlowFast network, the model can focus on the distinguishing features of objects and behaviors that appear before and after different actions, enabling both action detection and performer recognition. Experimental results demonstrate that this approach better emphasizes the features of action performers, leading to more accurate action labeling and higher action recognition accuracy.
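The abstract does not give the frame rates of the two pathways. The sketch below assumes the sampling scheme of the original SlowFast design, in which the slow pathway takes every tau-th frame and the fast pathway samples alpha times more densely; the defaults tau = 16 and alpha = 8 are assumptions, and the helper `slowfast_indices` is hypothetical. YOLOX preprocessing and the CBAM blocks are not shown.

```python
def slowfast_indices(num_frames, tau=16, alpha=8):
    """Frame indices for the slow and fast pathways of one clip.

    tau: temporal stride of the slow pathway. The fast pathway samples
    alpha times more densely, i.e. with stride tau // alpha.
    """
    if tau % alpha:
        raise ValueError("tau must be divisible by alpha")
    slow = list(range(0, num_frames, tau))          # sparse: appearance/semantics
    fast = list(range(0, num_frames, tau // alpha))  # dense: motion
    return slow, fast
```

For a 64-frame clip with the default values, the slow pathway sees frames 0, 16, 32, and 48, while the fast pathway sees every second frame.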

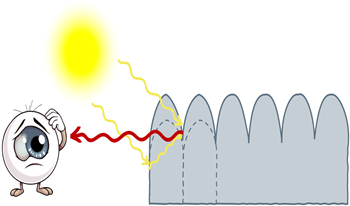

A recent work proposed a methodology to effectively enhance or suppress perceived bumpiness in digital images. We hypothesized that this manipulation may also affect perceived translucency, because it alters image cues that are similar to those associated with translucency. In this work, we test this hypothesis experimentally. Through psychophysical experiments, we found a correlation between perceived bumpiness and perceived translucency in the processed images. This not only has implications for digitally editing the bumpiness of a given material but also means that the method could serve as a translucency editing tool, provided that it does not produce artifacts. To check this, we evaluated the naturalness and quality of the processed images using a subjective user study and objective image quality metrics, respectively.
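The abstract does not name the correlation coefficient used. As a minimal sketch, assuming Pearson's r computed over per-image mean ratings of bumpiness and translucency, the calculation looks like this; for ordinal rating scales, Spearman's rank correlation (Pearson's r on ranks) is a common alternative. The helper name `pearson_r` is hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equally long lists of ratings."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("ratings must not be constant")
    return sxy / math.sqrt(sxx * syy)
```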

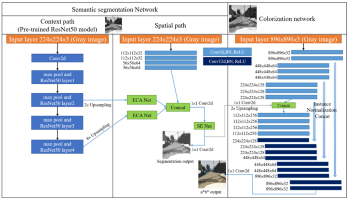

A fully automated colorization model that integrates image segmentation features to enhance both the accuracy and diversity of colorization is proposed. The model employs a multipath architecture in which each path addresses a specific objective in processing the grayscale input image. The context path uses a pretrained ResNet50 model to identify object classes, while the spatial path determines the locations of these objects. ResNet50 is a 50-layer deep convolutional neural network (CNN) that uses skip connections to address the challenges of training deep models, and it is widely applied in image classification and feature extraction. The outputs of both paths are then fused and fed into the colorization network to represent image structures precisely and to prevent color from spilling across object boundaries. The colorization network is designed to handle high-resolution inputs, enabling accurate colorization of small objects and enhancing overall color diversity. The proposed model performs robustly even when trained on small datasets. Comparative evaluations against CNN-based and diffusion-based colorization approaches show that the proposed model significantly improves colorization quality.
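The abstract says only that the outputs of the context and spatial paths are fused. A minimal sketch, assuming the simplest fusion: upsample the low-resolution context features (a ResNet50 backbone reduces spatial resolution by up to 32x) to the spatial path's resolution with nearest-neighbour interpolation, then concatenate along the channel axis. Feature maps are nested lists of shape C x H x W, and the helper names are hypothetical; learned fusion modules typically add convolution and attention on top of this.

```python
def upsample_nearest(fmap, factor):
    """Nearest-neighbour upsampling of a C x H x W feature map (nested lists)."""
    return [
        [[row[x // factor] for x in range(len(row) * factor)]
         for row in channel for _ in range(factor)]  # repeat each row
        for channel in fmap
    ]


def fuse_paths(context, spatial):
    """Upsample context features and concatenate them with spatial features."""
    h_factor = len(spatial[0]) // len(context[0])
    w_factor = len(spatial[0][0]) // len(context[0][0])
    if h_factor != w_factor:
        raise ValueError("height and width scale factors must match")
    up = upsample_nearest(context, h_factor)
    if len(up[0]) != len(spatial[0]) or len(up[0][0]) != len(spatial[0][0]):
        raise ValueError("feature maps cannot be aligned")
    # Channel concatenation: context channels first, then spatial channels.
    return up + spatial
```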

In recent years, deep learning has achieved excellent results in many applications across various fields. However, as deep learning models grow in scale, their training time also increases dramatically. Furthermore, hyperparameters strongly influence training results, so selecting them efficiently is essential. In this study, the orthogonal array of the Taguchi method is used to find the best experimental combination of hyperparameters. Three hyperparameters of the You Only Look Once version 3 (YOLOv3) detector and five data augmentation hyperparameters serve as the control factors of the Taguchi method, and the results are analyzed with the traditional signal-to-noise ratio (S/N ratio) under the larger-the-better (LB) characteristic.
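The larger-the-better S/N ratio is S/N = -10 log10((1/n) Σ 1/y_i²), so a higher response y (here, detection accuracy) gives a higher S/N. The sketch below computes it and picks, for each factor, the level with the highest mean S/N (a main-effects analysis). The abstract does not say which orthogonal array was used for its eight factors, so the small L4(2³) array here, with three two-level factors, is only an illustration; the helper names are hypothetical.

```python
import math

# Standard L4(2^3) orthogonal array: 4 runs, 3 two-level factors.
L4_ARRAY = [(1, 1, 1), (1, 2, 2), (2, 1, 2), (2, 2, 1)]


def sn_larger_the_better(ys):
    """Taguchi S/N ratio (dB) for a larger-the-better response, e.g. mAP."""
    return -10 * math.log10(sum(1 / y ** 2 for y in ys) / len(ys))


def best_levels(array, sn):
    """For each factor, return the level with the highest mean S/N ratio."""
    best = []
    for f in range(len(array[0])):
        means = {}
        for level in sorted({run[f] for run in array}):
            vals = [s for run, s in zip(array, sn) if run[f] == level]
            means[level] = sum(vals) / len(vals)
        best.append(max(means, key=means.get))
    return best
```

Usage: run one training per row of the array, compute `sn_larger_the_better` on each run's repeated measurements, and pass the list of S/N values to `best_levels` to read off the recommended level of every factor.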

Experimental results show that the mean average precision (mAP) on the Blood Cell Count and Detection (BCCD) dataset is 84.67%, which exceeds the results available in the literature. The proposed method can provide a fast and effective search strategy for optimizing hyperparameters in deep learning.
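The abstract does not state the IoU threshold or the interpolation scheme behind the reported mAP. As a minimal sketch, assuming PASCAL VOC-style all-point interpolation, average precision is the area under the precision envelope of a class's precision-recall curve, and mAP is the unweighted mean of the per-class AP values. The helper names are hypothetical.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP from a PR curve ordered by increasing recall."""
    if len(recalls) != len(precisions):
        raise ValueError("recalls and precisions must have the same length")
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the step function; terms where recall does not change are zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(per_class_ap):
    """mAP: unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)
```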