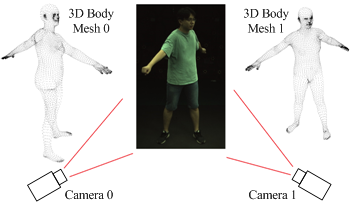

Human pose and shape estimation (HPSE) is a crucial function for human-centric applications, but the accuracy of deep learning-based monocular 3D HPSE may suffer from depth ambiguity. Multi-camera systems with wide baselines can mitigate the problem, but accurate and robust multi-camera calibration is a prerequisite. The main objective of this paper is to develop fast and accurate algorithms for automatic calibration of multi-camera systems that fully utilize human semantic information without using predetermined calibration patterns or objects. The proposed method takes from each camera the 3D human body meshes output by a pretrained Human Mesh Recovery (HMR) model and projects the vertices of each mesh onto the 2D image plane of the corresponding camera. A Structure-from-Motion (SfM) algorithm then reconstructs 3D shapes from a pair of cameras, using an iterative Random Sample Consensus (RANSAC) algorithm to remove outliers when calculating the essential matrix in each iteration. The relative camera extrinsic parameters (i.e., the rotation matrix and translation vector) are then calculated from the estimated essential matrix. Assuming that the pose of one main camera in the world coordinate system is known, the poses of all other cameras in the multi-camera system can be readily calculated. Using (1) average 2D projection error and (2) average rotation and translation errors as performance metrics, the proposed method is shown to calibrate more accurately than methods using appearance-based feature extractors, e.g., Scale-Invariant Feature Transform (SIFT), and deep learning-based 2D human joint estimators, e.g., OpenPose.
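The last step of the pipeline, placing every camera in the world frame once the main camera's pose and the pairwise extrinsics are known, can be sketched as a simple pose composition. This is a minimal illustration and not the paper's implementation: the convention `x_cam = R x_world + t`, the function name `compose_pose`, and the example values are all assumptions. Note also that a translation recovered from an essential matrix is generally defined only up to scale, so `t_rel` is assumed here to have been scaled already.

```python
# Sketch: chain a known main-camera pose with a relative pose to obtain the
# world-to-camera pose of a second camera.
# Assumed convention: x_cam = R @ x_world + t for every camera.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def matvec(a, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]


def compose_pose(R_main, t_main, R_rel, t_rel):
    """Return (R, t) of the second camera in the world frame.

    (R_main, t_main): world -> main camera.
    (R_rel, t_rel):   main camera -> second camera (hypothetical output of
                      the essential-matrix step, translation already scaled).
    Derivation: x2 = R_rel (R_main xw + t_main) + t_rel.
    """
    R = matmul(R_rel, R_main)
    rotated = matvec(R_rel, t_main)
    t = [rotated[i] + t_rel[i] for i in range(3)]
    return R, t


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
ROT_Z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

# Main camera at the world origin: the second camera's pose equals the
# relative pose itself.
R2, t2 = compose_pose(IDENTITY, [0.0, 0.0, 0.0], ROT_Z_90, [1.0, 0.0, 0.0])
```

Applying `compose_pose` once per camera pair that involves the main camera yields the pose of every other camera in the same world frame.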

The Segment Anything Model (SAM) was released as a foundation model for image segmentation. The promptable segmentation model was trained on over 1 billion masks from 11M licensed and privacy-respecting images. The model supports zero-shot image segmentation with various segmentation prompts (e.g., points, boxes, masks). This makes SAM attractive for medical image analysis, especially for digital pathology, where training data are scarce. In this study, we evaluate the zero-shot segmentation performance of SAM on representative segmentation tasks in whole slide imaging (WSI), including (1) tumor segmentation, (2) non-tumor tissue segmentation, and (3) cell nuclei segmentation. Core Results: The results suggest that the zero-shot SAM model achieves remarkable segmentation performance for large connected objects. However, it does not consistently achieve satisfactory performance for dense instance object segmentation, even with 20 prompts (clicks/boxes) on each image. We also summarize the identified limitations for digital pathology: (1) image resolution, (2) multiple scales, (3) prompt selection, and (4) model fine-tuning. In the future, few-shot fine-tuning with images from downstream pathological segmentation tasks might help the model achieve better performance in dense object segmentation.

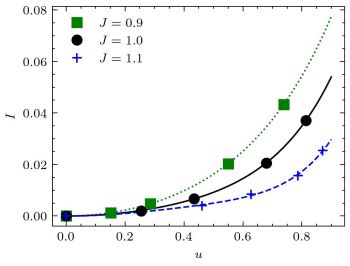

Modeling and simulation of rare events is a problem that has been largely ignored by the image processing community, but it is of great interest in many areas of science. Rare events in physical systems are responsible for many failure modes, and as such must be precisely understood. We propose a novel method for simulating and modeling rare images using asymptotically efficient importance sampling, and apply it to binary images of interest in statistical mechanics and materials science. These rare images correspond to the occurrence of rare events in systems modeled by Gibbs distributions, more commonly known as Markov random fields (MRFs) in image processing. We first give a precise definition of a rare event in terms of a rare event statistic and a rare event region. The rare event statistic of interest here is the per-site magnetization of a ferromagnet under the Ising model, but this could be replaced by many other statistics for other problems, such as boundary length in two-phase material microstructures. For the given rare event statistic, we estimate the asymptotically efficient importance sampling (AEIS) distribution, which is based on a large deviation principle (LDP); draw samples of rare binary images from this AEIS distribution; and estimate rare event probabilities for several different rare event regions. Theoretically, the AEIS distribution gives an unbiased estimator with the asymptotically lowest variance among a class of importance sampling distributions that are practically feasible for Markov chain Monte Carlo simulation. Finally, we fit large deviations rate functions from simulations using several rare event regions. This allows us to compute probability estimates associated with a given rare event statistic for any rare event region of interest without requiring further simulations.
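The idea of sampling from a tilted distribution and reweighting can be illustrated on a deliberately simplified stand-in: independent ±1 spins with no coupling, where the per-site magnetization is a sample mean and the rare-event probability has a closed form for comparison. This is only a sketch under that assumption, not the paper's Ising/MCMC procedure; the function names and parameter values are hypothetical. The proposal makes each spin +1 with probability `k_min / n`, so that the mean magnetization sits at the edge of the rare event region, which is the classical exponential-tilting choice for i.i.d. sums.

```python
import math
import random


def exact_tail(n, k_min):
    """Exact P(at least k_min of n fair +/-1 spins are +1)."""
    return sum(math.comb(n, k) for k in range(k_min, n + 1)) / 2 ** n


def tilted_is_estimate(n, k_min, num_samples, seed=0):
    """Importance sampling estimate of P(#up spins >= k_min).

    Equivalent to P(magnetization >= (2*k_min - n) / n) for fair,
    independent spins (an assumption; no Ising coupling here).
    """
    rng = random.Random(seed)
    p = k_min / n  # tilted probability of an up spin
    # Log likelihood ratio (original / proposal) contributed by each spin.
    log_w_up = math.log(0.5 / p)
    log_w_down = math.log(0.5 / (1.0 - p))
    total = 0.0
    for _ in range(num_samples):
        ups = sum(1 for _ in range(n) if rng.random() < p)
        if ups >= k_min:  # sample falls in the rare event region
            total += math.exp(ups * log_w_up + (n - ups) * log_w_down)
    return total / num_samples


n_spins, threshold = 50, 40  # magnetization >= 0.6
estimate = tilted_is_estimate(n_spins, threshold, num_samples=20000)
reference = exact_tail(n_spins, threshold)
```

Under the original distribution almost none of the 20000 samples would land in the rare event region, whereas under the tilted proposal a large fraction do, and the likelihood-ratio weights keep the estimator unbiased.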

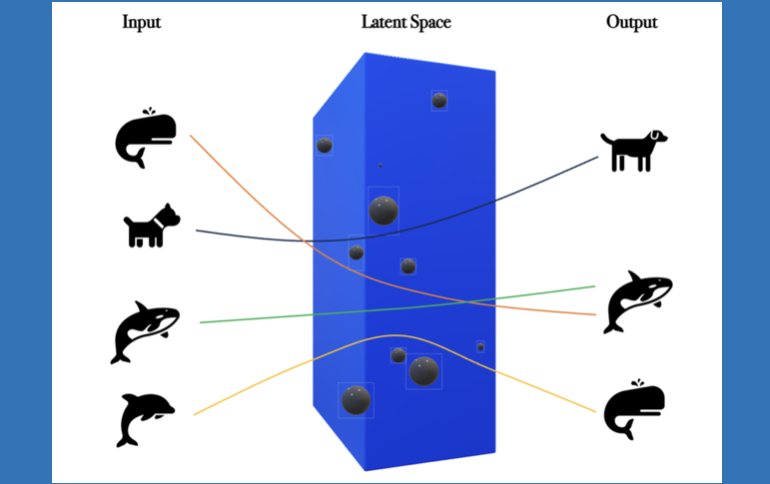

In this paper, we explore a space-time geometric view of signal representation in machine learning models. The question we are interested in is whether we can identify what causes signal representation errors: training data inadequacies, model insufficiencies, or both. Loosely expressed, this problem is stylistically similar to blind deconvolution problems. However, studies of space-time geometries might be able to partially solve this problem by considering the curvature produced by mass in (Anti-)de Sitter space. We study the effectiveness of our approach on the MNIST dataset.

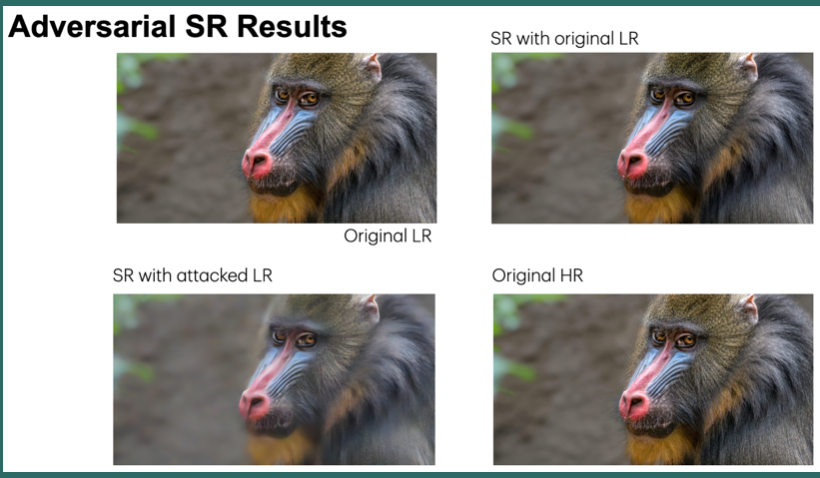

Machine learning and image enhancement models are prone to adversarial attacks, in which inputs are manipulated to cause misclassifications. While previous research has focused on techniques like Generative Adversarial Networks (GANs), there has been limited exploration of using GANs and the Synthetic Minority Oversampling Technique (SMOTE) to perform adversarial attacks on image super-resolution models and on text and image classification models. Our study addresses this gap by training various machine learning models and using GANs and SMOTE to generate additional data points aimed at attacking super-resolution and classification algorithms. We extend our investigation to face recognition models, training a Convolutional Neural Network (CNN) and subjecting it to adversarial attacks with fast gradient sign perturbations on key features identified by GradCAM, a technique used to highlight the key image characteristics that CNNs use in classification. Our experiments reveal a significant vulnerability in classification models. Specifically, we observe a 20% decrease in accuracy for the top-performing text classification models post-attack, along with a 30% decrease in facial recognition accuracy.
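A fast gradient sign perturbation restricted to selected features can be sketched on a toy model. The example below is an illustration under stated assumptions, not the study's setup: it uses a small logistic classifier instead of a CNN, and the binary `mask` is a hypothetical stand-in for the regions that GradCAM would highlight. All names and values are made up for the example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def bce_loss(w, b, x, y):
    """Binary cross-entropy loss for one example with label y in {0, 1}."""
    p = predict(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def input_gradient(w, b, x, y):
    """Gradient of the loss with respect to the input x: (p - y) * w."""
    p = predict(w, b, x)
    return [(p - y) * wi for wi in w]


def sign(v):
    return (v > 0) - (v < 0)


def masked_fgsm(w, b, x, y, epsilon, mask):
    """Step each masked feature by epsilon in the loss-increasing direction.

    mask[i] = 1 marks a feature that may be perturbed (assumed to come from
    a saliency method); mask[i] = 0 leaves the feature untouched.
    """
    grad = input_gradient(w, b, x, y)
    return [xi + epsilon * sign(gi) * mi for xi, gi, mi in zip(x, grad, mask)]


weights, bias = [2.0, -1.0, 0.5], 0.0
x_clean, label = [0.5, 0.2, 0.1], 1
x_adv = masked_fgsm(weights, bias, x_clean, label, epsilon=0.1, mask=[1, 1, 0])
loss_clean = bce_loss(weights, bias, x_clean, label)
loss_adv = bce_loss(weights, bias, x_adv, label)
```

Only the first two features move, each by at most `epsilon`, yet the loss on the true label rises, which is the mechanism that degrades accuracy when the same step is applied across a dataset.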