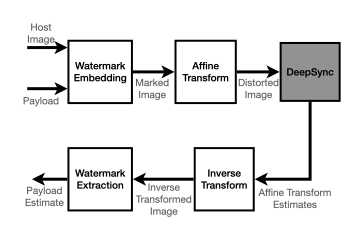

In digital watermarking of images and video, template-based techniques insert a signal template to aid recovery of the watermark after the geometric transforms (rotation, scale, translation, aspect-ratio change) common in imaging workflows. Detection approaches for such techniques often rely on known signal properties to estimate geometry before watermark extraction. In deep watermarking, i.e., watermarking that employs deep learning, the focus so far has been on extraction methods that are invariant to geometric transforms. This leaves a gap in precise geometry recovery and synchronization, which compromises watermark recovery, including recovery of the information bits, i.e., the payload. In this work, we propose DeepSync, a novel deep learning approach aimed at enhancing watermark synchronization for both template-based and deep watermarks.

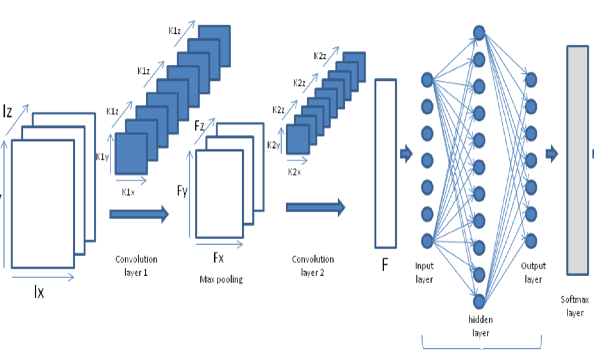

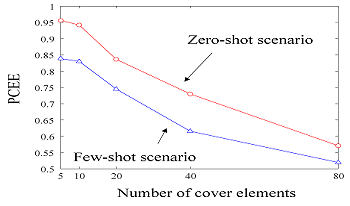

Captchas are used on many websites on the Internet to prevent automated web requests. Likewise, darknet marketplaces commonly use captchas to protect themselves against DDoS attacks and automated web scrapers. This complicates research and investigations into the content and activity of these marketplaces. In this work we focus on the darknet and provide an overview of the variety of captchas found on darknet marketplaces. We propose a workflow and recommendations for building automated captcha solvers, and present solvers based on machine learning models for the 5 different captcha types we found. Our solvers achieved accuracies between 65% and 99%, which significantly improved our ability to collect data from the corresponding marketplaces with automated web scrapers.

Deep Neural Networks (DNNs) have seen revolutionary progress in recent years. Their applications range from simple image classification to complex natural language processing systems such as ChatGPT. Training a DNN requires extensive hardware, time, and technical expertise to suit a specific application on a specific embedded processor. The trained DNN weights and network architecture are therefore intellectual property that needs to be protected from theft or abuse at the various stages of model development and deployment. There is also a need to identify theft when it does occur, so that ownership of the DNN weights can be claimed. Intellectual property (IP) protection of DNN weights has attracted increasing attention in academia and industry, and many works on the topic have been proposed. The vast majority of existing work uses naïve watermark extraction to verify ownership of the model after piracy occurs. In this paper, a novel method for protecting and identifying intellectual property related to DNN weights is presented. Our method inserts digital watermarks at learned least significant bits of the weights for identification purposes, and uses a hardware eFuse to restrict use of the watermarked weights to the intended embedded processor.
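The idea of carrying a watermark in the least significant bits of the weights can be illustrated with a minimal sketch. It is not the method of the paper: the abstract speaks of learned bit positions, whereas this example simply assumes the lowest mantissa bit of the first few float32 weights, and the function names `embed_watermark` and `extract_watermark` are hypothetical. The eFuse-based usage control is not modeled.

```python
import struct

def float_to_bits(x: float) -> int:
    """Return the IEEE-754 float32 bit pattern of x as an unsigned 32-bit int."""
    return struct.unpack("<I", struct.pack("<f", x))[0]

def bits_to_float(pattern: int) -> float:
    """Inverse of float_to_bits: interpret a 32-bit pattern as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", pattern))[0]

def embed_watermark(weights, bits):
    """Overwrite the lowest mantissa bit of the first len(bits) weights.

    Assumption: fixed positions (the first weights, lowest bit). The paper
    describes learned positions, which this sketch does not attempt.
    """
    if len(bits) > len(weights):
        raise ValueError("watermark is longer than the weight list")
    marked = list(weights)
    for i, bit in enumerate(bits):
        pattern = float_to_bits(marked[i])
        pattern = (pattern & 0xFFFFFFFE) | (bit & 1)  # clear the LSB, then set it
        marked[i] = bits_to_float(pattern)
    return marked

def extract_watermark(weights, n_bits):
    """Read back the lowest mantissa bit of the first n_bits weights."""
    return [float_to_bits(w) & 1 for w in weights[:n_bits]]

# Toy weights and watermark, chosen only for illustration.
weights = [0.12345, -0.98765, 0.5, 1.75, -0.001, 3.14159]
watermark = [1, 0, 1, 1, 0]

marked = embed_watermark(weights, watermark)
recovered = extract_watermark(marked, len(watermark))
max_change = max(abs(a - b) for a, b in zip(weights, marked))
```

Flipping the lowest mantissa bit changes each marked weight by at most one float32 unit in the last place, which is why this kind of embedding is expected to have little effect on model accuracy. It is also fragile: any fine-tuning or re-quantization of the weights would likely erase it.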

With the advances made in artificial intelligence (AI) in recent years, creating facial forgeries in images and videos has become more accessible. In particular, face-swapping deepfakes allow for convincing manipulations in which a person's facial texture is replaced with an arbitrary facial texture with the help of AI. Since such face-swapping manipulations are now commonly used to create and spread fake news and to impersonate people for defamation and fraud, it is of great importance to distinguish between authentic and manipulated content. Several methods have been proposed to detect deepfakes. At the same time, new synthesis methods have been introduced. In this work, we analyze whether current state-of-the-art detection methods can detect modern deepfake methods that were not part of the training set. The experiments show that while many current detection methods are robust to common post-processing operations, they most often do not generalize well to unseen data.

A new algorithm for the detection of deepfakes in digital videos is presented. Only the I-frames are extracted, which allows faster computation and analysis than approaches described in the literature. To identify the discriminating regions within individual video frames, the entire frame, background, face, eyes, nose, mouth, and face frame are analyzed separately. From the Discrete Cosine Transform (DCT), the β components are extracted from the AC coefficients and used as input to standard classifiers. Experimental results show that the eye and mouth regions are the most discriminative and are able to determine the nature of the video under analysis.
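A feature of this kind can be sketched as follows. The abstract does not define the β components, so this example assumes a common reading: each AC frequency of an 8×8 block DCT is modeled as a zero-mean Laplacian, and β is its scale, estimated by the mean absolute coefficient value across blocks. The block size, the function names, and the toy images are all assumptions made for illustration.

```python
import math

N = 8  # block size; assumed here, as in JPEG-style block DCT

def dct2(block):
    """Orthonormal 2-D DCT-II of an N x N block given as a list of lists."""
    def alpha(k):
        return math.sqrt(1.0 / N) if k == 0 else math.sqrt(2.0 / N)

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = 0.0
            for x in range(N):
                for y in range(N):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            out[u][v] = alpha(u) * alpha(v) * s
    return out

def beta_features(gray):
    """Return 63 values, one Laplacian scale estimate per AC frequency.

    Assumption: beta is the maximum-likelihood scale of a zero-mean
    Laplacian, i.e. the mean absolute coefficient over all blocks.
    """
    height, width = len(gray), len(gray[0])
    sums = [[0.0] * N for _ in range(N)]
    n_blocks = 0
    for r in range(0, height - N + 1, N):
        for c in range(0, width - N + 1, N):
            block = [row[c:c + N] for row in gray[r:r + N]]
            coeffs = dct2(block)
            for u in range(N):
                for v in range(N):
                    sums[u][v] += abs(coeffs[u][v])
            n_blocks += 1
    if n_blocks == 0:
        raise ValueError("image is smaller than one block")
    # Skip (0, 0), the DC coefficient; keep the 63 AC frequencies.
    return [sums[u][v] / n_blocks
            for u in range(N) for v in range(N) if (u, v) != (0, 0)]

# Toy 16 x 16 grayscale regions: one flat, one with a diagonal gradient.
flat = [[128.0] * 16 for _ in range(16)]
gradient = [[float((r * 7 + c * 13) % 256) for c in range(16)] for r in range(16)]

flat_betas = beta_features(flat)
gradient_betas = beta_features(gradient)
```

In a pipeline like the one described, such a 63-dimensional vector would be computed per region (eyes, mouth, and so on) of each I-frame and passed to a standard classifier. Region cropping and I-frame extraction are outside the scope of this sketch.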

The impressive rise of Deep Learning and, more specifically, the discovery of generative adversarial networks have revolutionised the world of deepfakes. Forgeries are becoming more and more realistic, and consequently harder to detect. Attesting whether video content is authentic is an increasingly sensitive matter. Furthermore, free access to forgery technologies is growing dramatically, which is a cause for concern. Numerous methods have been proposed to detect these deepfakes, and it is difficult to know which of them remain accurate in light of recent advances. Therefore, an approach for face-swapping detection in videos based on residual signal analysis is presented in this paper.

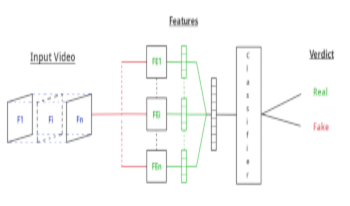

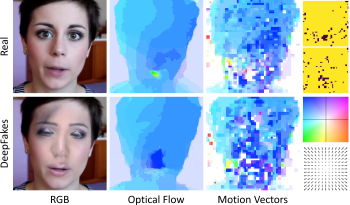

Video DeepFakes are fake media created with Deep Learning (DL) that manipulate a person’s expression or identity. Most current DeepFake detection methods analyze each frame independently, ignoring inconsistencies and unnatural movements between frames. Some newer methods employ optical flow models to capture this temporal aspect, but they are computationally expensive. In contrast, we propose using the related but often ignored Motion Vectors (MVs) and Information Masks (IMs) from the H.264 video codec to detect temporal inconsistencies in DeepFakes. Our experiments show that this approach is effective and adds minimal computational cost compared with per-frame RGB-only methods. This could lead to new, real-time, temporally aware DeepFake detection methods for video calls and streaming.

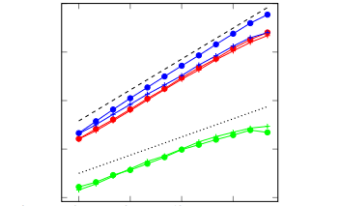

In this article, we study the properties of quantitative steganography detectors (estimators of the payload size) for content-adaptive steganography. In contrast to non-adaptive embedding, the estimator's bias as well as variance strongly depend on the true payload size. Initially, and depending on the image content, the estimator may not react to embedding. With increased payload size, it starts responding as the embedding changes begin to "spill" into regions where their detection is more reliable. We quantify this behavior with the concepts of reactive and estimable payloads. To better understand how the payload estimate and its bias depend on image content, we study a maximum likelihood estimator derived for the MiPOD model of the cover image. This model correctly predicts trends observed in outputs of a state-of-the-art deep learning payload regressor. Moreover, we use the model to demonstrate that the cover bias can be caused by a small number of "outlier" pixels in the cover image. This is also confirmed for the deep learning regressor on a dataset of artificial images via attribution maps.

Assuming that Alice commits to an embedding method and the Warden to a detector, we study how much information Alice can communicate at a constant level of statistical detectability over potentially infinitely many uses of the stego channel. When Alice is allowed to allocate her payload across multiple cover objects, we find that certain payload allocation strategies informed by a steganography detector exhibit a super-square-root secure payload (scaling exponent 0.85) for at least tens of thousands of uses of the stego channel. We analyze our experiments with a source model of the detector's soft outputs across images and show how the model determines the scaling of the secure payload.
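A scaling exponent of this kind is typically read off a log-log plot: if the secure payload grows as P(n) ≈ c·n^α with the number of channel uses n, then α is the slope of log P against log n, with α = 0.5 corresponding to the square root law. The sketch below fits α by ordinary least squares. The data are synthetic, generated from an exact power law purely to check the fit; they are not results from the paper, and the function name `fit_scaling_exponent` and the constant 2.0 are hypothetical.

```python
import math

def fit_scaling_exponent(uses, payloads):
    """Least-squares fit of log(payload) = log(c) + alpha * log(uses).

    Returns (alpha, c). Both inputs must contain positive numbers.
    """
    xs = [math.log(n) for n in uses]
    ys = [math.log(p) for p in payloads]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    alpha = sxy / sxx
    c = math.exp(mean_y - alpha * mean_x)
    return alpha, c

# Synthetic payloads following exact power laws (illustration only).
uses = [10, 100, 1000, 10000]
super_sqrt = [2.0 * n ** 0.85 for n in uses]   # exponent quoted in the abstract
square_root = [2.0 * n ** 0.5 for n in uses]   # classical square root law

alpha_super, c_super = fit_scaling_exponent(uses, super_sqrt)
alpha_sqrt, c_sqrt = fit_scaling_exponent(uses, square_root)
```

On real measurements the points would scatter around the line, and the fitted slope would only describe the range of n actually tested, which matches the abstract's qualification "for at least tens of thousands of uses".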

Recent studies show that scaling pre-trained language models can significantly improve model capacity on downstream tasks, giving rise to a new research direction called large language models (LLMs). A remarkable application of LLMs is ChatGPT, a powerful large language model capable of generating human-like text based on context and past conversations. LLMs have been shown to have impressive reasoning skills, especially when prompting strategies are used. In this paper, we explore the possibility of applying LLMs to steganography, the art of hiding secret data in an innocent cover for covert communication. Our purpose is not to integrate an LLM into an already designed steganographic system to boost its performance, which would follow the conventional framework of steganography. Instead, we expect that, through prompting, an LLM can realize steganography by itself. We call this prompting steganography, and it may be a new paradigm of steganography. We show that, by reasoning, an LLM can embed secret data into a cover and extract secret data from a stego object, albeit with a nonzero error rate. This error rate can be reduced by optimizing the prompt, which may shed light on further research.