This manuscript provides an overview of the 35th annual Stereoscopic Displays and Applications conference and an introduction to its proceedings.

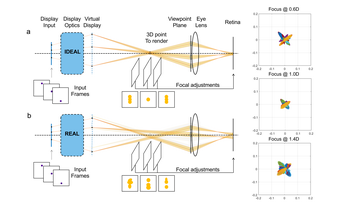

Aberrations in the optical system of a light field (LF) display can degrade its quality and affect the focusing effects in the retinal image, formed by the superposition of multiperspective LF views. To address this problem, we propose a method for calibrating and correcting aberrated LF displays. We use an optical model of the LF display to derive how the retinal image is formed for a given LF input. This enables us to efficiently measure the actual viewpoint locations and the deformation of each perspective image by capturing focal-stack images during calibration. We then use the calibration coefficients to pre-warp the input images so that the aberrations are compensated. We demonstrate the effectiveness of our method on a simulated model of an aberrated near-eye LF display and show that it improves the display's optical quality and the accuracy of the focusing effects.

Stereoscopic 3D remote vision system (sRVS) design can be challenging. The components often interact such that changing one parameter will cause unintended distortions in the perceptual image space. For example, increasing camera convergence to reduce vergence-accommodation mismatch will have the unintended effect of increasing depth compression. In this study, we investigated the trade-offs between changes in two parameters: viewing distance and camera toe-in. Participants used a simulated telerobotic arm to complete a precision depth matching task in an sRVS environment. Both a comfort questionnaire (subjective) and eye-tracking metrics (objective) were used as indicators of visual stress. The closer viewing distance increased both depth matching performance and objective measures of visual stress, demonstrating the inherent trade-offs associated with many sRVS design variables. The camera toe-in had no effect on either user performance or comfort. While these results suggest that small amounts of camera toe-in may be more tolerable than larger manipulations of viewing distance, the consequences of both should be carefully considered when designing an sRVS.
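
The relationship between viewing distance and vergence-accommodation mismatch mentioned above can be illustrated with basic geometry. The following is a minimal sketch under stated assumptions, not the analysis used in the study: the function names, the 63 mm interpupillary distance, and the example distances are all hypothetical, and the mismatch is taken as the difference between the accommodation demand of the screen and the vergence demand of the fixated point, both in diopters.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead.

    ipd_m is an assumed interpupillary distance (63 mm), not a value from the study.
    """
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def va_mismatch_diopters(screen_distance_m, vergence_distance_m):
    """Absolute difference between accommodation demand (screen plane)
    and vergence demand (simulated fixation point), in diopters."""
    return abs(1.0 / screen_distance_m - 1.0 / vergence_distance_m)

if __name__ == "__main__":
    # Illustrative distances only: the same simulated target seen from two screen distances.
    for screen in (1.0, 0.5):
        print(screen, vergence_angle_deg(screen), va_mismatch_diopters(screen, 0.4))
```

In this simplified model, both demands are reciprocals of distance, so a change of a few centimetres matters far more at near viewing distances than at far ones.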

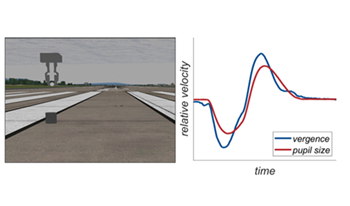

During natural viewing, the oculomotor system interacts with depth information through a tightly coupled linkage between convergence, accommodation, and pupil miosis known as the near response. When natural viewing breaks down, such as when depth distortions and cue conflicts are introduced in a stereoscopic remote vision system (sRVS), the individual elements of the near response may decouple (e.g., vergence-accommodation, or VA, mismatch), limiting the comfort and usability of the sRVS. Alternatively, in certain circumstances the near response may become more tightly linked, potentially to preserve image quality in the presence of significant distortion. In this experiment, we measured two elements of the near response (vergence posture and pupil size) of participants using an sRVS. We manipulated the degree of depth distortion by changing the viewing distance, creating a perceptual compression of the image space, and increasing the VA mismatch. We found a strong positive cross-correlation of vergence posture and pupil size in all participants in both conditions. The response was significantly stronger and quicker in the near viewing condition, which may represent a physiological response to maintain image quality and increase the depth of focus through pupil miosis.
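
As a rough illustration of what a cross-correlation between two eye-tracking signals measures, the sketch below computes a Pearson correlation at a range of sample lags and reports the lag with the strongest positive correlation. It is a generic sketch, not the authors' analysis pipeline: the function names, the sign convention for lag, and the synthetic sine-wave signals are assumptions made for illustration.

```python
import math

def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)

def lagged_correlation(x, y, max_lag):
    """Correlation at each lag k, pairing x[t] with y[t + k].

    A positive k means y follows x by k samples. x and y must have equal length.
    """
    result = {}
    for k in range(-max_lag, max_lag + 1):
        if k >= 0:
            a, b = x[:len(x) - k], y[k:]
        else:
            a, b = x[-k:], y[:len(y) + k]
        result[k] = pearson(a, b)
    return result

def peak_lag(x, y, max_lag):
    """Return (lag, correlation) at the strongest positive correlation."""
    corr = lagged_correlation(x, y, max_lag)
    best = max(corr, key=lambda k: corr[k])
    return best, corr[best]

if __name__ == "__main__":
    # Synthetic stand-ins for vergence and pupil traces: y is x delayed by 5 samples.
    vergence = [math.sin(0.3 * t) for t in range(200)]
    pupil = [math.sin(0.3 * (t - 5)) for t in range(200)]
    print(peak_lag(vergence, pupil, max_lag=10))
```

With real recordings, the peak correlation describes how strongly the two signals are coupled, and the lag at the peak, divided by the sampling rate, describes how quickly one follows the other.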

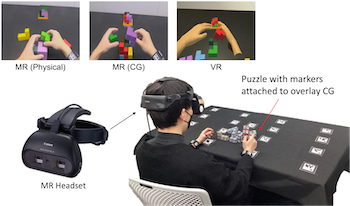

This study evaluates user experiences in Virtual Reality (VR) and Mixed Reality (MR) systems during task-based interactions. Three experimental conditions were examined: MR (Physical), MR (CG), and VR. Subjective and objective indices were used to assess user performance and experience. The results demonstrated significant differences among conditions, with MR (Physical) consistently outperforming MR (CG) and VR in various aspects. Eye-tracking data revealed that users spent less time observing physical objects in the MR (Physical) condition, primarily relying on virtual objects for task guidance. Conversely, in conditions with a higher proportion of CG content, users spent more time observing objects but reported increased discomfort due to delays. These findings suggest that the ratio of virtual to physical objects significantly impacts user experience and performance. This research provides valuable insights into improving user experiences in VR and MR systems, with potential applications across various domains.

Background: The current gold standard for clinical training relies on actual linear accelerator (LINAC) machines, recordings of actual procedures, video clips, and word of mouth. These training practices lack immersion, convenience, and flexibility; they are not always interactive and may not represent actual use-case scenarios. An immersive experience of interacting with LINAC machines in a safe and controlled virtual environment is therefore highly desirable. Real-world radiation treatment training often requires a radiation bunker and actual equipment that are not always accessible in the clinical setting. Oncologists' time is extremely precious, and radiation bunkers are hard to come by because of heavy demand in specialty clinics. Moreover, the scarcity of LINACs, owing to their multi-million-dollar capital cost, makes accessibility even worse. To address these logistical, economic, and resource issues, virtual reality (VR) radiation treatment training offers a solution that could also improve clinical outcomes by preparing oncologists in a virtual environment anywhere and anytime. We introduce a VR space in which radiation trainees can practice and refine their radiation treatment skills anywhere and anytime, and which also serves as an immersive space for collaborative learning and knowledge sharing.
Methods: Trainees were invited to the VR space, completed a pre-assessment questionnaire, were guided through a series of videos and digital content, and then completed a randomized post-assessment questionnaire.
Findings: Trainees (n=32) reported significant improvement in their learning. A number of trainees were new to both VR technology and radiation oncology. Future work could compare this approach with traditional training methods.
Interpretation: Our research revealed that incorporating virtual reality (VR) as a tool for elucidating Radiation Oncology concepts resulted in an immediate and notable enhancement in trainees' proficiency. Moreover, those who were educated through VR demonstrated a more profound understanding of Radiation Oncology, including a wider array of potential treatment strategies, all within a user-friendly setting.

In August 2023, a series of co-design workshops was run in a collaboration between Kyushu University (Fukuoka, Japan), the Royal College of Art (London, UK), and Imperial College London (London, UK). In these workshops, participants were asked to create a number of drawings visualising avian-human interaction scenarios. Each set of drawings depicted a specific interaction that the participant had had with an avian species in three different contexts: the interaction from the participant's perspective, the interaction from the bird's perspective, and how the participant hopes the interaction will be embodied in 50 years' time. The main purpose of this exercise was to co-imagine a utopian future with more positive interspecies relations between humans and birds. Based on these drawings, we have created a number of visualisations presenting those perspectives in Virtual Reality. This development allows viewers to visualise participants' perspective shifts through the subject matter depicted in their workshop drawings, allowing for the observation of the relationship between humans and non-humans (here: avian species). This research tests the hypothesis that participants perceive Virtual Reality as furthering their feelings of immersion relating to the workshop topic of human-avian relationships. This demonstrates the potential of XR technologies as a medium for building empathy towards non-human species, providing foundational justification for this body of work to progress into employing XR as a medium for perspective shifts.

This paper is a hypothetical investigation with tongue firmly in cheek - prompted by the discovery of a plastic collectible Toy Story Alien toy depicted wearing anaglyph glasses. The Toy Story Alien has three eyes and was first introduced in the 1995 Pixar movie Toy Story. Would it be possible for three-eyed aliens to see in 3D using red-cyan anaglyph glasses? Probably not, for reasons described in the presentation, but a possible solution is provided. There are real-world examples of anaglyph glasses being fitted to praying mantises and cuttlefish to investigate 3D vision, so why not aliens? The presentation also discusses options for other types of three-lens 3D glasses, such as passive polarised and active 3D glasses. Are there any useful insights from this investigation? It's an interesting thought experiment, so we'll let you decide!