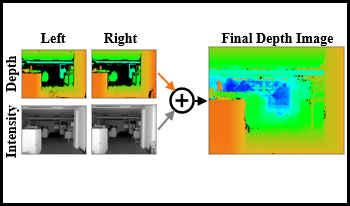

Solid-state lidar cameras produce 3D images, useful in applications such as robotics and self-driving vehicles. However, range is limited by the lidar laser power, and features such as perpendicular surfaces and dark objects pose difficulties. We propose the use of intensity images, inherent in lidar camera data from the total laser and ambient light collected in each pixel, to extract additional depth information and boost ranging performance. Using a pair of off-the-shelf lidar cameras and a conventional stereo depth algorithm to process the intensity images, we demonstrate an increase of the native lidar maximum depth range by 2× in an indoor environment and almost 10× outdoors. Depth information is also extracted from features in the environment, such as dark objects, floors, and ceilings, which are otherwise not detected by the lidar sensor. While the specific technique presented is useful in applications involving multiple lidar cameras, the principle of extracting depth data from lidar camera intensity images could also be extended to standalone lidar cameras using monocular depth techniques.
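As a rough illustration of how a conventional stereo algorithm turns matched intensity images into range, the sketch below applies the standard rectified-stereo relation Z = f·B/d. It is not the authors' pipeline: the function name, the focal length, and the baseline are hypothetical values chosen for illustration only.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Convert a disparity (pixels) to depth (metres) for a rectified stereo pair.

    Uses the standard pinhole relation Z = f * B / d.
    """
    if disparity_px <= 0:
        # Zero (or invalid) disparity corresponds to a point at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical setup: 600 px focal length, 0.25 m between the two cameras.
FOCAL_LENGTH_PX = 600.0
BASELINE_M = 0.25

# Smaller disparities map to larger depths, so range is bounded by the
# smallest disparity the matcher can resolve.
depths_m = [
    disparity_to_depth(d, FOCAL_LENGTH_PX, BASELINE_M) for d in (30.0, 15.0, 5.0)
]
```

Because depth is inversely proportional to disparity, widening the baseline or improving sub-pixel matching extends the usable range under these assumptions.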

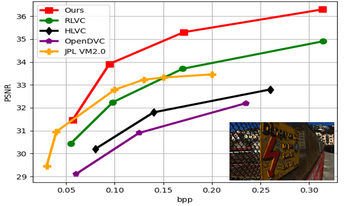

In recent years, several deep learning-based architectures have been proposed to compress Light Field (LF) images as pseudo video sequences. However, most of these techniques employ conventional compression-focused networks. In this paper, we introduce a version of a previously designed deep learning video compression network, adapted and optimized specifically for LF image compression. We enhance this network by incorporating an in-loop filtering block, along with additional adjustments and fine-tuning. By treating LF images as pseudo video sequences and deploying our adapted network, we manage to address challenges presented by the unique features of LF images, such as high resolution and large data sizes. Our method compresses these images competently, preserving their quality and unique characteristics. With the thorough fine-tuning and inclusion of the in-loop filtering network, our approach shows improved performance in terms of Peak Signal-to-Noise Ratio (PSNR) and Mean Structural Similarity Index Measure (MSSIM) when compared to other existing techniques. Our method provides a feasible path for LF image compression and may contribute to the emergence of new applications and advancements in this field.
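For readers unfamiliar with the first quality metric named above, the following is a minimal sketch of PSNR over two equally sized lists of pixel values. It assumes 8-bit samples (peak value 255) and is not taken from the authors' evaluation code; the function name and arguments are illustrative.

```python
import math


def psnr(reference, distorted, peak=255.0):
    """Peak Signal-to-Noise Ratio in dB between two flat pixel sequences."""
    if len(reference) != len(distorted) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between the reference and the decoded samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)
```

For a light field treated as a pseudo video sequence, one common convention (assumed here, not stated in the abstract) is to compute this value per view and average over all views.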

Neural Radiance Fields (NeRF) have attracted particular attention due to their exceptional capability in virtual view generation from a sparse set of input images. However, their scope is constrained by the large number of images required for training. This work introduces a data augmentation methodology to train NeRF using external depth information. The approach generates new virtual images at different positions using MPEG's reference view synthesizer (RVS) to augment the training image pool for NeRF. Results demonstrate a substantial improvement in output quality when the generated views are used, compared with training without them.

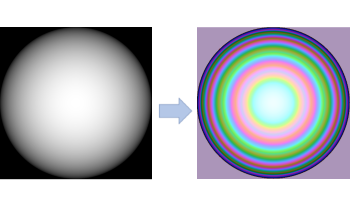

Recent advancements in 3D data capture have enabled the real-time acquisition of high-resolution 3D range data, even in mobile devices. However, this type of high bit-depth data remains difficult to efficiently transmit over a standard broadband connection. The most successful techniques for tackling this data problem thus far have been image-based depth encoding schemes that leverage modern image and video codecs. To our knowledge, no published work has directly optimized the end-to-end losses of a depth encoding scheme passing through a lossy image compression codec. In contrast, our compression-resilient neural depth encoding method leverages deep learning to efficiently encode depth maps into 24-bit RGB representations that minimize end-to-end depth reconstruction errors when compressed with JPEG. Our approach employs a fully differentiable pipeline, including a differentiable approximation of JPEG, allowing it to be trained end-to-end on the FlyingThings3D dataset with randomized JPEG qualities. On a Microsoft Azure Kinect depth recording, the neural depth encoding method was able to significantly outperform an existing state-of-the-art depth encoding method in terms of both root-mean-square error (RMSE) and mean absolute error (MAE) in a wide range of image qualities, all with over 20% lower average file sizes. Our method offers an efficient solution for emerging 3D streaming and 3D telepresence applications, enabling high-quality 3D depth data storage and transmission.
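The two error metrics reported above can be sketched as follows. This is a minimal illustration over flat lists of depth values (for example, in millimetres), not the authors' evaluation code; the function names are hypothetical, and any masking of invalid depth pixels is left out.

```python
import math


def rmse(reference, reconstructed):
    """Root-mean-square error between two equally sized depth sequences."""
    if len(reference) != len(reconstructed) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(r - d) ** 2 for r, d in zip(reference, reconstructed)]
    return math.sqrt(sum(squared) / len(squared))


def mae(reference, reconstructed):
    """Mean absolute error between two equally sized depth sequences."""
    if len(reference) != len(reconstructed) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    absolute = [abs(r - d) for r, d in zip(reference, reconstructed)]
    return sum(absolute) / len(absolute)
```

RMSE penalizes large outliers more heavily than MAE, so reporting both gives a fuller picture of how compression artifacts affect the decoded depth.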