Quantification of image sensor signal and noise is essential for deriving key image quality performance indicators. Image sensors in automotive cameras predominantly operate in high dynamic range (HDR) mode; however, legacy procedures to quantify image sensor noise were optimized for operation in standard dynamic range (SDR) mode. This work discusses the theoretical background and the workflow of the photon-transfer curve (PTC) test. It then presents example implementations of the PTC test and its derivatives, both according to legacy procedures and according to procedures optimized for image sensors in HDR mode.
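
The core of a legacy PTC analysis can be sketched as the mean-variance method below. This is a minimal illustration in the style of EMVA 1288, which is an assumption because the abstract does not name a standard. For each illumination level, two frames are differenced so that fixed-pattern noise cancels. The dark-corrected temporal variance is then regressed against the dark-corrected mean, and the slope gives the conversion gain K in DN per electron. The simulated sensor (`capture`, `K_TRUE`, `OFFSET_DN`, `READ_NOISE_E`) is hypothetical. The fit assumes a single linear response, as in SDR operation. HDR modes with multiple exposures or companded output generally break that single-slope assumption.

```python
import numpy as np


def photon_transfer_curve(flat_pairs, dark_pair):
    """Dark-corrected mean signal and temporal variance (both in DN) per illumination level.

    flat_pairs: list of (frame_a, frame_b) captured at the same illumination.
    dark_pair:  (dark_a, dark_b) captured with no light.
    """
    dark_a, dark_b = (f.astype(float) for f in dark_pair)
    dark_mean = 0.5 * (dark_a.mean() + dark_b.mean())
    # Differencing two frames cancels fixed-pattern noise; dividing by 2 gives per-frame variance.
    dark_var = np.var(dark_a - dark_b) / 2.0
    means, variances = [], []
    for a, b in flat_pairs:
        a, b = a.astype(float), b.astype(float)
        means.append(0.5 * (a.mean() + b.mean()) - dark_mean)
        variances.append(np.var(a - b) / 2.0 - dark_var)
    return np.array(means), np.array(variances)


def conversion_gain(means, variances):
    """Least-squares slope through the origin of variance vs. mean, i.e. K in DN/e-."""
    return float(np.sum(means * variances) / np.sum(means ** 2))


# Simulated linear (SDR-like) sensor; all parameters below are assumptions for illustration.
rng = np.random.default_rng(0)
K_TRUE, OFFSET_DN, READ_NOISE_E = 0.5, 64.0, 3.0


def capture(mean_electrons, shape=(256, 256)):
    """Hypothetical frame: Poisson shot noise plus Gaussian read noise, scaled by K and offset."""
    electrons = rng.poisson(mean_electrons, shape) + rng.normal(0.0, READ_NOISE_E, shape)
    return OFFSET_DN + K_TRUE * electrons


levels = [200, 500, 1000, 2000, 4000]  # mean electrons per pixel at each illumination step
flat_pairs = [(capture(n), capture(n)) for n in levels]
dark_pair = (capture(0), capture(0))
means, variances = photon_transfer_curve(flat_pairs, dark_pair)
K_est = conversion_gain(means, variances)
print(f"estimated conversion gain: {K_est:.3f} DN/e-")
```

In the shot-noise-limited region the variance in DN equals K times the mean in DN, so the estimate lands close to the simulated 0.5 DN/e-.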

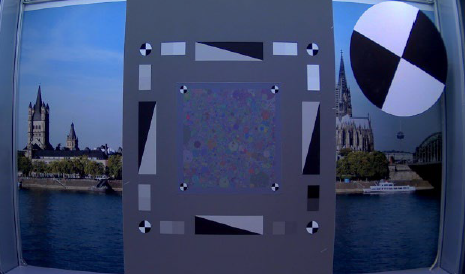

Noise Equivalent Quanta (NEQ) is an objective Fourier metric that evaluates the performance of an imaging system by describing the effective equivalent quanta of an exposure as a function of spatial frequency. Calculated from the modulation transfer function (MTF) and the noise power spectrum (NPS), it is a valuable precursor for ranking the detection capabilities of systems. It is also a fundamental metric that combines the sharpness and noise performance of an imaging system into a single curve in a physically meaningful way. The dead leaves measurement technique can estimate both the MTF and the NPS of an imaging system from a single target, and is therefore a potentially convenient method for assessing NEQ. This work validates the use of the dead leaves technique to measure NEQ, first through simulation of an imaging system with known MTF and NPS, and then through measurement of camera systems, both in the RAW domain and post-ISP. The dead leaves approach is shown to be a highly effective and practical method for estimating NEQ and for ranking the performance of imaging systems both pre- and post-ISP.
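
The way NEQ combines MTF and NPS can be illustrated with the usual relation NEQ(u) = MTF(u)² / NNPS(u), where NNPS(u) = NPS(u) / (mean signal)². This is a minimal sketch that assumes a linear signal, as in RAW data. It does not show how the dead leaves technique estimates the MTF and NPS, and the Gaussian MTF and photon counts are hypothetical. For an ideal photon-limited system with unit MTF, NEQ equals the incident quanta per unit area. This is the sense in which NEQ counts "effective" quanta.

```python
import numpy as np


def neq(mtf, nps, mean_signal):
    """NEQ(u) = MTF(u)**2 / NNPS(u), with NNPS(u) = NPS(u) / mean_signal**2.

    Assumes a linear signal (e.g. RAW data) with NPS in units of signal**2 * area.
    """
    mtf = np.asarray(mtf, dtype=float)
    nnps = np.asarray(nps, dtype=float) / mean_signal ** 2
    return mtf ** 2 / nnps


# Hypothetical ideal photon-counting system: q quanta per unit-area pixel, white Poisson noise.
q = 1000.0
freqs = np.linspace(0.0, 0.5, 6)       # cycles/pixel
mtf = np.exp(-(freqs / 0.3) ** 2)      # assumed Gaussian MTF
nps = np.full_like(freqs, q)           # Poisson variance q times pixel area 1
neq_curve = neq(mtf, nps, mean_signal=q)
print(neq_curve)                       # NEQ(0) equals q: every incident quantum "counts"
```

Any loss of sharpness (lower MTF) or added noise (higher NNPS) reduces NEQ at that frequency. This lets two systems be ranked on one curve.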

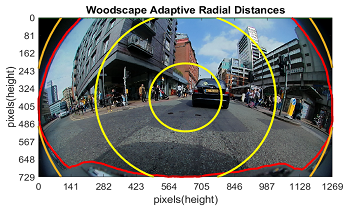

The Modulation Transfer Function (MTF) is an important image quality metric commonly used in the automotive domain. However, although optical quality affects the performance of computer vision in vehicle automation, this metric is unknown for many public datasets. Additionally, wide field-of-view (FOV) cameras have become increasingly popular, particularly for low-speed vehicle automation applications. To investigate image quality in such datasets, this paper proposes an adaptation of the Natural Scenes Spatial Frequency Response (NS-SFR) algorithm for cameras with a wide field of view.
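
Edge-based SFR methods, including NS-SFR, which works on edges found in natural scenes, share a common core step. An edge profile is differentiated into a line spread function, and its Fourier magnitude gives the SFR. The sketch below shows only that step on a synthetic Gaussian-blurred edge (sigma = 1 pixel is an assumption). It does not reproduce the NS-SFR edge selection or the wide-FOV adaptation proposed here. ISO 12233 slanted-edge implementations also oversample the edge and correct for the finite-difference derivative, and those steps are omitted.

```python
from math import erf

import numpy as np


def sfr_from_esf(esf):
    """Estimate MTF from a 1D edge spread function (ESF) sampled at unit spacing."""
    lsf = np.diff(np.asarray(esf, dtype=float))  # ESF derivative -> line spread function
    lsf = lsf * np.hamming(lsf.size)             # window the tails to limit noise leakage
    spectrum = np.abs(np.fft.rfft(lsf))
    freqs = np.fft.rfftfreq(lsf.size)            # cycles/pixel
    return freqs, spectrum / spectrum[0]         # normalize so MTF(0) = 1


# Synthetic edge blurred by a Gaussian PSF with sigma = 1 pixel (assumed).
sigma = 1.0
esf = np.array([0.5 * (1.0 + erf(x / (sigma * np.sqrt(2.0)))) for x in range(-32, 33)])
freqs, mtf = sfr_from_esf(esf)
```

Without the derivative correction, the result at 0.25 cycles/pixel sits somewhat below the ideal Gaussian value of exp(-2π²σ²f²) ≈ 0.29.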

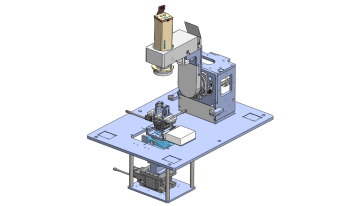

Vehicle-borne cameras vary greatly in imaging properties (e.g., angle of view, working distance, and pixel count) to meet the diverse requirements of different applications. In addition, automotive parts must tolerate dramatic variations in ambient temperature. These factors pose considerable challenges for the automotive industry when evaluating the imaging performance of automotive cameras. This paper describes an integrated, fully automated system developed specifically to address these issues. Its key components are a collimator unit incorporating an LED light source and a transmissive test target, a mechanical structure that holds and moves the collimator and the camera under test, and a software suite that communicates with the controllers and processes the images captured by the camera. With this multifunctional system, the imaging performance of cameras can be conveniently measured with a high degree of accuracy, precision, and compatibility. The results are consistent with those obtained from tests conducted with conventional methods. Preliminary results demonstrate the functionality and flexibility of the system, and its potential with continued development.

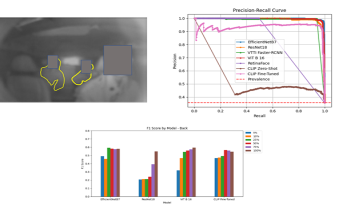

Multi-modal pedestrian detection has been actively researched in recent years. Pedestrian detection using both visible and thermal modalities outperforms visible-only detection and is more robust to lighting effects and cluttered backgrounds, because it can simultaneously exploit complementary information from visible and thermal frames. However, many existing multi-modal pedestrian detection algorithms assume that image pairs are perfectly aligned across the two modalities, and their detection performance often degrades under misalignment. This paper proposes a multi-modal pedestrian detection network for a one-stage detector. The network is enhanced by a dual-regressor and by a new algorithm for learning from multi-modal data, called object-based training. This study focuses on the Single Shot MultiBox Detector (SSD), one of the most common one-stage detectors. Experiments demonstrate that the proposed method outperforms current state-of-the-art methods on artificial data with large misalignment. It is also comparable or superior to existing methods on existing aligned datasets.
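
A small calculation shows why misalignment hurts a detector that regresses a single box shared by both modalities. If the pedestrian is shifted horizontally in the thermal frame, the intersection over union (IoU) between the visible and thermal boxes drops quickly. The 40 × 100 px box and the shift values are hypothetical. This is only a motivating illustration, not the paper's dual-regressor or its object-based training.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


# Hypothetical 40 x 100 px pedestrian box in the visible frame, shifted horizontally in thermal.
visible_box = (100.0, 50.0, 140.0, 150.0)
for shift in (0, 5, 10, 20):
    thermal_box = (100.0 + shift, 50.0, 140.0 + shift, 150.0)
    print(shift, round(iou(visible_box, thermal_box), 3))
# 0 1.0
# 5 0.778
# 10 0.6
# 20 0.333
```

At a 20 px shift the IoU falls below the common 0.5 matching threshold. A single box cannot then be a good match in both modalities, which is one reason to predict a separate box per modality.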

Naturalistic driving studies, in which drivers use their personal vehicles, provide valuable real-world data, but privacy issues must be handled very carefully. Drivers sign a consent form when they elect to participate, but passengers do not, for a variety of practical reasons; their privacy must nonetheless be protected. One large study includes a blurred image of the entire cabin, which allows reviewers to find passengers in the vehicle. This protects privacy while still providing a means of answering questions about the impact of passengers on driver behavior. A method for automatically counting passengers would have scientific value for transportation researchers. We investigated several image analysis methods for automatically locating and counting non-drivers, including simple face detection, fine-tuned image classification methods, and a published object detection method. We also compared image classification using convolutional neural network and vision transformer backbones. Our studies show that the image classification method appears to work best in terms of absolute performance. However, the closed nature of our dataset and the nature of the imagery make the application somewhat niche, and object detection methods also have advantages. We present some analysis to support this conclusion.
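
With an object detection method, counting non-drivers requires one extra step: turning per-person detections into a count. The sketch below shows one hypothetical way to do that. It keeps confident detections and discards any box that lies mostly inside an assumed driver-seat region. The region, the thresholds, and the function names are illustrative assumptions and are not taken from the study.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def overlap_fraction(box: Box, region: Box) -> float:
    """Fraction of `box` area that lies inside `region`."""
    ix = max(0.0, min(box[2], region[2]) - max(box[0], region[0]))
    iy = max(0.0, min(box[3], region[3]) - max(box[1], region[1]))
    area = (box[2] - box[0]) * (box[3] - box[1])
    return ix * iy / area if area > 0 else 0.0


def count_non_drivers(detections: List[Tuple[Box, float]], driver_region: Box,
                      score_thr: float = 0.5, driver_overlap: float = 0.5) -> int:
    """Count confident person detections that do not mostly fall in the driver-seat region."""
    count = 0
    for box, score in detections:
        if score < score_thr:
            continue  # discard low-confidence detections
        if overlap_fraction(box, driver_region) >= driver_overlap:
            continue  # assume this detection is the driver
        count += 1
    return count


# Hypothetical 640 x 480 cabin image with the driver seat occupying the left half.
driver_region = (0.0, 0.0, 320.0, 480.0)
detections = [
    ((50.0, 100.0, 250.0, 400.0), 0.90),   # driver
    ((400.0, 100.0, 600.0, 400.0), 0.85),  # front passenger
    ((330.0, 80.0, 420.0, 200.0), 0.70),   # rear passenger
    ((300.0, 50.0, 350.0, 120.0), 0.30),   # low-confidence false positive
]
print(count_non_drivers(detections, driver_region))  # 2
```

A classification approach would instead predict the count (or occupancy per seat) directly from the whole image, which avoids tuning thresholds like these.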

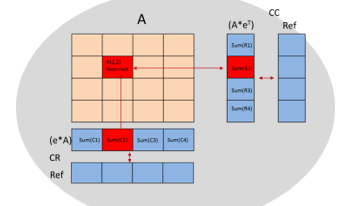

With artificial intelligence (AI) becoming the mainstream approach to solving a myriad of problems across industrial, automotive, medical, military, wearable, and cloud applications, the need for high-performance, low-power embedded devices is stronger than ever. Innovations in designing efficient hardware accelerators for AI tasks also involve making them fault-tolerant, so that they work reliably under varying, stressful environmental conditions. These embedded devices may be deployed under varying thermal and electromagnetic interference conditions. Both the processing blocks and the on-device memories must therefore recover from faults and provide a reliable quality of service. In the automotive context in particular, ASIL-B compliant AI systems typically implement an error-correcting code (ECC) that provides single-error correction and double-error detection (SECDED). ASIL-D based AI systems implement dual-lockstep compute blocks and build processing redundancy to reinforce prediction certainty, on top of protecting their memories. Fault-tolerant systems go one level further by tripling the processing blocks, so that a fault in one processing element is corrected by majority voting with the other two. This adds significant silicon area and makes the solution expensive. In this paper, we propose novel techniques for a typical deep-learning based embedded solution with many processing stages, such as memory load, matrix multiply, accumulate, and activation functions. These techniques build a robust fault-tolerant system without tripling the compute area, and hence the cost, of the solution.
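
The two baseline protection schemes mentioned above can be sketched in a few lines. The first is SECDED, shown here as a textbook extended Hamming(8,4) code on a 4-bit word; real accelerator memories typically use wider codes such as (72,64). The second is the bitwise majority voter used in triple modular redundancy (TMR). This is an illustrative sketch of those standard techniques, not the novel techniques proposed in the paper.

```python
def secded_encode(nibble):
    """Encode 4 data bits into an 8-bit extended Hamming codeword (list of bits, index = position)."""
    d = [(nibble >> i) & 1 for i in range(4)]
    c = [0] * 8
    c[3], c[5], c[6], c[7] = d        # data bits at non-power-of-two positions
    c[1] = c[3] ^ c[5] ^ c[7]         # parity over positions with bit 0 set
    c[2] = c[3] ^ c[6] ^ c[7]         # parity over positions with bit 1 set
    c[4] = c[5] ^ c[6] ^ c[7]         # parity over positions with bit 2 set
    c[0] = sum(c[1:]) % 2             # overall parity enables double-error detection
    return c


def secded_decode(codeword):
    """Return (nibble, status) with status in {"ok", "corrected", "double_error"}."""
    c = list(codeword)
    syndrome = 0
    for pos in range(1, 8):
        if c[pos]:
            syndrome ^= pos           # XOR of set positions is 0 for a valid codeword
    overall_odd = sum(c) % 2 == 1
    if syndrome == 0 and not overall_odd:
        status = "ok"
    elif overall_odd:
        c[syndrome] ^= 1              # single error; syndrome 0 means c[0] itself flipped
        status = "corrected"
    else:
        status = "double_error"       # even overall parity but nonzero syndrome
    nibble = c[3] | (c[5] << 1) | (c[6] << 2) | (c[7] << 3)
    return nibble, status


def tmr_vote(a, b, c):
    """Bitwise majority of three redundant results (triple modular redundancy)."""
    return (a & b) | (a & c) | (b & c)


word = secded_encode(0b1011)
word[6] ^= 1                          # inject a single bit flip
print(secded_decode(word))            # (11, 'corrected')
word[2] ^= 1                          # inject a second flip
print(secded_decode(word)[1])         # double_error
print(tmr_vote(0b1010, 0b1010, 0b0110))  # 10
```

SECDED adds a few parity bits per word. TMR triplicates the entire computation, which is the silicon-area cost the paper aims to avoid.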

Automated extraction of intersection topologies from aerial and street-level images is relevant for Smart City traffic-control and safety applications. The intersection topology is expressed by the number of approach lanes, the crossing (conflict) area, and the presence of painted striping for guidance and road delineation. Segmentation of the road surface and other basic information can be obtained with a score of 80% or higher. The segmentation and modeling of intersections, however, is much more complex, due to multiple lanes in various directions and occlusion of the painted striping. This paper addresses this complicated problem by proposing a dualistic channel model that combines direct segmentation with domain knowledge. These channels extract specific features, such as drive lines and lane information based on painted striping, which are filtered and then fused to determine an intersection-topology model. The algorithms and models are evaluated on two datasets: a large mixture of highway and urban intersections, and a smaller dataset with intersections only. Experiments measuring the GEO metric show that the proposed late-fusion system increases the recall score by 47 percentage points. This recall gain is consistent whether aerial imagery alone or a mixture of aerial and street-level orthographic image data is used. The obtained recall for intersections is much lower than for highway data because of the complexity, occlusion by trees, and the small number of annotated intersections. Future work should aim at consolidating this model improvement at a higher recall level with more annotated intersection data.
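
GEO-style metrics score a predicted road or lane map by sampling points along the ground-truth and predicted geometry. The samples are matched one-to-one within a distance threshold, which yields recall (matched / ground truth) and precision (matched / predicted). The sketch below is a simplified, greedy variant that assumes already-sampled 2D points. The paper's exact sampling interval, threshold, and matching procedure may differ. A gain of 47 percentage points is an absolute difference between two such recall values, not a relative increase.

```python
import numpy as np


def geo_style_scores(gt_points, pred_points, threshold):
    """Greedy one-to-one matching of sampled points within `threshold`; returns (recall, precision)."""
    gt = np.asarray(gt_points, dtype=float)
    pred = np.asarray(pred_points, dtype=float)
    if len(gt) == 0 or len(pred) == 0:
        return 0.0, 0.0
    dists = np.linalg.norm(gt[:, None, :] - pred[None, :, :], axis=-1)
    candidates = np.argwhere(dists <= threshold)  # (gt_idx, pred_idx) pairs in range
    order = np.argsort(dists[candidates[:, 0], candidates[:, 1]], kind="stable")
    used_gt, used_pred, matches = set(), set(), 0
    for i, j in candidates[order]:                # closest pairs are matched first
        i, j = int(i), int(j)
        if i in used_gt or j in used_pred:
            continue
        used_gt.add(i)
        used_pred.add(j)
        matches += 1
    return matches / len(gt), matches / len(pred)


# Hypothetical lane centerline sampled every 1 m; the prediction covers only its first half.
gt = [(x, 0.0) for x in range(10)]
pred = [(x, 0.5) for x in range(5)]
print(geo_style_scores(gt, pred, threshold=1.0))  # (0.5, 1.0)
```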

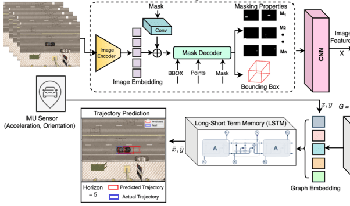

Predicting the trajectory of the ego vehicle is a critical component of autonomous driving systems. Current state-of-the-art methods typically rely on Deep Neural Networks (DNNs) and sequential models that process front-view images to predict future trajectories. However, these approaches often struggle with perspective issues affecting object features in the scene. To address this, we advocate the use of Bird’s Eye View (BEV) representations, which offer unique advantages in capturing spatial relationships and object homogeneity. In our work, we leverage Graph Neural Networks (GNNs) and positional encoding to represent objects in BEV, achieving performance competitive with traditional DNN-based methods. Although the BEV-based approach loses some of the detailed information inherent to front-view images, we compensate for this by representing the BEV data as a graph that effectively captures the relationships between objects in a scene.
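
The general idea of representing a BEV scene as a graph can be sketched with NumPy. Each object becomes a node whose features combine its class with a sinusoidal positional encoding of its BEV coordinates. Edges connect nearby objects, and one mean-aggregation message-passing layer mixes information between neighbours. The scene, radius, frequency ladder, and random weights are all hypothetical. This illustrates GNNs with positional encoding in general, not the architecture used in this work.

```python
import numpy as np


def positional_encoding(coords, num_freqs=4):
    """Sinusoidal encoding of (N, 2) BEV coordinates -> (N, 4 * num_freqs) features."""
    freqs = 2.0 ** np.arange(num_freqs)                      # assumed geometric frequency ladder
    angles = coords[:, :, None] * freqs                      # (N, 2, F)
    enc = np.concatenate([np.sin(angles), np.cos(angles)], axis=-1)
    return enc.reshape(coords.shape[0], -1)


def radius_graph(coords, radius):
    """Adjacency matrix linking objects closer than `radius` metres (no self-loops)."""
    d = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    adj = (d < radius).astype(float)
    np.fill_diagonal(adj, 0.0)
    return adj


def gnn_layer(h, adj, w_self, w_neigh):
    """Mean-aggregation message passing: h_i' = ReLU(h_i W_s + mean_{j in N(i)} h_j W_n)."""
    deg = adj.sum(axis=1, keepdims=True)
    neigh_mean = (adj @ h) / np.maximum(deg, 1.0)            # isolated nodes get a zero message
    return np.maximum(0.0, h @ w_self + neigh_mean @ w_neigh)


# Hypothetical BEV scene: ego at the origin plus three objects (x, y in metres).
coords = np.array([[0.0, 0.0], [2.0, 1.0], [10.0, 10.0], [11.0, 9.0]])
obj_type = np.eye(2)[[0, 1, 1, 0]]                           # one-hot class, e.g. vehicle/pedestrian
h0 = np.concatenate([obj_type, positional_encoding(coords / 10.0)], axis=1)  # (4, 18)
adj = radius_graph(coords, radius=5.0)
rng = np.random.default_rng(0)
w_self, w_neigh = rng.normal(size=(2, h0.shape[1], 32))
h1 = gnn_layer(h0, adj, w_self, w_neigh)
print(h1.shape)  # (4, 32)
```

In a trained model the weights would be learned, and a trajectory decoder would read the ego node's embedding after several such layers.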

Traffic simulation is a critical tool that psychologists and engineers use to study road behavior and improve safety standards. However, creating large 3D virtual environments requires specific technical expertise that traditionally trained traffic researchers may not have. This research proposes an approach that uses fundamental image processing techniques to identify key features of an environment from a top-down view, such as satellite imagery or maps. The segmented data from the processed image are then used to create an approximate 3D virtual environment: a mesh of the detected roads is generated automatically, while buildings and vegetation are selected from a library based on detected attributes. This approach would enable traffic researchers with little to no 3D modeling experience to create large, complex environments for studying a variety of traffic scenarios.
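
As a stand-in for the "fundamental image processing techniques" mentioned above, the sketch below labels pixels of a top-down tile by simple colour rules: mid-grey is treated as road and green-dominant as vegetation. It then groups vegetation pixels into 4-connected regions with a breadth-first flood fill. The colour thresholds and the synthetic tile are illustrative assumptions, since the abstract does not specify the actual pipeline. Per-region attributes such as area could then drive the choice of library assets.

```python
from collections import deque

import numpy as np

ROAD, VEGETATION = 1, 2


def classify_pixels(rgb):
    """Label pixels of an (H, W, 3) top-down image: 0=other, 1=road, 2=vegetation.

    The colour thresholds are illustrative assumptions, not tuned values.
    """
    rgb = rgb.astype(float)                   # avoid uint8 overflow in comparisons
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    spread = rgb.max(axis=-1) - rgb.min(axis=-1)
    brightness = rgb.mean(axis=-1)
    labels = np.zeros(rgb.shape[:2], dtype=np.uint8)
    labels[(spread < 20) & (brightness > 80) & (brightness < 200)] = ROAD  # mid grey
    labels[(g > r + 20) & (g > b + 20)] = VEGETATION                       # green-dominant
    return labels


def connected_components(mask):
    """4-connected components of a boolean mask, each as a list of (row, col) pixels."""
    h, w = mask.shape
    seen = np.zeros((h, w), dtype=bool)
    components = []
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or seen[y, x]:
                continue
            seen[y, x] = True
            queue, comp = deque([(y, x)]), []
            while queue:                      # breadth-first flood fill
                cy, cx = queue.popleft()
                comp.append((cy, cx))
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            components.append(comp)
    return components


# Synthetic 20 x 20 map tile: white background, grey road band, two green patches.
tile = np.full((20, 20, 3), 255, dtype=np.uint8)
tile[8:12, :] = (128, 128, 128)
tile[0:4, 0:4] = (40, 160, 40)
tile[15:20, 15:20] = (40, 160, 40)
labels = classify_pixels(tile)
patches = connected_components(labels == VEGETATION)
print((labels == ROAD).sum(), sorted(len(p) for p in patches))  # 80 [16, 25]
```

Real satellite imagery would need more robust segmentation than fixed colour rules, but the output has the same shape: a road mask for meshing and a list of regions with attributes.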