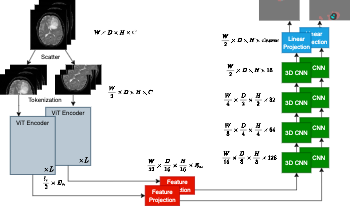

We introduce an efficient distributed sequence-parallel approach for training transformer-based deep learning image segmentation models. The models combine a Vision Transformer encoder with a convolutional decoder to produce image segmentation mappings. The approach is especially useful when the tokenized embedding representation of the image data is too large to fit into the memory of standard computing hardware. To compare the performance and characteristics of models trained in sequence-parallel fashion against standard models, we evaluate our approach on a 3D MRI brain tumor segmentation dataset. We show that sequence-parallel training can match standard sequential training in terms of convergence. Furthermore, we show that our approach can support training models that could not be trained on standard computing resources.

Isaac Lyngaas, Murali Gopalakrishnan Meena, Evan Calabrese, Mohamed Wahib, Peng Chen, Jun Igarashi, Yuankai Huo, Xiao Wang, "Efficient Distributed Sequence Parallelism for Transformer-Based Image Segmentation," in Electronic Imaging, 2024, pp. 199-1 - 199-7, https://doi.org/10.2352/EI.2024.36.12.HPCI-199
