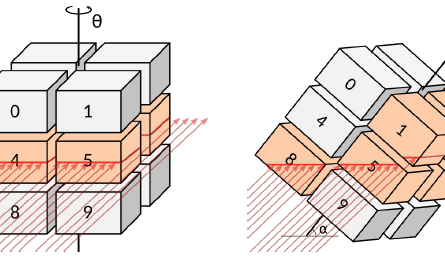

Laminography is a specialized 3D imaging technique optimized for examining flat, elongated structures. Laminographic reconstruction is the process of generating a 3D volume from the set of 2D projections collected during a laminography experiment. Iterative reconstruction techniques are typically the preferred computational method for generating high-quality 3D volumes; however, these methods are computationally demanding and can therefore be infeasible to apply to large datasets. To address these challenges, we require state-of-the-art computational methods that can efficiently utilize high-performance computing resources such as GPUs. In this work, we investigate the integration of the Unequally Spaced Fast Fourier Transform (USFFT) with two optimization methods: the Alternating Direction Method of Multipliers (ADMM) and the Conjugate Gradient (CG) method. The use of USFFT addresses the non-uniform sampling typical of laminography, while the combination of ADMM and CG introduces robust regularization that enhances image quality by preserving edges and reducing noise. We further accelerate the USFFT-based iterative algorithm by preprocessing the image into the frequency domain. Compared to the original algorithm, the optimized USFFT method achieves a 1.82x speedup. By harnessing heterogeneous and parallel computing across both CPU and GPU, our approach significantly accelerates the reconstruction process while preserving the quality of the generated images. We evaluate the performance of our methods on real-world datasets collected at the 32-ID beamline of the Advanced Photon Source, using Argonne Leadership Computing Resources.
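As a rough illustration of the CG step mentioned above, the following sketch minimizes a least-squares data-fidelity term using conjugate gradient on the normal equations. It is not the authors' implementation: the dense random matrix `A` is a hypothetical stand-in for the USFFT-based laminography operator, the function name `cg_normal_equations` is our own, and the ADMM regularization loop is omitted entirely.

```python
import numpy as np

def cg_normal_equations(forward, adjoint, data, x0, n_iter=50, tol=1e-20):
    """Minimize 0.5 * ||forward(x) - data||^2 with CG on the normal equations.

    `forward` and `adjoint` are callables so that a matrix-free operator
    (for example an FFT-based projector) could be plugged in.
    """
    x = x0.copy()
    r = adjoint(data - forward(x))      # residual of the normal equations
    p = r.copy()                        # initial search direction
    rs_old = np.vdot(r, r).real
    for _ in range(n_iter):
        if rs_old < tol:                # squared residual norm small enough
            break
        Ap = adjoint(forward(p))        # apply A^H A to the search direction
        alpha = rs_old / np.vdot(p, Ap).real
        x = x + alpha * p
        r = r - alpha * Ap
        rs_new = np.vdot(r, r).real
        p = r + (rs_new / rs_old) * p   # new conjugate direction
        rs_old = rs_new
    return x

# Toy problem: a random overdetermined system (assumption, not laminography data).
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 10))
x_true = rng.standard_normal(10)
d = A @ x_true

x_rec = cg_normal_equations(lambda x: A @ x, lambda y: A.T @ y, d, np.zeros(10))
print("max abs error:", np.max(np.abs(x_rec - x_true)))
```

Passing the operator as a pair of callables keeps the solver independent of how projections are computed, which is the property that would let a faster forward model be swapped in without touching the optimization loop.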

The use of supercomputers and large clusters for big-data processing has recently gained immense popularity, primarily due to the widespread adoption of Graphics Processing Units (GPUs) to execute iterative algorithms, such as those in Deep Learning and 2D/3D imaging applications. This trend is especially prominent for large-scale datasets, which can range from hundreds of gigabytes to several terabytes in size. As in Deep Learning, which deals with datasets of comparable or even greater size (e.g., LION-3B), these efforts encounter complex challenges related to data storage, retrieval, and efficient GPU utilization. In this work, we benchmark a collection of high-performance general dataloaders used in Deep Learning, with a dual focus on user-friendliness (a Pythonic interface) and high-performance execution. These dataloaders have become crucial tools: advanced dataloading solutions such as Web-datasets, FFCV, and DALI have demonstrated significantly better performance than the traditional general-purpose PyTorch dataloader. This work provides a comprehensive benchmarking analysis of these dataloaders for handling extensive datasets in supercomputer environments. Our findings indicate that DALI outperforms our baseline PyTorch dataloader by up to 3.4x in loading time for datasets comprising one million images.
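A loading-time comparison of this kind can be sketched with a small, loader-agnostic timing harness. The code below is an assumption about how such a measurement might be structured, not the benchmark used in this work: `benchmark_loader`, `speedup`, and `dummy_loader` are hypothetical names, and the dummy generator stands in for a real dataloader, which would be passed in as any iterable of batches.

```python
import time

def benchmark_loader(loader, warmup_batches=2):
    """Time one pass over an iterable of batches.

    Returns (samples_counted, seconds, samples_per_second). The first
    `warmup_batches` batches are consumed but excluded from the timing,
    so one-off startup costs do not dominate the result.
    """
    it = iter(loader)
    for _ in range(warmup_batches):
        next(it, None)
    n_samples = 0
    start = time.perf_counter()
    for batch in it:
        n_samples += len(batch)
    elapsed = time.perf_counter() - start
    rate = n_samples / elapsed if elapsed > 0 else float("inf")
    return n_samples, elapsed, rate

def speedup(baseline_seconds, candidate_seconds):
    """Ratio of baseline to candidate loading time (values above 1 mean faster)."""
    return baseline_seconds / candidate_seconds

def dummy_loader(n_samples, batch_size):
    """Hypothetical stand-in for a real dataloader: yields lists of sample ids."""
    for start in range(0, n_samples, batch_size):
        yield list(range(start, min(start + batch_size, n_samples)))

n, secs, rate = benchmark_loader(dummy_loader(1000, 32))
print(f"{n} samples in {secs:.6f} s")
```

In a real measurement the same harness would be run over each loader with identical batch size and dataset, and `speedup` applied to the resulting times.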

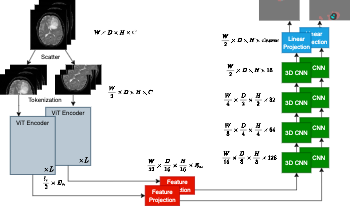

We introduce an efficient distributed sequence parallel approach for training transformer-based deep learning image segmentation models. The models combine a Vision Transformer encoder with a convolutional decoder to produce image segmentation maps. The distributed sequence parallel approach is especially useful when the tokenized embedding representation of the image data is too large to fit into the memory of standard computing hardware. To demonstrate the performance and characteristics of models trained in sequence parallel fashion compared to standard models, we evaluate our approach on a 3D MRI brain tumor segmentation dataset. We show that training with a sequence parallel approach can match standard sequential model training in terms of convergence. Furthermore, we show that our sequence parallel approach can support the training of models that would not be possible on standard computing resources.
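The idea of splitting a token sequence across workers can be illustrated with a single-process NumPy sketch. This is one common sequence-parallel scheme, assumed here for illustration and not necessarily the one used in this work: each simulated rank owns a shard of the query tokens and attends over the full set of keys and values, which in a real distributed setting would be exchanged between devices. All function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    """Single-head scaled dot-product attention over 2D (tokens, dim) arrays."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def sequence_parallel_attention(q, k, v, n_ranks):
    """Simulate sequence parallelism by sharding the query tokens.

    Each simulated rank computes the output rows for its own query shard,
    so the (tokens x tokens) score matrix is never formed in one piece.
    """
    q_shards = np.array_split(q, n_ranks, axis=0)
    outputs = [attention(q_s, k, v) for q_s in q_shards]
    return np.concatenate(outputs, axis=0)

rng = np.random.default_rng(0)
n_tokens, dim = 64, 16          # toy sizes; real 3D volumes yield far more tokens
q = rng.standard_normal((n_tokens, dim))
k = rng.standard_normal((n_tokens, dim))
v = rng.standard_normal((n_tokens, dim))

full = attention(q, k, v)
sharded = sequence_parallel_attention(q, k, v, n_ranks=4)
print("outputs match:", np.allclose(full, sharded))
```

Because each output row depends only on its own query and the shared keys and values, the sharded result is mathematically identical to the unsharded one, which is consistent with the convergence parity reported above.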