References

1. Rebecq H, Horstschäfer T, Gallego G, Scaramuzza D. 2016 EVO: A geometric approach to event-based 6-DOF parallel tracking and mapping in real time. IEEE Robot. Autom. Lett. 2, 593–600. (doi:10.1109/LRA.2016.2645143)

2. Jiang Z, Zhang Y, Zou D, Ren J, Lv J, Liu Y. 2020 Learning event-based motion deblurring. In CVPR, pp. 3320–3329. Piscataway, NJ: IEEE. (doi:10.1109/CVPR42600.2020.00338)

3. Zhu AZ, Yuan L. 2018 EV-FlowNet: Self-supervised optical flow estimation for event-based cameras. In Robotics: Science and Systems. (doi:10.15607/RSS.2018.XIV.062)

4. Vidal AR, Rebecq H, Horstschaefer T, Scaramuzza D. 2018 Ultimate SLAM? Combining events, images, and IMU for robust visual SLAM in HDR and high-speed scenarios. IEEE Robot. Autom. Lett. 3, 994–1001. (doi:10.1109/LRA.2018.2793357)

5. Tulyakov S, Gehrig D, Georgoulis S, Erbach J, Gehrig M, Li Y, Scaramuzza D. 2021 Time Lens: Event-based video frame interpolation. In CVPR, pp. 16155–16164. Piscataway, NJ: IEEE. (doi:10.1109/CVPR46437.2021.01589)

6. Lichtsteiner P, Posch C, Delbruck T. 2008 A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576. (doi:10.1109/JSSC.2007.914337)

7. Brandli C, Berner R, Yang M, Liu S-C, Delbruck T. 2014 A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 49, 2333–2341. (doi:10.1109/JSSC.2014.2342715)

8. Rebecq H, Ranftl R, Koltun V, Scaramuzza D. 2019 Events-to-video: Bringing modern computer vision to event cameras. In CVPR, pp. 3857–3866. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2019.00398)

9. Scheerlinck C, Rebecq H, Gehrig D, Barnes N, Mahony R, Scaramuzza D. 2020 Fast image reconstruction with an event camera. In WACV, pp. 156–163. Piscataway, NJ: IEEE. (doi:10.1109/WACV45572.2020.9093366)

10. Gehrig D, Rebecq H, Gallego G, Scaramuzza D. 2018 Asynchronous, photometric feature tracking using events and frames. In ECCV, pp. 750–765. Cham: Springer. (doi:10.1007/978-3-030-01258-8_46)

11. Lin S, Zhang J, Pan J, Jiang Z, Zou D, Wang Y, Chen J, Ren J. 2020 Learning event-driven video deblurring and interpolation. In ECCV, pp. 695–710. Cham: Springer. (doi:10.1007/978-3-030-58598-3_41)

12. Zhu AZ, Yuan L, Chaney K, Daniilidis K. 2019 Unsupervised event-based learning of optical flow, depth, and egomotion. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2019.00108)

13. Kim H, Leutenegger S, Davison AJ. 2016 Real-time 3D reconstruction and 6-DoF tracking with an event camera. In ECCV, pp. 349–364. Cham: Springer. (doi:10.1007/978-3-319-46466-4_21)

14. Kueng B, Mueggler E, Gallego G, Scaramuzza D. 2016 Low-latency visual odometry using event-based feature tracks. In IROS, pp. 16–23. Piscataway, NJ: IEEE. (doi:10.1109/IROS.2016.7758089)

15. Barua S, Miyatani Y, Veeraraghavan A. 2016 Direct face detection and video reconstruction from event cameras. In WACV. Piscataway, NJ: IEEE. (doi:10.1109/WACV.2016.7477561)

16. Bardow P, Davison AJ, Leutenegger S. 2016 Simultaneous optical flow and intensity estimation from an event camera. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2016.102)

17. Wang L, Mohammad Mostafavi IS, Ho Y-S, Yoon K-J. 2019 Event-based high dynamic range image and very high frame rate video generation using conditional generative adversarial networks. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2019.01032)

18. Mohammad Mostafavi IS, Choi J, Yoon K-J. 2020 Learning to super resolve intensity images from events. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR42600.2020.00284)

19. Wang L, Kim T-K, Yoon K-J. 2020 EventSR: From asynchronous events to image reconstruction, restoration, and super-resolution via end-to-end adversarial learning. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR42600.2020.00834)

20. Stoffregen T, Scheerlinck C, Scaramuzza D, Drummond T, Barnes N, Kleeman L, Mahony R. 2020 Reducing the sim-to-real gap for event cameras. In ECCV. Cham: Springer. (doi:10.1007/978-3-030-58583-9_32)

21. Yoon K-J, Kweon IS. 2006 Adaptive support-weight approach for correspondence search. IEEE Trans. Pattern Anal. Mach. Intell. 28, 650–656. (doi:10.1109/TPAMI.2006.70)

22. Hosni A, Rhemann C, Bleyer M, Rother C, Gelautz M. 2013 Fast cost-volume filtering for visual correspondence and beyond. IEEE Trans. Pattern Anal. Mach. Intell. (doi:10.1109/TPAMI.2012.156)

23. Zbontar J, LeCun Y. 2015 Computing the stereo matching cost with a convolutional neural network. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2015.7298767)

24. Luo W, Schwing AG, Urtasun R. 2016 Efficient deep learning for stereo matching. In CVPR, pp. 5695–5703. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2016.614)

25. Mayer N, Ilg E, Hausser P, Fischer P, Cremers D, Dosovitskiy A, Brox T. 2016 A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In CVPR, pp. 4040–4048. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2016.438)

26. Kendall A, Martirosyan H, Dasgupta S, Henry P, Kennedy R, Bachrach A, Bry A. 2017 End-to-end learning of geometry and context for deep stereo regression. In ICCV. Piscataway, NJ: IEEE. (doi:10.1109/ICCV.2017.17)

27. Chang J-R, Chen Y-S. 2018 Pyramid stereo matching network. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2018.00567)

28. Zhang F, Prisacariu V, Yang R, Torr PHS. 2019 GA-Net: Guided aggregation net for end-to-end stereo matching. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2019.00027)

29. Duggal S, Wang S, Ma W-C, Hu R, Urtasun R. 2019 DeepPruner: Learning efficient stereo matching via differentiable PatchMatch. In ICCV, pp. 4384–4393. Piscataway, NJ: IEEE. (doi:10.1109/ICCV.2019.00448)

30. Xu H, Zhang J. 2020 AANet: Adaptive aggregation network for efficient stereo matching. In CVPR, pp. 1959–1968. Piscataway, NJ: IEEE. (doi:10.1109/CVPR42600.2020.00203)

31. Chiu WW-C, Blanke U, Fritz M. 2011 Improving the Kinect by cross-modal stereo. In BMVC, pp. 116.1–116.10. Dundee, UK.

32. Zhi T, Pires BR, Hebert M, Narasimhan SG. 2018 Deep material-aware cross-spectral stereo matching. In CVPR, pp. 1916–1925. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2018.00205)

33. Shen X, Xu L, Zhang Q, Jia J. 2014 Multi-modal and multi-spectral registration for natural images. In ECCV, pp. 309–324. Cham: Springer. (doi:10.1007/978-3-319-10593-2_21)

34. Jeon H-G, Lee J-Y, Im S, Ha H, Kweon IS. 2016 Stereo matching with color and monochrome cameras in low-light conditions. In CVPR, pp. 4086–4094. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2016.443)

35. Kim S, Min D, Ham B, Ryu S, Do MN, Sohn K. 2015 DASC: Dense adaptive self-correlation descriptor for multi-modal and multi-spectral correspondence. In CVPR, pp. 2103–2112. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2015.7298822)

36. Kim S, Min D, Lin S, Sohn K. 2016 Deep self-correlation descriptor for dense cross-modal correspondence. In ECCV, pp. 679–695. Cham: Springer. (doi:10.1007/978-3-319-46484-8_41)

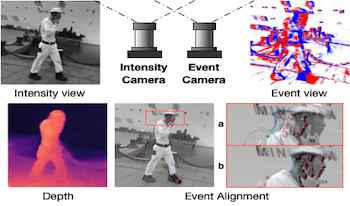

37. Mohammad Mostafavi IS, Yoon K-J, Choi J. 2021 Event-intensity stereo: Estimating depth by the best of both worlds. In ICCV, pp. 4258–4267. Piscataway, NJ: IEEE. (doi:10.1109/ICCV48922.2021.00422)

38. Garg R, Kumar BV, Carneiro G, Reid I. 2016 Unsupervised CNN for single view depth estimation: Geometry to the rescue. In ECCV, pp. 740–756. Cham: Springer. (doi:10.1007/978-3-319-46484-8_45)

39. Godard C, Mac Aodha O, Brostow GJ. 2017 Unsupervised monocular depth estimation with left-right consistency. In CVPR. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2017.699)

40. Godard C, Mac Aodha O, Firman M, Brostow GJ. 2019 Digging into self-supervised monocular depth estimation. In ICCV, pp. 3828–3838. Piscataway, NJ: IEEE. (doi:10.1109/ICCV.2019.00393)

41. Zhou C, Zhang H, Shen X, Jia J. 2017 Unsupervised learning of stereo matching. In ICCV, pp. 1567–1575. Piscataway, NJ: IEEE. (doi:10.1109/ICCV.2017.174)

42. Rebecq H, Ranftl R, Koltun V, Scaramuzza D. 2021 High speed and high dynamic range video with an event camera. IEEE Trans. Pattern Anal. Mach. Intell. 43, 1964–1980. (doi:10.1109/TPAMI.2019.2963386)

43. Zhang R, Isola P, Efros AA, Shechtman E, Wang O. 2018 The unreasonable effectiveness of deep features as a perceptual metric. In CVPR, pp. 586–595. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2018.00068)

44. Gu J, Cai H, Chen H, Ye X, Ren JS, Dong C. 2020 PIPAL: A large-scale image quality assessment dataset for perceptual image restoration. In ECCV, pp. 633–651. Cham: Springer. (doi:10.1007/978-3-030-58621-8_37)

45. Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. 2004 Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 13, 600–612. (doi:10.1109/TIP.2003.819861)

46. Zhou S, Zhang J, Zuo W, Xie H, Pan J, Ren JS. 2019 DAVANet: Stereo deblurring with view aggregation. In CVPR, pp. 10996–11005. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2019.01125)

47. Jiang H, Sun D, Jampani V, Yang M-H, Learned-Miller E, Kautz J. 2018 Super SloMo: High quality estimation of multiple intermediate frames for video interpolation. In CVPR, pp. 9000–9008. Piscataway, NJ: IEEE. (doi:10.1109/CVPR.2018.00938)

48. Delbruck T, Hu Y, He Z. 2021 V2E: From video frames to realistic DVS event camera streams. In CVPR. Piscataway, NJ: IEEE.

49. Zhu AZ, Thakur D, Özaslan T, Pfrommer B, Kumar V, Daniilidis K. 2018 The multivehicle stereo event camera dataset: An event camera dataset for 3D perception. IEEE Robot. Autom. Lett. 3, 2032–2039. (doi:10.1109/LRA.2018.2800793)

50. Paszke A, Gross S, Massa F, Lerer A, Bradbury J, Chanan G, Killeen T, Lin Z, Gimelshein N, Antiga L, Desmaison A. 2019 PyTorch: An imperative style, high-performance deep learning library. In NeurIPS, pp. 8026–8037. Red Hook, NY: Curran Associates.

51. Kingma DP, Ba J. 2015 Adam: A method for stochastic optimization. In ICLR, San Diego, CA.

52. Gehrig D, Rüegg M, Gehrig M, Hidalgo-Carrió J, Scaramuzza D. 2021 Combining events and frames using recurrent asynchronous multimodal networks for monocular depth prediction. IEEE Robot. Autom. Lett. 6, 2822–2829. (doi:10.1109/LRA.2021.3060707)

53. Duan P, Wang ZW, Shi B, Cossairt O, Huang T, Katsaggelos A. 2021 Guided event filtering: Synergy between intensity images and neuromorphic events for high performance imaging. IEEE Trans. Pattern Anal. Mach. Intell. 44, 8261–8275. (doi:10.1109/TPAMI.2021.3113344)

54. Wang X, Yu K, Wu S, Gu J, Liu Y, Dong C, Qiao Y, Loy CC. 2018 ESRGAN: Enhanced super-resolution generative adversarial networks. In ECCV Workshops, pp. 63–79. Cham: Springer. (doi:10.1007/978-3-030-11021-5_5)
