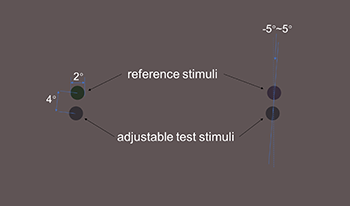

Three-dimensional (3D) displays employ the technique of binocular disparity, by presenting two separate images with parallax cues to the two eyes, resulting in the perception of stereo vision. The human brain can fuse these two images into a single stereoscopic image, provided that their color difference falls within the fusion limit. Prior research on binocular color fusion has mostly concentrated on assessing the fusion limit across different conditions, but limited attention has been given to investigating binocular color mixture, particularly concerning opposite color pairs. In order to explore the impact of background luminance on the binocular mixture of opposite colors, a series of color matching experiments were conducted for three background luminance levels using a custom-built stereoscopic display. The findings reveal that as the contrast of the background luminance decreases, the binocular color mixture is more affected by the sensory dominant eye.

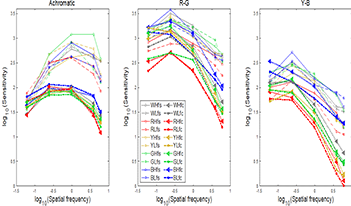

The goals of this work are to accumulate experimental data on contrast sensitivity functions and to establish a visual model that incorporates spatial frequency dependence. In the experimental design, two patterns were compared: fixed-size and fixed-cycle stimuli at different luminance levels. Detection thresholds were measured for chromatic contrast patterns at different spatial frequencies. The present experiment was conducted to form a more comprehensive dataset by combining the new data with our earlier data. The experimental parameters included (1) five colour centres (white, red, yellow, green and blue), as recommended by the International Commission on Illumination (CIE), at two different luminance levels for each colour centre; (2) three colour directions for each colour centre, namely luminance, red-green and yellow-blue; and (3) five spatial frequencies: 0.06, 0.24, 0.96, 3.84 and 6.00 cycles per degree (cpd). The present and earlier data were combined into a complete dataset used to develop and test different models. A 10-bit display characterized by the gain-offset-gamma (GOG) model was used to obtain contrast thresholds for the different colour centres using the 2-alternative forced choice and staircase methods. The experimental results revealed different parametric effects and also confirmed McCann’s finding that the number of cycles affects the comparative sensitivity. Finally, a cone contrast model and a postreceptoral contrast model proposed by Mantiuk et al. were developed by fitting the visual test data (fixed number of cycles and fixed size). The models could accurately predict the contrast sensitivity for different colour centres, spatial frequencies and stimulus types.
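The gain-offset-gamma characterization mentioned in this abstract can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `gog_channel` and `gog_inverse` and the default gain, offset and gamma values are hypothetical placeholders, and a real characterization would fit these parameters per channel to measured display data.

```python
def gog_channel(d, gain=1.0, offset=0.0, gamma=2.2, bits=10):
    """Normalized linear output of one display channel for digital count d.

    GOG form: y = (gain * d / d_max + offset) ** gamma, clipped at zero.
    The default parameter values are illustrative assumptions only.
    """
    d_max = 2 ** bits - 1  # 1023 for a 10-bit display
    base = gain * (d / d_max) + offset
    return max(base, 0.0) ** gamma


def gog_inverse(y, gain=1.0, offset=0.0, gamma=2.2, bits=10):
    """Nearest digital count that produces normalized output y (0..1)."""
    d_max = 2 ** bits - 1
    d = (y ** (1.0 / gamma) - offset) / gain * d_max
    # Clamp to the range of counts the display can actually address.
    return min(max(round(d), 0), d_max)


if __name__ == "__main__":
    y = gog_channel(512)
    print(f"count 512 -> normalized output {y:.4f}")
    print(f"round trip -> count {gog_inverse(y)}")
```

With 10 bits per channel, the inverse step shows how a small target contrast is quantized to the nearest of 1024 addressable counts.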

The purpose of this study is to accumulate colour appearance data under a high dynamic luminance range. Two experiments were conducted based on different types of stimuli: colour patches and colour images. The colour patch experiment was conducted to obtain matching data between colours on a display and the real scene viewed under high dynamic range viewing conditions. Ten observers assessed 13 stimuli under 6 illuminance levels: 15, 100, 1000, 3160, 10000 and 32000 lux. Observers adjusted the colour patches on a display screen to match the colour samples of the real scene against a neutral background (with a reflectivity of 35%). The visual results showed a clear trend: an increase in illuminance level raised perceived vividness (increases in both lightness and colourfulness). However, CAM16-UCS did not accurately predict the visual results, especially in the lightness direction. The model was then refined to achieve satisfactory performance and to faithfully reflect the visual phenomena. After modifying the lightness induction factor used in calculating lightness according to the adapting luminance, the model predictions agreed well with the visual results in the direction of colour shift. The colour image experiment was carried out using 3 images assessed by 10 observers to test CAM16-UCS on image generation. The results showed that the modified model based on patches did not perform well, and a further modification was made to produce a modified CAM16-UCS for images.

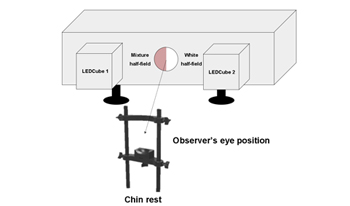

The visual changes caused by aging have an important effect on human vision. In this study, a series of color matching experiments were conducted to explore the differences in color matching results between observers of different age groups. The experiments were conducted using a visual trichromator illuminated by 18 LEDs with different peak wavelengths, which formed 11 different triplets of RGB primaries. The matching and reference fields were equally sized semicircular fields that together formed a circular field. A fixed triplet of RGB primaries produced the white reference field on the right side. It was matched on the left side by adjusting the primary intensities of each of the 11 triplets of RGB primaries. The white reference stimulus was fixed at a luminance level of 120 cd/m². One hundred Chinese observers with normal colour vision were divided by decades into 7 groups from 10 to 80 years old. The matches were analysed to estimate the observers' cone spectral sensitivities and photopigment, macular and lens optical densities. The experimental results show relatively little variability with age, except for the expected increase in lens density with age, and older observers showed more inter-observer variability. The analysis suggests that some of the mean colour matching parameters assumed by the CIE, such as the macular pigment optical density, do not apply to these observers.

Whether two stimuli appear to be of different colors depends on a host of factors, ranging from the observer, via viewing conditions, to content and context. Previous studies have explored just noticeable difference thresholds for uniform colors viewed with or without spatial separation, for complex images and for fine features like lines in architectural drawings. An important case that has not been characterized to date is that of continuous color transitions, such as those obtained when selecting two colors and generating a sequence of intermediate colors between them. Such transitions are often part of natural scenes (e.g., sunsets, the sky, curved surfaces, soft shadows) and are also commonly used in visual design, including for backgrounds and various graphical elements. Where the just noticeable difference lies in this case is explored here by way of a small-scale pilot experiment conducted in an uncontrolled, on-line setting. Its results suggest a threshold in the region of 0.5 to 0.8 ΔE2000 for the few stimuli evaluated and indicate behavior close to that of viewing solid colors without a gap. A pilot verification with complex images also showed thresholds in a comparable range.
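A continuous transition of the kind described here can be sketched by interpolating between two endpoint colors and checking the size of each step against a threshold. The sketch below is illustrative only and makes several assumptions: interpolation is linear in CIELAB, the step size is measured with the simple Euclidean ΔE*ab (CIE76) as a stand-in for ΔE2000, and the endpoint colors and the 0.5 threshold are hypothetical example values. Because the metric differs, the numbers are not directly comparable to the thresholds reported in the abstract.

```python
import math


def lab_gradient(start, end, steps):
    """Linearly interpolate `steps` CIELAB colors, endpoints included."""
    if steps < 2:
        raise ValueError("a gradient needs at least two colors")
    return [
        tuple(s + (e - s) * i / (steps - 1) for s, e in zip(start, end))
        for i in range(steps)
    ]


def delta_e76(lab1, lab2):
    """Euclidean CIELAB difference (CIE76), used here instead of CIEDE2000."""
    return math.dist(lab1, lab2)


def steps_for_threshold(start, end, threshold):
    """Smallest number of colors so that adjacent steps differ by <= threshold."""
    total = delta_e76(start, end)
    return max(2, math.ceil(total / threshold) + 1)


if __name__ == "__main__":
    start, end = (50.0, 0.0, 0.0), (60.0, 0.0, 0.0)  # hypothetical endpoints
    n = steps_for_threshold(start, end, threshold=0.5)
    colors = lab_gradient(start, end, n)
    largest = max(delta_e76(a, b) for a, b in zip(colors, colors[1:]))
    print(f"{n} colors, largest adjacent step {largest:.3f}")
```

Swapping `delta_e76` for a verified CIEDE2000 implementation would make the step sizes comparable to the thresholds quoted in ΔE2000 units.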

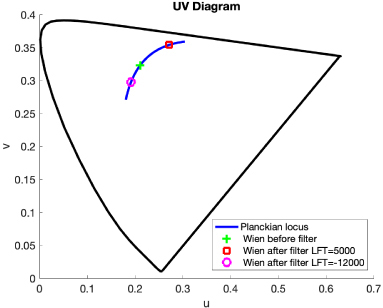

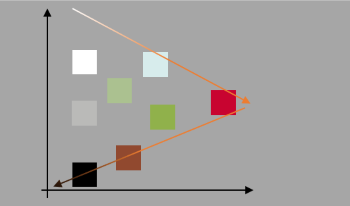

Recently, a theoretical framework was presented for designing colored filters called Locus Filters. Locus filters are designed so that any Wien-Planckian light, post filtering, is mapped to another Wien-Planckian light. Moreover, it was also shown that only filters designed according to the locus filter framework have this locus-to-locus mapping property. In this paper, we investigate how locus filters work in the real world. We make two main contributions. First, for daylights, we introduce a new daylight locus with respect to which a locus filter always maps a daylight to another daylight (and their correlated color temperature maps in analogy to the Wien-Planckian temperatures). Importantly, we show that our new locus is close to the standard daylight locus (but has a simpler and more elegant formalism). Secondly, we evaluate the extent to which some commercially available light balancing and color correction filters behave like locus filters.
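The locus-to-locus property can be illustrated with a short derivation under Wien's approximation. This is a sketch under stated assumptions rather than the paper's own formalism: the exponential filter form $F(\lambda)$ and the symbols $k$ and $\Delta$ are introduced here for illustration and are not given in the abstract.

```latex
% Wien approximation of a Planckian light at temperature T
E(\lambda, T) = c_1 \lambda^{-5} \exp\!\left(-\frac{c_2}{\lambda T}\right)

% Assumed filter transmittance, with scale k and reciprocal-temperature shift \Delta
F(\lambda) = k \exp\!\left(-\frac{c_2 \Delta}{\lambda}\right)

% Filtered light: again of Wien form, at a new temperature T'
E(\lambda, T)\, F(\lambda)
  = k\, c_1 \lambda^{-5} \exp\!\left(-\frac{c_2}{\lambda}\left(\frac{1}{T} + \Delta\right)\right)
  = k\, E(\lambda, T'),
\qquad \frac{1}{T'} = \frac{1}{T} + \Delta
```

Under this assumption the filter shifts every light by the same amount in reciprocal temperature, which is why a single filter can map the whole locus onto itself.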

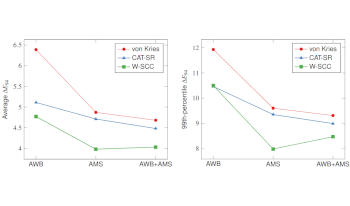

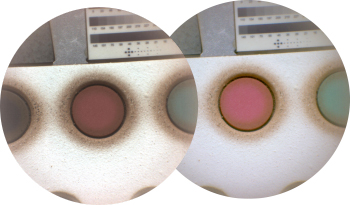

This paper presents a novel approach for spectral illuminant correction in smartphone imaging systems, aiming to improve color accuracy and enhance image quality. The methods introduced include Spectral Super Resolution and Weighted Spectral Color Correction (W-SCC). These techniques leverage the spectral information of both the image and the illuminant to perform effective color correction. Experimental evaluations were conducted using a dataset of 100 synthetic images, whose acquisition is simulated using the transmittance information of a Huawei P50 smartphone camera sensor and an Ambient light Multispectral Sensor (AMS). The results demonstrate the superiority of the proposed methods compared to traditional trichromatic pipelines, achieving significant reductions in colorimetric errors measured in terms of ΔE94 units. The W-SCC technique, in particular, incorporates per-wavelength weight optimization, further enhancing the accuracy of spectral illuminant correction. The presented approaches have valuable applications in various fields, including color analysis, computer vision, and image processing. Future research directions may involve exploring additional optimization techniques and incorporating advanced machine learning algorithms to further advance spectral illuminant correction in smartphone imaging systems.
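Since the errors in this abstract are reported in ΔE94 units, a minimal sketch of the CIE94 color difference between two CIELAB colors is given below. The function name `delta_e94` is hypothetical, and the weights (K1 = 0.045, K2 = 0.015, with all parametric factors set to 1) are the commonly used graphic-arts values, assumed here because the abstract does not state which weights were used.

```python
import math


def delta_e94(lab_ref, lab_sample, k_L=1.0, K1=0.045, K2=0.015):
    """CIE94 color difference between a reference and a sample in CIELAB.

    Assumes graphic-arts weights and k_C = k_H = 1; the weighting functions
    depend on the chroma of the reference color, so the formula is asymmetric.
    """
    L1, a1, b1 = lab_ref
    L2, a2, b2 = lab_sample
    C1 = math.hypot(a1, b1)
    C2 = math.hypot(a2, b2)
    dL = L1 - L2
    dC = C1 - C2
    # Hue difference squared is what remains of the a*, b* difference once
    # the chroma difference is removed; clamp tiny negative rounding errors.
    dH_sq = max((a1 - a2) ** 2 + (b1 - b2) ** 2 - dC ** 2, 0.0)
    S_L = 1.0
    S_C = 1.0 + K1 * C1
    S_H = 1.0 + K2 * C1
    return math.sqrt((dL / (k_L * S_L)) ** 2 + (dC / S_C) ** 2 + dH_sq / S_H ** 2)


if __name__ == "__main__":
    reference = (50.0, 10.0, 0.0)  # hypothetical CIELAB values
    sample = (50.0, 12.0, 0.0)
    print(f"dE94 = {delta_e94(reference, sample):.4f}")
```

For neutral colors the formula reduces to the plain lightness difference, while chroma differences are down-weighted as the reference chroma grows.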

Geologists consider it crucial to work on faithful images of Mars. However, no color correction is yet applied systematically to those images, mainly because of poor knowledge of the local Martian weather. The weather fluctuates strongly and, combined with the planet's low gravity, leads to varying amounts of dust in the atmosphere and to variations in ground illumination. The low discrimination of light variations by the Human Visual System is explained by Chromatic Adaptation (CA). Color image processing therefore often includes a step related to CA. This study investigates whether this step has to be applied to Mars images as well, through an illumination discrimination task performed by 15 observers for stimuli along the daylight locus and the solight locus (the lights of Mars), generated with a 7-LED lighting system. The results agree with other studies on the daylight locus and show small differences between results under daylight and solight.

Vividness and Depth are widely used in the imaging and textile industries. These scales were derived from the one-dimensional CIELAB scales L* and C*ab. However, they are relative scales tied to a reference white, which makes it difficult for them to adapt to the variation in the world. This paper presents an experiment that assesses a wide range of luminance levels, from 10 cd/m² to 10000 cd/m². The experiment employed magnitude estimation to gauge the perception of the Vividness, Depth, Whiteness, and Blackness scales. The judgments were obtained from 10 observers × 40 NCS samples × 4 lux levels × 4 scales × 1.1 (10% repeat set), resulting in a total of 7040 assessments. This article mainly describes the development of vividness and depth scales using combinations of relative scales, absolute scales, and mixture scales such as CAM16-UCS.