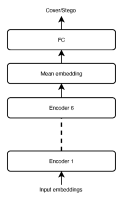

In batch steganography, the sender communicates a secret message by hiding it in a bag of cover objects. The adversary performs so-called pooled steganalysis: she inspects the entire bag to detect the presence of secrets. This is typically realized by taking a detector trained to detect secrets within a single object, applying it to every object in the bag, and feeding the detector outputs to a pooling function to obtain the final detection statistic. This paper addresses the problem of building the pooler, keeping in mind that the Warden must be able to detect steganography in variable-size bags carrying variable payloads. We propose a flexible machine learning solution to this challenge in the form of a Transformer Encoder Pooler, which is easily trained to be agnostic to bag size and payload and offers better detection accuracy than previously proposed poolers.
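The detect-then-pool pipeline described above can be illustrated with a minimal sketch. It is not the Transformer Encoder Pooler proposed in the paper: `toy_detector` and `mean_pooler` are hypothetical stand-ins, chosen only to show how per-object detector outputs are reduced to a single bag-level statistic regardless of bag size.

```python
from statistics import mean
from typing import Callable, Sequence

# An "object" here is just a sequence of floats; in practice it would be an image.
CoverObject = Sequence[float]


def toy_detector(obj: CoverObject) -> float:
    """Hypothetical single-object detector: returns a soft score for one object.

    A real detector would be a trained steganalysis model; this placeholder
    simply averages the object's values.
    """
    return sum(obj) / len(obj)


def mean_pooler(scores: Sequence[float]) -> float:
    """Simple baseline pooler (an assumption, not the paper's pooler)."""
    return mean(scores)


def pooled_statistic(
    bag: Sequence[CoverObject],
    detector: Callable[[CoverObject], float],
    pooler: Callable[[Sequence[float]], float],
) -> float:
    """Apply the detector to every object in the bag, then pool the outputs."""
    scores = [detector(obj) for obj in bag]
    return pooler(scores)


if __name__ == "__main__":
    # Bags of different sizes go through the same pipeline unchanged.
    small_bag = [[0.0, 1.0], [1.0, 1.0]]
    large_bag = [[0.2], [0.4], [0.6]]
    print(pooled_statistic(small_bag, toy_detector, mean_pooler))
    print(pooled_statistic(large_bag, toy_detector, mean_pooler))
```

Because the pooler receives a list of scores of any length, the same code handles bags of any size; a learned pooler would replace `mean_pooler` while keeping this interface.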

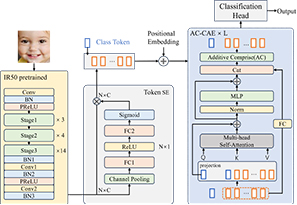

Facial Expression Recognition (FER) models based on the Vision Transformer (ViT) have demonstrated promising performance on diverse datasets. However, the transformer encoder is computationally expensive, which poses challenges because it demands substantial computational resources. Using large feature maps enriches the expression information but significantly increases the number of tokens, and the computational complexity of attention grows quadratically with the number of tokens $N$, as $O(N^2)$. Tasks involving large feature maps, such as high-resolution FER, therefore encounter computational bottlenecks. To alleviate these challenges, we propose the Additively Comprised Class Attention Encoder as a substitute for the original ViT encoder, which reduces the complexity of the attention computation from $O(N^2)$ to $O(N)$. Additionally, we introduce a novel token-level Squeeze-and-Excitation method to help the model learn more efficient representations. Experimental evaluations on the RAF-DB and FERplus datasets show that our approach improves running speed by at least 27% (for 7 × 7 feature maps) while maintaining comparable accuracy, and that the gain grows with feature-map size (about 49% speedup for 14 × 14 feature maps, and triple the speed for 28 × 28 feature maps).
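To make the quadratic-versus-linear gap concrete, the following sketch counts attention scores for the three feature-map sizes mentioned above. It assumes one token per feature-map location and ignores the class token, attention heads, and projection layers. The linear case is modeled as a single query attending to all tokens, which is one common way to reach $O(N)$ cost; whether this matches the proposed encoder exactly is an assumption.

```python
def token_count(height: int, width: int) -> int:
    """Number of tokens, assuming one token per feature-map location."""
    return height * width


def full_attention_scores(n_tokens: int) -> int:
    """Pairwise scores in standard self-attention: every token attends to every token."""
    return n_tokens * n_tokens


def single_query_scores(n_tokens: int) -> int:
    """Scores when a single query (e.g. a class token) attends to all tokens.

    This is an illustrative model of linear-cost attention, not the paper's encoder.
    """
    return n_tokens


if __name__ == "__main__":
    for side in (7, 14, 28):
        n = token_count(side, side)
        quadratic = full_attention_scores(n)
        linear = single_query_scores(n)
        print(f"{side}x{side}: N={n}, full={quadratic}, single-query={linear}")
```

Doubling the feature-map side multiplies the token count by four and the number of pairwise scores by sixteen, which is consistent with the reported speedup growing as the feature maps get larger.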