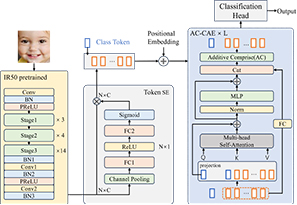

Facial Expression Recognition (FER) models based on the Vision Transformer (ViT) have demonstrated promising performance on diverse datasets. However, the computational cost of the transformer encoder poses a challenge, because running it requires substantial computational resources. Using large feature maps enriches expression information but significantly increases token length, and the computational complexity of self-attention grows quadratically with the number of tokens, i.e., as O(N²) for N tokens.
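To make the quadratic growth concrete, the sketch below counts the multiply-accumulate operations (MACs) in the two N × N products of a single self-attention head (QKᵀ and the attention-weighted sum over V) as the feature map grows. This is an illustrative cost model, not the authors' method. The helper names, the per-head dimension of 64, and the feature-map sizes are hypothetical, and the linear Q/K/V projections are ignored because they scale only linearly in N.

```python
def num_tokens(height: int, width: int, patch: int = 1) -> int:
    """Tokens obtained by splitting an H x W feature map into patch x patch cells."""
    return (height // patch) * (width // patch)


def attention_score_macs(n_tokens: int, dim: int) -> int:
    """MACs for Q @ K^T plus softmax(scores) @ V in one attention head.

    Both products involve an n_tokens x n_tokens matrix, so the cost is
    2 * N^2 * d, i.e. quadratic in the token count N.
    """
    return 2 * n_tokens * n_tokens * dim


if __name__ == "__main__":
    dim = 64  # hypothetical per-head embedding size
    for side in (7, 14, 28):  # hypothetical square feature-map side lengths
        n = num_tokens(side, side)
        print(f"{side}x{side} map -> {n} tokens, {attention_score_macs(n, dim):,} MACs")
```

Each doubling of the feature-map side length multiplies the token count by 4 and the attention cost by 16 (for example, 49 tokens cost 307,328 MACs, while 196 tokens cost 4,917,248), which is why larger feature maps quickly become expensive for a standard transformer encoder.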

Jiasen Wang, Yuanjing Hu, Aibin Huang, "Efficient Facial Expression Recognition Transformer with Additively Comprised Class Attention Encoder," Journal of Imaging Science and Technology, 2025, pp. 1–7, https://doi.org/10.2352/J.ImagingSci.Technol.2025.69.1.010410
