Accurate segmentation and recognition of retinal vessels is an important medical image analysis technique that enables clinicians to precisely locate and identify vessels and other tissues in fundus images. However, most existing U-net-based vessel segmentation models have two problems. First, retinal vessels have very low contrast with the image background, which causes much detailed information to be lost. Second, the complex curvature patterns of capillaries prevent models from accurately capturing the continuity and coherence of the vessels. To solve these two problems, we propose MATR-Net, a joint Transformer–Residual network based on a multiscale attention feature (MSAF) mechanism, to effectively segment retinal vessels. In MATR-Net, the convolutional layer in U-net is replaced with a Residual module and a dual encoder branch built with a Transformer to effectively capture both the local information and the global contextual information of retinal vessels. In addition, an MSAF module is proposed in the encoder. By combining features of different scales to recover detailed pixels lost in the pooling layers, the segmentation model improves its feature extraction ability for capillaries with complex curvature patterns and accurately captures the continuity of vessels. To validate the effectiveness of MATR-Net, this study conducts comprehensive experiments on the DRIVE and STARE datasets and compares it with state-of-the-art deep learning models. The results show that MATR-Net exhibits excellent segmentation performance with Dice similarity coefficient and Precision of 84.57%, 80.78%, 84.18%, and 80.99% on DRIVE and STARE, respectively.
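
For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how the Dice similarity coefficient and Precision are commonly computed for binary vessel masks. The function name `dice_and_precision` and the toy masks are illustrative assumptions and are not taken from the MATR-Net evaluation code.

```python
def dice_and_precision(pred, truth):
    """Compute Dice similarity coefficient and precision for binary masks.

    pred, truth: flat sequences of 0/1 labels of equal length (1 = vessel).
    Hypothetical helper for illustration only.
    """
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)  # true positives
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)  # false positives
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)  # false negatives
    # Dice = 2TP / (2TP + FP + FN); defined as 1.0 when both masks are empty.
    dice = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 1.0
    # Precision = TP / (TP + FP); defined as 0.0 when nothing is predicted.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return dice, precision


# Toy example: six pixels, flattened.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 1, 1, 0]
dice, precision = dice_and_precision(pred, truth)
print(f"Dice = {dice:.4f}, Precision = {precision:.4f}")
```

In practice the masks would be full-resolution fundus images, and the scores would typically be averaged over the test set.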

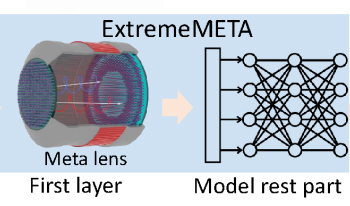

Deep neural networks (DNNs) have relied heavily on traditional computational units, such as CPUs and GPUs. However, this conventional approach brings a significant computational burden, latency issues, and high power consumption, limiting their effectiveness. This has sparked the need for lightweight networks such as ExtremeC3Net. Meanwhile, there have been notable advancements in optical computational units, particularly with metamaterials, offering the exciting prospect of energy-efficient neural networks operating at the speed of light. Yet, the digital design of metamaterial neural networks (MNNs) faces precision, noise, and bandwidth challenges, limiting their application to intuitive tasks and low-resolution images. In this study, we proposed a large kernel lightweight segmentation model, ExtremeMETA. Built on ExtremeC3Net, ExtremeMETA maximized the ability of the first convolution layer by exploring a larger convolution kernel and multiple processing paths. With the large kernel convolution model, we extended the application boundary of optical neural networks to the segmentation task. To further lighten the computational burden of the digital processing part, a set of model compression methods was applied to improve model efficiency in the inference stage. The experimental results on three publicly available datasets demonstrated that the optimized efficient design improved segmentation performance from 92.45 to 95.97 on mIoU while reducing computational cost from 461.07 MMacs to 166.03 MMacs. The large kernel lightweight model ExtremeMETA showcased the hybrid design's ability on complex tasks.
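
To make the MMacs figures easier to interpret, the sketch below counts multiply-accumulate operations (MACs) for a single 2D convolution layer and shows how the count grows with kernel size. The layer shapes are hypothetical and chosen only for illustration; they are not the ExtremeMETA or ExtremeC3Net configuration.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, kernel, groups=1):
    """Multiply-accumulate count for one 2D convolution layer (bias ignored).

    Each output element needs (c_in / groups) * kernel * kernel MACs,
    and there are h_out * w_out * c_out output elements.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return h_out * w_out * c_out * (c_in // groups) * kernel * kernel


# Hypothetical first layer: RGB input, 12 output channels, 112x112 output map.
small = conv2d_macs(112, 112, c_in=3, c_out=12, kernel=3)
large = conv2d_macs(112, 112, c_in=3, c_out=12, kernel=7)
print(f"3x3 kernel: {small / 1e6:.2f} MMACs")
print(f"7x7 kernel: {large / 1e6:.2f} MMACs")
```

Under these assumed shapes, moving from a 3x3 to a 7x7 kernel multiplies the layer's MAC count by 49/9, which illustrates why a large first-layer kernel is costly when executed digitally.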

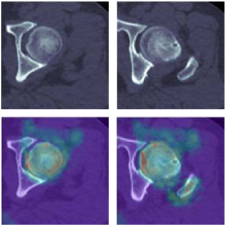

The segment anything model (SAM) was released as a foundation model for image segmentation. The promptable segmentation model was trained on over 1 billion masks from 11M licensed and privacy-respecting images. The model supports zero-shot image segmentation with various segmentation prompts (e.g., points, boxes, masks). This makes SAM attractive for medical image analysis, especially for digital pathology, where training data are scarce. In this study, we evaluate the zero-shot segmentation performance of SAM on representative segmentation tasks in whole slide imaging (WSI), including (1) tumor segmentation, (2) non-tumor tissue segmentation, and (3) cell nuclei segmentation. The results suggest that the zero-shot SAM model achieves remarkable segmentation performance for large connected objects. However, it does not consistently achieve satisfactory performance for dense instance object segmentation, even with 20 prompts (clicks/boxes) on each image. We also summarize the identified limitations for digital pathology: (1) image resolution, (2) multiple scales, (3) prompt selection, and (4) model fine-tuning. In the future, few-shot fine-tuning with images from downstream pathological segmentation tasks might help the model achieve better performance in dense object segmentation.
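
One plausible way to simulate point and box prompts in such an evaluation is to derive them from ground-truth masks. The sketch below does only that; it does not call SAM, and the helper names `box_prompt` and `point_prompts` as well as the sampling strategy are assumptions rather than the protocol used in the study.

```python
import random


def foreground_coords(mask):
    """List (x, y) coordinates of all non-zero pixels in a 2D mask."""
    return [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]


def box_prompt(mask):
    """Tight bounding box (x_min, y_min, x_max, y_max) around the foreground."""
    coords = foreground_coords(mask)
    if not coords:
        return None
    xs, ys = zip(*coords)
    return min(xs), min(ys), max(xs), max(ys)


def point_prompts(mask, n_points, seed=0):
    """Sample up to n_points distinct foreground clicks (x, y)."""
    coords = foreground_coords(mask)
    rng = random.Random(seed)  # fixed seed keeps the simulated clicks reproducible
    return rng.sample(coords, min(n_points, len(coords)))


# Toy ground-truth mask for one object.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
box = box_prompt(mask)
points = point_prompts(mask, n_points=3)
print("box prompt:", box)
print("point prompts:", points)
```

The resulting coordinates would then be passed to whatever prompt interface the segmentation model exposes.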

Stereo matching algorithms estimate dense depth for a scene by finding corresponding points in stereo images of that scene. Several factors, such as occlusion, noise, and illumination inconsistencies in the scene, affect the disparity estimates and make this process challenging. Algorithms developed to overcome these challenges can be broadly categorized as learning-based and non-learning-based disparity estimation algorithms. Learning-based approaches are more accurate but computationally expensive. In contrast, non-learning-based algorithms are widely used and computationally efficient. In this paper, we propose a new stereo matching algorithm using guided image filtering (GIF)-based cost aggregation. The main contribution of our approach is a cost calculation framework that is a hybrid of cross-correlation between stereo-image pairs and scene segmentation (HCS). The performance of our HCS technique was compared with state-of-the-art techniques using version 3 of the Middlebury benchmark dataset. Our results confirm the effective performance of the HCS technique.
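
To illustrate the cross-correlation part of the matching cost, here is a minimal sketch of normalized cross-correlation (NCC) matching on a single rectified scanline with winner-take-all disparity selection. It deliberately omits the segmentation term and the GIF-based cost aggregation, so it is not the HCS algorithm; the function names, window size, and toy data are assumptions.

```python
import math


def ncc(a, b):
    """Normalized cross-correlation between two equal-length patches."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    return num / den if den else 0.0  # flat patches carry no correlation signal


def scanline_disparity(left, right, max_disp, half_win=2):
    """Winner-take-all disparity for one rectified scanline.

    For each left pixel x, the candidate match in the right image is x - d.
    Border pixels without a full window keep disparity 0.
    """
    n = len(left)
    disp = [0] * n
    for x in range(half_win, n - half_win):
        patch_l = left[x - half_win : x + half_win + 1]
        best_score, best_d = -2.0, 0  # NCC lies in [-1, 1]
        for d in range(max_disp + 1):
            xr = x - d
            if xr - half_win < 0:
                break  # window would leave the image
            patch_r = right[xr - half_win : xr + half_win + 1]
            score = ncc(patch_l, patch_r)
            if score > best_score:
                best_score, best_d = score, d
        disp[x] = best_d
    return disp


# Toy scanlines: the left view is the right view shifted by 3 pixels.
right = [10, 13, 12, 16, 15, 20, 11, 13, 7, 12, 9, 14, 6, 15, 8, 17, 14, 16, 13, 21]
left = right[:1] * 3 + right[:-3]
disp = scanline_disparity(left, right, max_disp=4)
print(disp)
```

In a full pipeline, the per-pixel scores for every candidate disparity would be stored as a cost volume and smoothed by an aggregation step before the winner-take-all selection.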

Segmentation is usually performed in the spatial domain and is often hindered by similar intensities, intensity inhomogeneity, and the partial volume effect. In this article, a visual-selection method is proposed to carry out segmentation in the intensity space so that these difficulties are alleviated and better results can be produced. The proposed procedure utilizes volume rendering to explore the input data and builds a transfer function that encodes the intensity distribution of the target. Then, by using this transfer function and image processing techniques, a region of interest (ROI) is constructed in the intensity field. In the following stage, a texture-based region growing computation is conducted to extract the target from the ROI. Experiments show that the proposed method produces high-quality results for a phantom composed of plates with similar intensities and textures. It also outperforms a traditional segmentation system in separating organs and tissues from a torso CT scan data set.
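
The region growing stage can be sketched as follows. This simplified version grows a 4-connected region in 2D using a plain intensity range as the membership test, standing in for both the transfer-function ROI and the texture-based criterion described above; the function name, thresholds, and toy image are assumptions.

```python
from collections import deque


def region_grow(image, seed, low, high):
    """Grow a 4-connected region from seed over pixels with low <= value <= high.

    image: 2D list of intensities; seed: (row, col).
    Returns the set of (row, col) pixels in the grown region.
    """
    rows, cols = len(image), len(image[0])
    sy, sx = seed
    if not (low <= image[sy][sx] <= high):
        return set()  # the seed itself is outside the accepted intensity range
    region = {seed}
    queue = deque([seed])
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            inside = 0 <= ny < rows and 0 <= nx < cols
            if inside and (ny, nx) not in region and low <= image[ny][nx] <= high:
                region.add((ny, nx))
                queue.append((ny, nx))
    return region


# Toy image: a dark block at the top left, separated from the dark
# right-hand column by a bright band.
image = [
    [10, 12, 50, 11],
    [11, 13, 52, 12],
    [50, 51, 53, 10],
]
region = region_grow(image, seed=(0, 0), low=8, high=15)
print(sorted(region))
```

The right-hand column has intensities inside the accepted range but is not reached, because growth only proceeds through connected pixels. This is the property that helps separate targets with similar intensities.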

This paper presents a new method for segmenting medical images based on Hamiltonian quaternions and associative algebra, the active contour model, and the LPA-ICI (local polynomial approximation with the intersection of confidence intervals) anisotropic gradient. Because images are usually converted to grayscale for segmentation tasks, important information about color, saturation, and other color-related attributes is lost. To solve this problem, we use the quaternion framework to represent a color image so that all three channels are considered simultaneously when segmenting the RGB image. For noise reduction, we use adaptive filtering based on local polynomial estimates with the ICI rule. The new approach yields clearer and more detailed boundaries of objects of interest. Experiments performed on real medical images (Z-line detection) show that our segmentation method is more efficient than current state-of-the-art methods.
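
As a brief illustration of the quaternion representation, the sketch below encodes an RGB pixel as a pure quaternion (zero real part, with R, G, and B as the three imaginary components) and implements the Hamilton product. This is the standard construction for quaternion color images; the helper names are assumptions, and the sketch does not reproduce the active contour or LPA-ICI steps.

```python
def rgb_to_quaternion(r, g, b):
    """Encode an RGB pixel as a pure quaternion (w, x, y, z) = (0, r, g, b)."""
    return (0.0, float(r), float(g), float(b))


def hamilton(p, q):
    """Hamilton product of two quaternions given as (w, x, y, z) tuples."""
    a1, b1, c1, d1 = p
    a2, b2, c2, d2 = q
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


# For two pure quaternions, the product's real part is minus the dot product
# of the color vectors and its imaginary part is their cross product.
p1 = rgb_to_quaternion(1, 2, 3)
p2 = rgb_to_quaternion(4, 5, 6)
product = hamilton(p1, p2)
print(product)
```

Because a single product carries both the dot and the cross product of two color vectors, the three channels are handled jointly rather than one at a time.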

In this work, we explore the ability to estimate vehicle fuel consumption using imagery from overhead fisheye lens cameras deployed as traffic sensors. We utilize this information to simulate vision-based control of a traffic intersection, with the goal of improving fuel economy with minimal impact on mobility. We introduce the ORNL Overhead Vehicle Data set (OOVD), which consists of paired, labeled vehicle images from a ground-based camera and an overhead fisheye lens traffic camera. The data set includes segmentation masks based on Gaussian mixture models for vehicle detection. We show the utility of the data set through three applications: estimation of fuel consumption based on segmentation bounding boxes, vehicle discrimination for vehicles with large bounding boxes, and fine-grained classification on a limited number of vehicle makes and models using a pre-trained set of convolutional neural network models. We compare these results with estimates based on a large open-source data set of web-scraped imagery. Finally, we show the utility of the approach using reinforcement learning in a traffic simulator built on the open-source Simulation of Urban Mobility (SUMO) package. Our results demonstrate the feasibility of the approach for controlling traffic lights for better fuel efficiency based solely on visual vehicle estimates from commercial fisheye lens cameras.

The possible achievements of accurate and intuitive 3D image segmentation are endless. In our research, we aim to give doctors around the world, regardless of their computer knowledge, a virtual reality (VR) 3D image segmentation tool that allows medical professionals to better visualize their patients' data sets and thus better understand their conditions. We implemented an intuitive virtual reality interface that can accurately display MRI and CT scans and quickly and precisely segment 3D images, offering two different segmentation algorithms. Simply put, our application must fit into the workday of even the busiest and most practiced physicians while providing them with a new tool, the likes of which they have never seen before.