The usability and accessibility of digitised archival data can be improved using deep learning solutions. In this paper, the authors present their work on developing a named entity recognition (NER) model for digitised archival data, specifically state authority documents. The entities for the model were chosen based on a survey of different user groups. In addition to common entities, two new entities were created to identify businesses (FIBC) and archival documents (JON). The NER model was trained by fine-tuning an existing Finnish BERT model. The training data also included modern born-digital texts so that the model performs well on various types of input. The finished model performs fairly well on OCR-processed data, achieving an overall F1 score of 0.868, and particularly well on the new entities (F1 scores of 0.89 for JON and 0.97 for FIBC).
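
The reported F1 scores combine precision and recall over recognized entities. The abstract does not state how matches are counted, so the minimal sketch below assumes exact-match scoring: a predicted entity counts as correct only if both its label and its token span equal a gold entity. The tuple layout, the helper names `entity_f1` and `per_label_f1`, and the example annotations (including the `PER` label) are hypothetical; only the `JON` and `FIBC` labels come from the text.

```python
from collections import Counter

# Hypothetical representation: (label, start_token, end_token), end exclusive.
Entity = tuple[str, int, int]


def entity_f1(gold: list[Entity], pred: list[Entity]) -> dict[str, float]:
    """Micro-averaged precision, recall and F1 under exact span-and-label matching."""
    # Multiset intersection: each gold entity can be matched at most once.
    tp = sum((Counter(gold) & Counter(pred)).values())
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def per_label_f1(gold: list[Entity], pred: list[Entity]) -> dict[str, float]:
    """F1 computed separately for each entity label (e.g. JON, FIBC)."""
    labels = sorted({e[0] for e in gold} | {e[0] for e in pred})
    return {
        label: entity_f1(
            [e for e in gold if e[0] == label],
            [e for e in pred if e[0] == label],
        )["f1"]
        for label in labels
    }


# Hypothetical example: the FIBC span boundary is wrong, so it counts as a miss.
gold = [("JON", 0, 2), ("FIBC", 5, 6), ("PER", 8, 9)]
pred = [("JON", 0, 2), ("FIBC", 5, 7), ("PER", 8, 9)]
print(entity_f1(gold, pred))
print(per_label_f1(gold, pred))  # {'FIBC': 0.0, 'JON': 1.0, 'PER': 1.0}
```

Under exact matching, a boundary error costs both a false positive and a false negative, which is one reason span-level scores on noisy OCR input can trail token-level scores.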

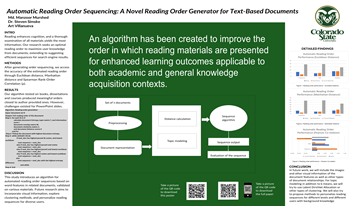

For any given topic there are many salient electronic documents to read; however, finding a suitable reading order for pedagogical purposes has historically been underserved by the text analytics community. Reading the relevant documents in a logical order is necessary to understand the topics clearly. In this research, we propose an automatic reading order generation technique that quantitatively suggests a suitable, optimal reading order for curriculum generation. Because many learning pedagogies have been advanced, we use the author-supplied reading orders of salient content sets as ground truth. Our method suggests the best reading order automatically by examining the relevant topics, document distances, and semantic structure of the given documents. The system generates a suitable and efficient reading sequence by analyzing the information content, similarity, content overlap, and distances of the documents using word frequencies and topic sets. We measure the similarity, relevance, distance, and overlap of documents using cosine similarity, entropy relevance, Euclidean distance, and Jaccard similarity, respectively. We propose an algorithm that generates the best possible reading order for a given set of documents. We evaluated the performance of our system against the ground-truth reading orders of different kinds of textbooks and generalized the findings to arbitrary sets of documents.
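
Three of the four measures named above can be computed directly from word-frequency vectors. The sketch below is a minimal illustration under stated assumptions: lowercase whitespace tokenization, raw term counts rather than topic sets, and a hypothetical greedy heuristic (`greedy_reading_order`) that always moves to the unread document most similar to the last one read. It is not the authors' algorithm, and it omits the entropy-based relevance measure, whose exact definition the text does not give.

```python
import math
from collections import Counter


def tf_vector(text: str) -> Counter:
    """Word-frequency vector using lowercase whitespace tokenization (an assumption)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def euclidean_distance(a: Counter, b: Counter) -> float:
    # Counter returns 0 for missing words, so absent terms contribute their full count.
    return math.sqrt(sum((a[w] - b[w]) ** 2 for w in a.keys() | b.keys()))


def jaccard_similarity(a: Counter, b: Counter) -> float:
    # Vocabulary overlap: |A ∩ B| / |A ∪ B|.
    union = a.keys() | b.keys()
    return len(a.keys() & b.keys()) / len(union) if union else 0.0


def greedy_reading_order(docs: list[str], start: int = 0) -> list[int]:
    """Hypothetical heuristic: next read the unread document most cosine-similar to the last one."""
    vecs = [tf_vector(d) for d in docs]
    order = [start]
    remaining = set(range(len(docs))) - {start}
    while remaining:
        last = vecs[order[-1]]
        # sorted() makes tie-breaking deterministic (lowest index wins).
        nxt = max(sorted(remaining), key=lambda i: cosine_similarity(last, vecs[i]))
        order.append(nxt)
        remaining.remove(nxt)
    return order


# Hypothetical toy "documents", deliberately given out of order.
docs = [
    "vectors and matrices",
    "linear maps and eigenvalues",
    "matrices and linear maps",
    "eigenvalues and diagonalisation",
]
print(greedy_reading_order(docs))  # [0, 2, 1, 3]
```

A greedy chain like this only guarantees local coherence between consecutive documents; any method that targets a globally best order has to score whole sequences instead.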

Captchas are used on many websites to prevent automated web requests. Likewise, darknet marketplaces commonly use captchas to protect themselves against DDoS attacks and automated web scrapers. This complicates research and investigations into the content and activity of darknet marketplaces. In this work we focus on the darknet and provide an overview of the variety of captchas found in darknet marketplaces. We propose a workflow and recommendations for building automated captcha solvers and present solvers based on machine learning models for five different captcha types that we found. Our solvers achieve accuracies between 65% and 99%, which significantly improved our ability to collect data from the corresponding marketplaces with automated web scrapers.

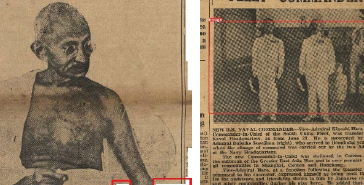

This paper presents methodologies for extracting headlines and illustrations from a historical newspaper for storytelling in support of digital scholarship. It explores how new digital tools can facilitate the understanding of newspaper content in its temporal and spatial setting. "The Hongkong News" was selected from Hong Kong Early Tabloid Newspaper for the case study owing to its unique historical value for scholars. The proposed methodologies, namely OCR (Optical Character Recognition) combined with scraping, and deep learning object detection models, were evaluated. Two visualization products were developed to demonstrate the feasibility of the proposed methods for storytelling.
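
The text does not specify how the object detection models were scored. A common criterion, assumed here, is intersection over union (IoU): a predicted headline or illustration box counts as correct if its IoU with a ground-truth box reaches a threshold such as 0.5. The `(x1, y1, x2, y2)` box format, the greedy matching in the hypothetical `match_detections` helper, and the example boxes are illustrative assumptions.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds: list[Box], gts: list[Box], threshold: float = 0.5) -> tuple[float, float]:
    """Greedy one-to-one matching; returns (precision, recall).

    Predictions are assumed to be sorted by descending confidence.
    """
    unmatched = list(range(len(gts)))
    tp = 0
    for p in preds:
        if not unmatched:
            break
        # Best remaining ground-truth box for this prediction.
        score, best = max((iou(p, gts[g]), g) for g in unmatched)
        if score >= threshold:
            tp += 1
            unmatched.remove(best)
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


# Hypothetical page: one headline box is found, one illustration is missed.
gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 10, 10), (50, 50, 60, 60)]
print(iou(preds[0], gts[0]))  # 0.81
print(match_detections(preds, gts))  # (0.5, 0.5)
```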

With initiatives such as Collections as Data and Computational Archival Science, archives are no longer seen as static documentation of objects but as evolving sources of cultural and historical data. This work highlights potential updates to how digital audiovisual (AV) content is preserved and documented from a data perspective, in light of recent developments in natural language processing and computer vision, as well as the emergence of interactive and embodied experiences and interfaces for accessing archival content in innovative ways. As part of a Sinergia project funded by the Swiss National Science Foundation, this work engaged end-to-end with real-world AV archives such as Télévision Suisse Romande (RTS). Using an updated narrative model to map the data that can be obtained from both the content and its consumers, this work proposes an experimental ontology that formally summarizes a potential new paradigm for preservation and accessibility from a data perspective for modern archives, in the hope of nurturing a digital, data-driven mindset in archival practice.

Advancements in sensing, computing, image processing, and computer vision technologies are enabling unprecedented growth and interest in autonomous vehicles and intelligent machines, from self-driving cars and unmanned drones to personal service robots. These new capabilities have the potential to fundamentally change the way people live, work, commute, and connect with each other, and will undoubtedly provoke entirely new applications and commercial opportunities for generations to come. The main focus of AVM is perception, which begins with sensing. While imaging continues to be an essential emphasis in all EI conferences, AVM also embraces other sensing modalities important to autonomous navigation, including radar, LiDAR, and time-of-flight. Realization of autonomous systems also includes purpose-built processors, e.g., ISPs, vision processors, and DNN accelerators, as well as core image processing and computer vision algorithms, system design and architecture, simulation, and image/video quality. AVM topics are at the intersection of these multi-disciplinary areas. AVM is the Perception Conference that bridges the imaging and vision communities, connecting the dots for the entire software and hardware stack for perception, helping people design globally optimized algorithms, processors, and systems for intelligent “eyes” for vehicles and machines.

Training a learning-based prediction model typically requires a large dataset. One of the major restrictions of machine learning applications that use customized databases is the cost of the human labor needed for labeling. Previous papers [3, 4, 5] demonstrated experimentally that thin-film nitrate sensor performance correlates with surface texture. In those papers, several methods for extracting texture features from sensor images were explored, repeated cross-validation and automatic hyperparameter tuning were performed, and several machine learning models were built to improve prediction accuracy. This paper presents a new approach, based on an active learning structure, that achieves the same accuracy with a much smaller labeled dataset.

The spectral sensitivity functions of a digital image sensor determine the sensor’s color response to scene-radiated light. Knowing these functions is very important for applications that require accurate color, such as computer vision. Traditional measurements of these functions are time-consuming and require expensive lab equipment to generate narrow-band monochromatic light. Previous works have shown that sensitivity curves can be estimated from images of a color checker chart with known spectral reflectances, using either numerical optimization or machine learning. However, previous works in the literature have not considered sensitivity functions for CFAs (color filter arrays) other than RGB, such as RCCB (Red-Clear-Clear-Blue) or RYYCy (Red-Yellow-Yellow-Cyan). Non-RGB CFAs have been shown to be useful for automotive and security camera applications, especially in low-light conditions. We propose a machine learning method that estimates the sensitivity curves of sensors with non-RGB filters, in addition to the RGB filters addressed previously in the literature, from a single image of a color chart under unknown illumination. Including non-RGB filters makes the estimation problem much more challenging, since the resulting space of color filters can no longer be modelled by simple Gaussian shapes.
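
Estimation methods of this kind build on the standard linear image formation model, in which each channel response is a wavelength-sampled product of illuminant E, surface reflectance R, and channel sensitivity S_k: ρ_k = Σ_λ E(λ) R(λ) S_k(λ) Δλ. The sketch below evaluates this forward model for an RCCB-like filter set. It is not the proposed machine learning method; the four-sample wavelength grid, the flat illuminant, and all spectral values are hypothetical toy numbers, not measured data.

```python
# Hypothetical coarse sampling grid (nm); real curves are usually sampled every 5-10 nm.
WAVELENGTHS = [400, 500, 600, 700]
STEP_NM = 100.0


def design_row(illuminant: list[float], reflectance: list[float]) -> list[float]:
    """Per-wavelength weights E(λ) R(λ) Δλ: one row of the linear system for one chart patch."""
    if not (len(illuminant) == len(reflectance) == len(WAVELENGTHS)):
        raise ValueError("spectra must be sampled on the WAVELENGTHS grid")
    return [e * r * STEP_NM for e, r in zip(illuminant, reflectance)]


def camera_response(illuminant, reflectance, sensitivities) -> dict[str, float]:
    """Forward model: rho_k = sum over λ of E(λ) R(λ) S_k(λ) Δλ for every channel k."""
    row = design_row(illuminant, reflectance)
    return {name: sum(w * s for w, s in zip(row, curve)) for name, curve in sensitivities.items()}


# Toy spectra (hypothetical values): equal-energy light, 50% grey patch,
# and an RCCB-like filter set where the two clear pixels share one curve.
illuminant = [1.0, 1.0, 1.0, 1.0]
grey = [0.5, 0.5, 0.5, 0.5]
sensitivities = {
    "R": [0.0, 0.1, 0.6, 0.8],
    "C": [0.7, 0.9, 0.9, 0.8],
    "B": [0.8, 0.3, 0.0, 0.0],
}
print(camera_response(illuminant, grey, sensitivities))
```

Because ρ_k is linear in the samples of S_k, each chart patch with known reflectance and known illumination contributes one linear equation per channel; on the four-sample grid above, at least four linearly independent patches would be needed to solve for each curve. Unknown illumination adds E as a further unknown, which is part of what makes the single-image setting harder.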

Deep learning, which has been very successful in recent years, requires a large amount of data. Active learning has been widely studied and used for decades to reduce annotation costs and now attracts considerable attention in deep learning. Many real-world deep learning applications use active learning to select informative data for annotation. In this paper, we first investigate laboratory settings for active learning. We show significant gaps between the results obtained under different laboratory settings and describe our practical laboratory setting, which reasonably reflects active learning use cases in real-world applications. We then introduce the problem setting of blind imbalanced domains. Datasets generally include multiple domains, e.g., individual writers with different social attributes in handwritten character recognition. Major domains have many samples in the training set, and minor domains have few. However, we must infer accurately on both major and minor domains in the test phase. We experimentally compare different active learning methods for blind imbalanced domains in our practical laboratory setting. We show that a simple active learning method using the softmax margin and a model training method using distance-based sampling with center loss, both operating in the deep feature space, perform well.
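
Softmax-margin sampling, the selection criterion named above, ranks unlabeled samples by the gap between the model's two most probable classes and sends the samples with the smallest gaps for annotation. The minimal sketch below operates directly on per-sample logits; how those logits are produced, and the deep-feature-space and center loss details mentioned above, are outside its scope. The function names and example logits are hypothetical.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    # Subtract the maximum logit for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def softmax_margin(logits: list[float]) -> float:
    """Gap between the two largest class probabilities (needs >= 2 classes); small means uncertain."""
    top1, top2 = sorted(softmax(logits), reverse=True)[:2]
    return top1 - top2


def select_by_margin(pool_logits: list[list[float]], k: int) -> list[int]:
    """Indices of the k unlabeled samples with the smallest softmax margin."""
    ranked = sorted(range(len(pool_logits)), key=lambda i: softmax_margin(pool_logits[i]))
    return ranked[:k]


# Hypothetical logits for four unlabeled samples over three classes.
pool_logits = [
    [5.0, 0.0, 0.0],  # confident
    [1.0, 0.9, 0.0],  # nearly tied between classes 0 and 1
    [2.0, 0.0, 1.9],  # nearly tied between classes 0 and 2
    [0.0, 4.0, 0.0],  # confident
]
print(select_by_margin(pool_logits, k=2))  # [1, 2]
```

In a full loop, the selected samples would be labeled, added to the training set, and the model retrained before the next round of selection.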

Recent progress at the intersection of deep learning and imaging has created a new wave of interest in imaging and multimedia analytics topics, from social media sharing to augmented reality, from food and nutrition to health surveillance, from remote sensing and agriculture to wildlife and environment monitoring. Compared to many subjects in traditional imaging, these topics are more multi-disciplinary in nature. This conference will provide a forum for researchers and engineers from various related areas, both academic and industrial, to exchange ideas and share research results in this rapidly evolving field.