Feature-Product networks (FP-nets) are a novel deep-network architecture inspired by principles of biological vision. These networks contain so-called FP-blocks, which learn two different filters for each input feature map and multiply the outputs of the two filters. This design is inspired by models of end-stopped neurons, which are common in cortical area V1 and especially in V2. The authors apply FP-nets to blind image quality assessment (IQA) on three IQA benchmarks. They show that FP-nets deliver state-of-the-art performance while being significantly more compact than competing models. A simple attention mechanism yields a further improvement. The good results they report may be related to the bio-inspired design principles they employ.
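
The core operation of an FP-block, two filters per feature map whose outputs are multiplied, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `correlate2d_valid` and `feature_product` are hypothetical, the filters are passed in rather than learned, and any other layers an actual FP-block may contain are omitted.

```python
import numpy as np

def correlate2d_valid(x, k):
    """Plain 'valid' 2-D cross-correlation of one feature map with one kernel."""
    kh, kw = k.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * x[i:i + out_h, j:j + out_w]
    return out

def feature_product(maps, filters_a, filters_b):
    """Multiply the responses of two filters for each input feature map.

    maps:      array of shape (C, H, W)
    filters_a: array of shape (C, kh, kw), first filter per map
    filters_b: array of shape (C, kh, kw), second filter per map
    (In an FP-net both filter sets would be learned; here they are given.)
    """
    return np.stack([
        correlate2d_valid(m, fa) * correlate2d_valid(m, fb)
        for m, fa, fb in zip(maps, filters_a, filters_b)
    ])

# Illustration with hand-picked (not learned) derivative filters.
dx = np.array([[-1.0, 0.0, 1.0]] * 3)   # horizontal derivative
dy = dx.T                                # vertical derivative

edge = np.zeros((6, 6)); edge[:, 3:] = 1.0       # straight vertical edge
corner = np.zeros((6, 6)); corner[3:, 3:] = 1.0  # corner

edge_out = feature_product(edge[None], dx[None], dy[None])
corner_out = feature_product(corner[None], dx[None], dy[None])
print(np.abs(edge_out).max(), np.abs(corner_out).max())
```

With these two derivative filters the product vanishes along the straight edge and is non-zero only near the corner, which is the kind of selectivity for two-dimensional image features that models of end-stopped neurons describe.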

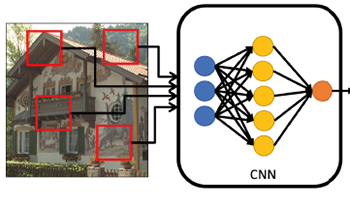

The ultimate goal of any proposed image quality metric (IQM) is to accurately predict the subjective quality scores given by observers. Most IQMs calculate the quality score by pooling the values of what is referred to as the quality map of an image. While different pooling methods have been proposed, most of them use some type of weighted average over the quality map to calculate the image quality score. One such approach uses saliency maps as the weighting factor in the pooling process, which gives a higher weight to the salient regions of the image. In this work we study whether we can evaluate the quality of an image by calculating only the quality of its most salient region. Such an approach could reduce the computational time and power needed for image quality assessment. Results show that in most cases, depending on the saliency calculation method used, we can improve the accuracy of IQMs by simply calculating the quality of a region of the image that covers as little as 20% of the salient energy.
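
The two pooling strategies contrasted above can be sketched as follows. This is a minimal illustration under stated assumptions, not the method evaluated in the work: "salient energy" is taken to mean the sum of the saliency values, the salient region is taken to be the set of highest-saliency pixels (not necessarily a contiguous window), and the quality inside the region is pooled with a plain mean. All function names are hypothetical.

```python
import numpy as np

def saliency_weighted_score(quality_map, saliency_map):
    """Saliency-weighted average of the quality map (conventional pooling)."""
    weights = saliency_map / saliency_map.sum()
    return float((weights * quality_map).sum())

def top_energy_mask(saliency_map, fraction=0.2):
    """Boolean mask of the most salient pixels whose saliency values sum to
    at least `fraction` of the total (assumes non-negative saliency)."""
    flat = saliency_map.ravel()
    order = np.argsort(flat)[::-1]              # most salient first
    cumulative = np.cumsum(flat[order])
    # Smallest number of pixels whose cumulative saliency reaches the target.
    n_keep = int(np.searchsorted(cumulative, fraction * flat.sum())) + 1
    mask = np.zeros(flat.shape, dtype=bool)
    mask[order[:n_keep]] = True
    return mask.reshape(saliency_map.shape)

def salient_region_score(quality_map, saliency_map, fraction=0.2):
    """Mean quality over the most salient region only."""
    mask = top_energy_mask(saliency_map, fraction)
    return float(quality_map[mask].mean())

# Toy 2x2 example with made-up values.
quality = np.array([[0.1, 0.2], [0.3, 0.9]])
saliency = np.array([[1.0, 1.0], [2.0, 6.0]])
print(saliency_weighted_score(quality, saliency))
print(salient_region_score(quality, saliency, fraction=0.2))
```

In a real pipeline the computational saving would come from evaluating the IQM only inside the masked region; in this sketch the full quality map is assumed to be available already, so only the pooling step differs.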