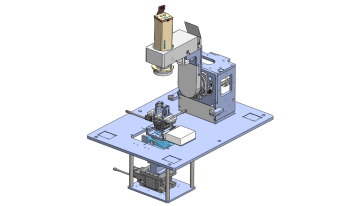

Vehicle-borne cameras vary greatly in imaging properties, e.g., angle of view, working distance and pixel count, to meet the diverse requirements of various applications. In addition, auto parts must tolerate dramatic variations in ambient temperature. Together, these factors pose considerable challenges to the automotive industry when it comes to evaluating the imaging performance of automotive cameras. In this paper, we describe an integrated, fully automated system developed specifically to address these issues. Its key components are a collimator unit incorporating an LED light source and a transmissive test target; a mechanical structure that holds and moves the collimator and the camera under test; and a software suite that communicates with the controllers and processes the images captured by the camera. With this multifunctional system, the imaging performance of cameras can be conveniently measured with a high degree of accuracy, precision and compatibility. The results are consistent with those obtained from tests conducted with conventional methods. Preliminary results demonstrate the functionality and flexibility of the system and indicate its potential for further development.

We live in a visual world. The perceived quality of images is of crucial importance in industrial, medical, and entertainment application environments. Developments in camera sensors, image processing, 3D imaging, display technology, and digital printing are enabling new or enhanced possibilities for creating and conveying visual content that informs or entertains. Wireless networks and mobile devices expand the ways to share imagery, and autonomous vehicles bring image processing into new areas of society. The power of imaging rests directly on the visual quality of the images and the performance of the systems that produce them. As the images are generally intended to be viewed by humans, a deep understanding of human visual perception is key to the effective assessment of image quality.

Most cameras use a single-sensor arrangement with a Color Filter Array (CFA). The color interpolation performed during image demosaicing is usually the source of visual artifacts in a captured image. While the severity of these artifacts depends on the demosaicing method used, they are mainly zipper artifacts (abrupt on-off intensity patterns along edges) and false-color distortions. In this study, to evaluate the performance of demosaicing methods, a subjective pair-comparison experiment with 15 observers was conducted on six methods (Nearest Neighbours, Bilinear interpolation, Laplacian, Adaptive Laplacian, Smooth hue transition, and Gradient-Based image interpolation) and nine scenes. The subjective scores and scene images were then collected into a dataset and used to evaluate a set of no-reference image quality metrics. Assessing these metrics in terms of their correlation with the subjective scores shows that many of the evaluated no-reference metrics cannot predict perceived image quality.
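As a concrete reference point for one of the six methods compared above, bilinear interpolation, the following is a minimal NumPy sketch. It assumes an RGGB Bayer layout and a single-channel mosaic with values in [0, 1]; the function names are illustrative and this is not the implementation used in the study.

```python
import numpy as np


def correlate3x3(img, kernel):
    """Correlate a 2-D array with a 3x3 kernel using reflect padding.

    Reflect padding (mirroring without repeating the edge sample) preserves
    the parity of row/column indices, so the Bayer pattern stays aligned
    at the image borders.
    """
    padded = np.pad(img, 1, mode="reflect")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def bayer_masks(h, w):
    """Boolean sampling masks for an RGGB Bayer layout (assumed)."""
    rows, cols = np.mgrid[0:h, 0:w]
    r = (rows % 2 == 0) & (cols % 2 == 0)
    b = (rows % 2 == 1) & (cols % 2 == 1)
    g = ~(r | b)
    return r, g, b


def demosaic_bilinear(cfa):
    """Bilinear demosaicing of a single-channel RGGB mosaic -> (H, W, 3)."""
    cfa = np.asarray(cfa, dtype=float)
    r_mask, g_mask, b_mask = bayer_masks(*cfa.shape)
    # Green: average of the 4 direct neighbours where green is missing.
    k_g = np.array([[0, 1, 0], [1, 4, 1], [0, 1, 0]]) / 4.0
    # Red/blue: average of the 2 (edge) or 4 (diagonal) nearest samples.
    k_rb = np.array([[1, 2, 1], [2, 4, 2], [1, 2, 1]]) / 4.0
    r = correlate3x3(cfa * r_mask, k_rb)
    g = correlate3x3(cfa * g_mask, k_g)
    b = correlate3x3(cfa * b_mask, k_rb)
    return np.stack([r, g, b], axis=-1)
```

At sampled positions each channel keeps its original value; the missing values are plain local averages, which is why bilinear interpolation tends to produce zipper and false-color artifacts where the averaging window straddles an edge.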

Structure-aware halftoning algorithms aim to improve on their non-structure-aware counterparts by preserving high-frequency details, structures, and tones, using additional information from the input image content. The recently proposed achromatic structure-aware Iterative Method Controlling the Dot Placement (IMCDP) halftoning algorithm uses the angle of the dominant line in each pixel’s neighborhood as supplementary information to align halftone structures with the dominant orientation in each region. This produces sharper halftones, gives a more three-dimensional impression, and improves structural similarity and tone preservation. However, the method was developed only for monochrome halftoning, applies a constant degree of sharpness enhancement to the entire image, and is prohibitively expensive for large images. In this paper, we present a faster and more flexible approach to representing image structure using a Gabor-based orientation extraction technique, which speeds up the structure-aware IMCDP by an order of magnitude while also improving visual quality. In addition, we extend the method to color halftoning and study how orientation information in different color channels affects sharpness enhancement, structural similarity, and color reproduction error. Furthermore, we propose a dynamic sharpness enhancement approach, which adaptively varies the local sharpness of the halftone image according to the different textures across the image. Together, these contributions enable the algorithm to work adaptively on large images with multiple regions and different textures.
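The general idea of Gabor-based orientation extraction can be sketched as follows: filter the image with a small bank of complex Gabor kernels at evenly spaced orientations and, at each pixel, keep the orientation with the highest energy. This is a generic illustration, not the authors' implementation; the wavelength, sigma, and number of orientations are assumed values, and how the resulting angle map feeds into IMCDP is not shown.

```python
import numpy as np


def gabor_kernel(theta, wavelength=8.0, sigma=4.0):
    """Complex, zero-mean Gabor kernel tuned to lines running at angle theta.

    theta is in radians, measured from the image x-axis (y pointing down).
    The carrier oscillates across the line direction.
    """
    half = int(np.ceil(3 * sigma))
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    along = x * np.cos(theta) + y * np.sin(theta)     # along the line
    across = -x * np.sin(theta) + y * np.cos(theta)   # across the line
    envelope = np.exp(-(along**2 + across**2) / (2 * sigma**2))
    kernel = envelope * np.exp(2j * np.pi * across / wavelength)
    # Remove the DC response so flat regions give (near) zero energy.
    kernel -= envelope * kernel.sum() / envelope.sum()
    return kernel


def correlate(img, kernel):
    """Direct 2-D correlation with reflect padding (fine for small images)."""
    half = kernel.shape[0] // 2
    padded = np.pad(img, half, mode="reflect")
    h, w = img.shape
    out = np.zeros((h, w), dtype=complex)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def dominant_orientation(img, n_orientations=8, wavelength=8.0, sigma=4.0):
    """Per-pixel dominant line angle in [0, pi) and its Gabor energy."""
    img = np.asarray(img, dtype=float)
    thetas = np.arange(n_orientations) * np.pi / n_orientations
    energy = np.stack([
        np.abs(correlate(img, gabor_kernel(t, wavelength, sigma))) ** 2
        for t in thetas
    ])
    return thetas[np.argmax(energy, axis=0)], energy.max(axis=0)
```

Using the magnitude of a complex (quadrature) response makes the energy independent of the local phase of a line, so the chosen angle does not flicker between the centre and the flanks of a stroke. Direct correlation is used only to avoid extra dependencies; an FFT-based convolution would be the usual choice for large images.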


In this paper, we study individual quality scores given by different observers for various image distortions (saturation, contrast, and color quantization) at different levels. We created a database that contains a total of 232 images, derived from 21 pristine images, three distortions, and five levels. The database was rated by 31 participants recruited through an online platform. The study shows that observers exhibit distinguishable patterns for different distortions. Using quadratic regression models, we visualized the behavior patterns of different groups of observers. The database and the collected individual scores are publicly available for further quality assessment research.
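The quadratic regression mentioned above can be sketched as a least-squares fit of score against distortion level, score ≈ c2·level² + c1·level + c0, for each observer group. This is a minimal illustration under assumptions: how observers are grouped and whether scores are averaged per group are not specified here, and the helper names are hypothetical.

```python
import numpy as np


def fit_quadratic(levels, scores):
    """Least-squares fit: score ~ c2 * level**2 + c1 * level + c0."""
    c2, c1, c0 = np.polyfit(np.asarray(levels, float),
                            np.asarray(scores, float), deg=2)
    return c2, c1, c0


def preferred_level(c2, c1):
    """Level at the vertex of the fitted parabola (a peak when c2 < 0)."""
    return -c1 / (2.0 * c2)


def fit_groups(groups):
    """groups maps a (hypothetical) group label to (levels, scores)."""
    return {label: fit_quadratic(lv, sc) for label, (lv, sc) in groups.items()}
```

For a group whose fitted curve opens downward (c2 < 0), `preferred_level` gives the distortion level that the curve rates highest, which is one compact way to summarize and compare the behavior of different groups.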

Recent advances in convolutional neural networks and vision transformers have revolutionized computer vision. Studies have shown that the performance of deep learning-based models is sensitive to image quality. The human visual system is trained to infer semantic information from poor-quality images, but deep learning algorithms may find this task challenging. In this paper, we study the effect of image quality and color parameters on deep learning models trained for semantic segmentation. A major challenge in benchmarking robust deep learning-based computer vision models is the lack of challenging data covering different quality and color parameters. We therefore generated data from a subset of the standard semantic segmentation benchmark dataset ADE20K, with the goal of studying the effect of different quality and color parameters on the semantic segmentation task. To the best of our knowledge, this is one of the first attempts to benchmark semantic segmentation algorithms under different color and quality parameters, and we hope this study will motivate further research in this direction.
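One simple way to produce image variants with different color and quality parameters is to rescale saturation and contrast at several levels. The sketch below is an assumption for illustration only: the abstract does not state which parameters or levels were applied to the ADE20K subset, and the luma weights, the global-mean contrast model, and the factor values are hypothetical choices.

```python
import numpy as np

# Rec. 601 luma weights, used here as a simple gray reference (assumption).
LUMA = np.array([0.299, 0.587, 0.114])


def adjust_saturation(rgb, factor):
    """Scale chroma around per-pixel luma; factor 0 gives gray, 1 is identity."""
    rgb = np.asarray(rgb, dtype=float)
    luma = (rgb @ LUMA)[..., None]
    return np.clip(luma + factor * (rgb - luma), 0.0, 1.0)


def adjust_contrast(rgb, factor):
    """Scale deviations from the global image mean; factor 1 is identity."""
    rgb = np.asarray(rgb, dtype=float)
    mean = rgb.mean()
    return np.clip(mean + factor * (rgb - mean), 0.0, 1.0)


def make_variants(rgb, factors=(0.0, 0.5, 1.5, 2.0)):
    """Return {(parameter, factor): modified image} for one RGB image in [0, 1]."""
    variants = {}
    for f in factors:
        variants[("saturation", f)] = adjust_saturation(rgb, f)
        variants[("contrast", f)] = adjust_contrast(rgb, f)
    return variants
```

Since these are pixel-wise photometric changes, the original segmentation masks remain valid for every variant, which is what makes this kind of generated data usable for benchmarking.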



While RGB is the status quo in machine vision, other color spaces offer higher utility in specific visual tasks. Here, the authors investigate the impact of color spaces on the encoding capacity of a visual system that is subject to information compression, specifically variational autoencoders (VAEs) with a bottleneck constraint. To this end, they propose a framework, color conversion, that allows a fair comparison of color spaces. They systematically investigate several ColourConvNets, i.e., VAEs with different input-output color spaces, e.g., from RGB to CIE L*a*b* (five color spaces in total). Their evaluations demonstrate that, compared with the baseline network (whose input and output are both RGB), ColourConvNets with a color-opponent output space produce higher-quality images. This is also evident quantitatively: (i) in pixel-wise low-level metrics such as color difference (ΔE), peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM); and (ii) in high-level visual tasks such as image classification (on the ImageNet dataset) and scene segmentation (on the COCO dataset), where the global content of the reconstruction matters. These findings offer a promising line of investigation for other applications of VAEs. Furthermore, they provide empirical evidence for the benefits of a color-opponent representation in a complex visual system and suggest why such a representation might have emerged in the human brain.
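Two of the pixel-wise metrics mentioned above can be computed as in the following sketch, which converts sRGB (D65) to CIE L*a*b*, takes ΔE as the CIE76 formula (Euclidean distance in L*a*b*), and computes PSNR for images in [0, 1]. The abstract does not say which ΔE formula was used; CIE76 is an assumption chosen for simplicity, and CIEDE2000 is a common alternative. SSIM is omitted here.

```python
import numpy as np

# Linear sRGB -> CIE XYZ (D65) matrix and D65 reference white.
SRGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb):
    """Convert sRGB values in [0, 1] (shape (..., 3)) to CIE L*a*b*."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB transfer function to get linear light.
    linear = np.where(rgb <= 0.04045, rgb / 12.92,
                      ((rgb + 0.055) / 1.055) ** 2.4)
    # Linear RGB -> XYZ, normalized by the reference white.
    xyz = linear @ SRGB_TO_XYZ.T / WHITE_D65
    delta = 6.0 / 29.0
    f = np.where(xyz > delta**3, np.cbrt(xyz), xyz / (3 * delta**2) + 4.0 / 29.0)
    L = 116.0 * f[..., 1] - 16.0
    a = 500.0 * (f[..., 0] - f[..., 1])
    b = 200.0 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def delta_e76(rgb1, rgb2):
    """CIE76 color difference: Euclidean distance in L*a*b*, per pixel."""
    return np.linalg.norm(srgb_to_lab(rgb1) - srgb_to_lab(rgb2), axis=-1)


def psnr(img1, img2, max_value=1.0):
    """Peak signal-to-noise ratio in dB; inf for identical images."""
    mse = np.mean((np.asarray(img1, float) - np.asarray(img2, float)) ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(max_value**2 / mse)
```

Averaging `delta_e76` over all pixels gives a single color-difference score per reconstructed image, comparable in spirit to the ΔE figures reported for the different input-output color spaces.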