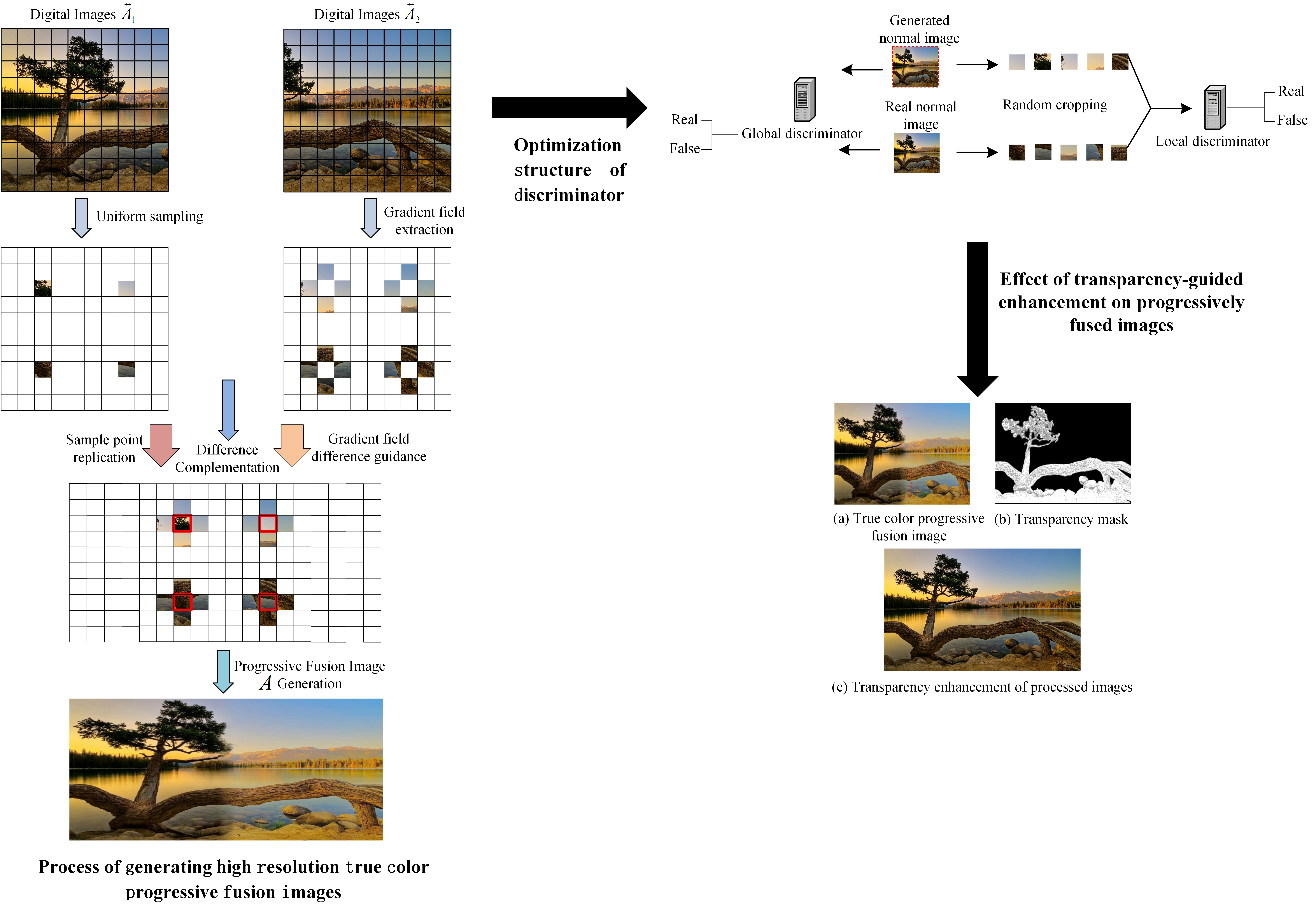

The progressive fusion algorithm enhances image boundary smoothness, preserves details, and improves visual harmony. However, problems with multi-scale fusion and improper color space conversion can lead to blurred details and color distortion, so the output does not meet modern image processing standards for high quality. Therefore, a transparency-guided enhancement algorithm for progressively fused images, based on generative adversarial learning, is proposed. The method combines the wavelet transform with gradient field fusion to enhance image details, preserve spectral features, and generate high-resolution true-color fused images. It extracts the image mean, standard deviation, and smoothness features, and feeds these, along with the original image, into a generative adversarial network. The optimized design introduces global context, transparency mask prediction, and a dual-discriminator structure to enhance the transparency of progressively fused images. Experimental results show that, with the designed method, the information entropy was 7.638, the blind image quality index was 24.331, the natural image quality evaluator value was 3.611, and the processing time was 0.036 s. The overall evaluation indices were excellent: the method effectively restored image detail and spatial color while avoiding artifacts, and the processed images exhibited high quality with complete detail preservation.
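
Information entropy, the first index reported above, can be illustrated with a minimal sketch. It assumes the usual Shannon definition over the gray-level histogram of an 8-bit image; the abstract does not state which variant was used, and the function name `shannon_entropy` is hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(pixels):
    """Shannon entropy, in bits, of a flat sequence of integer gray levels.

    Assumption: entropy is taken over the gray-level histogram,
    H = -sum(p_i * log2(p_i)), which is bounded by 8 bits for 8-bit images.
    """
    counts = Counter(pixels)
    total = len(pixels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# A two-level image with equal counts carries 1 bit per pixel;
# an image that uses all 256 levels equally often reaches the 8-bit maximum.
two_level = [0, 0, 255, 255]
all_levels = list(range(256))
print(shannon_entropy(two_level), shannon_entropy(all_levels))
```

Under this definition, a value such as 7.638 would sit close to the 8-bit upper bound, which is consistent with an image that uses most of its gray-level range.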

Single image dehazing is very important in intelligent vision systems. Because the dark channel prior (DCP) is invalid in bright areas, such as the sky region of an image, it causes severe distortion in those areas of the recovered image. We therefore propose a novel dehazing method based on transmission map segmentation and prior knowledge. First, we divide the hazy input into bright and non-bright areas, estimate the transmission map via the DCP in the non-bright areas, and propose a transmission map compensation function for correction in the bright areas. We then fuse the DCP and the bright channel prior (BCP) to accurately estimate the atmospheric light, and finally restore the clear image according to the physical model. Experiments show that our method resolves the DCP distortion problem in bright image regions and is competitive with state-of-the-art methods.
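
The sketch below shows why the plain DCP struggles in bright regions. It implements only the standard dark channel and the usual transmission estimate t = 1 - ω · dark(I / A); the segmentation, compensation function, and DCP/BCP fusion proposed in the abstract are not reproduced. The names `dark_channel` and `transmission`, the patch radius, and ω = 0.95 are assumptions chosen for illustration.

```python
def dark_channel(image, radius=1):
    """Dark channel of an H x W image given as nested lists of (r, g, b) tuples.

    For every pixel, take the minimum over the three channels and over a
    square patch of the given radius (clipped at the image border).
    """
    h, w = len(image), len(image[0])
    min_rgb = [[min(px) for px in row] for row in image]
    dark = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            dark[y][x] = min(min_rgb[j][i] for j in ys for i in xs)
    return dark


def transmission(image, atmospheric_light, omega=0.95, radius=1):
    """Plain DCP transmission estimate: t = 1 - omega * dark(I / A)."""
    normalized = [
        [tuple(c / a for c, a in zip(px, atmospheric_light)) for px in row]
        for row in image
    ]
    dark = dark_channel(normalized, radius)
    return [[1.0 - omega * d for d in row] for row in dark]


# A uniformly bright "sky" patch gets a very low transmission estimate,
# even if little haze is present, so dividing by it distorts the result.
sky = [[(1.0, 1.0, 1.0)] * 2 for _ in range(2)]
ground = [[(0.5, 0.6, 0.7)] * 2 for _ in range(2)]
print(transmission(sky, (1.0, 1.0, 1.0))[0][0])
print(transmission(ground, (1.0, 1.0, 1.0))[0][0])
```

In the sky patch the dark channel is close to the atmospheric light, so the estimated transmission collapses toward 1 - ω. This is the failure case that motivates treating bright areas separately.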

Model-based approaches to imaging, such as specialized image enhancements in astronomy, facilitate explanations of relationships between observed inputs and computed outputs. These models may be expressed with extended matrix-vector (EMV) algebra, especially when they involve only scalars, vectors, and matrices, and with n-mode or index notations, when they involve multidimensional arrays, also called numeric tensors or, simply, tensors. Although this paper features an example, inspired by exoplanet imaging, that employs tensors to reveal (inverse) 2D fast Fourier transforms in an image enhancement model, the work is actually about the tensor algebra and software, or tensor frameworks, available for model-based imaging. The paper proposes a Ricci-notation tensor (RT) framework, comprising a dual-variant index notation, with Einstein summation convention, and codesigned object-oriented software, called the RTToolbox for MATLAB. Extensions to Ricci notation offer novel representations for entrywise, pagewise, and broadcasting operations popular in EMV frameworks for imaging. Complementing the EMV algebra computable with MATLAB, the RTToolbox demonstrates programmatic and computational efficiency via careful design of numeric tensor and dual-variant index classes. Compared to its closest competitor, also a numeric tensor framework that uses index notation, the RT framework enables superior ways to model imaging problems and, thereby, to develop solutions.
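
To make the index-notation idea concrete, the following sketch writes a 2D discrete Fourier transform as a contraction over repeated indices, Y[k][l] = Σ over m, n of F[k][m] · X[m][n] · G[l][n]. It is plain Python rather than MATLAB and does not use or imitate the RTToolbox API; `dft_matrix` and `dft2_contraction` are hypothetical names, and the code evaluates the sum directly instead of using a fast transform.

```python
import cmath


def dft_matrix(n):
    """n x n DFT matrix with entries exp(-2*pi*i*j*k/n)."""
    return [
        [cmath.exp(-2j * cmath.pi * j * k / n) for k in range(n)]
        for j in range(n)
    ]


def dft2_contraction(x):
    """2D DFT of a nested-list image, written as an index contraction.

    Y[k][l] = sum over m and n of F[k][m] * X[m][n] * G[l][n],
    where the repeated indices m and n are summed (Einstein convention).
    """
    rows, cols = len(x), len(x[0])
    f, g = dft_matrix(rows), dft_matrix(cols)
    return [
        [
            sum(f[k][m] * x[m][n] * g[l][n]
                for m in range(rows) for n in range(cols))
            for l in range(cols)
        ]
        for k in range(rows)
    ]


# For a 2 x 2 image the result can be checked by hand:
# Y[0][0] is the sum of all entries, the others are signed sums.
y = dft2_contraction([[1, 2], [3, 4]])
print([[round(v.real, 9) for v in row] for row in y])
```

Writing the transform this way exposes it as two matrix factors acting on separate indices of the image, which is the kind of structure an index-notation framework can state in a single expression.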

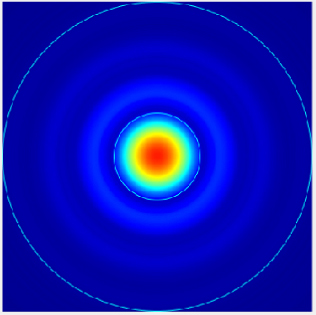

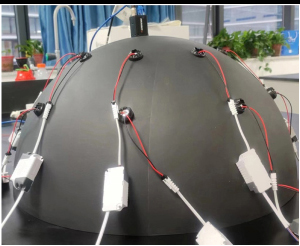

As quality requirements in industrial production rise, surface inspection has become an indispensable step in workpiece production. Traditional methods for inspecting textured paper suffer from low efficiency and large errors. To address this, we propose a machine-vision composite inspection method with the structure “photometric stereo vision + fast Fourier enhancement + feature fusion”. First, because images from a traditional CCD camera contain obvious noise and scratches that are difficult to distinguish from the background texture, we use a photometric stereo vision measurement algorithm to recover the surface gradient of the textured paper and thus obtain more gradient texture information; we then apply a secondary enhancement to the image through the Fourier transform in the spatial and frequency domains. Second, because scratches on textured paper are difficult to detect, their features are difficult to extract, and the threshold boundary is difficult to define, we propose dynamic threshold segmentation through multi-feature fusion to detect surface scratches. We designed experiments using more than 300 different textured papers, and the results show that the proposed composite inspection method is feasible and has advantages.

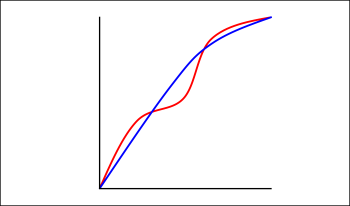

A single tone curve which is used to globally remap the brightness of each pixel in an image is one of the simplest ways to enhance an image. Tone curves might be the result of individual user edits or from algorithmic processing including in-camera processing pipelines. The precise shape of the tone curve is not strongly constrained other than it is usually limited to increasing functions of brightness. In this paper we constrain the shape further and define a simple tone adjustment, mathematically, to be a tone curve that has either no or one inflexion point. It follows that a complex tone curve is one with more than one inflexion point, visually making the curve appear ‘wiggly’. Empirically, complex tone curves do not seem to be used very often. For any given tone curve we show how the closest simple approximation can be efficiently found. We apply our approximation method to the MIT-Adobe FiveK dataset which comprises 5000 images that are manually tone-edited by 5 experts. For all 25,000 edited images - where some of the tone adjustments are complex - we find that they are all well-approximated by simple tone curve adjustments.
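
The simple/complex distinction can be checked numerically on a sampled curve. The sketch below counts sign changes in the second differences of uniformly spaced samples; it only classifies a curve and does not implement the closest-simple-approximation method, which the abstract does not detail. The names `count_inflections` and `is_simple`, the tolerance, and the sample curves are assumptions.

```python
import math


def count_inflections(curve, tol=1e-9):
    """Count sign changes in the second differences of a sampled curve.

    `curve` holds tone-curve outputs at uniformly spaced inputs. Second
    differences smaller than `tol` in magnitude are treated as zero.
    """
    second = [
        curve[i - 1] - 2 * curve[i] + curve[i + 1]
        for i in range(1, len(curve) - 1)
    ]
    signs = [1 if d > 0 else -1 for d in second if abs(d) > tol]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


def is_simple(curve, tol=1e-9):
    """A tone curve is 'simple' if it has at most one inflexion point."""
    return count_inflections(curve, tol) <= 1


xs = [i / 20 for i in range(21)]
gamma = [x ** 0.5 for x in xs]                                # no inflexion
s_curve = [3 * x ** 2 - 2 * x ** 3 for x in xs]               # one inflexion
wiggly = [x + 0.05 * math.sin(4 * math.pi * x) for x in xs]   # three

for name, curve in [("gamma", gamma), ("s-curve", s_curve), ("wiggly", wiggly)]:
    print(name, count_inflections(curve), is_simple(curve))
```

All three sample curves are increasing, so they are valid tone curves in the sense used above; only the last one is complex.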

Traditionally, the appearance of an object in an image is edited to elicit a preferred perception. However, the editing method might be arbitrary and might not consider the human perception mechanism. In this study, the authors explored image-based leather “authenticity” editing using an estimation model that considers a perception mechanism derived in their previous work. They created leather rendered images by emphasizing or suppressing image properties corresponding to the “authenticity.” Subsequently, they performed two subjective experiments, one using fully edited images and another using partially edited images whose specular reflection intensity was constant. Participants observed the leather rendered images and evaluated the differences in the perception of “authenticity.” The authors found that the “authenticity” perception could be changed by manipulating the intensity of specular reflection and the texture (grain and surface irregularity) in the images. The results of this study could be used to tune the properties of images to make them more appealing.

In addition to colors and shapes, factors of material appearance such as glossiness, translucency, and roughness are important for reproducing the realistic feeling of an image. In general, these perceptual qualities are often degraded when reproduced as a digital color image. The authors have aimed to edit the material appearance of an image as measured by a general camera and reproduce it on a general display device. In their previous study, the authors found that the pupil diameter decreases slightly when observing the surface properties of an object and proposed an algorithm called “PuRet” for enhancing the material appearance based on the physiological models of the pupil and retina. However, to obtain an accurate reproduction, it was necessary to manually adjust two types of adaptation parameters in PuRet as related to the retinal response for each scene and the particular characteristics of the display device. This study realizes the management of the appearance of material objects on display devices by automatically deriving the optimum parameters in PuRet from captured RAW image data. The results indicate that the authors succeeded in estimating an adaptation parameter from the median value of the scene luminance as estimated from a RAW image. They also succeeded in estimating another adaptation parameter from the average value of the scene luminance and the luminance contrast value of the output display device. As a result of an experiment using an unknown display device that was not applied to derive the estimation model, it was confirmed that the proposed model works properly.

Nonlinear complementary metal-oxide semiconductor (CMOS) image sensors (CISs), such as logarithmic (log) and linear–logarithmic (linlog) sensors, achieve high/wide dynamic ranges in single exposures at video frame rates. As with linear CISs, fixed pattern noise (FPN) correction and salt-and-pepper noise (SPN) filtering are required to achieve high image quality. This paper presents a method to generate digital integrated circuits, suitable for any monotonic nonlinear CIS, to correct FPN in hard real time. It also presents a method to generate digital integrated circuits, suitable for any monochromatic nonlinear CIS, to filter SPN in hard real time. The methods are validated by implementing and testing generated circuits using field-programmable gate array (FPGA) tools from both Xilinx and Altera. Generated circuits are shown to be efficient, in terms of logic elements, memory bits, and power consumption. Scalability of the methods to full high-definition (FHD) video processing is also demonstrated. In particular, FPN correction and SPN filtering of over 140 megapixels per second are feasible, in hard real time, irrespective of the degree of nonlinearity. © 2018 Society for Imaging Science and Technology.

We suggest a method for sharpening an image or video stream without using convolution, as in unsharp masking, or deconvolution, as in constrained least-squares filtering. Instead, our technique is based on a local analysis of phase congruency and hence focuses on perceptually important details. The image is partitioned into overlapping tiles, and is processed tile by tile. We perform a Fourier transform for each of the tiles, and define congruency for each of the components in such a way that it is large when the component's neighbours are aligned with it, and small otherwise. We then amplify weak components with high phase congruency and reduce strong components with low phase congruency. Following this method, we avoid strengthening the Fourier components corresponding to sharp edges, while amplifying those details that underwent a slight or moderate defocus blur. The tiles are then seamlessly stitched. As a result, the image sharpness is improved wherever perceptually important details are present.

In this paper we describe two general parametric, nonsymmetric 3×3 gradient models. Equations for calculating the coefficients of the gradient matrices are presented. These models for generating gradients in the x-direction include the known gradient operators as well as new operators that can be used in graphics, computer vision, robotics, imaging systems, and visual surveillance applications, and for object enhancement, edge detection, and classification. The presented approach can easily be extended to larger windows.
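
As an illustration of how a parametric model can contain known operators, the sketch below uses a simple one-parameter symmetric family of x-direction kernels in which a = 1 gives the Prewitt operator and a = 2 gives the Sobel operator. This family is an assumption chosen for illustration; the two nonsymmetric models and their coefficient equations are not given in the abstract and are not reproduced here. The function names are hypothetical.

```python
def gradient_kernel_x(a):
    """One-parameter 3x3 x-gradient kernel (illustrative family only).

    a = 1 yields the Prewitt operator, a = 2 yields the Sobel operator.
    """
    return [[-1, 0, 1],
            [-a, 0, a],
            [-1, 0, 1]]


def correlate_valid(image, kernel):
    """Correlate a nested-list image with a 3x3 kernel, interior pixels only."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(kernel[j][i] * image[y + j][x + i]
                for j in range(3) for i in range(3))
            for x in range(w - 2)
        ]
        for y in range(h - 2)
    ]


# A vertical step edge: dark columns on the left, bright on the right.
step = [[0, 0, 1, 1] for _ in range(3)]
print(correlate_valid(step, gradient_kernel_x(1)))  # Prewitt response
print(correlate_valid(step, gradient_kernel_x(2)))  # Sobel response
```

The parameter only changes how strongly the center row is weighted, so both operators respond to the same edge and differ in the magnitude of the response.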