The first paper investigating the use of machine learning to learn the relationship between an image of a scene and the color of the scene illuminant was published by Funt et al. in 1996. Specifically, they investigated whether such a relationship could be learned by a neural network. Over the last 30 years, we have witnessed a remarkable series of advances in machine learning, in particular in deep learning approaches based on artificial neural networks. In this paper, we update the method by Funt et al. with recent techniques introduced for training deep neural networks. Experimental results on a standard dataset show that the updated version improves the median angular error in illuminant estimation by almost 51% with respect to the original formulation, even outperforming recent illuminant estimation methods.
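As a reading aid, the angular error metric mentioned above can be sketched as follows. This is a minimal illustration of the commonly used definition (the angle between the estimated and ground-truth illuminant RGB vectors), not the evaluation code of the paper; the function names `angular_error_deg` and `relative_improvement` are hypothetical.

```python
import numpy as np

def angular_error_deg(estimated, ground_truth):
    """Angle in degrees between estimated and true illuminant RGB vectors.

    Both inputs may be a single RGB triplet or an array of shape (n, 3).
    """
    est = np.asarray(estimated, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    # Normalise so that only the direction (chromaticity) of each vector matters.
    est = est / np.linalg.norm(est, axis=-1, keepdims=True)
    gt = gt / np.linalg.norm(gt, axis=-1, keepdims=True)
    # Clip to guard against rounding slightly outside [-1, 1].
    cos = np.clip(np.sum(est * gt, axis=-1), -1.0, 1.0)
    return np.degrees(np.arccos(cos))

def relative_improvement(old_error, new_error):
    """Percentage reduction of an error statistic (e.g. the median) relative to old_error."""
    return 100.0 * (old_error - new_error) / old_error
```

Under this definition, a per-dataset summary would typically be `np.median(angular_error_deg(estimates, ground_truths))`, and a relative improvement compares two such medians.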

Multispectral images contain more spectral information about the scene objects than color images do. The captured information about the scene reflectance is affected by several capture conditions, of which the scene illuminant is dominant. In this work, we implement an imaging pipeline for a spectral filter array camera, with a focus on estimating the scene reflectances when the scene illuminant is unknown. We simulate three scenarios for reflectance estimation from multispectral images, and we evaluate the estimation accuracy on real captured data. We evaluate two camera model-based reflectance estimation methods that use a Wiener filter, and two linear regression models for reflectance estimation that do not require an image formation model of the camera. For the model-based approaches, we propose to use an estimate of the illuminant's spectral power distribution. The results show that our proposed approach stabilizes and marginally improves the estimation accuracy over the method that estimates the illuminant in the sensor space only. The results also provide a comparison of reflectance estimation using common approaches that are suited to different realistic scenarios.
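To make the model-based approach more concrete, a textbook Wiener estimator for reflectance can be sketched as below. This is an illustration under stated assumptions, not the pipeline of the paper: it assumes a linear image formation model `c = M r + n` with `M` built from the channel sensitivities and an illuminant spectrum, a known reflectance covariance, and white noise. The function name `wiener_matrix` and all parameter names are hypothetical.

```python
import numpy as np

def wiener_matrix(sensitivities, illuminant, reflectance_cov, noise_var=1e-4):
    """Wiener estimation matrix W such that r_hat = W @ c.

    sensitivities:   (n_channels, n_wavelengths) spectral sensitivities
    illuminant:      (n_wavelengths,) illuminant spectral power distribution
                     (measured or, as assumed here, an estimate)
    reflectance_cov: (n_wavelengths, n_wavelengths) prior covariance of reflectances
    noise_var:       assumed variance of white sensor noise
    """
    # System matrix: each wavelength column is scaled by the illuminant power.
    M = np.asarray(sensitivities, dtype=float) * np.asarray(illuminant, dtype=float)
    K_r = np.asarray(reflectance_cov, dtype=float)
    K_n = noise_var * np.eye(M.shape[0])
    # W = K_r M^T (M K_r M^T + K_n)^-1
    return K_r @ M.T @ np.linalg.inv(M @ K_r @ M.T + K_n)
```

Given a vector of camera responses `c` of length `n_channels`, the estimated reflectance would be `wiener_matrix(...) @ c`. The quality of the estimate depends on how well the assumed illuminant spectrum matches the true one.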

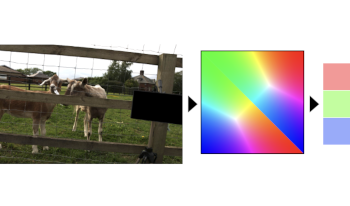

The aim of colour constancy is to discount the effect of the scene illumination from the image colours and restore the colours of the objects as captured under a ‘white’ illuminant. For the majority of colour constancy methods, the first step is to estimate the scene illuminant colour. Generally, it is assumed that the illumination is uniform across the scene. However, real-world scenes often have multiple illuminants, such as sunlight and spotlights together in one scene. In this paper, we present a simple yet very effective framework that uses a deep CNN-based method to estimate and use multiple illuminants for colour constancy. Our approach works well in both the multi-illuminant and single-illuminant cases. The output of the CNN method is a region-wise illuminant estimate map of the scene, which is smoothed and divided out from the image to perform colour constancy. The proposed method outperforms other recent and state-of-the-art methods and gives promising visual results.
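The final correction step described above (smoothing a region-wise illuminant map and dividing it out) can be sketched as follows. This is only an illustration under assumptions: the region-wise estimates are taken as given (from any estimator, not the CNN of the paper), the smoothing is a plain box filter, and the names `box_smooth` and `correct_image` are hypothetical.

```python
import numpy as np

def box_smooth(illum_map, radius):
    """Separable mean filter over the two spatial axes, with edge padding."""
    k = 2 * radius + 1
    kernel = np.ones(k) / k
    padded = np.pad(illum_map, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    # Filter along rows, then along columns; 'valid' restores the original size.
    out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, padded)
    out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, out)
    return out

def correct_image(image, region_estimates, radius=8, eps=1e-6):
    """Divide a smoothed per-pixel illuminant map out of an (H, W, 3) image.

    region_estimates: (gh, gw, 3) grid of illuminant colours, one per region.
    """
    h, w, _ = image.shape
    gh, gw, _ = region_estimates.shape
    # Nearest-neighbour upsampling of the region grid to pixel resolution.
    rows = np.arange(h) * gh // h
    cols = np.arange(w) * gw // w
    illum_map = region_estimates[rows][:, cols]
    illum_map = box_smooth(illum_map, radius)
    # Scale each pixel's illuminant to unit mean so overall brightness is roughly kept.
    illum_map = illum_map / (illum_map.mean(axis=-1, keepdims=True) + eps)
    return image / (illum_map + eps)
```

With a single region (`gh = gw = 1`), the sketch reduces to the usual uniform-illuminant correction; with a finer grid, the smoothing avoids visible seams between regions.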

Achieving color constancy is an important step in supporting visual tasks. In general, a linear transformation using a 3 × 3 illuminant modeling matrix is applied in the RGB color space of a camera to achieve color balance. Most studies of color constancy adopt this linear model, but the relationship between the illumination and the camera spectral sensitivity (CSS) is only partially understood. Therefore, in this paper, we analyze the linear combination of the illumination spectrum and the CSS using hyperspectral data, which carry much more information than RGB. After estimating the illumination correction matrix, we elucidate how the accuracy depends on the illumination spectrum and the camera sensor response, an analysis that can be applied to CSS.
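The 3 × 3 linear model mentioned above can be illustrated with a small least-squares sketch. It assumes paired RGB samples of the same surfaces under the scene illuminant and under a reference illuminant; this is a generic fitting procedure for illustration, not the analysis performed in the paper, and the names `fit_correction_matrix` and `apply_correction` are hypothetical.

```python
import numpy as np

def fit_correction_matrix(rgb_scene, rgb_reference):
    """Least-squares 3x3 matrix M with rgb_reference ≈ rgb_scene @ M.T.

    rgb_scene, rgb_reference: (n_samples, 3) arrays of corresponding RGB values.
    """
    M_T, *_ = np.linalg.lstsq(rgb_scene, rgb_reference, rcond=None)
    return M_T.T

def apply_correction(image, M):
    """Apply a 3x3 correction matrix to every pixel of an (..., 3) RGB array."""
    return np.asarray(image, dtype=float) @ M.T
```

A diagonal `M` corresponds to the simpler per-channel gain (von Kries-style) correction; the off-diagonal terms of a full matrix allow for interactions between channels.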