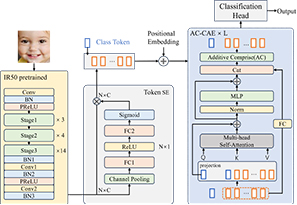

Facial Expression Recognition (FER) models based on the Vision Transformer (ViT) have demonstrated promising performance on diverse datasets. However, the transformer encoder is computationally expensive, which poses challenges when strong computational resources are unavailable. Using large feature maps preserves more expression information but significantly increases the number of tokens, and the computational complexity of self-attention grows quadratically with the token count $N$, as $O(N^2)$. Tasks involving large feature maps, such as high-resolution FER, therefore encounter computational bottlenecks. To alleviate these challenges, we propose the Additively Comprised Class Attention Encoder as a substitute for the original ViT encoder, which reduces the complexity of the attention computation from $O(N^2)$ to $O(N)$. Additionally, we introduce a novel token-level Squeeze-and-Excitation method to help the model learn more efficient representations. Experimental evaluations on the RAF-DB and FERplus datasets show that our approach improves running speed by at least 27% (for 7 × 7 feature maps) while maintaining comparable accuracy, and that the gain grows with larger feature maps (about 49% speedup for 14 × 14 feature maps, and triple the speed for 28 × 28 feature maps).
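To make the scaling argument concrete, the following minimal sketch counts tokens and attention scores for the three feature-map sizes mentioned above. It assumes one token per spatial location and ignores the class token, the hidden dimension, and constant factors; the function name `attention_cost` is hypothetical and only serves this illustration, not the proposed encoder itself.

```python
def attention_cost(side: int) -> dict:
    """Token count and attention-score counts for a side x side feature map.

    Assumption: one token per spatial location, class token ignored.
    """
    n_tokens = side * side            # N grows with the feature-map area
    quadratic = n_tokens * n_tokens   # pairwise scores in standard self-attention, O(N^2)
    linear = n_tokens                 # one score per token in an O(N) scheme
    return {"tokens": n_tokens, "quadratic": quadratic, "linear": linear}


for side in (7, 14, 28):
    cost = attention_cost(side)
    print(f"{side}x{side}: N={cost['tokens']}, "
          f"O(N^2) scores={cost['quadratic']}, O(N) scores={cost['linear']}")
```

Doubling the side of the feature map quadruples $N$ but multiplies the number of pairwise scores by 16, which is consistent with the reported speedup growing as the feature map gets larger.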

Emotions play an important role in our lives as a response to our interactions with others, our decisions, and so on. Among the various emotional signals, facial expression is one of the most powerful and natural means for humans to convey their emotions and intentions. It also has the advantage that the information can be obtained easily with only a camera, so emotion research based on facial expressions is being actively conducted. Facial expression recognition (FER) has typically been studied as a classification task over seven basic emotions: anger, disgust, fear, happiness, sadness, surprise, and neutral. Before the appearance of deep learning, handcrafted feature extractors and simple classifiers such as SVM and AdaBoost were used to recognize facial emotion. With the advent of deep learning, it is now possible to recognize facial expressions without handcrafted feature extractors. Despite the excellent performance of deep learning in FER research, FER remains a challenging task because of external factors such as occlusion, illumination, and pose, and because of the similarity between different facial expressions. In this paper, we propose FViT, a method that trains a ResNet [1] combined with a Visual Transformer [2] and uses Histogram of Oriented Gradients (HOG) [3] data to address the similarity problem between facial expressions.
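As a rough illustration of what a HOG descriptor captures, the sketch below builds an orientation histogram for a single grayscale cell. It is a simplified version under stated assumptions (central-difference gradients, unsigned orientations in 9 bins, hard bin assignment, no block normalization) and is not the pipeline used in this paper; the function name `hog_cell` is hypothetical.

```python
import math


def hog_cell(cell, n_bins=9):
    """Orientation histogram for one grayscale cell given as a list of rows.

    Simplified: border pixels are skipped and each gradient votes into a
    single bin, weighted by its magnitude.
    """
    height, width = len(cell), len(cell[0])
    hist = [0.0] * n_bins
    bin_width = 180.0 / n_bins  # unsigned orientations span [0, 180) degrees
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]   # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]   # vertical gradient
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            idx = min(int(angle // bin_width), n_bins - 1)
            hist[idx] += magnitude
    return hist


# An 8 x 8 cell whose intensity increases from left to right.
cell = [[float(x) for x in range(8)] for _ in range(8)]
hist = hog_cell(cell)
print(hist)  # all gradient energy falls into the first (0 degree) bin
```

Because the histogram depends on edge directions rather than raw intensities, descriptors of this kind emphasize shape cues such as the contours of the mouth and eyebrows, which is the kind of information that can help separate visually similar expressions.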