In recent years, deep learning has achieved excellent results in applications across many fields. However, as deep learning models grow in scale, their training time also increases dramatically. Furthermore, hyperparameters have a significant influence on training results, so selecting them efficiently is essential. In this study, the orthogonal array of the Taguchi method is used to find the best experimental combination of hyperparameters. Three hyperparameters of the you only look once-version 3 (YOLOv3) detector and five hyperparameters of data augmentation serve as the control factors of the Taguchi method, and the results are analyzed with the traditional signal-to-noise ratio (S/N ratio) method using larger-the-better (LB) characteristics.

Experimental results show that the mean average precision on the blood cell count and detection dataset is 84.67%, which is better than the results available in the literature. The proposed method provides a fast and effective search strategy for optimizing hyperparameters in deep learning.
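The larger-the-better S/N analysis mentioned above can be illustrated with a minimal sketch. It uses the standard formula S/N = -10 log10(mean(1/y^2)) and a standard L4 orthogonal array with three two-level factors. The array size, the response values, and the function names are illustrative assumptions, not the study's actual design, which has eight control factors.

```python
import math

def sn_larger_the_better(responses):
    """Taguchi larger-the-better S/N ratio in dB: -10 * log10(mean(1 / y^2))."""
    if not responses or any(y <= 0 for y in responses):
        raise ValueError("responses must be a non-empty list of positive values")
    mean_inv_sq = sum(1.0 / (y * y) for y in responses) / len(responses)
    return -10.0 * math.log10(mean_inv_sq)

# Standard L4 orthogonal array: 4 trials, 3 two-level factors (levels 0 and 1).
L4 = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]

# Hypothetical mAP values (as fractions) from two repeated runs per trial.
responses = [[0.80, 0.82], [0.84, 0.85], [0.78, 0.79], [0.81, 0.80]]

# One S/N ratio per trial.
sn = [sn_larger_the_better(r) for r in responses]

def best_levels(array, sn_values):
    """For each factor, pick the level whose trials have the highest mean S/N."""
    chosen = []
    for f in range(len(array[0])):
        level_means = {}
        for level in sorted({row[f] for row in array}):
            vals = [s for row, s in zip(array, sn_values) if row[f] == level]
            level_means[level] = sum(vals) / len(vals)
        chosen.append(max(level_means, key=level_means.get))
    return chosen

recommended = best_levels(L4, sn)
```

The recommended combination takes the best level of each factor independently, so it may be a combination that was never run as one of the array's trials. A confirmation run of that combination is the usual next step.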

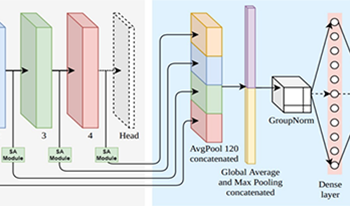

Innovations in computer vision have steered research towards recognizing compound facial emotions, which are complex mixtures of basic emotions. Although deep convolutional neural networks have significantly improved accuracy, their inherent limitations, such as the vanishing/exploding gradient problem, a lack of global contextual information, and overfitting, may degrade performance or cause misclassification when processing complex emotion features. This study proposes an ensemble method in which three pre-trained models, DenseNet-121, VGG-16, and ResNet-18, are concatenated instead of being used individually. In this layer-sharing method, the head of each model is removed, and dropout layers, fully connected layers, activation functions, and pooling layers are added to each model before concatenation. This gives each model a chance to learn more before the individually learned features are combined. The proposed model uses an early stopping mechanism to prevent overfitting and improve performance. The proposed ensemble method surpassed the state of the art (SOTA) with 74.4% and 71.8% accuracy on the RAF-DB and CFEE datasets, respectively, offering a new benchmark for real-world compound emotion recognition research.
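The early stopping mechanism mentioned above can be sketched in a framework-independent way. This is a minimal illustration that assumes stopping is driven by validation loss with a patience counter. The class name, the `patience` and `min_delta` parameters, and the loss values are hypothetical, since the abstract does not give the criterion actually used.

```python
class EarlyStopping:
    """Stop training once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience      # epochs to wait without improvement
        self.min_delta = min_delta    # minimum decrease that counts as improvement
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def stopping_epoch(val_losses, patience=3):
    """Return the 0-based epoch at which training would stop, or None if it never does."""
    stopper = EarlyStopping(patience=patience)
    for epoch, loss in enumerate(val_losses):
        if stopper.step(loss):
            return epoch
    return None


# Hypothetical validation losses: improvement stalls after epoch 2.
losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]
stopped_at = stopping_epoch(losses, patience=3)
```

In practice the weights from the best epoch are usually restored after stopping, which this sketch leaves out.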

Deep learning (DL) has advanced computer-aided diagnosis, yet the limited data available at local medical centers and privacy concerns associated with centralized AI approaches hinder collaboration. Federated learning (FL) offers a privacy-preserving solution by enabling distributed DL training across multiple medical centers without sharing raw data. This article reviews research conducted from 2016 to 2024 on the use of FL in cancer detection and diagnosis, aiming to provide an overview of the field’s development. Studies show that FL effectively addresses privacy concerns in DL training across centers. Future research should focus on tackling data heterogeneity and domain adaptation to enhance the robustness of FL in clinical settings. Improving the interpretability and privacy of FL is crucial for building trust. This review promotes FL adoption and continued research to advance cancer detection and diagnosis and improve patient outcomes.
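To make the idea of training across centers without sharing raw data concrete, the sketch below shows one aggregation round in the style of federated averaging (FedAvg). FedAvg is used here as an assumed, commonly cited example. The review does not single out one aggregation rule, and the function name and numbers are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by each client's sample count.

    Only model parameters and sample counts leave each center; raw data stays local.
    """
    if len(client_weights) != len(client_sizes) or not client_weights:
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical parameters from two medical centers with 100 and 300 local samples.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300])
```

Weighting by sample count gives larger centers more influence, which is one reason data heterogeneity across centers remains an open problem.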

Image compression is an essential technology in image processing because it reduces the storage required for increasingly popular image and video content. Deep learning-based image compression has made significant progress, surpassing traditional coding and decoding approaches in specific cases. Current methods employ autoencoders, typically consisting of convolutional neural networks, to map input images to lower-dimensional latent spaces for compression. However, these approaches often overlook low-frequency information, leading to sub-optimal compression performance. To address this challenge, this study proposes a novel image compression technique, Transformer and Convolutional Dual Channel Networks (TCDCN). This method extracts both edge detail and low-frequency information, achieving a balance between high- and low-frequency compression. The study also uses a variational autoencoder architecture with parallel stacked transformer and convolutional networks to create a compact representation of the input image through end-to-end training. This content-adaptive transform captures low-frequency information dynamically, leading to improved compression efficiency. Compared to the classic JPEG method, our model shows Bjøntegaard delta rate (BD-rate) improvements of up to 19.12% and 18.65% on the Kodak and CLIC test datasets, respectively. These improvements also surpass state-of-the-art solutions by margins of 0.47% and 0.74%, indicating an enhancement in image compression encoding efficiency. The results underscore the effectiveness of our approach in enhancing the capabilities of existing techniques.

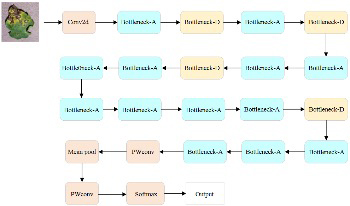

Crop diseases have always been a major threat to agricultural production, significantly reducing both the yield and quality of agricultural products. Traditional methods for disease recognition suffer from high costs and low efficiency, making them inadequate for modern agricultural requirements. With the continuous development of artificial intelligence technology, deep learning for crop disease image recognition has become a research hotspot. Convolutional neural networks can automatically extract features for end-to-end learning, resulting in better recognition performance. However, they also face challenges such as high computational costs and difficulties in deployment on mobile devices. In this study, we aim to improve recognition accuracy, reduce computational costs, and scale the model down for deployment on mobile platforms. Specifically targeting the recognition of tomato leaf diseases, we propose an image recognition method based on a lightweight MCA-MobileNet and WGAN. By incorporating an improved multiscale feature fusion module and a coordinate attention mechanism into MobileNetV2, we developed the lightweight MCA-MobileNet model. This model focuses more on disease spot information in tomato leaves while significantly reducing the model’s parameter count. We employ WGAN for data augmentation to address the insufficient and imbalanced original sample data. Experimental results demonstrate that using the augmented dataset effectively improves the model’s recognition accuracy and enhances its robustness. Compared to traditional networks, MCA-MobileNet shows significant improvements in metrics such as accuracy, precision, recall, and F1-score. With only 2.75M trainable parameters, it performs well in recognizing tomato leaf diseases and can be widely applied in mobile or embedded devices.
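The four evaluation metrics named above can be computed from predictions as in the following minimal sketch. The class labels and the one-vs-rest treatment of a single positive class are illustrative assumptions, since the abstract does not say how scores were averaged across disease classes.

```python
def classification_metrics(y_true, y_pred, positive):
    """Return accuracy plus precision, recall, and F1 for one class (one-vs-rest)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels for five leaf images.
y_true = ["blight", "healthy", "blight", "blight", "healthy"]
y_pred = ["blight", "blight", "blight", "healthy", "healthy"]
metrics = classification_metrics(y_true, y_pred, positive="blight")
```

For a multi-class problem such as leaf disease recognition, the per-class values would typically be macro- or weighted-averaged.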

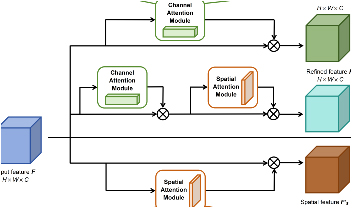

The rapid evolution of modern society has triggered a surge in the production of diverse waste in daily life. Effective implementation of waste classification through intelligent methods is essential for promoting green and sustainable development. Traditional waste classification techniques suffer from inefficiencies and limited accuracy. To address these challenges, this study proposed a waste image classification model based on DenseNet-121 with an added attention module. Two publicly available waste datasets, TrashNet and Garbage classification, were used for their comprehensive coverage and balanced distribution of waste categories. Of each dataset, 80% was allocated for training and the remaining 20% for testing. Within the architecture of DenseNet-121, an enhanced attention module, the series-parallel attention module (SPAM), which builds upon the convolutional block attention module (CBAM), was integrated, resulting in a new network model called the dense series-parallel attention neural network (DSPA-Net). DSPA-Net was trained and evaluated alongside other CNN models on TrashNet and Garbage classification. DSPA-Net achieved accuracies of 90.2% and 92.5% on TrashNet and Garbage classification, respectively, surpassing DenseNet-121 and alternative image classification algorithms. These findings underscore the potential for efficient and accurate intelligent waste classification.

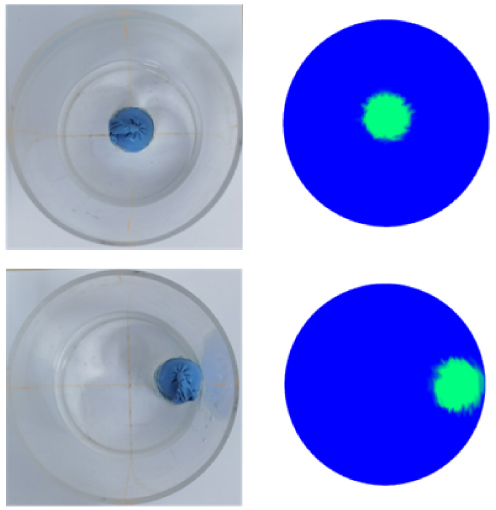

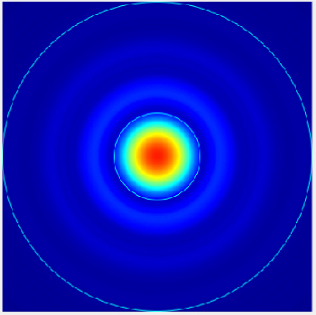

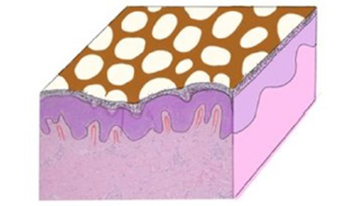

Accurate and precise classification and quantification of skin pigmentation is critical for addressing health inequities such as racial bias in pulse oximetry. Current skin tone classification methods rely on measuring or estimating skin color, using either a measurement device or subjective matching against skin tone color scales. Robust detection of skin type and melanin index is challenging because these methods require precise calibration, and recent sun exposure may affect the measurements through tanning or erythema. The proposed system differentiates and quantifies skin type and melanin index by exploiting the variance in skin structures and the skin pigmentation network across skin types. Our results from a small study show that skin structure patterns provide a robust, color-independent basis for skin tone classification. A real-time system demo shows the practical viability of the method.

This work addresses the challenge of identifying the provenance of illicit cultural artifacts, a task often hindered by the lack of specialized expertise among law enforcement and customs officials. To facilitate immediate assessments, we propose an improved deep learning model based on a pre-trained ResNet model, fine-tuned for archaeological artifact recognition through transfer learning. Our model uniquely integrates multi-level feature extraction, capturing both textural and structural features of artifacts, and incorporates self-attention mechanisms to enhance contextual understanding. In addition, we developed two different artifact datasets: a dataset with mixed types of earthenware and a dataset for coins. Both datasets are categorized according to the age and region of artifacts. Evaluations of the proposed model on these datasets demonstrate improved recognition accuracy thanks to the enhanced feature representation.

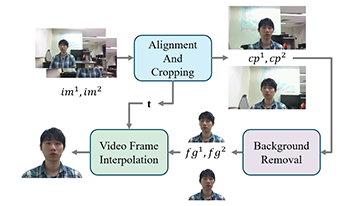

In this paper, we propose a new solution for synthesizing frontal human images in video conferencing, aimed at enhancing immersive communication. Traditional methods such as center staging, gaze correction, and background replacement improve the user experience, but they do not fully address the issue of off-center camera placement. We introduce a system that utilizes two arbitrary cameras positioned on the top bezel of a display monitor to capture left and right images of the video participant. A facial landmark detection algorithm identifies key points on the participant’s face, from which we estimate the head pose. A segmentation model is employed to remove the background, isolating the user. The core component of our method is a video frame interpolation technique that synthesizes a realistic frontal view of the participant by leveraging the two captured angles. This method not only enhances visual alignment between users but also maintains natural facial expressions and gaze direction, resulting in a more engaging and life-like video conferencing experience.

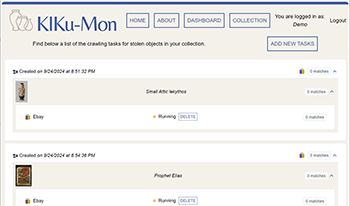

Tracking and identifying stolen cultural artifacts on online marketplaces is a daunting task that has to be accomplished through manual search. In this paper, an automated monitoring tool is developed to track and identify stolen cultural goods on targeted online sales platforms. In case of theft, the original owner can upload descriptive keywords and photos of the stolen objects to start monitoring tasks, and is alerted when identical or highly similar objects appear on the monitored platforms. The technical challenges of automated monitoring are addressed by the proposed advanced crawling, image feature extraction, and matching solutions. With the support of these techniques, the developed tool can efficiently and effectively monitor online marketplaces for stolen artifacts, significantly reducing the manual inspection effort.
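The matching and alerting step can be illustrated with a minimal sketch. It assumes each image has already been reduced to a numeric feature vector and that similarity is measured by cosine similarity against a fixed threshold. The abstract does not describe the actual features or matching rule, so the function names, the threshold, and the vectors below are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_matches(query, listings, threshold=0.9):
    """Return (listing_id, score) pairs scoring at least `threshold`, best first."""
    scored = [(lid, cosine_similarity(query, feat)) for lid, feat in listings.items()]
    hits = [(lid, score) for lid, score in scored if score >= threshold]
    return sorted(hits, key=lambda item: item[1], reverse=True)


# Hypothetical feature vectors: one stolen object and three crawled listings.
stolen = [1.0, 0.0, 1.0]
crawled = {
    "listing_a": [1.0, 0.0, 1.0],   # same features as the stolen object
    "listing_b": [0.0, 1.0, 0.0],   # unrelated object
    "listing_c": [2.0, 0.1, 2.0],   # near-duplicate at a different scale
}
alerts = find_matches(stolen, crawled, threshold=0.9)
```

The threshold trades missed matches against false alerts, so it would need tuning on real marketplace data.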