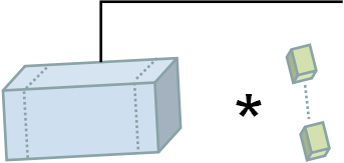

Facial state recognition models for driver fatigue detection often have large parameter counts, are difficult to deploy, and suffer from low accuracy and slow inference. To address these challenges, we propose YOLOv5-fatigue, a lightweight, real-time facial state recognition model based on YOLOv5n. First, we propose a bilateral convolution that makes full use of the feature information across channels. Next, we introduce a novel deep lightweight module that replaces the ordinary convolutions in the neck network, reducing both the number of network parameters and the computational cost. Finally, we add a normalization-based attention module, which counteracts the loss of accuracy that lightweight models typically incur while keeping the number of parameters unchanged. Fatigue is assessed in two stages: YOLOv5-fatigue first recognizes the facial state, and fatigue is then determined from the proportion of time the eyes are closed and the proportion of time the mouth is closed per unit of time. In comparison experiments against other detection algorithms on our self-built VIGP-fatigue dataset, the proposed method reached an AP50 of 92.6%, an improvement of 1% over the baseline YOLOv5n. Its inference time was reduced by 9% to 2.1 ms, and its parameter count decreased by 42.6% to 1.01 M.
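The second stage (turning per-frame facial states into a fatigue decision) can be sketched as a sliding-window ratio computation. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the detector output has already been reduced to one `(eye_closed, mouth_closed)` boolean pair per frame, and the window length, the names `sliding_proportions` and `eye_fatigue`, and the `EYE_THRESHOLD` value are all hypothetical. Because the abstract does not specify how the mouth proportion enters the decision, the sketch returns it but applies a threshold only to the eye-closure proportion.

```python
from collections import deque
from typing import Iterable, Iterator, Tuple

# Hypothetical threshold on the fraction of frames with eyes closed;
# the abstract does not report the value used by the authors.
EYE_THRESHOLD = 0.4


def sliding_proportions(
    frames: Iterable[Tuple[bool, bool]], window: int
) -> Iterator[Tuple[float, float]]:
    """Yield (eye_closed_ratio, mouth_closed_ratio) for every full window.

    Each frame is assumed to be an (eye_closed, mouth_closed) pair derived
    from the per-frame facial state recognition result.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    buf: deque = deque()
    eyes_closed = 0   # running count of eye-closed frames in the window
    mouth_closed = 0  # running count of mouth-closed frames in the window
    for eye, mouth in frames:
        buf.append((eye, mouth))
        eyes_closed += eye
        mouth_closed += mouth
        # Drop the oldest frame once the window is over-full.
        if len(buf) > window:
            old_eye, old_mouth = buf.popleft()
            eyes_closed -= old_eye
            mouth_closed -= old_mouth
        # Only report once a full "unit of time" has been observed.
        if len(buf) == window:
            yield eyes_closed / window, mouth_closed / window


def eye_fatigue(eye_ratio: float, threshold: float = EYE_THRESHOLD) -> bool:
    """Flag fatigue when the eye-closed proportion reaches the threshold."""
    return eye_ratio >= threshold


if __name__ == "__main__":
    # Six frames with eyes open, then four with eyes closed (mouth closed throughout).
    demo = [(False, True)] * 6 + [(True, True)] * 4
    for eye_r, mouth_r in sliding_proportions(demo, window=5):
        print(f"eyes closed {eye_r:.1f}, mouth closed {mouth_r:.1f}, "
              f"fatigued={eye_fatigue(eye_r)}")
```

A deque with running counts keeps each update constant-time, so the ratios can be recomputed on every new frame without rescanning the window, which suits a real-time pipeline.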

Chunman Yan, Jiale Li, "Real-time Facial State Recognition and Fatigue Analysis based on Deep Neural Networks," Journal of Imaging Science and Technology, 2024, pp. 1–11, https://doi.org/10.2352/J.ImagingSci.Technol.2024.68.6.060507
