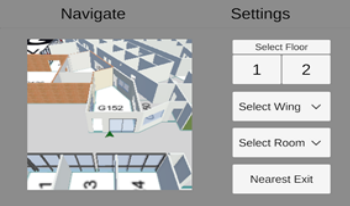

During emergencies, accurate and timely dissemination of evacuation information plays a critical role in saving lives and minimizing damage. As technology continues to advance, there is a growing need for innovative approaches that improve emergency evacuation and navigation in indoor environments and support efficient decision-making. This paper presents a mobile augmented reality application (MARA) for real-time indoor emergency evacuation and navigation in buildings. The user's location is determined from the device camera and mapped to a position within a digital twin of the building. AI-generated navigation meshes and augmented reality foundation image-tracking technology are used for localization. By visualizing integrated geographic information system (GIS) data and analyzing data in real time, the proposed MARA shows the person's current location, the number of exits, and personalized evacuation routes for each user. The MARA can also support spatial analysis, situational awareness, and visual communication. In emergencies such as fires, smoke can severely reduce visibility inside a building; under these conditions, the proposed MARA provides information that supports effective decision-making for both building occupants and emergency responders.
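The abstract does not say how personalized evacuation routes are computed. The sketch below is one plausible approach and is not the paper's method: it assumes the walkable space (for example, as derived from a navigation mesh) has been reduced to a weighted graph of hypothetical nodes such as rooms, hallways, and exits, and it runs Dijkstra's algorithm from the user's localized position to the nearest reachable exit. Nodes the caller marks as `blocked` (for instance, areas reported as smoke-filled) are skipped. The names `build_graph`, `evacuation_route`, and the example layout are all illustrative.

```python
import heapq
from typing import Dict, Iterable, List, Optional, Set, Tuple

# Hypothetical graph representation: node -> list of (neighbor, walking cost).
Graph = Dict[str, List[Tuple[str, float]]]


def build_graph(edges: Iterable[Tuple[str, str, float]]) -> Graph:
    """Build an undirected graph from (node_a, node_b, cost) tuples."""
    graph: Graph = {}
    for a, b, cost in edges:
        graph.setdefault(a, []).append((b, cost))
        graph.setdefault(b, []).append((a, cost))
    return graph


def evacuation_route(
    graph: Graph,
    start: str,
    exits: Iterable[str],
    blocked: Set[str] = frozenset(),
) -> Optional[Tuple[List[str], float]]:
    """Return (path, cost) to the nearest reachable exit, or None if none is reachable.

    Uses Dijkstra's algorithm: nodes are popped in order of increasing
    distance, so the first exit popped is the closest one.
    """
    exit_set = set(exits)
    if start in blocked:
        return None
    dist: Dict[str, float] = {start: 0.0}
    prev: Dict[str, str] = {}
    heap: List[Tuple[float, str]] = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry; a shorter path was already found
        if node in exit_set:
            # Walk predecessor links back to the start to recover the route.
            path = [node]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return list(reversed(path)), d
        for neighbor, cost in graph.get(node, []):
            if neighbor in blocked:
                continue  # impassable area, e.g. flagged as smoke-filled
            new_dist = d + cost
            if new_dist < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_dist
                prev[neighbor] = node
                heapq.heappush(heap, (new_dist, neighbor))
    return None


# Hypothetical floor layout with walking costs in meters.
EXAMPLE_EDGES = [
    ("room101", "hallA", 5),
    ("hallA", "exitNorth", 20),
    ("hallA", "hallB", 10),
    ("hallB", "exitSouth", 8),
]

if __name__ == "__main__":
    g = build_graph(EXAMPLE_EDGES)
    exits = {"exitNorth", "exitSouth"}
    print(evacuation_route(g, "room101", exits))
    # (['room101', 'hallA', 'hallB', 'exitSouth'], 23.0)
    print(evacuation_route(g, "room101", exits, blocked={"hallB"}))
    # (['room101', 'hallA', 'exitNorth'], 25.0)
```

Blocking a node and recomputing is a simple way to make routes respond to changing hazard information; a real system might instead raise edge costs for partially obstructed areas rather than removing them outright.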

The race to commercialize self-driving vehicles is in high gear. As carmakers and tech companies focus on creating cameras and sensors with more nuanced capabilities to maximize effectiveness, efficiency, and safety, an interesting paradox has arisen: the human factor has been dismissed. If fleets of autonomous vehicles are to enter our roadways, they must overcome the challenges of scene perception and cognition and be able to understand and interact with us humans. This requires the capacity to deal with the spontaneous, rule-breaking, emotional, and improvisatory characteristics of our behavior. Essentially, machine intelligence must integrate content identification with context understanding. Bridging the gap between engineering and cognitive science, I argue for the importance of translating insights from human perception and cognition into autonomous vehicle perception R&D.