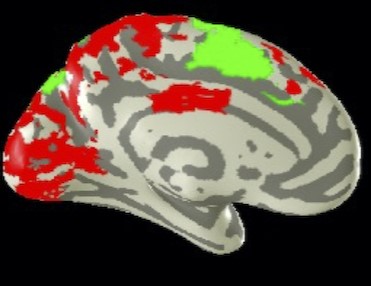

Does the functional connectivity of the visual motion areas depend on the loss of form vision? We report large-scale reorganization in low vision individuals following a unique form of non-visual spatial navigation training. Participants completed five sessions of the haptic Cognitive-Kinesthetic Memory-Drawing Training with raised-line tactile navigational maps. Before and after training, whole-brain fMRI scans were conducted while participants (i) haptically explored and memorized raised-line maps and then (ii) drew the maps from haptic memory while blindfolded. Granger causal connectivity was assessed across the network of brain areas activated during these tasks. Before training, hMT+ connectivity in the low vision participants was predominantly top-down from the sensorimotor pre- and post-central cortices, supplementary motor areas, and insular cortices, with only weak outputs to occipital and cingulate cortex. After training, large-scale reorganization of the connectivity strengthened the existing top-down inputs to hMT+ and also dramatically extended its outputs throughout the occipital and parietal cortices, especially from right hMT+. These results are consistent with the fact that low vision individuals have a lifetime of visual inputs to hMT+, and it may become one of the principal perceptual inputs as their form vision is reduced, making it a key source of navigational information during the unfolding of the drawing trajectory. When they are forced to perform the spatiomotor task of haptic drawing without vision, however, hMT+ becomes a key conduit for signals from the executive control regions of prefrontal cortex to the medial navigational network and the occipito-parieto-temporal spatial representation regions. These findings uncover novel forms of brain reorganization that have strong implications for the principles of rapid learning-driven neuroplasticity.
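For readers unfamiliar with Granger causal connectivity, the idea can be sketched on two toy time series: a source region is said to Granger-cause a target region if the source's past improves the prediction of the target beyond what the target's own past provides. The following is a minimal illustration only, assuming a lag-1 bivariate autoregressive model and simulated data; the model order, preprocessing, and statistics used in the study are not stated here, and the names `granger_lag1`, `gc_xy`, and `gc_yx` are hypothetical.

```python
import math
import random


def demean(values):
    """Subtract the mean so the regressions below need no intercept term."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


def granger_lag1(source, target):
    """Lag-1 Granger causality from `source` to `target`.

    Returns ln(RSS_restricted / RSS_unrestricted). Values near zero mean the
    past of `source` adds little to predicting `target`.
    """
    y_now = demean(target[1:])
    y_lag = demean(target[:-1])
    x_lag = demean(source[:-1])

    # Restricted model: the target is predicted from its own past only.
    a_r = dot(y_lag, y_now) / dot(y_lag, y_lag)
    rss_r = sum((t - a_r * l) ** 2 for t, l in zip(y_now, y_lag))

    # Unrestricted model: add the past of the source (2x2 normal equations).
    s_yy, s_xx, s_xy = dot(y_lag, y_lag), dot(x_lag, x_lag), dot(x_lag, y_lag)
    r_y, r_x = dot(y_lag, y_now), dot(x_lag, y_now)
    det = s_yy * s_xx - s_xy ** 2
    a_u = (r_y * s_xx - r_x * s_xy) / det
    b_u = (r_x * s_yy - r_y * s_xy) / det
    rss_u = sum(
        (t - a_u * l - b_u * m) ** 2 for t, l, m in zip(y_now, y_lag, x_lag)
    )
    return math.log(rss_r / rss_u)


# Simulated example (not real fMRI data): x drives y with a one-step delay.
rng = random.Random(0)
n = 500
x = [rng.gauss(0, 1) for _ in range(n)]
y = [0.0]
for t in range(1, n):
    y.append(0.5 * y[t - 1] + 0.8 * x[t - 1] + rng.gauss(0, 0.5))

gc_xy = granger_lag1(x, y)  # influence of x on y
gc_yx = granger_lag1(y, x)  # influence of y on x
print(f"x -> y: {gc_xy:.3f}, y -> x: {gc_yx:.3f}")
```

In this simulation the x-to-y value should come out clearly larger than the y-to-x value, which is the kind of directional asymmetry that terms such as "inputs to" and "outputs from" hMT+ refer to.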

Contrast is an important perceptual attribute of image quality. In medical images, poor quality, specifically low contrast, hinders precise interpretation of the image. Contrast enhancement (CE) is therefore applied not merely to improve the visual quality of images but also to facilitate further processing tasks. In this paper, we propose a contrast enhancement approach based on cross-modal learning. A Cycle-GAN (Generative Adversarial Network) is used for this purpose, with a UNet augmented with global features acting as the generator. In addition, individual batch normalization is used so that each generator adapts specifically to its input distribution. The proposed method accepts low contrast T2-weighted (T2-w) Magnetic Resonance images (MRI) and uses the corresponding high contrast T1-w MRI to learn the global contrast characteristics. The experiments were conducted on the publicly available IXI dataset. Comparison with recent CE methods and quantitative assessment using two widely used metrics, FSIM and BRISQUE, validate the superior performance of the proposed method.
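To make the Cycle-GAN idea concrete, the sketch below illustrates the cycle-consistency term that such models typically include: mapping a T2-w image to the T1-w domain and back should recover the original, and vice versa. It is a toy illustration only, assuming the standard L1 cycle loss and using scalar intensity curves in place of the UNet generators; the loss terms and weights actually used by the proposed method are not given here, and all function names are hypothetical.

```python
def mean_abs_error(a, b):
    """Mean absolute (L1) difference between two equal-length pixel lists."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(t2_pixels, t1_pixels, g_t2_to_t1, f_t1_to_t2):
    """L1 cycle loss: T2 -> T1 -> T2 and T1 -> T2 -> T1 should be identities."""
    t2_cycled = [f_t1_to_t2(g_t2_to_t1(v)) for v in t2_pixels]
    t1_cycled = [g_t2_to_t1(f_t1_to_t2(v)) for v in t1_pixels]
    return mean_abs_error(t2_cycled, t2_pixels) + mean_abs_error(t1_cycled, t1_pixels)


# Toy "generators" on intensities in [0, 1]; stand-ins for trained networks.
def stretch(v):
    return v ** 0.5  # brightens dark values


def compress(v):
    return v ** 2  # exact inverse of stretch on [0, 1]


def identity(v):
    return v  # deliberately NOT the inverse of stretch


t2 = [0.04, 0.16, 0.36, 0.64]  # made-up low contrast intensities
t1 = [0.2, 0.4, 0.6, 0.8]      # made-up high contrast intensities

loss_consistent = cycle_consistency_loss(t2, t1, stretch, compress)
loss_inconsistent = cycle_consistency_loss(t2, t1, stretch, identity)
print(loss_consistent, loss_inconsistent)
```

When the two mappings invert each other the loss is essentially zero, and it grows when they do not. In training, this term is combined with adversarial losses so that the translated images also resemble the target modality.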