Rudd and Zemach analyzed brightness/lightness matches performed with disk/annulus stimuli under four contrast polarity conditions, in which the disk was either a luminance increment or decrement with respect to the annulus, and the annulus was either an increment or decrement with respect to the background. In all four cases, the disk brightness, measured by the luminance of a matching disk, exhibited a parabolic dependence on the annulus luminance when plotted on a log-log scale. Rudd further showed that the shape of this parabolic relationship can be influenced by instructions to match the disk's brightness (perceived luminance), brightness contrast (perceived disk/annulus luminance ratio), or lightness (perceived reflectance) under different assumptions about the illumination. Here, I compare the results of those experiments with those of other recent experiments in which the match disk used to measure the target disk's appearance was not surrounded by an annulus. I model the entire body of data with a neural model involving edge integration and contrast gain control. In this model, top-down influences control the weights given to edges in the edge integration process, and these influences act either before or after the contrast gain control stage, depending on the stimulus configuration and the observer's assumptions about the nature of the illumination.
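Edge integration is commonly formalized as a weighted sum of log luminance ratios across the edges leading to a target. The sketch below is a minimal illustration of that idea for the annulus-free match disk, not the model described here: it omits contrast gain control and top-down weighting, and the function name and the weights `w_inner`, `w_outer` and `w_match` are hypothetical free parameters, not fitted values.

```python
import math


def predicted_match_luminance(disk, annulus, background, match_background,
                              w_inner=1.0, w_outer=0.5, w_match=1.0):
    """Luminance of an annulus-free match disk under plain edge integration.

    All weights are hypothetical; no contrast gain control is applied.
    """
    # Target side: weighted sum of log luminance ratios at the
    # disk/annulus (inner) and annulus/background (outer) edges.
    target_signal = (w_inner * math.log10(disk / annulus)
                     + w_outer * math.log10(annulus / background))
    # Match side: the match disk borders its background directly, so a
    # single edge carries the signal. Solve w_match * log10(M / Bm) = target.
    return match_background * 10 ** (target_signal / w_match)


# Sweep the annulus luminance for a fixed disk and background.
for annulus in (1.0, 10.0, 100.0):
    m = predicted_match_luminance(50.0, annulus, 10.0, 10.0)
    print(annulus, round(m, 3))
```

With constant weights, log M is a linear function of log annulus luminance with slope `w_outer - w_inner`, so this sketch by itself cannot produce the parabolic log-log dependence; some nonlinearity, such as the contrast gain control stage mentioned above, would have to be added.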

Grayscale images are essential in image processing and computer vision tasks. They emphasize luminance and contrast, highlighting important visual features, and they are readily compatible with many other algorithms. Moreover, their simplified representation makes them efficient for storage and transmission. While preserving contrast is important for maintaining visual quality, other factors, such as preserving information relevant to the specific application or task at hand, may be more critical for achieving optimal performance. To evaluate and compare different decolorization algorithms, we designed a psychological experiment in which participants were instructed to imagine color images in a hypothetical "colorless world" and to select the grayscale image that best resembled their mental visualization. We compared two types of algorithms: (i) perception-based, simple color space conversion algorithms, and (ii) spatial contrast-based algorithms, including iteration-based methods. Our findings indicate that CIELAB performed best on average, providing further evidence for the effectiveness of perception-based decolorization algorithms. In contrast, the spatial contrast-based algorithms performed relatively poorly, possibly due to factors such as DC offset and artificial contrast generation, although they were associated with shorter selection times. Notably, no single algorithm consistently outperformed the others across all test images. In this paper, we discuss the significance of contrast and luminance in color-to-grayscale mapping based on our experimental results and analysis.
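A simple color space conversion of the CIELAB kind can be sketched by keeping only the CIE L* (lightness) channel of each pixel. This is a minimal sketch under stated assumptions: 8-bit sRGB input, a D65 white with Y_n = 1, and a hypothetical linear mapping of L* (0 to 100) onto gray levels 0 to 255; the exact variant used in the experiment is not specified here.

```python
def srgb_to_linear(v):
    """Undo the sRGB transfer function for one 8-bit channel value."""
    c = v / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def cielab_lightness(r, g, b):
    """CIE L* of an sRGB pixel (D65 white, Y_n = 1)."""
    # Relative luminance Y: the Y row of the sRGB-to-XYZ matrix.
    y = (0.2126 * srgb_to_linear(r)
         + 0.7152 * srgb_to_linear(g)
         + 0.0722 * srgb_to_linear(b))
    delta = 6 / 29
    # CIELAB companding: cube root above the threshold, linear segment below.
    f = y ** (1 / 3) if y > delta ** 3 else y / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16


def to_gray8(r, g, b):
    """Hypothetical linear mapping of L* onto an 8-bit gray level."""
    return round(cielab_lightness(r, g, b) / 100 * 255)


# Saturated primaries map to very different grays because L* tracks luminance.
for name, rgb in {"red": (255, 0, 0), "green": (0, 255, 0), "blue": (0, 0, 255)}.items():
    print(name, to_gray8(*rgb))
```

Because this mapping is purely per pixel, two colors with equal L* but different hue collapse onto the same gray; that is the kind of contrast loss the spatial contrast-based algorithms try to avoid.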

Nowadays, industrial gloss evaluation is mostly limited to the specular gloss meter, which focuses on a single attribute of surface gloss. The correlation of such meters with human gloss appraisal is therefore rather weak. Although more advanced image-based gloss meters have become available, their application is typically restricted to niche industries due to their high cost and complexity. This paper extends a previous design of a comprehensive and affordable image-based gloss meter (iGM) for the determination of each of the five main attributes of surface gloss (specular gloss, DOI, haze, contrast and surface-uniformity gloss). Along with an extensive introduction to surface gloss and its evaluation, the paper describes the iGM design and illustrates some of its capabilities and opportunities.

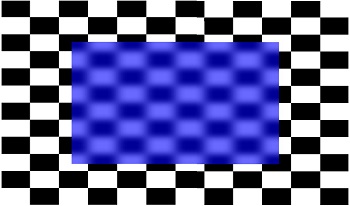

Translucency is an appearance attribute that results primarily from subsurface scattering of light. The visual perception of translucency has gained attention over the past two decades. However, most studies address thick, complex 3D objects that completely occlude the background. The perception of transparency in flat, thin see-through filters, on the other hand, has been studied more extensively. Despite this, the perception of translucency in see-through filters that do not completely occlude the background remains understudied. In this work, we manipulated the sharpness and contrast of black-and-white checkerboard patterns to simulate the impression of see-through filters. We then conducted paired-comparison psychophysical experiments to measure how the amount of background blur and contrast relates to perceived translucency. We found that while both blur and contrast affect translucency, the relationship is neither monotonic nor straightforward.
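Stimuli of this kind can be sketched as a checkerboard that is first blurred and then reduced in contrast. This is a minimal illustration under assumptions not stated in the study: Gaussian blur as the sharpness manipulation, linear contrast scaling about mid-gray (0.5), wrap-around borders, and hypothetical default sizes and parameter values.

```python
import math


def checkerboard(size, check):
    """size x size black-and-white checkerboard with values 0.0 and 1.0."""
    return [[float((x // check + y // check) % 2) for x in range(size)]
            for y in range(size)]


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian weights truncated at about 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(img, sigma):
    """Separable Gaussian blur with wrap-around borders (sigma <= 0: no blur)."""
    if sigma <= 0:
        return [row[:] for row in img]
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    # Horizontal pass, then vertical pass; indices wrap so the pattern stays periodic.
    tmp = [[sum(k[j] * img[y][(x + j - r) % w] for j in range(len(k)))
            for x in range(w)] for y in range(h)]
    return [[sum(k[j] * tmp[(y + j - r) % h][x] for j in range(len(k)))
             for x in range(w)] for y in range(h)]


def scale_contrast(img, contrast, mean=0.5):
    """Scale deviations from `mean` by `contrast` (1.0 leaves the image unchanged)."""
    return [[mean + contrast * (v - mean) for v in row] for row in img]


def michelson_contrast(img):
    """(max - min) / (max + min) over the whole image."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    return (hi - lo) / (hi + lo)


def filter_stimulus(size=64, check=8, sigma=2.0, contrast=0.5):
    """Hypothetical see-through-filter stimulus: blurred, contrast-reduced checkerboard."""
    return scale_contrast(blur(checkerboard(size, check), sigma), contrast)


for sigma in (0.0, 1.0, 2.0, 4.0):
    print(sigma, round(michelson_contrast(filter_stimulus(sigma=sigma)), 3))
```

The wrap-around border keeps the pattern seamless when `size` is a multiple of `2 * check`; with edge clamping instead, corner checks far from any internal edge would stay unblurred. Note that blur also lowers the Michelson contrast of the pattern, so the two manipulations are not fully independent in this parameterization.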

Computer simulations of an extended version of a neural model of lightness perception [1,2] are presented. The model provides a unitary account of several key aspects of spatial lightness phenomenology, including contrast and assimilation, and asymmetries in the strengths of lightness and darkness induction. It does so by invoking mechanisms that have also been shown to account for the overall magnitude of dynamic range compression in experiments involving lightness matches made to real-world surfaces [2]. The model's assumptions are derived partly from parametric measurements of the responses of ON and OFF cells in the lateral geniculate nucleus of the macaque monkey [3,4] and partly from quantitative human psychophysical measurements. The model's computations and architecture are consistent with the properties of human visual neurophysiology as they are currently understood. Through the simulations, the neural model's predictions and behavior are contrasted with those of other lightness models, including Retinex theory [5] and the lightness filling-in models of Grossberg and his colleagues [6].
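One simple way to express separate ON and OFF pathways is to half-wave rectify a local log luminance ratio and give each channel its own gain. The sketch below illustrates only that idea and is not the model's published equations; the function name and the gains `gain_on` and `gain_off` are hypothetical, and unequal gains are just one assumed way to encode an asymmetry between lightness and darkness signals.

```python
import math


def on_off_responses(center, surround, gain_on=1.0, gain_off=1.0):
    """Half-wave rectified ON and OFF responses to a local log luminance ratio.

    gain_on and gain_off are hypothetical free parameters.
    """
    log_contrast = math.log10(center / surround)
    on = gain_on * max(0.0, log_contrast)     # ON channel: increments only
    off = gain_off * max(0.0, -log_contrast)  # OFF channel: decrements only
    return on, off


# Equal-magnitude increment and decrement with a stronger (hypothetical) OFF gain.
print(on_off_responses(2.0, 1.0, gain_off=2.0))
print(on_off_responses(1.0, 2.0, gain_off=2.0))
```

For any single edge only one channel responds, so a signed edge signal can be recovered as `on - off`; with `gain_off > gain_on`, a decrement produces a larger signal than an increment of the same log contrast.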

Contrast sensitivity functions (CSFs) describe the smallest visible contrast across a range of stimulus and viewing parameters. CSFs are useful for imaging and video applications, as contrast thresholds describe the maximum color reproduction error that remains invisible to the human observer. However, existing CSFs are limited. First, they are typically defined only for achromatic contrast. Second, even when they are defined for chromatic contrast, the thresholds are described along the cardinal dimensions of linear opponent color spaces and are therefore difficult to relate to the dimensions of more commonly used color spaces, such as sRGB or CIE L*a*b*. Here, we adapt a recently proposed CSF to what we call color threshold functions (CTFs), which describe thresholds for color differences in more commonly used color spaces. We include color spaces with a standard dynamic range gamut (sRGB, YCbCr, CIE L*a*b*, CIE L*u*v*) and a high dynamic range gamut (PQ-RGB, PQ-YCbCr and ICTCP). Using CTFs, we analyze these color spaces in terms of coding efficiency and contrast threshold uniformity.
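For the PQ-based spaces, coding efficiency comes down to how large a luminance step one code value represents. The sketch below implements the SMPTE ST 2084 (PQ) encoding and decoding for luminance and computes the Weber contrast of one code step at a given luminance. It does not reproduce the CTFs themselves; a CTF-based analysis would presumably compare this step contrast against the visibility threshold at that luminance. The helper `code_step_contrast` and the choice of Weber contrast are assumptions for illustration.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK = 10000.0  # cd/m^2, luminance mapped to signal value 1.0


def pq_encode(luminance):
    """Absolute luminance (cd/m^2) -> PQ signal in [0, 1]."""
    y = max(luminance, 0.0) / PEAK
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def pq_decode(signal):
    """PQ signal in [0, 1] -> absolute luminance (cd/m^2)."""
    e = signal ** (1 / M2)
    y = (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)
    return y * PEAK


def code_step_contrast(luminance, bits=10):
    """Weber contrast between adjacent PQ code values near `luminance` (hypothetical helper)."""
    levels = 2 ** bits - 1
    # Clamp so both neighbouring codes are valid and the lower one is nonzero.
    code = max(1, min(levels - 1, round(pq_encode(luminance) * levels)))
    lo = pq_decode(code / levels)
    hi = pq_decode((code + 1) / levels)
    return (hi - lo) / lo


for lum in (0.1, 1.0, 100.0, 1000.0):
    print(lum, round(pq_encode(lum), 4), round(code_step_contrast(lum), 5))
```

Adding bits shrinks every step, so at a fixed bit depth a space is used efficiently when its step contrasts stay just below threshold across the luminance range rather than far below it in some regions and above it in others.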