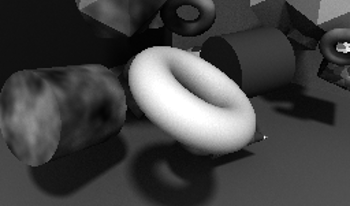

Lightness perception is a long-standing topic in research on human vision, but very few image-computable models of lightness have been formulated. Recent work in computer vision has used artificial neural networks and deep learning to estimate surface reflectance and other intrinsic image properties. Here we investigate whether such networks are useful as models of human lightness perception. We train a standard deep learning architecture on a novel image set that consists of simple geometric objects with a few different surface reflectance patterns. We find that the model performs well on this image set, generalizes well across small variations, and outperforms three other computational models. The network has partial lightness constancy, much like human observers, in that illumination changes have a systematic but moderate effect on its reflectance estimates. However, the network generalizes poorly beyond the type of images in its training set: it fails on a lightness matching task with unfamiliar stimuli, and does not account for several lightness illusions experienced by human observers.

Research on human lightness perception has revealed important principles of how we perceive achromatic surface color, but has resulted in few image-computable models. Here we examine the performance of a recent artificial neural network architecture in a lightness matching task. We find similarities between the network's behavior and human perception. The network has human-like levels of partial lightness constancy, and its log reflectance matches are an approximately linear function of log illuminance, as is the case with human observers. We also find that previous computational models of lightness perception have much weaker lightness constancy than is typical of human observers. We briefly discuss some challenges and possible future directions for using artificial neural networks as a starting point for models of human lightness perception.
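If log reflectance matches are an approximately linear function of log illuminance, the slope of that line gives a compact way to express how much constancy an observer or model has. A slope of 0 means the matches ignore illumination entirely (perfect constancy). A slope of 1 means the matches track the target's luminance, which is reflectance times illuminance (no constancy). Partial constancy falls in between. The sketch below illustrates this idea under stated assumptions; it is not the analysis used in the study. The function names `constancy_slope` and `constancy_index`, the choice of defining the index as 1 minus the slope, and the example data are all hypothetical.

```python
import math


def constancy_slope(illuminances, matched_reflectances):
    """Least-squares slope of log matched reflectance vs. log illuminance.

    Hypothetical helper. The log base does not matter because both axes
    use the same base, so the slope is unchanged.
    Slope 0 -> perfect constancy; slope 1 -> matches track luminance.
    """
    xs = [math.log(e) for e in illuminances]
    ys = [math.log(r) for r in matched_reflectances]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least squares: slope = cov(x, y) / var(x)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def constancy_index(slope):
    """Assumed index: 1 = perfect constancy, 0 = no constancy."""
    return 1.0 - slope


# Hypothetical, noise-free observer whose matches follow r = 0.5 * E**0.3,
# i.e. illumination has a systematic but moderate effect on the matches.
illum = [0.25, 0.5, 1.0, 2.0, 4.0]
matches = [0.5 * e ** 0.3 for e in illum]
s = constancy_slope(illum, matches)
print(round(s, 3))                   # prints 0.3
print(round(constancy_index(s), 3))  # prints 0.7
```

With real matching data the points would scatter around the fitted line. How well a straight line fits in log-log coordinates is itself a check on the linearity claim, separate from the slope value.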