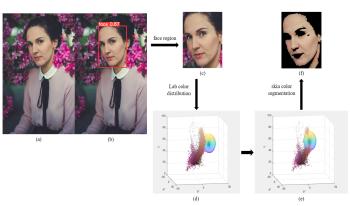

In white balance, images dominated by large colored backgrounds remain a problem. To address this issue, a white balance algorithm based on facial skin color is proposed. A neural network-based object detection algorithm and an adaptive threshold segmentation algorithm are combined to accurately segment skin-color pixels. A three-dimensional color gamut mapping method in the CIELAB color space is then used to estimate the illumination. Finally, the CAT16 model is applied to render the images under a standard lighting condition. In addition, a dataset of poorly white-balanced images taken against large colored backgrounds was prepared to test the performance of the proposed algorithm and of other algorithms. The results show that the proposed algorithm performs better than the others on this dataset.
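The final rendering step can be illustrated with a minimal sketch of a CAT16 chromatic adaptation transform. This is not the authors' implementation: it assumes full adaptation (degree of adaptation D = 1), uses the CAT16 matrix coefficients as published with CAM16 (worth checking against the reference before reuse), and takes illuminant A as a stand-in for the estimated source white. The function name `cat16_adapt` is hypothetical.

```python
import numpy as np

# CAT16 matrix (XYZ -> sharpened cone-like RGB), as published with CAM16.
M16 = np.array([
    [ 0.401288, 0.650173, -0.051461],
    [-0.250268, 1.204414,  0.045854],
    [-0.002079, 0.048952,  0.953127],
])

# Standard white points (CIE 1931 2-degree observer, Y normalized to 100).
WHITE_A = np.array([109.850, 100.000, 35.585])
WHITE_D65 = np.array([95.047, 100.000, 108.883])


def cat16_adapt(xyz, white_src, white_dst):
    """Map XYZ values seen under white_src to their appearance under white_dst.

    Assumes full adaptation (D = 1), so the transform reduces to a
    von Kries-style diagonal scaling in the CAT16 space.
    Accepts a single XYZ triplet or an array of shape (..., 3).
    """
    xyz = np.asarray(xyz, dtype=float)
    rgb_src = M16 @ np.asarray(white_src, dtype=float)
    rgb_dst = M16 @ np.asarray(white_dst, dtype=float)
    gains = rgb_dst / rgb_src                      # per-channel scaling
    adapt = np.linalg.inv(M16) @ np.diag(gains) @ M16
    return xyz @ adapt.T


# The source white itself should land on the destination white.
adapted_white = cat16_adapt(WHITE_A, WHITE_A, WHITE_D65)
```

In a pipeline like the one described, `white_src` would be the illuminant estimated from the skin pixels and `white_dst` the chosen standard illuminant.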

The first paper investigating the use of machine learning to learn the relationship between an image of a scene and the color of the scene illuminant was published by Funt et al. in 1996. Specifically, they investigated if such a relationship could be learned by a neural network. During the last 30 years we have witnessed a remarkable series of advancements in machine learning, and in particular deep learning approaches based on artificial neural networks. In this paper we want to update the method by Funt et al. by including recent techniques introduced to train deep neural networks. Experimental results on a standard dataset show how the updated version can improve the median angular error in illuminant estimation by almost 51% with respect to its original formulation, even outperforming recent illuminant estimation methods.
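For reference, the angular error used to score illuminant estimates is the angle between the estimated and ground-truth illuminant vectors in RGB space. The sketch below computes it with NumPy; the function name `angular_error_deg` and the toy vectors are illustrative assumptions, not data from the paper.

```python
import numpy as np


def angular_error_deg(estimate, ground_truth):
    """Angle in degrees between illuminant vectors; works on (..., 3) arrays."""
    e = np.asarray(estimate, dtype=float)
    g = np.asarray(ground_truth, dtype=float)
    cos = np.sum(e * g, axis=-1) / (
        np.linalg.norm(e, axis=-1) * np.linalg.norm(g, axis=-1)
    )
    # Clip to guard against rounding slightly outside [-1, 1].
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


# Toy example with made-up vectors: one error per image, then the median.
estimates = np.array([[1.0, 1.0, 1.0], [1.0, 0.0, 0.0]])
truths = np.array([[2.0, 2.0, 2.0], [0.0, 1.0, 0.0]])
errors = angular_error_deg(estimates, truths)
median_error = np.median(errors)
```

Because the measure depends only on direction, scaling either vector leaves the error unchanged, which is why illuminant estimates are usually compared without regard to overall intensity.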

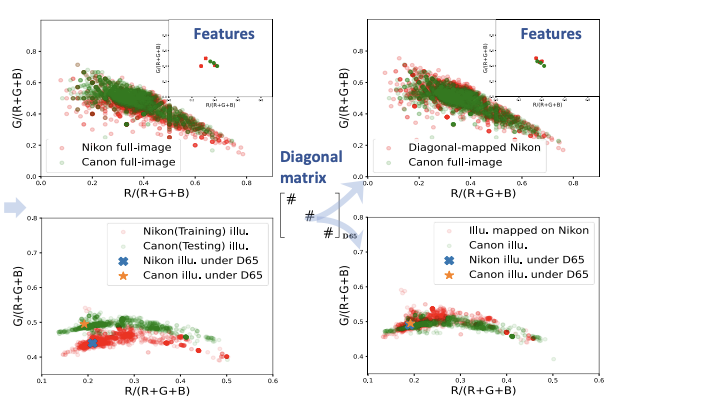

Deep neural networks (DNNs) have been widely used for illumination estimation, but training them is time-consuming and requires sensor-specific data collection. Our proposed method uses a dual-mapping strategy and only requires a simple white point from a test sensor under a D65 condition. This allows us to derive a mapping matrix, enabling the reconstruction of image data and illuminants. In the second mapping phase, we transform the reconstructed image data into sparse features, which are then optimized with a lightweight multi-layer perceptron (MLP) model using the reconstructed illuminants as ground truths. This approach effectively reduces sensor discrepancies and delivers performance on par with leading cross-sensor methods. It requires only a small amount of memory (∼0.003 MB) and takes ∼1 hour to train on an RTX3070Ti GPU. More importantly, the method runs very fast, taking ∼0.3 ms on a GPU and ∼1 ms on a CPU, and is not sensitive to the input image resolution. It therefore offers a practical solution to the major challenge of data recollection faced by the industry.

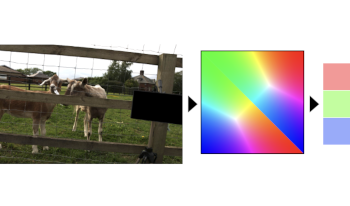

An object’s color is affected by the color of the light incident upon it, and the illuminant-dependent nature of color creates problems for convolutional neural networks performing tasks such as image classification and object recognition. Such networks would benefit from an illuminant-invariant representation of the image colors. The Laplacian of the logarithm of the image is introduced as an effective color invariant. Applying the Laplacian in log space makes the input colors approximately illumination-invariant. The illumination invariance derives from the fact that finite-difference differentiation in log space is equivalent to taking ratios of neighboring pixels in the original space. For narrow-band sensors, ratioing neighboring pixels cancels out their shared illumination component. The resulting color representation is no longer absolute, but rather is a relative color representation. Testing shows that when the Laplacian of the logarithm is used as input to a convolutional neural network designed for classification, its performance is: (i) approximately equal to that of the same network trained on sRGB data under white light, and (ii) largely unaffected by changes in the illumination.
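The invariance argument can be checked numerically with a small sketch. It assumes a diagonal (per-channel scaling) illumination model, which is the narrow-band sensor case, and uses a plain 4-neighbor finite-difference Laplacian; the function name `log_laplacian` and the small `eps` offset are illustrative choices, not details from the paper.

```python
import numpy as np


def log_laplacian(image, eps=1e-6):
    """4-neighbor Laplacian of the log image, computed on interior pixels.

    image: array of shape (H, W, C) with non-negative values.
    Returns an array of shape (H - 2, W - 2, C).
    """
    log_img = np.log(np.asarray(image, dtype=float) + eps)
    center = log_img[1:-1, 1:-1]
    return (
        log_img[:-2, 1:-1] + log_img[2:, 1:-1]      # up + down
        + log_img[1:-1, :-2] + log_img[1:-1, 2:]    # left + right
        - 4.0 * center
    )


# Same scene under two illuminants, modeled as per-channel gains.
rng = np.random.default_rng(0)
scene = rng.uniform(0.1, 1.0, size=(8, 8, 3))
reddish = scene * np.array([1.8, 1.0, 0.4])

invariant_white = log_laplacian(scene)
invariant_reddish = log_laplacian(reddish)
max_difference = np.abs(invariant_white - invariant_reddish).max()
```

In log space the per-channel gain becomes an additive constant, and the Laplacian coefficients sum to zero, so the constant cancels; `max_difference` is nonzero only because of the `eps` offset.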

Cataract surgery replaces the aged human lens with a transparent silicone implant. The new lens removes optical distortions and light-scattering media, as well as a yellow filter. This talk describes the appearances of natural scenes before and after cataract surgery. While Color Constancy experiments showed small changes, Color Discrimination experiments showed large changes. These results provide a mechanistic signature of Color Constancy and Discrimination.

Without sunlight, imaging devices typically depend on various artificial light sources. However, scenes captured under artificial light sources often violate the assumptions employed in color constancy algorithms. Such violations, for example non-uniform illumination or multiple light sources, can disturb computer vision algorithms. In this paper, complex illumination from multiple artificial light sources is decomposed into its individual illuminants by considering the sensor responses and the spectra of the artificial light sources, and fundamental color constancy algorithms (e.g., gray-world, gray-edge, etc.) are improved by employing the estimated illumination energy. The proposed method effectively improves on the conventional methods, and the results of the proposed algorithms are demonstrated on images captured under laboratory settings to measure the accuracy of the color representation.
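As background, the two baseline algorithms named above can be sketched as follows. These are the textbook single-illuminant versions (gray-edge shown as first-order, without smoothing or a Minkowski norm), not the improved variants proposed in the paper; the function names are illustrative.

```python
import numpy as np


def gray_world(image):
    """Estimate the illuminant as the per-channel mean of the image."""
    est = np.asarray(image, dtype=float).reshape(-1, 3).mean(axis=0)
    return est / np.linalg.norm(est)


def gray_edge(image):
    """Estimate the illuminant as the per-channel mean gradient magnitude."""
    image = np.asarray(image, dtype=float)
    gy, gx = np.gradient(image, axis=(0, 1))   # spatial derivatives per channel
    magnitude = np.sqrt(gx ** 2 + gy ** 2)
    est = magnitude.reshape(-1, 3).mean(axis=0)
    return est / np.linalg.norm(est)


# Synthetic check: an achromatic scene lit by a single colored illuminant.
rng = np.random.default_rng(0)
reflectance = rng.uniform(0.0, 1.0, size=(16, 16, 1))
illuminant = np.array([1.0, 0.7, 0.4])
image = reflectance * illuminant               # broadcasts to (16, 16, 3)

expected = illuminant / np.linalg.norm(illuminant)
gw_estimate = gray_world(image)
ge_estimate = gray_edge(image)
```

Both estimators recover the illuminant here only because the scene is achromatic and uniformly lit, which is exactly the assumption that mixed artificial lighting tends to break.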

A method for illuminant color estimation in an image under multiple illuminations is proposed. In most conventional methods, the image is divided into small regions and the local illuminant in each region is estimated by applying a single-illuminant method. The scene illuminants are then estimated by unifying the derived local illuminants. The single-illuminant methods used are typically gray-world, white-patch, first-order and second-order gray-edge, and so on. However, these methods are not properly modified for this use, which leaves room for improvement in illuminant color estimation. The proposed method is gray-world based and is applied to each small region to estimate the local illuminant. It has two features. The first is the selection of small regions: the method uses criteria to judge whether a region satisfies the gray-world assumption and estimates local illuminants only in the selected regions. The second is the use of multi-layered small regions: in general, the appropriate region size depends on the image, so regions of several sizes corresponding to the resolution are used and unified. Experimental results using Mondrian pattern images under reddish and white illuminants show that the estimation error of the proposed method is smaller than that of the conventional one.
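The overall structure of such a region-based scheme can be sketched as below. The abstract does not state the selection criteria or the unification rule, so both are assumptions here: a block is kept only if its chromaticities vary enough (a hypothetical stand-in for "satisfies the gray-world assumption"), and the layers are unified by averaging the local estimates that cover each pixel. The function name, block sizes, and threshold are all illustrative.

```python
import numpy as np


def local_gray_world_map(image, block_sizes=(8, 16), min_chroma_std=0.02):
    """Per-pixel illuminant map from block-wise gray-world estimates.

    image: (H, W, 3) array of non-negative values.
    Returns an (H, W, 3) map; pixels covered by no selected block are NaN.
    """
    image = np.asarray(image, dtype=float)
    h, w, _ = image.shape
    acc = np.zeros((h, w, 3))
    count = np.zeros((h, w, 1))
    for size in block_sizes:                    # one layer per block size
        for y in range(0, h, size):
            for x in range(0, w, size):
                block = image[y:y + size, x:x + size].reshape(-1, 3)
                sums = block.sum(axis=1, keepdims=True)
                valid = sums[:, 0] > 0
                if not valid.any():
                    continue
                chroma = block[valid] / sums[valid]
                # Hypothetical selection criterion: skip blocks whose colors
                # are too uniform for the gray-world average to be meaningful.
                if chroma.std(axis=0).mean() < min_chroma_std:
                    continue
                est = block.mean(axis=0)        # gray-world estimate
                est = est / np.linalg.norm(est)
                acc[y:y + size, x:x + size] += est
                count[y:y + size, x:x + size] += 1
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(count > 0, acc / count, np.nan)


# Synthetic two-illuminant scene: reddish light on the left, white on the right.
rng = np.random.default_rng(0)
reflectance = rng.uniform(0.05, 1.0, size=(16, 32, 3))
light = np.ones((16, 32, 3))
light[:, :16] = np.array([1.0, 0.6, 0.4])
illuminant_map = local_gray_world_map(reflectance * light)
```

On this toy scene the left half of `illuminant_map` leans red while the right half stays close to neutral; a real evaluation would compare the map against measured illuminants, for example on Mondrian pattern images as in the abstract.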