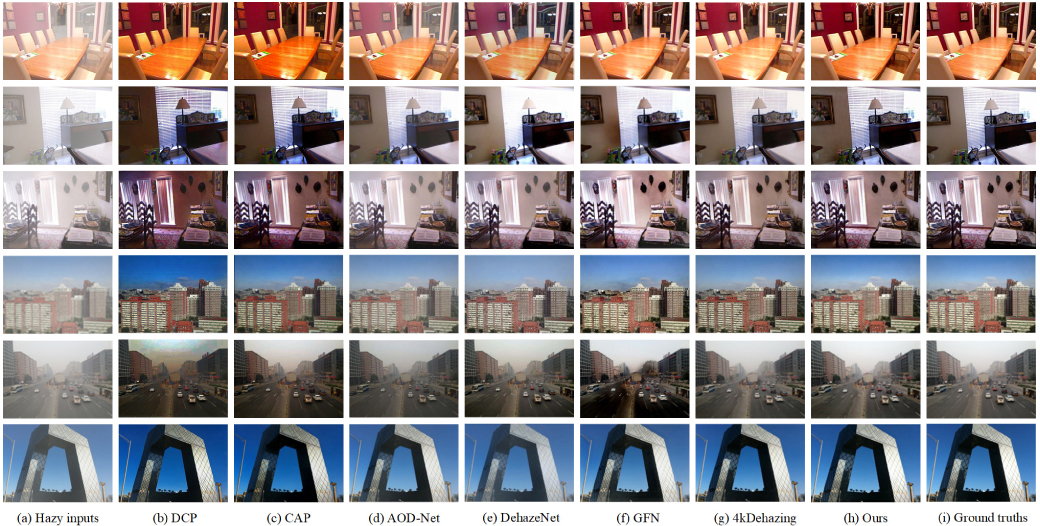

We propose a new convolutional neural network, the Physics-guided Encoder–Decoder Network (PEDNet), for end-to-end single image dehazing. A reformulated atmospheric scattering model is embedded into the network so that it is learned end to end. The overall structure is an encoder–decoder that extracts contextual information at four different scales and fuses it through skip connections. In addition, because haze is unevenly distributed in the real world, we design a Res2FA module based on Res2Net; it introduces a Feature Attention block that focuses on important information at a finer granularity. Because it employs a physically driven dehazing model, PEDNet adapts better to various types of hazy images. Ablation experiments demonstrate the efficacy of each network module. Experimental results on both synthetic and real-world datasets show that the proposed method outperforms current state-of-the-art methods.
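For context, the standard atmospheric scattering model that physics-based dehazing builds on is commonly written as I(x) = J(x)t(x) + A(1 − t(x)), where I is the hazy image, J the haze-free scene, t the transmission map, and A the atmospheric light. The abstract does not spell out PEDNet's reformulation, so the NumPy sketch below illustrates only this standard model and its algebraic inversion. The function names and the `t_min` clamp are illustrative assumptions, not part of the proposed network.

```python
import numpy as np

def synthesize_haze(J, t, A):
    """Apply the standard model I = J * t + A * (1 - t).

    J: haze-free image, shape (H, W, 3), values in [0, 1]
    t: transmission map, shape (H, W), values in (0, 1]
    A: atmospheric light, shape (3,)
    """
    t = t[..., None]  # add a channel axis so t broadcasts over RGB
    return J * t + A * (1.0 - t)

def recover_scene(I, t, A, t_min=0.1):
    """Invert the model: J = (I - A) / t + A.

    t is clamped to t_min (an assumed value) to avoid dividing by
    very small transmissions in dense haze.
    """
    t = np.maximum(t, t_min)[..., None]
    return (I - A) / t + A

# Round trip on random data: hazing then inverting recovers the scene.
rng = np.random.default_rng(0)
J = rng.uniform(0.0, 1.0, size=(4, 4, 3))
t = rng.uniform(0.3, 0.9, size=(4, 4))
A = np.array([0.9, 0.9, 0.9])

I = synthesize_haze(J, t, A)
J_hat = recover_scene(I, t, A)
```

In practice t and A are unknown and must be estimated, which is the quantity a physics-guided network learns, directly or in a reformulated form.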

Haze is one of the sources of image degradation. It reduces contrast and saturation not only in general real-world images but also in road scenes. Most haze removal algorithms use an atmospheric scattering model to remove the effect of haze, and most of them are based on the single scattering model, which does not account for the blur in a hazy image. In this paper, we propose a novel haze removal algorithm that uses a multiple scattering model with deconvolution and therefore accounts for the blurring effect in the hazy image. The hazy image is also downsampled so that the atmospheric light can be estimated efficiently. Synthetic road scenes with and without haze are used to evaluate the performance of the proposed method. Experimental results demonstrate that the proposed algorithm performs better at restoring haze-affected images, both qualitatively and quantitatively.
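The idea of estimating atmospheric light on a downsampled image can be sketched as follows. The abstract does not describe the actual estimator, so this example substitutes a simple, commonly used heuristic: block-average the image, then average the brightest pixels of the small image. The function names, the downsampling factor, and `top_fraction` are assumptions chosen for illustration.

```python
import numpy as np

def downsample(img, factor):
    """Downsample an (H, W, C) image by averaging factor x factor blocks."""
    h, w, c = img.shape
    h2, w2 = h // factor, w // factor
    img = img[:h2 * factor, :w2 * factor]  # crop so blocks divide evenly
    return img.reshape(h2, factor, w2, factor, c).mean(axis=(1, 3))

def estimate_atmospheric_light(img, factor=4, top_fraction=0.01):
    """Estimate A as the mean colour of the brightest downsampled pixels.

    This is a stand-in heuristic, not the estimator from the paper.
    """
    small = downsample(img, factor)
    pixels = small.reshape(-1, small.shape[-1])
    brightness = pixels.mean(axis=1)
    k = max(1, int(round(top_fraction * len(pixels))))
    brightest = np.argsort(brightness)[-k:]  # indices of the k brightest pixels
    return pixels[brightest].mean(axis=0)

# Toy image: dark everywhere except one bright 4x4 block.
img = np.zeros((8, 8, 3))
img[:4, :4] = 0.8
A_est = estimate_atmospheric_light(img, factor=4)
```

Working on the downsampled image reduces the number of pixels to sort by a factor of `factor ** 2`, and the block averaging also suppresses isolated bright pixels that would otherwise bias the estimate.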