Visual content can convey and influence human emotions. Understanding which emotions are being communicated, and how the visual elements of an image imply them, is therefore crucial. This study evaluates the aesthetic emotions evoked by portrait art generated by our Generative AI Portraiture System. Using the Visual Aesthetic Wheel of Emotion (VAWE), we documented aesthetic responses and then analyzed them with heatmaps and circular histograms to identify the emotions evoked by the generated portraits. Data from 160 participants were used to categorize and validate VAWE's 20 emotions against selected AI portrait styles. These data were then used in a smaller qualitative self-portrait study to validate the prototype of an Emotionally Aware Portrait System, which generates a personalized stylization of a user's self-portrait expressing a particular aesthetic emotional state from VAWE. The findings offer a new vision for blending affective computing with computational creativity and for giving generative systems awareness of the emotions their output is meant to elicit.
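The heatmap and circular-histogram analysis can be sketched as follows. This is a minimal illustration, not the study's code: it assumes each response is recorded as a (style, emotion index) pair, that the 20 VAWE emotions sit at evenly spaced angles around the wheel, and the function names are hypothetical. The heatmap is a styles × emotions count matrix; the circular summary gives the mean direction and resultant length (near 1 when responses cluster on one emotion, near 0 when they spread evenly).

```python
import math

N_EMOTIONS = 20  # VAWE has 20 emotions; evenly spaced wheel positions are an assumption


def emotion_heatmap(responses, styles):
    """Count (style, emotion_index) responses into a styles x emotions matrix."""
    row = {style: i for i, style in enumerate(styles)}
    grid = [[0] * N_EMOTIONS for _ in styles]
    for style, emotion in responses:
        grid[row[style]][emotion] += 1
    return grid


def circular_summary(counts):
    """Mean direction (degrees) and resultant length of a circular histogram.

    counts[i] is the number of responses for the emotion at angle i * 360 / len(counts).
    """
    total = sum(counts)
    if total == 0:
        raise ValueError("need at least one response")
    step = 2 * math.pi / len(counts)
    x = sum(c * math.cos(i * step) for i, c in enumerate(counts)) / total
    y = sum(c * math.sin(i * step) for i, c in enumerate(counts)) / total
    angle = math.degrees(math.atan2(y, x)) % 360
    return angle, math.hypot(x, y)
```

For example, `circular_summary(emotion_heatmap(responses, styles)[k])` summarizes how concentrated the emotional response to style `k` is, which complements the raw heatmap row.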

The challenges of film restoration demand versatile tools, which makes machine learning (ML), through the training of custom models, an ideal solution. This research demonstrates that custom models can effectively restore color in deteriorated films, even without direct references, and can recover spatial features using techniques such as gauge and analog video reference recovery. A key advantage of this approach is that it can address restoration tasks that are difficult or impossible with traditional methods, which rely on spatial and temporal filters. Although general-purpose video generation models such as Runway, Sora, and Pika Labs have advanced significantly, they often fall short in film restoration because of limited temporal consistency, artifact generation, and a lack of precise control. Custom ML models address this by providing targeted restoration while overcoming the inherent limitations of conventional filtering techniques. Results from employing these local models are promising; however, developing highly specific models tailored to individual restoration scenarios is crucial for greater efficiency.
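To make the contrast with "traditional methods, which rely on spatial and temporal filters" concrete, here is a minimal sketch of one such conventional filter: a per-pixel temporal median, a common way to suppress single-frame defects such as dust. It is an illustration of the baseline class, not the authors' pipeline; the function name and `radius` parameter are hypothetical. Because it assumes the scene is static across the window, moving content gets smeared, which is one of the limitations that motivates learned models.

```python
import numpy as np


def temporal_median(frames: np.ndarray, radius: int = 1) -> np.ndarray:
    """Replace each frame by the per-pixel median of its temporal neighbourhood.

    frames: array of shape (T, H, W) or (T, H, W, C).
    At the start and end of the sequence the window is shorter.
    For integer dtypes the median is cast back to the input dtype.
    """
    t = frames.shape[0]
    out = np.empty_like(frames)
    for i in range(t):
        lo, hi = max(0, i - radius), min(t, i + radius + 1)
        out[i] = np.median(frames[lo:hi], axis=0)
    return out
```

A speck present in only one frame is outvoted by its neighbours, while anything that stays put across frames is preserved.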

We applied computational style transfer, specifically of coloration and brush-stroke style, to achromatic images of a ghost painting beneath Vincent van Gogh's <i>Still life with meadow flowers and roses</i>. Our method extends our previous work in that it uses representative artworks by the ghost painting's author to train a Generative Adversarial Network (GAN) that integrates styles learned from stylistically distinct groups of works. An effective amalgam of these learned styles is then transferred to the target achromatic work.

We discuss the problem of computationally generating images that resemble lost cultural patrimony, specifically two-dimensional artworks such as paintings and drawings. We view the problem as one of computing an estimate of the image in the lost work that best conforms to surviving information in a variety of forms: works by the source artist, including preparatory works such as cartoons for the target work; copies of the target by other artists; other works by these artists that reveal aspects of their style; historical knowledge of art methods and materials; stylistic conventions of the relevant era; textual descriptions of the lost work; and, more generally, images associated with the stories given by the target's title. Some of the general information linking images and text can be learned from large corpora of natural photographs and accompanying text scraped from the web. We present some preliminary proof-of-concept simulations for recovering lost artworks, with a special focus on textual information about the target artworks. We outline our future directions, such as methods for assessing the contributions of different forms of information to the overall task of recovering lost artworks.

As Machine Vision (MV) and Artificial Intelligence (AI) systems are incorporated into an ever-increasing range of imaging applications, there is a corresponding need for camera measurements that can accurately predict the performance of these systems. At present, standard practice is to measure the two major factors, sharpness and noise (or signal-to-noise ratio), separately, along with several additional factors, and then to estimate system performance from a combination of these factors. This estimate is usually based on experience and is often more art than science. Camera information capacity (C), based on Claude Shannon's groundbreaking work on information theory, holds great promise as a figure of merit for a variety of imaging systems, but it has traditionally been difficult to measure. We describe a new method for measuring camera information capacity that uses the popular slanted-edge test pattern specified by the ISO 12233:2014/2017 standard. Measuring information capacity requires no extra effort: it essentially comes for free with slanted-edge MTF (modulation transfer function) measurements. C has units of bits per pixel or bits per image for a specified ISO speed and chart contrast, making it easy to compare very different cameras. The new measurement can be used to solve important problems such as finding a camera that meets information capacity requirements with the minimum number of pixels, which matters because fewer pixels mean faster processing as well as lower cost.
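The idea behind C can be illustrated with Shannon's capacity for a channel whose signal-to-noise ratio varies with frequency, C = ∫₀ᴮ log₂(1 + S(f)/N(f)) df. The sketch below is a one-dimensional simplification and not the ISO 12233 or slanted-edge procedure described above: it assumes white noise, a signal power spectrum modelled as `snr0 * MTF(f)**2`, and a band limit B = 0.5 cycles/pixel (Nyquist), which gives bits per pixel along one dimension. The function name and parameters are hypothetical, and the paper's 2D normalization may differ.

```python
import numpy as np


def information_capacity_1d(mtf, snr0: float, n: int = 1001) -> float:
    """Shannon capacity C = integral over [0, 0.5] of log2(1 + S(f)/N(f)) df.

    mtf:  callable returning the system MTF at spatial frequency f (cycles/pixel).
    snr0: assumed signal-to-noise power ratio at zero frequency; signal power is
          modelled as snr0 * MTF(f)**2 against flat (white) noise.
    Returns bits per pixel along one dimension.
    """
    f = np.linspace(0.0, 0.5, n)
    snr = snr0 * np.asarray(mtf(f), dtype=float) ** 2
    integrand = np.log2(1.0 + snr)
    # Trapezoidal rule on the uniform frequency grid.
    df = f[1] - f[0]
    return float(df * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1])))
```

With a perfect MTF and `snr0=3`, the integrand is log₂(4) = 2 everywhere and C = 0.5 × 2 = 1 bit per pixel; a softer (e.g. Gaussian) MTF lowers C, showing how sharpness and noise are folded into one figure of merit.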

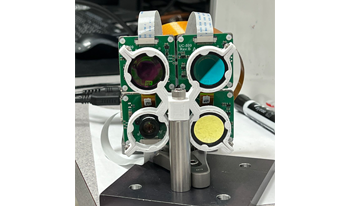

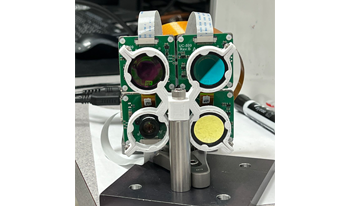

We demonstrate a physics-aware transformer for feature-based data fusion from cameras with diverse resolutions, color spaces, focal planes, focal lengths, and exposures. We also demonstrate a scalable solution for generating synthetic training data for the transformer using open-source computer graphics software. Finally, we demonstrate image synthesis on arrays with diverse spectral responses, instantaneous fields of view, and frame rates.

Machine learning for scientific imaging is a rapidly growing area of research used to characterize physical, material, chemical, and biological processes in both large- and small-scale scientific experiments. Physics-inspired machine learning differs from more general machine learning research in that it emphasizes quantitative reproducibility and the incorporation of physical models. ML methods used for scientific imaging typically incorporate physics-based models of the imaging process or of the underlying data. These models can be based on partial differential equations (PDEs), integral equations, symmetries, or other regularity conditions in two or more dimensions. Physics-aware models enhance the ability of ML methods to generalize and to operate robustly in the presence of modeling error, incomplete data, and measurement uncertainty. Contributions to the conference are solicited on topics ranging from fundamental theoretical advances to detailed implementations and novel applications for scientific discovery.
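As a generic illustration of what "incorporating physical models" can look like (a sketch of two common formulations, not anything prescribed by the call above), suppose a known physics-based forward operator $A$ describes the imaging process, $y$ are the measurements, and $R_\theta$ is a learned regularizer. Reconstruction can then be posed as

$$
\hat{x} = \arg\min_{x} \tfrac{1}{2}\,\lVert A x - y \rVert_2^2 + \lambda\, R_\theta(x).
$$

When the underlying data instead obey a PDE written as $\mathcal{N}[u] = 0$, a network $u_\theta$ can be fitted to observations $y_i$ at points $z_i$ while penalizing the PDE residual at collocation points $z_j$:

$$
\mathcal{L}(\theta) = \sum_{i} \lVert u_\theta(z_i) - y_i \rVert_2^2 + \mu \sum_{j} \lVert \mathcal{N}[u_\theta](z_j) \rVert_2^2 .
$$

The weights $\lambda, \mu > 0$ balance data fidelity against the physics term; all symbols here are assumptions introduced for illustration.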

While RGB is the status quo in machine vision, other color spaces offer higher utility in certain visual tasks. Here, the authors investigated the impact of color spaces on the encoding capacity of a visual system that is subject to information compression, specifically variational autoencoders (VAEs) with a bottleneck constraint. To this end, they propose a framework, color conversion, that allows a fair comparison of color spaces. They systematically investigated several ColourConvNets, i.e., VAEs with different input and output color spaces, e.g., from RGB to CIE L*a*b* (five color spaces were examined in total). Their evaluations demonstrate that, compared with the baseline network (whose input and output are both RGB), ColourConvNets with a color-opponent output space produce higher-quality images. This is also evident quantitatively: (i) in pixel-wise low-level metrics such as color difference (ΔE), peak signal-to-noise ratio (PSNR), and structural similarity index measure (SSIM); and (ii) in high-level visual tasks where the global content of the reconstruction matters, such as image classification (on the ImageNet dataset) and scene segmentation (on the COCO dataset). These findings offer a promising line of investigation for other applications of VAEs. Furthermore, they provide empirical evidence for the benefits of color-opponent representation in a complex visual system and for why it might have emerged in the human brain.
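Two of the pixel-wise metrics named above are simple enough to sketch directly. This is a minimal illustration, not the authors' evaluation code: the abstract does not say which ΔE formula was used, so the sketch assumes the simplest one, CIE76 (Euclidean distance in CIE L*a*b*), and expects images already converted to L*a*b*. PSNR assumes 8-bit data unless `max_value` is changed. Function names are hypothetical.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((reference.astype(np.float64) - test.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


def delta_e76(lab_ref: np.ndarray, lab_test: np.ndarray) -> float:
    """Mean CIE76 colour difference between two images in CIE L*a*b* (..., 3)."""
    diff = lab_ref.astype(np.float64) - lab_test.astype(np.float64)
    return float(np.mean(np.sqrt(np.sum(diff ** 2, axis=-1))))
```

Lower ΔE and higher PSNR both indicate a reconstruction closer to the reference; SSIM, which compares local structure rather than per-pixel error, is omitted here for brevity.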

The automatic analysis of fine art paintings presents a number of novel technical challenges to artificial intelligence, computer vision, machine learning, and knowledge representation, quite distinct from those arising in the analysis of traditional photographs. The most important difference is that many realist paintings depict stories or episodes in order to convey a lesson, moral, or meaning. One early step in the automatic interpretation and extraction of meaning in artworks is the identification of figures (“actors”). In Christian art specifically, one must identify the actors in order to identify the Biblical episode or story depicted, an important step in “understanding” the artwork. We designed an automatic system based on deep convolutional neural networks and a simple knowledge database to identify saints throughout six centuries of Christian art, based in large part upon the saints’ symbols or attributes. Our work represents initial steps in the broad task of automatic semantic interpretation of messages and meaning in fine art.
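The combination of a CNN with "a simple knowledge database" of saints' attributes can be sketched as a scoring step: the network is assumed to output a confidence per attribute, and each saint is scored by how much of their attribute set is detected. This is a hypothetical illustration, not the authors' system; the knowledge base below lists a few well-known iconographic attributes only as example data, and the threshold and scoring rule are assumptions.

```python
# Hypothetical knowledge base: saint -> set of iconographic attributes (illustrative only).
KNOWLEDGE_BASE = {
    "St. Peter": {"keys"},
    "St. Catherine of Alexandria": {"wheel", "crown", "palm"},
    "St. Sebastian": {"arrows"},
    "St. Lawrence": {"gridiron", "palm"},
}


def rank_saints(attribute_scores: dict[str, float], kb=KNOWLEDGE_BASE, threshold: float = 0.5):
    """Rank saints by the confidence-weighted fraction of their attributes detected.

    attribute_scores: per-attribute confidences, e.g. from a CNN attribute detector.
    Attributes below `threshold` are treated as not detected.
    """
    detected = {a for a, p in attribute_scores.items() if p >= threshold}
    ranking = []
    for saint, attrs in kb.items():
        matched = detected & attrs
        score = sum(attribute_scores[a] for a in matched) / len(attrs)
        ranking.append((score, saint))
    ranking.sort(reverse=True)
    return [(saint, score) for score, saint in ranking]
```

For instance, confident detections of a wheel and a crown put St. Catherine of Alexandria first, while a weak "keys" response is ignored; normalizing by the size of each attribute set keeps saints with many attributes from dominating simply because they have more chances to match.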