In this paper, we introduce a unified handwriting and scene-text recognition model that handles both printed and handwritten text images. Our primary contribution is the incorporation of the self-attention mechanism, a core component of the transformer architecture. This yields two significant advantages: (1) a substantial improvement in recognition accuracy for both scene text and handwritten text, and (2) a notable decrease in inference time, which addresses a common bottleneck in modern recognizers that rely on sequence-based decoding with attention.
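For readers unfamiliar with the mechanism named above, the following is a minimal sketch of generic scaled dot-product self-attention, not the model proposed in this paper. The function names `softmax` and `self_attention` are illustrative, and the query, key, and value projections are assumed to be identity maps to keep the example short; a real transformer layer learns these projections.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a sequence of feature vectors.

    x: list of equal-length float vectors, one per sequence position.
    Assumption: Q = K = V = x (identity projections), purely for illustration.
    Returns (outputs, attention_weights).
    """
    d = len(x[0])
    scale = math.sqrt(d)
    # Each position attends to every position; all rows are independent of
    # one another, so they can be computed in parallel rather than step by step.
    weights = [
        softmax([sum(a * b for a, b in zip(q, k)) / scale for k in x])
        for q in x
    ]
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, x)) for j in range(d)]
        for row in weights
    ]
    return outputs, weights


features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(features)
```

Because every output position is computed from the whole input at once, no position has to wait for the previous one, which is the property usually credited for faster inference compared with step-by-step attention decoding.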

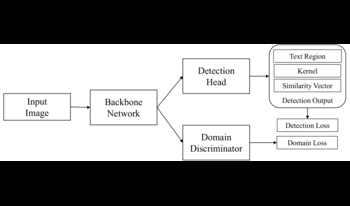

In this paper, we present a deep-learning approach that unifies handwriting and scene-text detection in images. Specifically, we adopt adversarial domain generalization to improve text detection across domains, and we extend the conventional Dice loss to provide additional training guidance. Furthermore, we build a new benchmark dataset that covers a wide range of handwritten and scene-text scenarios in images. Our extensive experiments demonstrate that the approach generalizes detection across both handwriting and scene text.
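As background for the loss mentioned above, here is a minimal sketch of the conventional Dice loss only; the extension proposed in this paper is not reproduced here. The function name `dice_loss`, the flat-list input format, and the smoothing constant `eps` are assumptions made for illustration.

```python
def dice_loss(pred, target, eps=1e-6):
    """Conventional Dice loss for a binary text/non-text map.

    pred:   flat list of predicted text probabilities in [0, 1].
    target: flat list of ground-truth labels (0 or 1), same length as pred.
    eps:    small smoothing constant (an assumption) that avoids division
            by zero when both maps are empty.
    """
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    dice = (2.0 * intersection + eps) / (total + eps)
    return 1.0 - dice


# A prediction that matches the mask exactly gives a loss near 0;
# a prediction with no overlap gives a loss near 1.
perfect = dice_loss([1.0, 1.0, 0.0, 0.0], [1, 1, 0, 0])
disjoint = dice_loss([1.0, 1.0, 0.0, 0.0], [0, 0, 1, 1])
```

The loss depends on the overlap relative to the total predicted and true text area, which makes it less sensitive than per-pixel cross-entropy to the imbalance between small text regions and large backgrounds.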