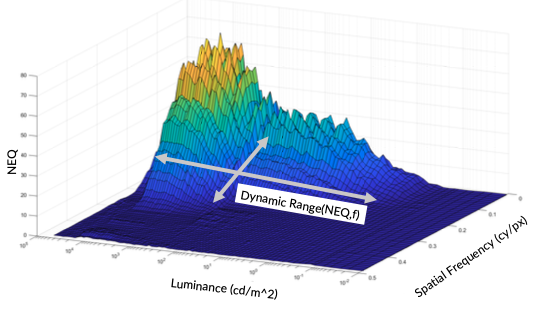

This paper investigates the use of Noise Equivalent Quanta (NEQ) as a comprehensive metric for assessing dynamic range in imaging systems. Previous work showed that NEQ is useful for characterizing the trade-off between noise and resolution. It also showed that noise and NEQ can be measured on the dead leaves pattern, which is otherwise typically used to measure the loss of low-contrast fine detail (texture loss). Building on that work, this study seeks to validate NEQ for dynamic range characterization, especially in high-dynamic-range (HDR) systems, where conventional metrics may fall short.
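For reference, a common frequency-domain definition is NEQ(f) = MTF²(f) / NNPS(f), where NNPS is the noise power spectrum normalised by the squared mean signal. The sketch below uses that textbook definition on a uniform patch. It is not the paper's dead-leaves procedure. The function names and the row-averaged 1D NPS estimate are assumptions. For an ideal, Poisson-limited detector with MTF = 1, NEQ should come out close to the mean count per pixel.

```python
import numpy as np


def nps_1d(patch, pixel_pitch=1.0):
    """Row-averaged 1D noise power spectrum of a nominally uniform patch.

    Normalised so that, for white noise, NPS(f) equals the pixel variance
    times the pixel pitch.
    """
    rows = patch - patch.mean(axis=1, keepdims=True)  # remove each row's mean (DC)
    n = rows.shape[1]
    spectra = np.abs(np.fft.rfft(rows, axis=1)) ** 2
    freqs = np.fft.rfftfreq(n, d=pixel_pitch)  # cycles per unit length
    return freqs, spectra.mean(axis=0) * pixel_pitch / n


def neq(mtf, nps, mean_signal):
    """NEQ(f) = MTF(f)^2 / NNPS(f), with NNPS = NPS / mean_signal^2."""
    nnps = nps / mean_signal ** 2
    return mtf ** 2 / nnps


# Ideal detector: Poisson noise only, no blur (MTF = 1 at all frequencies).
rng = np.random.default_rng(0)
patch = rng.poisson(1000.0, size=(512, 512)).astype(float)
freqs, nps = nps_1d(patch)
neq_values = neq(np.ones_like(nps[1:]), nps[1:], patch.mean())  # skip the zeroed DC bin
print(round(float(neq_values.mean())))  # close to the mean count of 1000
```

Measuring NEQ on a real camera also requires a measured MTF (or SFR) and a linearised signal. Both are outside this sketch.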

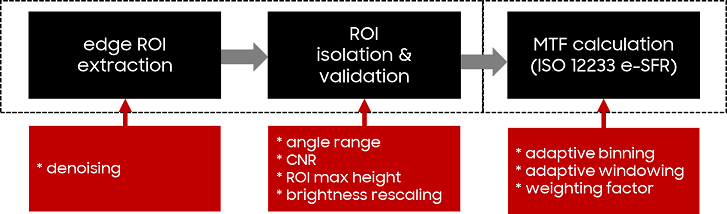

Evaluating the spatial frequency response (SFR) in natural scenes is crucial for understanding camera system performance and its implications for image quality in applications such as machine learning and automated recognition. The Natural Scene derived Spatial Frequency Response (NS-SFR) was a significant advance because it allows camera performance to be assessed directly, without relying on test charts, which have traditionally been a limiting factor. However, existing NS-SFR methods are limited by restricted angular coverage and susceptibility to noise, both of which reduce measurement accuracy. In this paper, we propose a method that enhances NS-SFR in two ways. It uses an adaptive oversampling rate (OSR) and phase shift (PS) to broaden angular coverage. It also applies a newly developed adaptive window technique that reduces the impact of noise, leading to more reliable results. Comparisons with theoretical modulation transfer function (MTF) values in simulation, together with tests on natural scenes, show that the proposed method addresses the challenges of existing methods and gives a more accurate representation of camera performance in natural scenes.
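A bin-occupancy check illustrates why the oversampling rate has to adapt to the edge angle. In slanted-edge style analysis, each pixel's horizontal distance to a straight edge is binned at 1/OSR pixel. Whether every bin receives samples depends on the edge angle. The sketch below picks the largest candidate OSR whose bins are all populated. This selection rule, the function names, and the ROI geometry are illustrative assumptions. They are not the adaptive OSR/PS scheme proposed in the paper.

```python
import numpy as np


def bin_coverage(theta_rad, osr, width=64, height=64, half_range=8.0):
    """Fraction of oversampled bins that receive at least one pixel sample.

    The edge is modelled as the line x = width/2 + y*tan(theta), i.e. theta
    is measured from the vertical axis.
    """
    x = np.arange(width)[None, :]
    y = np.arange(height)[:, None]
    d = x - width / 2 - y * np.tan(theta_rad)     # horizontal distance to the edge
    d = d[(d >= -half_range) & (d < half_range)]  # keep a window around the edge
    idx = np.floor(d * osr).astype(int)           # bin width = 1/osr pixel
    n_bins = int(round(2 * half_range * osr))
    return np.unique(idx).size / n_bins


def choose_osr(theta_rad, candidates=(1, 2, 4, 8), **roi):
    """Largest candidate OSR for which every bin is populated."""
    full = [k for k in candidates if bin_coverage(theta_rad, k, **roi) >= 1.0]
    return max(full) if full else None


print(choose_osr(0.0))              # vertical edge: one sampling phase -> 1
print(choose_osr(np.deg2rad(5.0)))  # 5-degree edge: phases spread across the pixel -> 8
```

For an exactly vertical edge, every row samples the edge at the same phase, so only OSR = 1 fills all bins. A small slant spreads the sampling phases across the pixel and permits finer binning.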

The edge-based Spatial Frequency Response (e-SFR) method was first developed for evaluating camera image resolution and sharpness and was described in the first version of the ISO 12233 standard. Since then, it has been applied in a wide range of fields, including medical imaging, security, archiving, and document processing. With this broad application, however, several of the method's assumptions are no longer closely followed. This has led to several improvements aimed at broadening its application, for example to lenses with spatial distortion. The evaluation of image quality parameters can be treated as an estimation problem based on gathered data, often digital images. In this paper, we address the mitigation of measurement error that is introduced when the analysis is applied to low-exposure (and therefore noisy) images and to small analysis regions. We consider the origins of both bias and variation in the resulting SFR measurement and present practical ways to reduce them. We describe the screening of outlier edge-location values as a method for improving edge detection, which in turn is associated with a reduction in negative bias in the resulting SFR.
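Here is a minimal sketch of outlier screening. It assumes per-row edge locations taken from the centroid of the row derivative, similar to the e-SFR edge-location step. It also assumes a median-absolute-deviation (MAD) rejection rule. The threshold `k` and the single-pass rejection are illustrative assumptions, not necessarily the screening criterion used in the paper.

```python
import numpy as np


def row_centroids(roi):
    """Edge location in each row: centroid of the absolute horizontal derivative."""
    deriv = np.abs(np.diff(roi.astype(float), axis=1))
    x = np.arange(deriv.shape[1]) + 0.5  # each difference sits between two pixels
    return (deriv * x).sum(axis=1) / deriv.sum(axis=1)


def fit_edge_screened(centroids, k=3.0):
    """Fit a line to edge locations, reject outliers by MAD, then refit."""
    rows = np.arange(centroids.size)
    coef = np.polyfit(rows, centroids, 1)
    resid = centroids - np.polyval(coef, rows)
    dev = np.abs(resid - np.median(resid))
    mad = np.median(dev)
    keep = dev <= k * 1.4826 * mad  # 1.4826 scales the MAD to a Gaussian sigma
    return np.polyfit(rows[keep], centroids[keep], 1), keep


# Noisy edge locations along a slanted edge, with one corrupted row.
rng = np.random.default_rng(1)
rows = np.arange(50)
centroids = 20.0 + 0.1 * rows + rng.normal(0.0, 0.02, rows.size)
centroids[10] += 5.0
coef, keep = fit_edge_screened(centroids)
print(coef[0], np.flatnonzero(~keep))  # slope near 0.1; row 10 rejected
```

Without screening, the single corrupted row tilts the fitted edge. That misaligns the projected samples and smears the oversampled edge profile, which is one route to a negatively biased SFR.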

The edge-based Spatial Frequency Response (e-SFR) method is well established and has been included in the ISO 12233 standard since its first version in 2000. A new, 4th edition of the standard is in preparation, with additions and changes intended to broaden its application and improve reliability. We report results for advanced edge fitting, which, although reported before, was not previously included in the standard. The application of the e-SFR method to a range of edge-feature angles is enhanced by an angle-based correction and by the use of a new test chart. We present examples of the testing completed for a wider range of edge test features than previously addressed by ISO 12233, for orientations near 0 and near 45 degrees. Various smoothing windows were compared, including the Hamming and Tukey forms. We also describe a correction for image non-uniformity and the computation of an image sharpness measure (acutance) that will be included in the updated standard.
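A simple LSF-to-SFR computation shows where the smoothing window enters. The sketch below differentiates an edge-spread function (ESF) and applies either a Hamming or a Tukey window. It then normalises the DFT magnitude at zero frequency. It assumes the LSF is already centred in the array. It omits the ISO 12233 steps of edge fitting, binning, and derivative correction. The Tukey implementation and the `alpha` default are illustrative choices.

```python
import numpy as np


def tukey(m, alpha=0.5):
    """Tukey (tapered-cosine) window; alpha in (0, 1] is the tapered fraction."""
    x = np.arange(m) / (m - 1)
    w = np.ones(m)
    edge = alpha / 2
    lo, hi = x < edge, x > 1 - edge
    w[lo] = 0.5 * (1 + np.cos(np.pi * (x[lo] / edge - 1)))
    w[hi] = 0.5 * (1 + np.cos(np.pi * ((x[hi] - 1) / edge + 1)))
    return w


def sfr_from_esf(esf, window="hamming", alpha=0.5):
    """SFR from a centred ESF: derivative -> window -> |DFT|, normalised at f = 0."""
    lsf = np.gradient(np.asarray(esf, dtype=float))
    m = lsf.size
    w = np.hamming(m) if window == "hamming" else tukey(m, alpha)
    spectrum = np.abs(np.fft.rfft(lsf * w))
    return np.fft.rfftfreq(m), spectrum / spectrum[0]  # frequency in cycles/sample


# Synthetic edge with a Gaussian LSF (sigma = 2 samples) centred in 100 samples.
n = np.arange(100)
esf = np.cumsum(np.exp(-0.5 * ((n - 50) / 2.0) ** 2))
for name in ("hamming", "tukey"):
    f, sfr = sfr_from_esf(esf, window=name)
    print(name, round(float(sfr[5]), 3))  # f[5] = 0.05 cycles/sample
```

For a clean, narrow LSF the two windows give nearly the same result. The Tukey window's flat centre leaves the LSF core untouched, while the Hamming window tapers everything except the exact centre. The choice matters more when noise in the LSF tails is significant.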

The edge-based Spatial Frequency Response (e-SFR) is an established measure of camera system performance, traditionally measured under laboratory conditions. With the increasing use of Deep Neural Networks (DNNs) in autonomous vision systems, the quality of the input signal becomes crucial for optimal operation. This paper proposes a method to estimate the system e-SFR from pictorial natural scene derived SFRs (NS-SFRs), as previously presented, laying the foundation for adapting the traditional method into a real-time measure. In this study, variations in the NS-SFR input parameters are first investigated to establish ranges that give a stable estimate. Using the NS-SFR framework with these parameter ranges, the system e-SFR, as defined in ISO 12233, is then estimated. Initial validation is obtained by applying the framework to images from a linear and a non-linear camera system. For the linear system, results closely approximate the ISO 12233 e-SFR measurement. Measurements of the non-linear system exhibit the scene-dependent characteristics expected from edge-based methods. The requirements for implementing this method in real time for autonomous systems are then discussed.
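One simple way to combine many NS-SFRs into a single system estimate is a two-step rule. First, keep only edges whose parameters fall inside the stable ranges. Then take a per-frequency median. This is a hedged sketch. The median, the use of edge angle and contrast as the screening parameters, and the numeric ranges are all placeholders. They are not the estimator or the ranges established in the paper.

```python
import numpy as np


def estimate_system_sfr(nssfrs, angles_deg, contrasts,
                        angle_range=(2.0, 43.0), contrast_range=(0.5, 0.7)):
    """Per-frequency median of the NS-SFRs whose edge parameters are in range.

    nssfrs: array of shape (n_edges, n_freqs), all on the same frequency grid.
    The default ranges are placeholders, not values from the paper.
    """
    nssfrs = np.asarray(nssfrs, dtype=float)
    a = np.abs(np.asarray(angles_deg, dtype=float))
    c = np.asarray(contrasts, dtype=float)
    keep = ((a >= angle_range[0]) & (a <= angle_range[1])
            & (c >= contrast_range[0]) & (c <= contrast_range[1]))
    if not keep.any():
        raise ValueError("no NS-SFRs fall inside the parameter ranges")
    return np.median(nssfrs[keep], axis=0), int(keep.sum())


sfrs = [[1.0, 0.8, 0.5],   # 10-degree edge, accepted
        [1.0, 0.6, 0.3],   # 20-degree edge, accepted
        [1.0, 0.1, 0.0]]   # near-axis edge (0.5 degrees), rejected
estimate, n_used = estimate_system_sfr(sfrs, [10.0, 20.0, 0.5], [0.6, 0.6, 0.6])
print(estimate, n_used)  # estimate is about [1.0, 0.7, 0.4], n_used = 2
```

A median is less sensitive than a mean to the occasional mis-detected or textured edge that survives screening. For a non-linear camera, however, the result still depends on the mix of scene content.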

The dead leaves pattern is very useful for obtaining an SFR from a stochastic pattern and can be used to measure texture loss caused by noise reduction or compression in images and video streams. In this paper, we present results from experiments that use the pattern with different analysis approaches to measure the dynamic range of a camera system and to describe how the SFR depends on object contrast and light intensity. The results can improve understanding of the performance of modern camera systems, which work adaptively and are scene-aware but are not well described by standard image quality metrics.
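A common way to obtain a dead-leaves SFR is to compare radially averaged power spectra of the captured image and the ideal target. Optionally, the noise power spectrum measured on a uniform patch is subtracted first: SFR(f) = sqrt((PSD_image(f) - PSD_noise(f)) / PSD_target(f)). The sketch below implements that ratio with numpy. It assumes the captured image is registered to the target. It uses a white random field as a stand-in for a real dead-leaves target. Function names and the radial binning are illustrative.

```python
import numpy as np


def radial_psd(img, n_bins=32):
    """Radially averaged power spectrum; frequencies in cycles/pixel up to 0.5."""
    img = np.asarray(img, dtype=float)
    img = img - img.mean()
    power = np.abs(np.fft.fft2(img)) ** 2 / img.size
    fy = np.fft.fftfreq(img.shape[0])[:, None]
    fx = np.fft.fftfreq(img.shape[1])[None, :]
    radius = np.hypot(fx, fy).ravel()
    edges = np.linspace(0.0, 0.5, n_bins + 1)
    idx = np.digitize(radius, edges) - 1
    ok = (idx >= 0) & (idx < n_bins)  # drop corner frequencies beyond 0.5
    sums = np.bincount(idx[ok], weights=power.ravel()[ok], minlength=n_bins)
    counts = np.bincount(idx[ok], minlength=n_bins)
    return 0.5 * (edges[:-1] + edges[1:]), sums / np.maximum(counts, 1)


def dead_leaves_sfr(image, target, noise_patch=None, n_bins=32):
    """SFR = sqrt((PSD_image - PSD_noise) / PSD_target), clipped at zero."""
    f, p_img = radial_psd(image, n_bins)
    _, p_tgt = radial_psd(target, n_bins)
    if noise_patch is not None:
        p_img = p_img - radial_psd(noise_patch, n_bins)[1]
    return f, np.sqrt(np.clip(p_img, 0.0, None) / p_tgt)


# Stand-in target and a Gaussian-blurred "capture" (blur applied in the Fourier domain).
rng = np.random.default_rng(2)
target = rng.normal(size=(256, 256))
fy = np.fft.fftfreq(256)[:, None]
fx = np.fft.fftfreq(256)[None, :]
mtf = np.exp(-2 * np.pi ** 2 * 1.5 ** 2 * (fx ** 2 + fy ** 2))
image = np.real(np.fft.ifft2(np.fft.fft2(target) * mtf))
f, sfr = dead_leaves_sfr(image, target)
print(np.round(sfr[:8], 2))
```

Because the estimate is a ratio of power spectra, any noise left in the image inflates the SFR at high frequencies. That is why the noise term is subtracted when a uniform patch is available.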

Simulation is an established tool for developing and validating camera systems. Autonomous driving is pushing simulation into a more important and fundamental role for safety, validation, and coverage of billions of miles. Realistic camera models are increasingly in focus. Simulations need to be more than photo-realistic: they need to be physically realistic, representing the actual camera system on board the self-driving vehicle in all relevant physical aspects. This applies not only to cameras but also to radar and lidar. But as camera simulations become more realistic, how is this realism tested? Physical camera samples are tested in laboratories following standards such as ISO 12233, EMVA 1288, or the developing IEEE P2020, with test charts such as dead leaves, slanted-edge, or OECF charts. In this article, we propose to validate the realism of camera simulations by simulating the physical test bench setup. The synthetic simulation results are then compared with physical results from the real-world test bench, using the established normative metrics and KPIs. While this procedure is used sporadically in industrial settings, we are not aware of a rigorous presentation of these ideas in the context of realistic camera models for autonomous driving. After describing the process, we give concrete examples for several different measurement setups using MTF and SFR, and show how these can be used to characterize the quality of different camera models.
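As an illustration of comparing a simulated and a measured test-bench result with normative KPIs, the sketch below works on two MTF curves sampled on a common frequency grid. It computes MTF50 (the frequency at which the MTF falls to 0.5) and an RMS difference between the curves. The choice of KPIs and the function names are assumptions. The paper's own comparison metrics may differ.

```python
import numpy as np


def mtf50(freqs, mtf):
    """Frequency where the MTF first drops below 0.5 (linear interpolation)."""
    below = np.flatnonzero(np.asarray(mtf) < 0.5)
    if below.size == 0 or below[0] == 0:
        return float("nan")  # never crosses 0.5 on this grid, or starts below it
    i = below[0]
    f0, f1, m0, m1 = freqs[i - 1], freqs[i], mtf[i - 1], mtf[i]
    return float(f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1))


def compare_mtf(freqs, mtf_sim, mtf_real):
    """KPIs for a simulated vs. a measured MTF on the same frequency grid."""
    sim, real = mtf50(freqs, mtf_sim), mtf50(freqs, mtf_real)
    rms = float(np.sqrt(np.mean((np.asarray(mtf_sim) - np.asarray(mtf_real)) ** 2)))
    return {"mtf50_sim": sim, "mtf50_real": real,
            "mtf50_rel_err": (sim - real) / real, "rms_diff": rms}


# Two Gaussian-shaped MTFs whose analytic MTF50 values are 0.20 and 0.22 cycles/pixel.
f = np.linspace(0.0, 0.5, 501)
measured = np.exp(-np.log(2) * (f / 0.20) ** 2)
simulated = np.exp(-np.log(2) * (f / 0.22) ** 2)
print(compare_mtf(f, simulated, measured))
```

A single-number KPI such as MTF50 is easy to put in a pass/fail tolerance. The RMS curve difference also catches disagreements at frequencies away from the 50% point.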

The ISO 12233 standard for digital camera resolution includes two methods for evaluating camera performance in terms of a Spatial Frequency Response (SFR). In many cases, the measured SFR can be taken as a measurement of the camera-system Modulation Transfer Function (MTF) used in optical design. In this paper, we investigate how the ISO 12233 slanted-edge analysis can be applied to such an optical design. Recent improvements to the ISO method make it easier to compute both the sagittal and the tangential MTF, as commonly specified for optical systems. Using computed optical simulations of actual designs, we apply the slanted-edge analysis across the image field. The simulations include the influence of optical aberrations, which can present challenges to the ISO methods. We find, however, that when the slanted-edge methods are applied with care, consistent results can be obtained.
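When a slanted-edge analysis is run over the image field, each edge must be labelled as measuring sagittal or tangential MTF. The label depends on the edge's orientation relative to the radial direction from the optical centre. The helper below assumes the common convention that an edge lying along the radius gives the sagittal SFR and an edge perpendicular to the radius gives the tangential SFR. Conventions vary between tools, and the tolerance value is an arbitrary choice.

```python
import numpy as np


def classify_edge(x, y, cx, cy, edge_angle_deg, tol_deg=15.0):
    """Label an edge at field point (x, y) as 'sagittal', 'tangential' or 'mixed'.

    edge_angle_deg is the direction of the edge line itself (not its normal),
    measured in the same coordinate system as (x, y). At the optical centre the
    radial direction is undefined; arctan2 then returns 0.
    """
    radial_deg = np.degrees(np.arctan2(y - cy, x - cx))
    # Angle between the edge line and the radial line, folded into [0, 90].
    diff = abs((edge_angle_deg - radial_deg + 90.0) % 180.0 - 90.0)
    if diff <= tol_deg:
        return "sagittal"     # edge runs along the radius
    if diff >= 90.0 - tol_deg:
        return "tangential"   # edge runs perpendicular to the radius
    return "mixed"


# Field point to the right of the centre: a vertical edge is perpendicular to the radius.
print(classify_edge(1500, 1000, 1000, 1000, 90.0))  # tangential
print(classify_edge(1500, 1000, 1000, 1000, 0.0))   # sagittal
print(classify_edge(1500, 1500, 1000, 1000, 0.0))   # mixed (45 degrees off the radius)
```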

The Modulation Transfer Function (MTF) is a well-established measure of camera system performance, commonly employed to characterize optical and image capture systems. It is based on linear system theory, so its use relies on the assumption that the system is linear and stationary (shift-invariant). This is not the case for modern camera systems, which incorporate non-linear image signal processing (ISP) to improve the output image. These non-linearities make camera system performance depend on the specific input signal. This paper discusses the development of a novel framework designed to acquire MTFs directly from images of complex natural scenes, removing the need for traditional test charts with fixed patterns. The framework is based on the extraction, characterization, and classification of edges found in images of natural scenes. Scene-derived performance measures aim to characterize the non-linear image processes in modern cameras more faithfully. Furthermore, they can produce ‘live’ performance measures acquired directly from camera feeds.
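A small helper can illustrate the edge-characterization step. For an extracted edge region, it estimates the orientation from the gradient structure tensor and a Michelson contrast from robust low and high percentiles. It also computes a coherence value that indicates whether the region contains a single straight edge. These descriptors and the percentile levels are assumptions for illustration. The framework's actual extraction and classification criteria are not reproduced here.

```python
import numpy as np


def characterize_edge(roi):
    """Return (edge_angle_deg, michelson_contrast, coherence) for an edge ROI.

    Angles are in array coordinates (x = column, y = row, y pointing down);
    0 degrees is a horizontal edge, 90 degrees a vertical one.
    """
    roi = np.asarray(roi, dtype=float)
    gy, gx = np.gradient(roi)                     # derivatives along rows, columns
    jxx, jyy, jxy = (gx * gx).sum(), (gy * gy).sum(), (gx * gy).sum()
    grad_deg = 0.5 * np.degrees(np.arctan2(2 * jxy, jxx - jyy))  # dominant gradient direction
    edge_deg = (grad_deg + 90.0) % 180.0          # the edge runs perpendicular to it
    lo, hi = np.percentile(roi, [5, 95])
    contrast = (hi - lo) / (hi + lo)              # assumes non-negative pixel values
    coherence = np.hypot(jxx - jyy, 2 * jxy) / (jxx + jyy)  # 1 = single orientation
    return float(edge_deg), float(contrast), float(coherence)


roi = np.full((20, 20), 0.2)
roi[:, 10:] = 0.8                                 # vertical step edge
print(characterize_edge(roi))                     # approximately (90.0, 0.6, 1.0)
```

A flat ROI has no gradient energy and would divide by zero. A real extraction stage would discard such regions before this step.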

Aliasing is a well-known effect in imaging that can lead to disturbing artefacts on fine structures. While the high pixel count of today's devices helps to reduce this effect, optical anti-aliasing filters are at the same time increasingly removed from sensor stacks to improve system SFR and quantum efficiency. Although the artefact is easy to see, no objective measurement and quantification of aliasing has been standardised or established. In this paper, we show an extension to the existing SFR measurement procedures described in ISO 12233 that can detect and quantify aliasing in an imaging system. It uses the harmonic Siemens star of the s-SFR method and can be integrated into existing systems, so it does not require the capture of additional images.
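A sinusoidal Siemens star can reveal aliasing in the following way. Consider a circle of radius r on the capture of a star with n cycles. For a linear, alias-free system, the signal along that circle contains energy only at the n-th angular harmonic. The fraction of AC energy at other harmonics therefore grows once the local period 2πr/n approaches the two-pixel Nyquist limit. The sketch below computes that fraction on a synthetic, point-sampled star (no optical low-pass filter). It illustrates the principle under these assumptions and is not the aliasing metric defined in the paper. The function names, bilinear sampling, and sample count are illustrative choices.

```python
import numpy as np


def siemens_star(size=201, n_cycles=64):
    """Point-sampled sinusoidal Siemens star centred on the middle pixel."""
    c = (size - 1) / 2
    y, x = np.mgrid[0:size, 0:size] - c
    return 0.5 + 0.5 * np.cos(n_cycles * np.arctan2(y, x))


def sample_circle(img, r, n_samples=1024):
    """Bilinearly interpolated intensity along a circle of radius r around the centre."""
    c = (img.shape[0] - 1) / 2
    phi = 2 * np.pi * np.arange(n_samples) / n_samples
    x, y = c + r * np.cos(phi), c + r * np.sin(phi)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    fx, fy = x - x0, y - y0
    return ((1 - fx) * (1 - fy) * img[y0, x0] + fx * (1 - fy) * img[y0, x0 + 1]
            + (1 - fx) * fy * img[y0 + 1, x0] + fx * fy * img[y0 + 1, x0 + 1])


def aliasing_ratio(img, r, n_cycles, n_samples=1024):
    """Fraction of AC energy along the circle that is NOT at the star's harmonic."""
    s = sample_circle(img, r, n_samples)
    power = np.abs(np.fft.rfft(s - s.mean())) ** 2
    return 1.0 - power[n_cycles] / power[1:].sum()


star = siemens_star()
print(aliasing_ratio(star, 60, 64))  # period about 5.9 px: ratio near 0
print(aliasing_ratio(star, 8, 64))   # period about 0.8 px: ratio near 1
```

Evaluating this ratio as a function of radius shows where aliasing sets in. That radius maps to a spatial frequency through n / (2πr) cycles per pixel. Note that a non-linear tone curve also adds energy at harmonics 2n, 3n, and so on, so a practical measure would need to separate those from aliasing.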