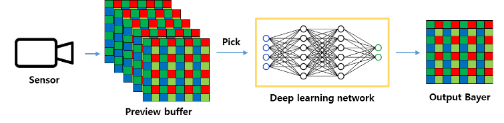

With the emergence of 200-megapixel QxQ Bayer pattern image sensors, remosaic technology, which rearranges the sensor's color filter array (CFA) into a Bayer pattern, has become increasingly important. However, the limitations of the on-sensor remosaic algorithm often produce artifacts that degrade image detail and texture. In this paper, we propose a deep learning-based artifact correction method that enhances image quality in a mobile environment while minimizing shutter lag. We generated a training dataset using a high-performance remosaic algorithm and trained a lightweight U-Net-based network. The proposed network effectively removes these artifacts, improving overall image quality. Additionally, it takes only about 15 ms to process a 4000x3000 image on a Galaxy S22 Ultra, making it suitable for real-time applications.
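To make the remosaic problem concrete, the following minimal sketch builds the color layout of a standard Bayer mosaic and of a QxQ-style mosaic and measures how many pixel sites hold a different color in the two. It is an illustration only, not the method of the paper: the function name `cfa_pattern`, the RGGB ordering, and the reading of QxQ as 4x4 same-color groups are all assumptions.

```python
import numpy as np

# Colour indices used only for this illustration.
R, G, B = 0, 1, 2

def cfa_pattern(height, width, block=1):
    """Return an array of colour indices for a Bayer-style CFA.

    block=1 gives a standard Bayer mosaic (RGGB order is assumed here).
    block=4 gives a QxQ-style layout in which each colour covers a
    4x4 group of pixels (an assumption about the sensor layout).
    """
    tile = np.array([[R, G], [G, B]])
    # Map every row/column to its position inside the 2x2 colour tile.
    rows = (np.arange(height) // block) % 2
    cols = (np.arange(width) // block) % 2
    return tile[rows[:, None], cols[None, :]]

bayer = cfa_pattern(8, 8, block=1)
qxq = cfa_pattern(8, 8, block=4)

# Fraction of pixel sites whose sampled colour differs from the colour
# the Bayer target expects at that site.
mismatch = float(np.mean(bayer != qxq))
print(mismatch)
```

Under these assumptions, well over half of the sites sample a color other than the one the Bayer output needs, so a remosaic step has to estimate those values from neighbors. Estimation of this kind is where detail and texture artifacts can arise.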

In autonomous driving applications, cameras are vital sensors, as they provide structural, semantic, and navigational information about the vehicle's environment. While image quality is well understood for human viewing applications, it is not well defined for computer vision. As a result, in systems where a single video stream serves both human viewing and computer vision, the subjective experience for human viewing has historically dominated over computer vision performance when tuning the image signal processor. However, the growing prominence of autonomous driving and computer vision has brought research on the impact of image quality in camera-based applications to the fore. In this paper, we provide results quantifying the impact of sharpening and contrast on the accuracy of two image feature registration algorithms and of pedestrian detection. We obtain encouraging results that illustrate the merits of tuning image signal processor parameters for vision algorithms.
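As a rough illustration of the two parameters studied, the sketch below implements a simple unsharp-mask sharpening and a linear contrast gain on a grayscale image with values in [0, 1]. These are generic textbook operations chosen for illustration; the actual image signal processor blocks and parameter ranges used in the paper are not given in the abstract, and the names `box_blur3`, `sharpen`, `adjust_contrast`, `amount`, `gain`, and `pivot` are hypothetical.

```python
import numpy as np

def box_blur3(img):
    """3x3 box blur with edge replication (a stand-in low-pass filter)."""
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    acc = np.zeros((h, w), dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / 9.0

def sharpen(img, amount):
    """Unsharp masking: add back `amount` times the high-pass detail."""
    img = img.astype(np.float64)
    return np.clip(img + amount * (img - box_blur3(img)), 0.0, 1.0)

def adjust_contrast(img, gain, pivot=0.5):
    """Scale deviations from `pivot` by `gain`, clipped to [0, 1]."""
    img = img.astype(np.float64)
    return np.clip(pivot + gain * (img - pivot), 0.0, 1.0)

# A vertical step edge: left half 0.25, right half 0.75.
edge = np.tile(np.array([0.25] * 4 + [0.75] * 4), (8, 1))
sharp = sharpen(edge, amount=1.0)
strong = adjust_contrast(edge, gain=2.0)
```

Sharpening leaves flat regions unchanged and adds overshoot on either side of the edge, while the contrast gain stretches the two levels apart. A tuning study in the spirit of the abstract would sweep `amount` and `gain`, run the vision algorithm on each processed image, and record its accuracy.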