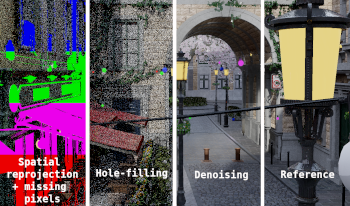

Spatial reprojection can lower the computational complexity of stereoscopic path tracing enough to meet real-time requirements. However, it adds data dependencies to the pipeline. We handle these dependencies through a task scheduler framework that embeds workload and dependency information at each stage of the pipeline. We propose a novel image-parallel stereoscopic path tracing pipeline that parallelizes the spatial reprojection stage, the hole-filling stage, and the post-processing algorithms (denoising, tonemapping) across multiple GPUs. Distributing the workload of the spatial reprojection stage across the GPUs allows each GPU to locally detect the holes in the images, which are caused by non-reprojected pixels. For the spatial reprojection, denoising, and hole-filling stages, we measure speedups of ×2.75, ×4.2, and ×2.89 per GPU, respectively, on animated scenes. Overall, our pipeline shows a speedup of ×2.25 compared to the open-source state-of-the-art path tracer Tauray, which only parallelizes path tracing.
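The reprojection and hole-detection idea can be illustrated with a minimal sketch. It is not the pipeline described above: it assumes a rectified stereo pair, a per-pixel depth value for the left view, and the standard disparity relation `focal_px * baseline / depth`. The function name `reproject_row` and its parameters are hypothetical.

```python
def reproject_row(colors, depths, focal_px, baseline):
    """Reproject one scanline of the left view into the right view.

    Assumes a rectified stereo pair, so a pixel only moves horizontally.
    Returns (right_colors, holes), where holes[x] is True for every
    right-view pixel that received no reprojected sample.
    """
    width = len(colors)
    right_colors = [None] * width
    right_depths = [float("inf")] * width

    for x in range(width):
        z = depths[x]
        if z <= 0:
            continue  # invalid depth, nothing to reproject
        disparity = focal_px * baseline / z
        xr = round(x - disparity)
        # Keep the nearest surface when several pixels land on the same target.
        if 0 <= xr < width and z < right_depths[xr]:
            right_depths[xr] = z
            right_colors[xr] = colors[x]

    # Pixels that nothing was reprojected onto are the holes to fill later.
    holes = [c is None for c in right_colors]
    return right_colors, holes


if __name__ == "__main__":
    row, holes = reproject_row(["a", "b", "c", "d"], [1.0, 1.0, 2.0, 2.0], 2.0, 1.0)
    print(row, holes)
```

Because each scanline is processed independently in this sketch, rows could in principle be divided among several devices, with each device finding the holes in its own rows.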

Video conferencing usage increased dramatically during the pandemic and is expected to remain high in hybrid work. One of the key aspects of the video experience is background blur or background replacement, which relies on good-quality portrait segmentation in each frame. Software and hardware manufacturers have worked together to use depth sensors to improve the process. Existing solutions have incorporated the depth map into post-processing to generate a more natural blurring effect. In this paper, we propose collecting background features with the help of the depth map to improve the segmentation result from the RGB image. Our method shows significant improvements over methods using RGB-based networks and runs faster than model-based background feature collection approaches.

Virtual background has become an increasingly important feature of online video conferencing due to the popularity of remote work in recent years. To enable a virtual background, a segmentation mask of the participant must be extracted from the real-time video input. Most previous work has focused on image-based methods for portrait segmentation. However, portrait video segmentation poses additional challenges due to complicated backgrounds, body motion, and the need for inter-frame consistency. In this paper, we use temporal guidance to improve video segmentation and propose several methods to address these challenges, including prior mask, optical flow, and visual memory. We incorporate our temporally guided methods into an existing portrait segmentation model, PortraitNet. Experimental results show that our methods achieve improved segmentation performance on portrait videos with minimal latency.
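The text does not specify how the prior mask and optical flow are combined. One common way to use them together is to warp the previous frame's mask into the current frame and supply it as a prior, as in the minimal sketch below. The function `warp_prior_mask`, the flow layout, and the nearest-neighbor sampling are assumptions made for illustration, not the method of the paper.

```python
def warp_prior_mask(prev_mask, flow):
    """Backward-warp the previous frame's mask into the current frame.

    prev_mask: 2D list of 0/1 values from the previous frame.
    flow: 2D list where flow[y][x] = (dx, dy) is the assumed displacement
          from pixel (x, y) in the current frame to its source position
          in the previous frame.
    Pixels whose source falls outside the image are treated as background.
    """
    height, width = len(prev_mask), len(prev_mask[0])
    warped = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = flow[y][x]
            # Nearest-neighbor lookup of the source pixel.
            sx, sy = int(round(x + dx)), int(round(y + dy))
            if 0 <= sx < width and 0 <= sy < height:
                warped[y][x] = prev_mask[sy][sx]
    return warped


if __name__ == "__main__":
    # The subject moved one pixel to the right between frames.
    prev = [[0, 1, 0]]
    flow = [[(-1, 0), (-1, 0), (-1, 0)]]
    print(warp_prior_mask(prev, flow))
```

The warped mask could then be passed to a segmentation network as an extra input channel, so that the prediction for the current frame stays consistent with the previous one.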

Despite major developments in graphics hardware, realistic rendering is still a computational challenge in real-time augmented reality (AR) applications deployed on portable devices. We have developed a real-time photorealistic AR system that captures environment lighting dynamically, computes its second-order spherical harmonic (SH) coefficients on the CPU, and uses the resulting nine coefficients on the AR device for real-time rendering. Our technique provides a computationally efficient rendering procedure for diffuse objects in real-time rendering scenarios. We use two options for dynamic photometric registration of environments: a Ricoh Theta S 360° camera, and a Raspberry Pi Zero mini PC equipped with a 180° fisheye-lens camera. We tested our system successfully with software developed in Unity 3D with the Vuforia AR package, running on a Microsoft HoloLens and on an Oculus Rift DK2 with a stereo camera add-on.
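To make the role of the nine coefficients concrete, the following sketch projects environment radiance onto the real SH basis up to band 2 and reconstructs diffuse irradiance for a surface normal, following the well-known irradiance environment map formulation. It is an illustration under stated assumptions, not the system described above: radiance is a single scalar per sample (a real renderer would repeat this per color channel), the sample directions are assumed to be uniformly distributed over the sphere, and the function names are hypothetical.

```python
import math


def sh_basis(direction):
    """Evaluate the nine real SH basis functions (bands 0 to 2) for a unit vector."""
    x, y, z = direction
    return [
        0.282095,
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z,
        0.546274 * (x * x - y * y),
    ]


def project_radiance(samples):
    """Estimate nine SH coefficients from (unit direction, radiance) samples.

    Assumes the directions are uniformly distributed over the sphere, so
    each sample represents a solid angle of 4*pi / len(samples).
    """
    coeffs = [0.0] * 9
    for direction, radiance in samples:
        for i, basis in enumerate(sh_basis(direction)):
            coeffs[i] += radiance * basis
    weight = 4.0 * math.pi / len(samples)
    return [c * weight for c in coeffs]


# Per-band factors for convolving radiance with the clamped cosine lobe.
BAND_FACTORS = [math.pi] + [2.0 * math.pi / 3.0] * 3 + [math.pi / 4.0] * 5


def irradiance(coeffs, normal):
    """Reconstruct diffuse irradiance for a unit surface normal."""
    return sum(a * c * b for a, c, b in zip(BAND_FACTORS, coeffs, sh_basis(normal)))


if __name__ == "__main__":
    axes = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    coeffs = project_radiance([(d, 1.0) for d in axes])
    print(irradiance(coeffs, (0.0, 0.0, 1.0)))
```

In this split, the projection step is the part that would run on the CPU each time the environment is captured, while the per-normal reconstruction is cheap enough to evaluate per vertex or per pixel on the device. For a uniform environment of unit radiance, the reconstructed irradiance is approximately π for any normal.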