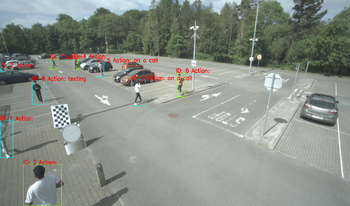

In this paper, we present a database consisting of annotations of videos that show a number of people performing several actions in a parking lot. The chosen actions represent situations in which the pedestrian could be distracted and not fully aware of her surroundings: “looking behind”, “on a call”, and “texting”, plus a fourth label, “no action”, for when the person performs none of these. In addition to the action, the speed of the person is labeled with one of three values: “standing”, “walking”, or “running”. Bounding boxes of the people present in each frame are also provided, along with a unique identifier for each person. The main goal is to provide the research community with examples of actions that are of interest for surveillance or safe autonomous driving. Labeling the speed at which an action is performed gives researchers richer information: for example, “running” can be treated as a more dangerous behavior than “walking” when the person is “on a call” or “looking behind”.
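
As an illustration only, one per-person, per-frame annotation could be represented as in the sketch below. The label values come from the description above; the class and field names, the `(x, y, width, height)` bounding-box layout, and the helper `is_distracted_and_moving` are assumptions made for this example and are not the database's actual file format.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Action(Enum):
    """The four action labels named in the text."""
    LOOKING_BEHIND = "looking behind"
    ON_A_CALL = "on a call"
    TEXTING = "texting"
    NO_ACTION = "no action"


class Speed(Enum):
    """The three speed labels named in the text."""
    STANDING = "standing"
    WALKING = "walking"
    RUNNING = "running"


@dataclass(frozen=True)
class PersonAnnotation:
    """One person in one frame (hypothetical record layout)."""
    frame: int                        # assumed: index of the video frame
    person_id: int                    # unique identifier of the person
    bbox: Tuple[int, int, int, int]   # assumed: (x, y, width, height) in pixels
    action: Action
    speed: Speed


def is_distracted_and_moving(ann: PersonAnnotation) -> bool:
    """Hypothetical filter: a distracting action performed while not standing."""
    return ann.action is not Action.NO_ACTION and ann.speed is not Speed.STANDING


example = PersonAnnotation(
    frame=0,
    person_id=1,
    bbox=(10, 20, 40, 90),
    action=Action.ON_A_CALL,
    speed=Speed.RUNNING,
)
```

Combining the action and speed labels in one record makes it straightforward to select cases such as “on a call” while “running”, which is the kind of query the speed label is meant to support.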

Multi-modal pedestrian detection has been an active research topic for the past few years. Because it can use complementary information from visible and thermal frames simultaneously, multi-modal detection outperforms visible-only detection and is more robust to lighting effects and cluttered backgrounds. However, many existing multi-modal pedestrian detection algorithms assume that image pairs are perfectly aligned across the two modalities, and their detection performance often degrades when the pairs are misaligned. This paper proposes a multi-modal pedestrian detection network for a one-stage detector, enhanced by a dual-regressor and a new algorithm for learning from multi-modal data, called object-based training. This study focuses on the Single Shot MultiBox Detector (SSD), one of the most common one-stage detectors. Experiments demonstrate that the proposed method outperforms current state-of-the-art methods on artificial data with large misalignment and is comparable or superior to existing methods on existing aligned datasets.

Deep neural networks are used in a growing number of computer vision tasks, where they demonstrate superior performance. Much research has focused on making deep networks more suitable for efficient hardware implementation in low-power, low-latency real-time applications. In [1], Isikdogan et al. introduced a deep neural network design that provides an effective trade-off between flexibility and hardware efficiency. The proposed solution consists of fixed-topology hardware blocks, with partially frozen and partially trainable weights, that can be configured into a full network. Initial results on a few computer vision tasks were presented in [1]. In this paper, we further evaluate this network design by applying it to several additional computer vision use cases and comparing it to other hardware-friendly networks. The experimental results presented here show that the proposed semi-fixed semi-frozen design achieves competitive performance on a variety of benchmarks while maintaining very high hardware efficiency.
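
To make the idea of partially frozen, partially trainable weights concrete, the sketch below applies one gradient-descent step through a boolean mask so that only the trainable entries change. It is a generic illustration, not the design of [1]: the function `sgd_step`, the mask layout, and the numeric values are all assumptions chosen for this example.

```python
from typing import List


def sgd_step(weights: List[float], grads: List[float],
             trainable: List[bool], lr: float = 0.1) -> List[float]:
    """One SGD step that updates only the entries marked trainable.

    Frozen entries (trainable[i] is False) are returned unchanged, which
    mimics weights that are fixed ahead of time, for instance in hardware.
    """
    return [w - lr * g if t else w
            for w, g, t in zip(weights, grads, trainable)]


# Hypothetical block with four weights; the second and fourth are frozen.
weights = [1.0, 2.0, 3.0, 4.0]
grads = [0.5, 0.5, 0.5, 0.5]
trainable = [True, False, True, False]

updated = sgd_step(weights, grads, trainable)
```

In this toy setting, the frozen entries keep their original values regardless of the gradient, while the trainable entries move by `lr * grad`.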