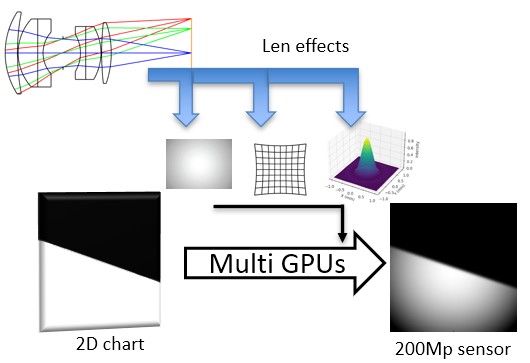

In a lens-based camera, light from an object is projected through a lens onto an image sensor. This projection involves various optical effects, including the point spread function (PSF), distortion, and relative illumination (RI), which can be modeled using lens design software such as Zemax and CODE V. To evaluate lens performance, a simulation system can apply these effects to a scene image. In this paper, we propose a lens-based multispectral image simulation system capable of handling 200-megapixel images, matching the resolution of the latest mobile devices. Our approach optimizes the spatially variant PSF (SVPSF) algorithm and leverages a distributed multi-GPU system for massively parallel computation. The system processes 200M-pixel multispectral 2D images at 31 wavelengths from 400 nm to 700 nm (10 nm intervals). Comparing the edge spread function (ESF), RI, and distortion results with those from Zemax shows approximately 99% similarity. The entire computation for a 200M-pixel, 31-wavelength image completes in under two hours. This efficient and accurate simulation system enables lens performance to be evaluated before manufacturing, making it a valuable tool for optical design and analysis.
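The abstract does not detail the optimized SVPSF algorithm. As a rough illustration of what applying a spatially variant PSF and RI to a multispectral scene involves, the sketch below uses a common piecewise-constant (tiled) approximation. The image is split into tiles, each tile is blurred with the PSF sampled for its field position, and the result is scaled by an RI map. All names (`apply_svpsf`, `simulate_cube`, the tile layout, and the choice to apply RI after blurring) are hypothetical assumptions, not the authors' implementation. Distortion and the multi-GPU distribution are omitted. Because each tile is convolved together with only a halo of half a PSF width, tiles are independent of one another, which is the kind of property that allows such a workload to be split across GPUs.

```python
import numpy as np

# Assumed sampling: 31 wavelengths from 400 nm to 700 nm in 10 nm steps.
WAVELENGTHS_NM = np.linspace(400.0, 700.0, 31)


def fft_convolve_same(image, psf):
    """Linear 2D convolution via FFT, cropped to the input size ('same' mode)."""
    ih, iw = image.shape
    ph, pw = psf.shape
    # Pad to the full linear-convolution size so the FFT does not wrap around.
    fh, fw = ih + ph - 1, iw + pw - 1
    spectrum = np.fft.rfft2(image, s=(fh, fw)) * np.fft.rfft2(psf, s=(fh, fw))
    full = np.fft.irfft2(spectrum, s=(fh, fw))
    # Keep the region aligned with the PSF center.
    top, left = (ph - 1) // 2, (pw - 1) // 2
    return full[top:top + ih, left:left + iw]


def apply_svpsf(image, psf_grid):
    """Hypothetical piecewise-constant SVPSF: output tile (i, j) uses psf_grid[i, j].

    psf_grid has shape (tiles_y, tiles_x, ph, pw). Each tile is convolved with a
    halo of half a PSF width, so every tile can be processed independently.
    """
    tiles_y, tiles_x, ph, pw = psf_grid.shape
    h, w = image.shape
    ry, rx = ph // 2, pw // 2
    ys = np.linspace(0, h, tiles_y + 1).astype(int)
    xs = np.linspace(0, w, tiles_x + 1).astype(int)
    out = np.empty((h, w), dtype=float)
    for i in range(tiles_y):
        for j in range(tiles_x):
            y0, y1, x0, x1 = ys[i], ys[i + 1], xs[j], xs[j + 1]
            # Halo-extended patch; pixels outside the image are treated as zero.
            py0, py1 = max(y0 - ry, 0), min(y1 + ry, h)
            px0, px1 = max(x0 - rx, 0), min(x1 + rx, w)
            blurred = fft_convolve_same(image[py0:py1, px0:px1], psf_grid[i, j])
            out[y0:y1, x0:x1] = blurred[y0 - py0:y1 - py0, x0 - px0:x1 - px0]
    return out


def simulate_cube(scene_cube, psf_grids, ri_maps):
    """Apply SVPSF blur, then an RI map, to each wavelength channel (assumed order).

    scene_cube: (n_wavelengths, h, w); psf_grids: (n_wavelengths, ty, tx, ph, pw);
    ri_maps: (n_wavelengths, h, w) with values in [0, 1].
    """
    return np.stack([
        apply_svpsf(scene, grid) * ri
        for scene, grid, ri in zip(scene_cube, psf_grids, ri_maps)
    ])
```

With a grid of identical PSFs, `apply_svpsf` reduces to an ordinary shift-invariant convolution, which makes a convenient sanity check. In practice, the per-tile PSFs and RI maps would be exported from lens design software such as Zemax for each sampled field point and wavelength.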