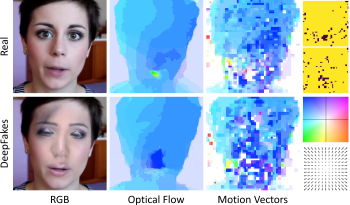

Video DeepFakes are fake media created with Deep Learning (DL) that manipulate a person’s expression or identity. Most current DeepFake detection methods analyze each frame independently and therefore miss inconsistencies and unnatural movements between frames. Some newer methods use optical flow models to capture this temporal aspect, but they are computationally expensive. In contrast, we propose using the related but often ignored Motion Vectors (MVs) and Information Masks (IMs) from the H.264 video codec to detect temporal inconsistencies in DeepFakes. Our experiments show that this approach is effective and has minimal computational cost compared with per-frame RGB-only methods. This could lead to new real-time, temporally aware DeepFake detection methods for video calls and streaming.
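As a rough illustration of how codec-derived signals could sit alongside RGB input, the sketch below stacks a frame with its MVs and IM into a single multi-channel array. It assumes the MVs have already been extracted from the H.264 stream and upsampled to a dense per-pixel field, and that the IM is a boolean map marking where motion information exists. The function name `stack_codec_features` and all array layouts are hypothetical choices for this example, not the method evaluated here.

```python
import numpy as np


def stack_codec_features(rgb, motion_vectors, info_mask):
    """Concatenate an RGB frame with codec-derived channels (illustrative only).

    rgb:            (H, W, 3) float array
    motion_vectors: (H, W, 2) float array of per-pixel (dx, dy) displacements
    info_mask:      (H, W) bool array, True where a motion vector is available
    These layouts are assumptions made for this sketch.
    """
    if rgb.shape[:2] != motion_vectors.shape[:2] or rgb.shape[:2] != info_mask.shape:
        raise ValueError("all inputs must share the same height and width")
    # Zero out vectors wherever the mask reports no motion information.
    masked_mv = motion_vectors * info_mask[..., None]
    # Expose the mask itself as one extra channel.
    mask_channel = info_mask[..., None].astype(rgb.dtype)
    # Result has 3 (RGB) + 2 (MV) + 1 (IM) = 6 channels.
    return np.concatenate([rgb, masked_mv, mask_channel], axis=-1)
```

A per-frame classifier that accepts six input channels instead of three could consume this array directly, which is one plausible way temporal cues might be added without running a separate optical flow model.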

There is a surging need across the world for protection against gun violence. We have identified three main challenges in research that aims to curb gun violence: temporal localization of gunshots, gun type prediction, and gun source (shooter) detection. Our task is gun source detection and muzzle head detection, where the muzzle head is the round opening at the firing end of the gun. We aim to locate the muzzle head visually in the video and to identify who fired the shot. In our formulation, we split muzzle head detection into two sub-problems: human object detection and gun smoke detection. Our assumption is that the muzzle head typically lies between the shooter and the gun smoke caused by the shot. We report interesting results for both bounding the shooter and detecting the gun smoke. In our experiments, we successfully detect the muzzle head by detecting the gun smoke and the shooter.
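The geometric assumption above can be sketched in a few lines. Given one bounding box for the shooter and one for the gun smoke, a crude muzzle head estimate is a point on the segment joining the two box centers. The helper names, the `(x_min, y_min, x_max, y_max)` box format, and the interpolation weight `t` are hypothetical choices for this illustration and are not taken from the experiments described here.

```python
def box_center(box):
    """Return the (x, y) center of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def estimate_muzzle(shooter_box, smoke_box, t=0.5):
    """Estimate the muzzle head as a point between shooter and smoke centers.

    t = 0 returns the shooter center, t = 1 the smoke center.
    The default t = 0.5 (midpoint) is an arbitrary assumption for this sketch.
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    sx, sy = box_center(shooter_box)
    mx, my = box_center(smoke_box)
    # Linear interpolation along the shooter-to-smoke segment.
    return (sx + t * (mx - sx), sy + t * (my - sy))


# Example: shooter on the left, smoke plume to the right at chest height.
muzzle = estimate_muzzle((100, 50, 200, 350), (300, 180, 360, 220))
print(muzzle)  # (240.0, 200.0)
```

In practice the weight `t` would likely need tuning, since the muzzle head usually sits closer to the smoke than to the center of the shooter's body.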