Naturalistic driving studies, in which drivers use their personal vehicles, provide valuable real-world data, but privacy issues must be handled very carefully. Drivers sign a consent form when they elect to participate, but passengers do not, for a variety of practical reasons; their privacy must nevertheless be protected. One large study includes a blurred image of the entire cabin, which allows reviewers to find passengers in the vehicle; this protects privacy while still providing a means of answering questions about the impact of passengers on driver behavior. A method for automatically counting the passengers would have scientific value for transportation researchers. We investigated different image analysis methods for automatically locating and counting the non-drivers, including simple face detection, fine-tuned methods for image classification, and a published object detection method. We also compared image classification using convolutional neural network and vision transformer backbones. Our studies show that the image classification method appears to work best in terms of absolute performance, although we note that the closed nature of our dataset and the nature of the imagery make the application somewhat niche, and that object detection methods also have advantages. We perform some analysis to support our conclusion.

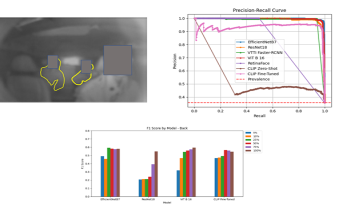

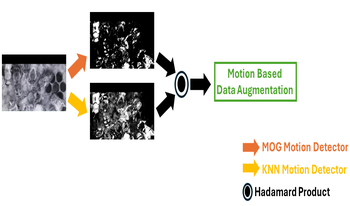

In this paper, we address the task of detecting honey bees inside a beehive using computer vision, with the goal of monitoring their activity. Conventionally, beekeepers monitor the activities of honey bees by watching colony entrances or by opening their colonies and examining bee movement and behavior during inspections. However, these methods either miss important information or alter honey bee behavior. Therefore, we installed simple cameras and IR lighting in honey bee colonies for a proof-of-concept study of whether deep-learning techniques could assist in-hive observations. However, the lighting conditions across different beehives are diverse, which leads to varied appearances of both the beehive backgrounds and the honey bees. This variation significantly degrades the performance of detection using Deep Neural Networks. In this paper, we propose to apply domain randomization based on motion to train honey bee detectors for use inside the beehive. Our experiments were conducted on images captured from beehives both seen and unseen during training. The results show that our proposed method boosts the performance of honey bee detection, especially for small bees, which are more likely to be affected by the lighting conditions.

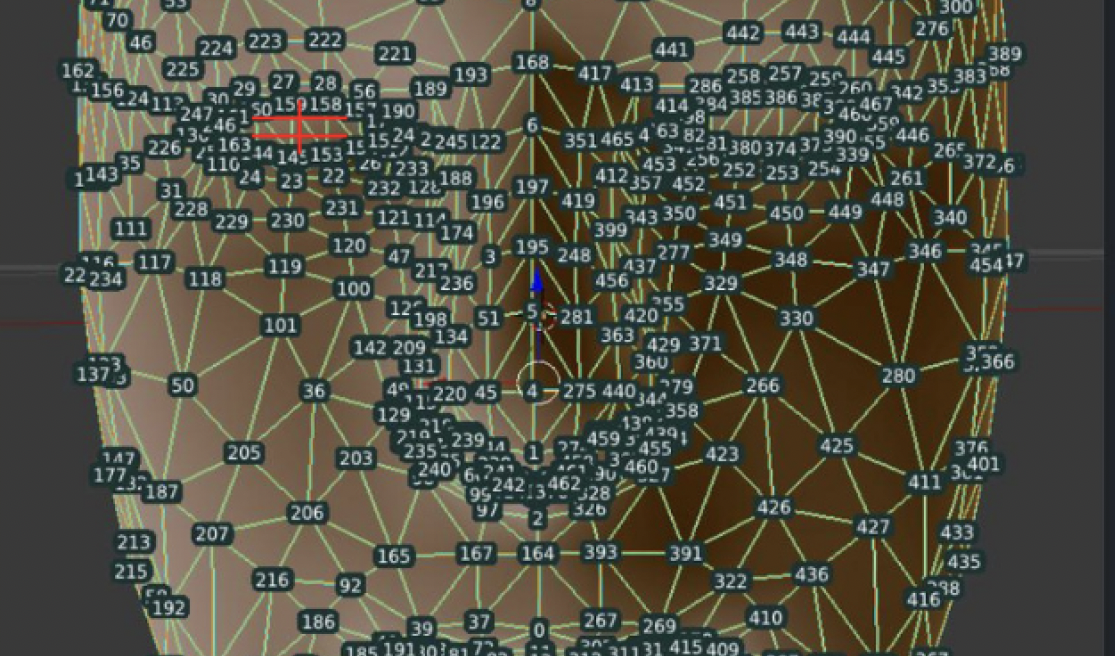

The Driver Monitoring System (DMS) presented in this work aims to enhance road safety by continuously monitoring a driver's behavior and emotional state during vehicle operation. The system utilizes computer vision and machine learning techniques to analyze the driver's face and actions, providing real-time alerts to mitigate potential hazards. The primary components of the DMS include gaze detection, emotion analysis, and phone usage detection. The system tracks the driver's eye movements to detect drowsiness and distraction through blink patterns and eye-closure durations. The DMS employs deep learning models to analyze the driver's facial expressions and extract dominant emotional states. In case of detected emotional distress, the system offers calming verbal prompts to maintain driver composure. Detected phone usage triggers visual and auditory alerts to discourage distracted driving. Integrating these features creates a comprehensive driver monitoring solution that assists in preventing accidents caused by drowsiness, distraction, and emotional instability. The system's effectiveness is demonstrated through real-time test scenarios, and its potential impact on road safety is discussed.
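As a minimal sketch of how eye-closure durations could feed a drowsiness alert, the snippet below scans a per-frame "eye closed" signal for the longest continuous closure. The function names, the boolean per-frame input, and the 1.0 s threshold are illustrative assumptions, not details taken from the DMS described above.

```python
def longest_closure_seconds(eye_closed, fps):
    """Return the longest run of consecutive closed-eye frames, in seconds.

    eye_closed: iterable of booleans, one per video frame (hypothetical input).
    fps: frame rate of the video stream.
    """
    longest = 0
    current = 0
    for closed in eye_closed:
        # Extend the current run while the eye stays closed, else reset it.
        current = current + 1 if closed else 0
        longest = max(longest, current)
    return longest / fps


def is_drowsy(eye_closed, fps, max_closure_s=1.0):
    """Flag drowsiness when any single closure lasts at least max_closure_s.

    The 1.0 s default is an assumed value chosen only for illustration.
    """
    return longest_closure_seconds(eye_closed, fps) >= max_closure_s
```

A short blink spans only a few frames at typical frame rates and stays below the threshold, whereas a sustained closure exceeds it and would trigger the alert.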

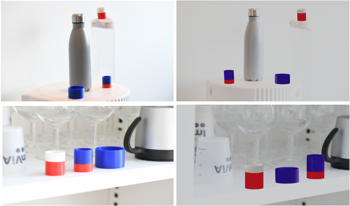

Metrology plays a critical role in the rapid progress of Artificial Intelligence (AI), particularly in computer vision. This article explores the importance of metrology in image synthesis for computer vision tasks, with a particular focus on object detection for quality control. The aim is to improve the accuracy, reliability, and quality of AI models. Using precise measurements, standards, and calibration techniques, we generated a carefully constructed dataset and used it to train AI models. By incorporating metrology into AI models, we aim to improve their overall performance and robustness.

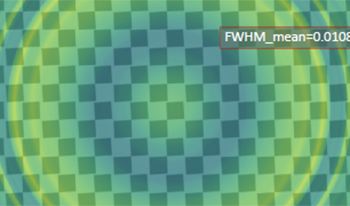

We present a novel metric, the Spatial Recall Index, to assess the performance of machine-learning (ML) algorithms for automotive applications, focusing on where in the image a given level of performance occurs. Typical metrics like intersection-over-union (IoU), precision-recall curves, or average precision (AP) quantify the performance over a whole database of images, neglecting spatial performance variations. But as the optics of camera systems are spatially variable over the field of view, the performance of ML-based algorithms is also a function of space, which we show in simulation: a realistic objective lens based on a Cooke triplet that exhibits typical optical aberrations like astigmatism and chromatic aberration, all variable over field, is modeled. The model is then applied to a subset of the BDD100k dataset with spatially varying kernels. We then quantify local changes in the performance of the pre-trained Mask R-CNN algorithm. Our examples demonstrate the spatial dependence of the performance of ML-based algorithms on the optical quality over field, highlighting the need to take the spatial dimension into account when training ML-based algorithms, especially when looking forward to autonomous driving applications.
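The idea of measuring recall as a function of image position can be illustrated with a coarse grid. The abstract does not give the precise definition of the Spatial Recall Index, so the sketch below is only an assumed simplification: each ground-truth box is assigned to the grid cell containing its centre, and recall is computed per cell. The function name, the grid size, and the `matched` flags (whether a detector found each box) are hypothetical.

```python
def spatial_recall(gt_boxes, matched, image_size, grid=(4, 4)):
    """Compute recall per grid cell from ground-truth boxes.

    gt_boxes: list of (x_min, y_min, x_max, y_max) in pixels.
    matched: list of booleans, True if the detector found that box.
    image_size: (width, height) in pixels.
    grid: (columns, rows) of the spatial binning (assumed, not from the paper).
    Returns a rows x columns nested list; cells without ground truth are None.
    """
    width, height = image_size
    cols, rows = grid
    hits = [[0] * cols for _ in range(rows)]
    totals = [[0] * cols for _ in range(rows)]
    for (x0, y0, x1, y1), found in zip(gt_boxes, matched):
        cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
        # Clamp so that centres on the right/bottom edge stay in the last cell.
        col = min(int(cx / width * cols), cols - 1)
        row = min(int(cy / height * rows), rows - 1)
        totals[row][col] += 1
        hits[row][col] += int(found)
    return [
        [hits[r][c] / totals[r][c] if totals[r][c] else None for c in range(cols)]
        for r in range(rows)
    ]
```

Comparing such a map for sharp and optically degraded versions of the same images would show where in the field of view detections are lost, which a single dataset-wide AP value cannot reveal.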

Diet is an important aspect of our health. Good dietary habits can contribute to the prevention of many diseases and improve overall quality of life. To better understand the relationship between diet and health, image-based dietary assessment systems have been developed to collect dietary information. We introduce the Automatic Ingestion Monitor (AIM), a device that can be attached to one’s eyeglasses. It provides an automated, hands-free approach to capturing eating scene images. While the AIM has several advantages, the images it captures are sometimes blurry. Blurry images can significantly degrade the performance of food image analysis tasks such as food detection. In this paper, we propose an approach to pre-process images collected by the AIM imaging sensor by rejecting extremely blurry images to improve the performance of food detection.
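The abstract does not state which blur measure is used to reject images. One common choice is the variance of the Laplacian, which tends to be low for blurry images because they contain few sharp edges. The sketch below is an assumption-based illustration of that swapped-in technique, written in plain Python on grayscale images stored as nested lists; the function names and the threshold are hypothetical.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over the interior pixels.

    gray: 2D nested list of intensities, at least 3x3.
    Higher values indicate more high-frequency detail (a sharper image).
    """
    height, width = len(gray), len(gray[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def keep_sharp(images, threshold):
    """Keep only images whose sharpness score reaches the threshold.

    The threshold is dataset-dependent and would need tuning (assumed value).
    """
    return [img for img in images if laplacian_variance(img) >= threshold]
```

In practice the threshold would be chosen on a labeled subset so that only extremely blurry frames are discarded before food detection is run.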

With the growing demand for robust object detection algorithms in self-driving systems, it is important to consider the varying lighting and weather conditions in which cars operate all year round. The goal of our work is to gain a deeper understanding of meaningful strategies for selecting and merging training data from currently available databases and self-annotated videos in the context of automotive night scenes. We retrain an existing Convolutional Neural Network (YOLOv3) to study the influence of different training dataset combinations on the final object detection results in nighttime and low-visibility traffic scenes. Our evaluation shows that a suitable selection of training data from the GTSRD, VIPER, and BDD databases in conjunction with self-recorded night scenes can achieve an mAP of 63.5% for ten object classes, which is an improvement of 16.7% when compared to the performance of the original YOLOv3 network on the same test set.

Deep learning has significantly improved the accuracy and robustness of computer vision techniques but is fundamentally limited by access to training data. Pretrained networks and public datasets have enabled the building of many applications with minimal data collection. However, these datasets are often biased: they largely contain images with conventional poses of common objects (e.g., cars, furniture, dogs, cats). In specialized applications such as user assistance for servicing complex equipment, the objects in question are often not represented in popular datasets (e.g., a fuser roll assembly in a printer) and require a variety of unusual poses and lighting conditions, making the training of these applications expensive and slow. To overcome these limitations, we propose a fast labeling tool using an Augmented Reality (AR) platform that leverages the 3D geometry and tracking afforded by modern AR systems. Our technique, which we call WARHOL, allows a user to mark the boundaries of an object once in world coordinates and then automatically projects these to an enormous range of poses and conditions. Our experiments show that object labeling using WARHOL achieves 90% of the localization accuracy in object detection tasks with only 5% of the labeling effort compared to manual labeling. Crucially, WARHOL also allows the annotation of objects with parts that have multiple states (e.g., drawers open or closed, removable parts present or not) with minimal extra user effort. WARHOL also improves on typical object detection bounding boxes by using a bounding box refinement network to create perspective-aligned bounding boxes that dramatically improve the localization accuracy and interpretability of detections.
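The core geometric step, projecting boundaries marked once in world coordinates into each camera view, can be sketched with a standard pinhole camera model. This is not WARHOL's implementation: the function names, the world-to-camera pose convention, and the intrinsics (`fx`, `fy`, `cx`, `cy`) are assumptions made for illustration.

```python
def project_points(points_world, rotation, translation, fx, fy, cx, cy):
    """Project 3D world points to pixel coordinates with a pinhole model.

    rotation: 3x3 nested list, world-to-camera rotation (assumed convention).
    translation: length-3 sequence, world-to-camera translation.
    Points behind the camera (z <= 0) are skipped.
    """
    pixels = []
    for point in points_world:
        # Camera-frame coordinates: p_cam = R * p_world + t
        xc, yc, zc = (
            sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
            for i in range(3)
        )
        if zc <= 0:
            continue
        pixels.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return pixels


def bounding_box(pixels):
    """Axis-aligned box (x_min, y_min, x_max, y_max) around projected points."""
    xs = [u for u, _ in pixels]
    ys = [v for _, v in pixels]
    return (min(xs), min(ys), max(xs), max(ys))
```

With the camera pose supplied by the AR tracker for every frame, the same eight corner points of a marked object would yield a fresh 2D label per frame without further manual annotation.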

CMOS image sensors play a vital role in the exponentially growing field of Artificial Intelligence (AI). Applications like image classification, object detection, and tracking are just some of the many problems now solved with the help of AI, and specifically deep learning. In this work, we target image classification to discern between six categories of fruits: fresh/rotten apples, fresh/rotten oranges, and fresh/rotten bananas. Using images captured from high-speed CMOS sensors along with lightweight CNN architectures, we show the results on various edge platforms. Specifically, we show results using ON Semiconductor’s global-shutter, 12 MP, 90 frames-per-second image sensor (XGS-12) and ON Semiconductor’s 13 MP AR1335 image sensor feeding into MobileNetV2, implemented on NVIDIA Jetson platforms. In addition to using the data captured with these sensors, we utilize an open-source fruits dataset to increase the number of training images. For image classification, we train our model on approximately 30,000 RGB images from the six categories of fruits. The model achieves an accuracy of 97% on edge platforms using ON Semiconductor’s 13 MP camera with the AR1335 sensor. In addition to the image classification model, work is currently in progress to improve the accuracy of object detection using SSD and SSDLite with MobileNetV2 as the feature extractor. In this paper, we show preliminary results on the object detection model for the same six categories of fruits.

To achieve one of the tasks required of disaster response robots, this paper proposes a method for locating the points to be pressed on 3D structured switches at disaster sites, using RGB-D images acquired by a Kinect sensor attached to our disaster response robot. Our method consists of the following five steps: 1) Obtain RGB and depth images using an RGB-D sensor. 2) Detect the bounding box of the switch area from the RGB image using YOLOv3. 3) Generate 3D point cloud data of the target switch by combining the bounding box and the depth image. 4) Detect the center position of the switch button from the RGB image in the bounding box using a Convolutional Neural Network (CNN). 5) Estimate the center of the button’s face in real space from the detection result in step 4) and the 3D point cloud data generated in step 3). In the experiment, the proposed method is applied to two types of 3D structured switch boxes to evaluate its effectiveness. The results show that our proposed method can locate the switch button accurately enough for robot operation.
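Step 5 can be sketched as a back-projection of the detected button pixel into 3D using the depth image and a pinhole camera model. The abstract does not describe how the estimate is computed, so the median over a small depth window, the function name, and the intrinsics (`fx`, `fy`, `cx`, `cy`) below are assumptions for illustration only.

```python
from statistics import median


def button_center_3d(depth, u, v, fx, fy, cx, cy, window=1):
    """Estimate the 3D position of pixel (u, v) from a depth image.

    depth: 2D nested list of depths in metres; 0 marks an invalid reading.
    u, v: column and row of the detected button centre (from step 4).
    window: half-size of the neighbourhood whose median depth is used,
            an assumed way to suppress single-pixel depth noise.
    Returns (X, Y, Z) in the camera frame, or None if no valid depth exists.
    """
    height, width = len(depth), len(depth[0])
    samples = [
        depth[y][x]
        for y in range(max(0, v - window), min(height, v + window + 1))
        for x in range(max(0, u - window), min(width, u + window + 1))
        if depth[y][x] > 0
    ]
    if not samples:
        return None
    z = median(samples)
    # Invert the pinhole projection: u = fx * X / Z + cx, v = fy * Y / Z + cy
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)
```

The returned point is expressed in the camera frame; a further transform from the sensor to the robot's manipulator frame would be needed before the arm can press the button.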