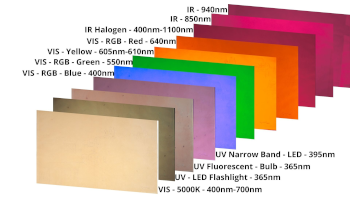

Non-invasive scientific imaging is becoming an increasingly important research tool in the study of cultural heritage objects, combining sustainability and preservation in a responsible and conscious manner. The purpose of this study is to restore, through digital restoration, the legibility of two drawings by the Brazilian modernist artist Guignard, dated 1956 and 1958, one created with ink and pen nib and the other presumably with graphite. Both drawings have likely suffered from photodegradation, with almost total loss of visibility due to fading and erasure of the drawn lines. Scientific photography techniques were employed, including infrared reflectance photography, visible light photography, ultraviolet fluorescence, multispectral imaging, transmitted light photography, raking light photography, and Reflectance Transformation Imaging (RTI). These techniques, used in combination, returned positive results, enhancing legibility and revealing traces and details that were no longer visible to the naked eye.

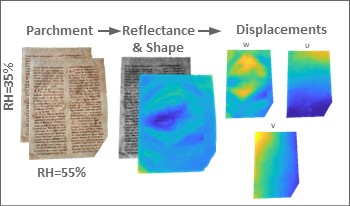

A multimodal optomechatronics system is presented for measuring and monitoring change in cultural heritage objects exposed to environmental condition fluctuations or conservation treatments. It combines structured light, 3D colour digital image correlation and multispectral imaging, delivering information about an object's 3D shape, displacements, strains and reflectivity. The high functionality and applicability of the system are presented with the example of historical parchment subjected to changes in relative humidity.

We consider the problem of estimating surface-spectral reflectance from image data under a smoothness constraint. The total variation of a spectral reflectance over the visible wavelength range is defined as the measure of smoothness. A penalty on roughness, the counterpart of this smoothness measure, is added to the performance index used to estimate the spectral reflectance functions. The optimal estimates of the spectral reflectance functions are those that minimize a total cost function consisting of the estimation error and the roughness of the spectral functions. An RGB camera and multiple LED light sources are used to construct the multispectral image acquisition system. We model the observed images using spectral sensitivities, illuminant spectrum, unknown spectral reflectance, a gain parameter, and an additive noise term. Estimation algorithms are developed for two estimation methods: principal component analysis (PCA) and linear minimum mean-square error (LMMSE). The optimal estimators are derived from the least-squares criterion for PCA and from the mean-squared-error minimization criterion for LMMSE. The feasibility of the proposed method is shown in an experiment using three mobile phone cameras. It is confirmed that the optimal estimators improve accuracy over both the original PCA and LMMSE estimators.
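The idea of trading estimation error against roughness can be illustrated with a minimal sketch. It is not the authors' estimator: it assumes a quadratic penalty on first differences (a simpler stand-in for the total-variation measure, chosen because it has a closed-form solution), a gain fixed at 1, and a hypothetical system of three Gaussian-shaped camera channels and three Gaussian-shaped LEDs. All function names and numeric values are illustrative.

```python
import numpy as np

def first_difference_matrix(n):
    """Return the (n-1) x n matrix D with (D @ r)[i] = r[i+1] - r[i]."""
    return np.diff(np.eye(n), axis=0)

def estimate_reflectance(A, y, lam):
    """Minimise ||y - A r||^2 + lam * ||D r||^2 in closed form.

    Assumption: quadratic roughness penalty, not the total variation
    used in the abstract above.
    """
    D = first_difference_matrix(A.shape[1])
    return np.linalg.solve(A.T @ A + lam * (D.T @ D), A.T @ y)

def build_system_matrix(wavelengths):
    """Hypothetical system: 3 camera channels x 3 LEDs, Gaussian curves."""
    def bump(center, width):
        return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)
    sens = np.stack([bump(600, 40), bump(540, 40), bump(460, 40)])
    leds = np.stack([bump(450, 30), bump(550, 30), bump(650, 30)])
    # One row per (LED, channel) pair: sensitivity times illuminant spectrum.
    return (leds[:, None, :] * sens[None, :, :]).reshape(-1, wavelengths.size)

wavelengths = np.linspace(400.0, 700.0, 31)
A = build_system_matrix(wavelengths)

# Synthetic smooth reflectance and noisy observations (illustrative only).
true_r = 0.5 + 0.3 * np.sin((wavelengths - 400.0) / 300.0 * np.pi)
rng = np.random.default_rng(0)
y = A @ true_r + 0.01 * rng.standard_normal(A.shape[0])

r_hat = estimate_reflectance(A, y, lam=0.1)
print("RMSE:", np.sqrt(np.mean((r_hat - true_r) ** 2)))
```

With only nine observations for 31 unknowns, the data term alone is underdetermined; the roughness term is what makes the solution unique. Larger values of `lam` give smoother estimates at the cost of a larger data residual.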

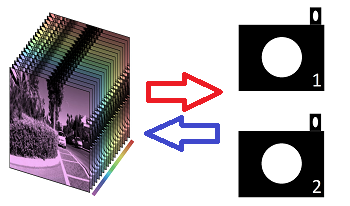

Spectral Reconstruction (SR) algorithms seek to map RGB images to their hyperspectral image counterparts. Statistical methods such as regression, sparse coding, and deep neural networks are used to determine the SR mapping. All these algorithms are optimized ‘blindly’, and the provenance of the RGBs is not considered. In this paper, we benchmark the performance of SR methods (in order of increasing complexity: regression, sparse coding, and deep neural network) when different RGB camera spectral sensitivity functions are used. In effect, we ask: “Are some cameras better able to recover spectra from RGBs than others?” In our experiments, RGB images are generated by numerical integration for a fixed set of hyperspectral images using 9 different camera response functions (each from a different camera manufacturer) plus the CIE 1964 color matching functions. Then, we train SR methods on the respective RGB image sets. Our experiments show three important results. First, different cameras <strong>do</strong> support slightly better or worse spectral reconstruction. Second, changing the spectral sensitivities alone does not change the ranking of the different algorithms. Finally, we show that switching the camera used for SR can sometimes give a greater performance boost than switching to a more complex SR method.
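The experimental protocol described above (render RGBs by numerical integration, then train an SR mapping per camera) can be sketched in a few lines. This is an illustrative sketch only: the two "cameras" are hypothetical Gaussian-shaped sensitivity sets rather than measured response functions, the spectra are synthetic, and the SR method is a ridge-regularised linear regression (the small ridge term is an assumption added for numerical stability).

```python
import numpy as np

def bump(wavelengths, center, width):
    """Gaussian-shaped curve used for synthetic spectra and sensitivities."""
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)

def render_rgb(spectra, sensitivities, step_nm):
    """Riemann-sum integration of each spectrum against each channel."""
    return spectra @ sensitivities.T * step_nm

def fit_linear_sr(rgb, spectra, ridge=1e-6):
    """Least-squares map M (3 x n_bands) such that rgb @ M approximates spectra."""
    gram = rgb.T @ rgb + ridge * np.eye(rgb.shape[1])
    return np.linalg.solve(gram, rgb.T @ spectra)

def rmse(a, b):
    return float(np.sqrt(np.mean((a - b) ** 2)))

wavelengths = np.arange(400.0, 701.0, 10.0)  # 31 bands, 10 nm apart
rng = np.random.default_rng(0)

# Synthetic smooth spectra: random mixtures of six broad bumps.
basis = np.stack([bump(wavelengths, c, 50.0) for c in np.linspace(420, 680, 6)])
spectra = rng.uniform(0.0, 1.0, size=(500, 6)) @ basis
train, test = spectra[:400], spectra[400:]

# Hypothetical sensitivity sets standing in for real camera measurements.
cameras = {
    "camera_a": np.stack([bump(wavelengths, c, 40.0) for c in (600, 540, 460)]),
    "camera_b": np.stack([bump(wavelengths, c, 25.0) for c in (620, 530, 450)]),
}

for name, sens in cameras.items():
    M = fit_linear_sr(render_rgb(train, sens, 10.0), train)
    recovered = render_rgb(test, sens, 10.0) @ M
    print(name, "test RMSE:", rmse(recovered, test))
```

Because the spectra and the regression method are held fixed, any difference in the printed errors comes from the sensitivity functions alone, which is the comparison the abstract poses.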

In Spectral Reconstruction (SR), we recover hyperspectral images from their RGB counterparts. Most recent approaches are based on Deep Neural Networks (DNNs), in which millions of parameters are trained, mainly to extract and utilize the contextual features in large image patches as part of the SR process. On the other hand, the leading Sparse Coding method ‘A+’, which is among the strongest point-based baselines against the DNNs, seeks to divide the RGB space into neighborhoods, where locally a simple linear regression (comprising roughly 10² parameters) suffices for SR. In this paper, we explore how the performance of Sparse Coding can be further advanced. We point out that in the original A+, the sparse dictionary used for neighborhood separation is optimized for the spectral data but used in the projected RGB space. In turn, we demonstrate that if the local linear mapping is trained for each spectral neighborhood instead of each RGB neighborhood (and, theoretically, if we could recover each spectrum based on where it is located in the spectral space), the Sparse Coding algorithm can actually perform much better than the leading DNN method. In effect, our result defines one potential (and very appealing) upper-bound performance of point-based SR.

Mercury (Hg) and arsenic (As) have been recognized as chemical threats to human health. Still, the detection of lower contamination levels using traditional image analysis remains challenging due to the small number of available data samples and the insufficient utilization of the spatial information contained in the sensor pad images. To overcome this challenge, we use the spectral data of the colorimetric response pads and propose two kinds of classification models for differentiating contaminant levels with high test accuracy. In the first model, we use the SMOTE method to address the imbalanced data problem, then apply the sequential forward selection algorithm to select optimal wavelength features in combination with the k-NN classifier to discriminate five contaminant levels. The second model uses principal component analysis (PCA) for dimensionality reduction, combined with a random forest (RF) classifier, to classify the five contaminant levels. Our proposed system is trained and evaluated on a limited dataset of 126 spectral responses covering five contamination levels. Our algorithms yield 77% and 87% average accuracy, respectively. We will present an overview of the base model, the pipelines and a comparison of our two proposed classification models, and the phone-based narrow-band spectral imaging system, which can obtain the camera spectral response for accurate and precise heavy-metal analyses with the aid of narrow bandpass filters placed in front of a cell phone’s camera lens.

Multispectral imaging has been a valuable technique for discovering hidden texts in manuscripts, learning the provenance of antique books, and generally studying cultural heritage objects. Standard software used to display and analyze such multispectral images is often complex and requires the installation and maintenance of custom packages and libraries. We present an easy-to-use web-based multispectral imaging visualization tool that enables simultaneous interaction with the information captured in different spectral bands.

It is difficult to describe facial skin color with a single solid color, as it varies from region to region. In this article, the authors used image analysis to identify the region of the face whose color is most representative. A total of 1052 female images from the Humanae project were selected, because the photographer had generated a solid color for each image as its representative skin color. Using OpenCV-based libraries, such as EOS of Surrey Face Models and DeepFace, 3448 facial landmarks were detected together with gender and race information. For an illustrative and intuitive analysis, the authors then re-defined 27 visually important sub-regions to cluster the landmarks. The 27 sub-region colors for each image were finally derived and recorded in L*, a*, and b*. By estimating the color difference between the representative color and the 27 sub-regions, the authors found that the sub-regions below the lips (low Labial) and at the central cheeks (upper Buccal) were the most representative regions across four major ethnicity groups. In future studies, the methodology is expected to be applied to more image sources. © 2020 Society for Imaging Science and Technology.
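The comparison step, finding the sub-region whose color is closest to the representative color, can be sketched as follows. The abstract does not say which color-difference formula was used; this sketch assumes the CIE76 formula (Euclidean distance in L\*a\*b\*), and the region names and L\*a\*b\* values are made up for illustration.

```python
import math

def delta_e_cie76(lab1, lab2):
    """CIE76 color difference: Euclidean distance in L*a*b* space."""
    return math.dist(lab1, lab2)

def most_representative(reference_lab, region_labs):
    """Return the name of the sub-region closest to the reference color."""
    return min(
        region_labs,
        key=lambda name: delta_e_cie76(reference_lab, region_labs[name]),
    )

# Hypothetical values for one image (not taken from the study).
representative = (62.0, 14.0, 17.0)
regions = {
    "forehead": (66.0, 12.0, 18.0),
    "upper_buccal": (62.5, 14.5, 16.5),
    "low_labial": (61.0, 15.0, 17.5),
    "nose_tip": (58.0, 18.0, 15.0),
}

for name, lab in regions.items():
    print(f"{name}: dE = {delta_e_cie76(representative, lab):.2f}")
print("closest sub-region:", most_representative(representative, regions))
```

Repeating this per image and counting how often each sub-region comes out closest would give a ranking of the kind the abstract reports. A perceptually more uniform formula such as CIEDE2000 could be substituted for `delta_e_cie76` without changing the rest of the sketch.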

A color image is the result of a very complex physical process. This process involves both the reflectance of light by the surface of the object and the sensor, which is either the human eye or an image acquisition system. To avoid metamerism and to improve the rendering of synthesized color images, it is sometimes necessary to work in the spectral domain. Currently, there are two classes of methods for producing the closest spectral image from color images. The first class uses circular and exponential functions. The second class uses the Moore-Penrose pseudoinverse or the Wiener inverse. In this article, we first describe these two classes of methods, which are used in a variety of fields ranging from image synthesis to colorimetry and satellite imagery. We then propose a new method based on a neural network that improves on the first two approximation methods. This new method can also be used to calibrate most color digitization systems and sub-wavelength color array filters.
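For the second class of methods, a minimal sketch of both inverses is given below, assuming the linear model c = S r + noise, where r is the sampled spectrum and S holds the three channel sensitivities. The sensitivities are hypothetical Gaussian curves, and the first-order Markov prior covariance used in the Wiener estimator is an assumption for illustration, not something stated in the abstract.

```python
import numpy as np

def pseudoinverse_estimator(S):
    """Moore-Penrose pseudoinverse: minimum-norm r that satisfies S r = c."""
    return np.linalg.pinv(S)

def wiener_estimator(S, K_r, noise_var):
    """Wiener inverse W = K_r S^T (S K_r S^T + noise_var * I)^-1."""
    m = S.shape[0]
    return K_r @ S.T @ np.linalg.inv(S @ K_r @ S.T + noise_var * np.eye(m))

def markov_covariance(n, rho):
    """Assumed prior: correlation rho**|i - j| between wavelength samples."""
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :])

wavelengths = np.arange(400.0, 701.0, 10.0)  # 31 samples

def bump(center, width):
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)

# Hypothetical three-channel sensor and a smooth test spectrum.
S = np.stack([bump(600, 40), bump(540, 40), bump(460, 40)])
r = 0.5 + 0.3 * np.sin((wavelengths - 400.0) / 300.0 * np.pi)
c = S @ r  # noise-free color response

r_pinv = pseudoinverse_estimator(S) @ c
r_wiener = wiener_estimator(S, markov_covariance(wavelengths.size, 0.98), 1e-4) @ c

print("pseudoinverse RMSE:", np.sqrt(np.mean((r_pinv - r) ** 2)))
print("Wiener RMSE:       ", np.sqrt(np.mean((r_wiener - r) ** 2)))
```

Both estimators are linear maps from three color values to a full spectrum; the Wiener inverse differs only in that it uses prior knowledge about spectra (here, smoothness through `K_r`) and about the noise level. With an identity prior and zero noise it reduces to the pseudoinverse.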