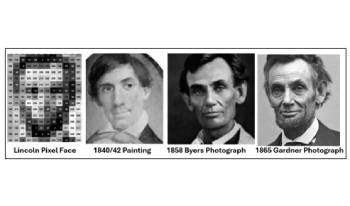

Are we there yet? All the puzzle pieces are here: a 2” miniature portrait on ivory dated circa 1840-1842, discovered alongside a letter detailing the owner’s familial ties to Mary Todd Lincoln. This portrait’s distinctive features echo President Lincoln’s unique facial asymmetry. However, despite intensive investigation, no historical document has been found to definitively link this miniature to Lincoln. This research aims to bridge art and science to determine whether this painting represents the earliest image of Abraham Lincoln, potentially opening avenues for future collaborations in identifying historical faces from the past. A key contributor to this effort is Dr. David Stork, an Adjunct Professor at Stanford University and a leading expert in computer-based image analysis. Dr. Stork holds 64 U.S. patents and has authored over 220 peer-reviewed publications in fields such as machine learning, pattern recognition, computational optics, and the image analysis of art. His recent book, Pixels and Paintings: Foundations of Computer-Assisted Connoisseurship1, fosters a dialogue between art scholars and the computer vision community.

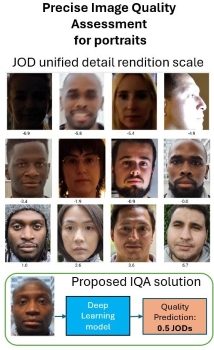

Portraits are one of the most common use cases in photography, especially in smartphone photography. However, evaluating portrait quality on real portraits is costly and difficult to reproduce. We propose a new method to evaluate a large range of detail preservation renditions on real portrait images. Our approach is based on 1) annotating a set of portrait images grouped by semantic content using pairwise comparison; 2) taking advantage of the fact that we are focusing on portraits, using cross-content annotations to align the quality scales; and 3) training a machine learning model on the global quality scale. On top of providing a fine-grained, wide-range detail preservation quality output, numerical experiments show that the proposed method correlates highly with the perceptual evaluation of image quality experts.
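As a rough illustration of how pairwise comparisons can be turned into a quality scale, the sketch below fits a Bradley-Terry model with the classic minorization-maximization update. The abstract does not name the scaling model that was actually used, so the choice of Bradley-Terry, the function name `bradley_terry`, and the toy vote counts are all assumptions made for illustration.

```python
def bradley_terry(wins, n_items, iters=200):
    """Estimate relative quality strengths from pairwise preference counts.

    wins[(i, j)] is the number of times item i was preferred over item j.
    Bradley-Terry is an assumed model here, not necessarily the authors' choice.
    """
    strength = [1.0] * n_items
    for _ in range(iters):
        updated = []
        for i in range(n_items):
            # Total number of comparisons won by item i.
            total_wins = sum(w for (a, _), w in wins.items() if a == i)
            denom = 0.0
            for j in range(n_items):
                if j == i:
                    continue
                n_ij = wins.get((i, j), 0) + wins.get((j, i), 0)
                if n_ij:
                    denom += n_ij / (strength[i] + strength[j])
            updated.append(total_wins / denom if denom else strength[i])
        # Normalize so the strengths sum to n_items (the scale is arbitrary).
        total = sum(updated)
        strength = [s * n_items / total for s in updated]
    return strength


# Toy votes for three renditions of the same portrait (made-up numbers).
votes = {(0, 1): 8, (1, 0): 2, (1, 2): 7, (2, 1): 3, (0, 2): 9, (2, 0): 1}
scale = bradley_terry(votes, n_items=3)
```

In this toy setup each pair is compared the same number of times, so the estimated strengths follow the order of total wins. Aligning scales across different contents, as the abstract describes, would need additional cross-content comparisons that are not modeled here.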

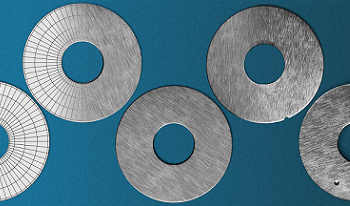

Portraits are one of the most common use cases in photography, especially in smartphone photography. However, evaluating portrait quality on real portraits is costly, inconvenient, and difficult to reproduce. We propose a new method to evaluate a large range of detail preservation renditions on realistic mannequins. This laboratory setup can cover all commercial cameras, from videoconference cameras to high-end DSLRs. Our method is based on 1) training a machine learning model on a perceptual scale target; 2) using two different regions of interest per mannequin, depending on the quality of the input portrait image; and 3) merging the two quality scales to produce the final wide-range scale. On top of providing a fine-grained, wide-range detail preservation quality output, numerical experiments show that the proposed method is robust to noise and sharpening, unlike other commonly used methods such as texture acutance on the Dead Leaves chart.

This paper presents a method for synthesizing 2D and 3D sensor data for various machine vision tasks. Depending on the task, different processing steps can be applied to a 3D model of an object. For object detection, segmentation, and pose estimation, random object arrangements are generated automatically. In addition, objects can be virtually deformed in order to create realistic images of non-rigid objects. For automatic visual inspection, synthetic defects are introduced into the objects. Thus, sensor-realistic datasets with typical object defects for quality control applications can be created, even in the absence of defective parts. The simulation of realistic images uses physically based rendering techniques. Material properties and different lighting situations are taken into account in the 3D models. The resulting tuples of 2D images and their ground truth annotations can be used to train a machine learning model, which is subsequently applied to real data. In order to minimize the reality gap, a random parameter set is selected for each image, resulting in images with high variety. For the use cases of damage detection and object detection, it has been shown that a machine learning model trained only on synthetic data can also achieve very good results on real data.
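The idea of drawing a random parameter set for each rendered image can be sketched as follows. The parameter names and ranges in `PARAM_RANGES` are hypothetical placeholders, not values from the paper, and the actual rendering step is left out.

```python
import random

# Hypothetical rendering parameters and ranges, chosen only for illustration.
PARAM_RANGES = {
    "light_intensity": (0.3, 1.5),
    "light_azimuth_deg": (0.0, 360.0),
    "camera_distance_m": (0.4, 1.2),
    "surface_roughness": (0.1, 0.9),
}


def sample_render_params(rng):
    """Draw one independent random parameter set for a single synthetic image."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}


rng = random.Random(42)  # fixed seed so a dataset can be regenerated
param_sets = [sample_render_params(rng) for _ in range(5)]
```

Each dictionary would then be passed to the renderer together with the 3D model, so that no two images share the same lighting, pose, and material settings.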

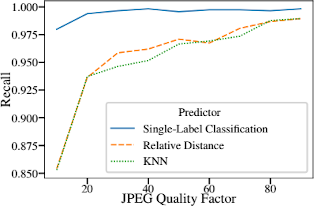

There are advantages and disadvantages to both robust and cryptographic hash methods. Integrating the qualities of robustness and cryptographic confidentiality would be highly desirable. However, the challenge is that the concept of similarity is not applicable to cryptographic hashes, preventing direct comparison between robust and cryptographic hashes. Therefore, when incorporating robust hashes into cryptographic hashes, it becomes essential to develop methods that effectively capture the intrinsic properties of robust hashes without compromising their robustness. In order to accomplish this, it is necessary to anticipate the hash bits that are most susceptible to modification, such as those that are affected by JPEG compression. Our work demonstrates that the prediction accuracy of existing approaches can be significantly improved by using a new hybrid hash comparison strategy.
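One way to picture the role of predicting unstable hash bits is the sketch below: if the bits likely to flip under JPEG compression are known, they can be masked out before the remaining bits are fed to a cryptographic hash, so that exact digest comparison still succeeds. This is only an assumed simplification, not the hybrid comparison strategy of the paper; the 8-bit hashes, the mask, and the function names are made up for illustration.

```python
import hashlib


def hamming_distance(a, b, stable_mask=None):
    """Count differing bits between two robust hashes stored as integers.

    If stable_mask is given, only the bits set in the mask are compared.
    """
    diff = a ^ b
    if stable_mask is not None:
        diff &= stable_mask
    return bin(diff).count("1")


def digest_of_stable_bits(robust_hash, stable_mask, n_bytes):
    """SHA-256 over the bits predicted to be stable (assumed scheme)."""
    stable = robust_hash & stable_mask
    return hashlib.sha256(stable.to_bytes(n_bytes, "big")).hexdigest()


original = 0b10110010    # toy robust hash of the original image
recompressed = 0b10010011  # toy robust hash after recompression
mask = 0b11011110        # bits 0 and 5 are predicted to be unstable

raw_distance = hamming_distance(original, recompressed)
masked_distance = hamming_distance(original, recompressed, mask)
same_digest = (digest_of_stable_bits(original, mask, 1)
               == digest_of_stable_bits(recompressed, mask, 1))
```

The sketch shows why prediction accuracy matters: a single unstable bit left outside the mask changes the digest completely, while masking too many bits discards discriminative information.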

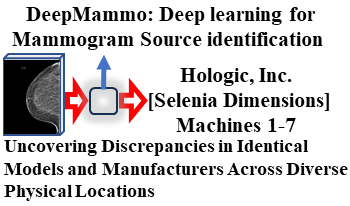

Advances in AI allow for fake image creation. These techniques can be used to fake mammograms, which could impact patient care and medicolegal cases. One method to verify that an image is original is to confirm the source of the image. We present DeepMammo, a deep-learning algorithm based on CNNs and FCNNs that identifies the machine that created a given mammogram. We analyze mammograms of 1574 patients obtained on 7 different mammography machines and randomly split the dataset by patient into training/validation (80%) and test (20%) datasets. DeepMammo achieves an accuracy of 98.09% and an AUC of 95.96% on the test dataset.
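Splitting by patient rather than by image keeps all mammograms of one patient on the same side of the split, which avoids leakage between training and test data. A minimal sketch of such a split is shown below; the record layout with a `patient_id` key and the function name are assumptions, not details from the study.

```python
import random


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Randomly assign whole patients to train/validation or test.

    records is a list of dicts with a 'patient_id' key (assumed layout).
    """
    patients = sorted({r["patient_id"] for r in records})
    rng = random.Random(seed)
    rng.shuffle(patients)
    n_test = round(len(patients) * test_fraction)
    test_ids = set(patients[:n_test])
    train = [r for r in records if r["patient_id"] not in test_ids]
    test = [r for r in records if r["patient_id"] in test_ids]
    return train, test


# Toy data: 10 patients with 2 images each.
records = [{"patient_id": p, "image": f"img_{p}_{v}.png"}
           for p in range(10) for v in range(2)]
train_set, test_set = split_by_patient(records)
```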

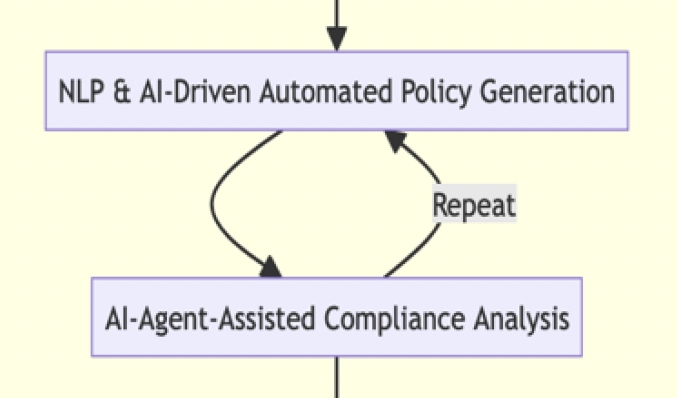

This paper introduces AI-based Cybersecurity Management Consulting (AI-CMC) as a disruptive technology to address the growing complexity of cybersecurity threats. AI-CMC combines advanced AI techniques with cybersecurity management, offering proactive and adaptive strategies through machine learning, natural language processing, and big data analytics. It enables real-time threat detection, predictive analytics, and intelligent decision-making. The paper explores AI-CMC's data-driven approach, learning models, and collaborative framework, demonstrating its potential to revolutionize cybersecurity. It examines AI-CMC's benefits, challenges, and ethical considerations, emphasizing transparency and bias mitigation. A roadmap for transitioning to AI-CMC and its implications for industry standards, policies, and global strategies are discussed. Despite potential limitations and vulnerabilities, AI-CMC offers transformative solutions for enhancing threat resilience and safeguarding digital assets, calling for collaborative efforts and responsible use for a secure digital future.

Deep learning (DL)-based algorithms are used in many integral modules of ADAS and Automated Driving Systems. Camera-based perception, driver monitoring, driving policy, and radar and lidar perception are a few examples of modules built using DL algorithms in such systems. These real-time DL applications require substantial compute, up to 250 TOPS, to run on an edge device. To meet these needs efficiently in terms of cost and power, silicon vendors provide complex SoCs with multiple DL engines. These SoCs also come with system resources such as L2/L3 on-chip memory, a high-speed DDR interface, and a PMIC to supply the data and power needed to use the DL engines' compute efficiently. These system resources would scale linearly with the number of DL engines in the system. This paper proposes solutions that optimize these system resources to provide a cost- and power-efficient solution: (1) cooperative and adaptive asynchronous DL engine scheduling to optimize peak resource usage along multiple vectors such as memory size, throughput, and power/current; (2) orchestration of cooperative and adaptive multi-core DL engines to achieve synchronous execution and maximum utilization of all resources. The proposed solution achieves up to 30% power saving or reduces overhead by 75% in a 4-core configuration consisting of 32 TOPS.
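The effect of asynchronous scheduling on peak resource usage can be illustrated with a toy model: if every engine draws its highest current at the start of a job, starting all engines at once stacks those peaks, while staggering the start times spreads them out. The per-step load profile and the offsets below are invented for illustration and do not come from the paper.

```python
def peak_load(profiles, offsets):
    """Return the maximum summed load when each profile starts at its offset.

    profiles: list of per-time-step load lists, one per DL engine.
    offsets:  list of start times (in time steps), one per DL engine.
    """
    length = max(len(p) + o for p, o in zip(profiles, offsets))
    total = [0.0] * length
    for profile, offset in zip(profiles, offsets):
        for t, value in enumerate(profile):
            total[offset + t] += value
    return max(total)


# Hypothetical load profile: a burst at job start, then a steady phase.
engine_profile = [4, 1, 1, 1]
profiles = [engine_profile] * 4  # four identical engines

aligned_peak = peak_load(profiles, offsets=[0, 0, 0, 0])
staggered_peak = peak_load(profiles, offsets=[0, 1, 2, 3])
```

In this toy case the aligned peak is 16 units and the staggered peak is 7, at the cost of a longer overall schedule. A real scheduler would also have to respect latency deadlines and data dependencies, which this sketch ignores.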

In this paper, we propose a multimodal unsupervised video learning algorithm designed to incorporate information from any number of modalities present in the data. We cooperatively train a network corresponding to each modality: at each stage of training, one of these networks is selected to be trained using the output of the other networks. To verify our algorithm, we train a model using RGB, optical flow, and audio. We then evaluate the effectiveness of our unsupervised learning model by performing action classification and nearest neighbor retrieval on a supervised dataset. We compare this triple modality model to contrastive learning models using one or two modalities, and find that using all three modalities in tandem provides a 1.5% improvement in UCF101 classification accuracy, a 1.4% improvement in R@1 retrieval recall, a 3.5% improvement in R@5 retrieval recall, and a 2.4% improvement in R@10 retrieval recall as compared to using only RGB and optical flow, demonstrating the merit of utilizing as many modalities as possible in a cooperative learning model.
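For readers unfamiliar with the retrieval metric, R@k is commonly computed as the fraction of queries for which at least one of the k nearest neighbors has the same class label as the query. The sketch below follows that common definition, which is assumed here since the abstract does not spell it out; the labels are toy values.

```python
def recall_at_k(query_labels, ranked_neighbor_labels, k):
    """Fraction of queries with at least one same-class item in the top k.

    ranked_neighbor_labels[i] holds the labels of the retrieved items for
    query i, ordered from nearest to farthest.
    """
    hits = sum(
        1
        for label, neighbors in zip(query_labels, ranked_neighbor_labels)
        if label in neighbors[:k]
    )
    return hits / len(query_labels)


queries = ["run", "jump", "swim"]
neighbors = [["run", "jump"], ["swim", "jump"], ["run", "run"]]

r_at_1 = recall_at_k(queries, neighbors, k=1)
r_at_2 = recall_at_k(queries, neighbors, k=2)
```

Because a larger k can only add hits, R@k is non-decreasing in k, which matches the R@1, R@5, and R@10 figures being reported separately.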

Scientific user facilities present a unique set of challenges for image processing due to the large volume of data generated from experiments and simulations. Furthermore, developing and implementing algorithms for real-time processing and analysis while correcting for any artifacts or distortions in images remains a complex task, given the computational requirements of the processing algorithms. In a collaborative effort across multiple Department of Energy national laboratories, the "MLExchange" project is focused on addressing these challenges. MLExchange is a machine learning framework that deploys interactive web interfaces to enhance and accelerate data analysis. The platform allows users to easily upload, visualize, and label data and to train networks. The resulting models can be deployed on real data, and both results and models can be shared with other scientists. The MLExchange web-based application for image segmentation allows for training, testing, and evaluating multiple machine learning models on hand-labeled tomography data. This environment provides users with an intuitive interface for segmenting images using a variety of machine learning algorithms and deep-learning neural networks. Additionally, these tools have the potential to overcome limitations in traditional image segmentation techniques, particularly for complex and low-contrast images.