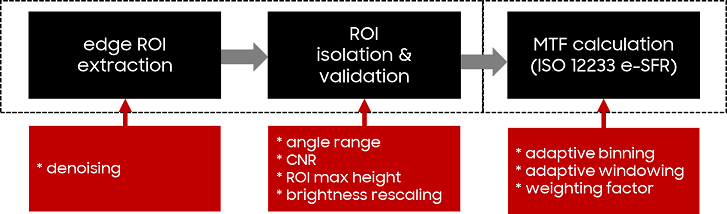

Evaluating spatial frequency response (SFR) in natural scenes is crucial for understanding camera system performance and its implications for image quality in various applications, including machine learning and automated recognition. The natural scene derived spatial frequency response (NS-SFR) represented a significant advancement by allowing direct assessment of camera performance without the need for charts, which have traditionally been limited. However, existing NS-SFR methods still suffer from restricted angular coverage and susceptibility to noise, both of which undermine measurement accuracy. In this paper, we propose a novel methodology that enhances the NS-SFR by employing an adaptive oversampling rate (OSR) and phase shift (PS) to broaden angular coverage, and by applying a newly developed adaptive window technique that reduces the impact of noise, leading to more reliable results. Furthermore, simulations compared against theoretical modulation transfer function (MTF) values, together with tests on natural scenes, demonstrate that our approach successfully addresses the challenges of the existing methods, offering a more accurate representation of camera performance in natural scenes.
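
For orientation, the generic edge-based SFR computation that such methods build on can be sketched as follows. This is a minimal illustration and not the method proposed in the abstract: it uses a fixed Hann window instead of an adaptive one, performs no oversampling or phase shifting, and the function name `sfr_from_esf` is an assumption made for this example.

```python
import cmath
import math


def sfr_from_esf(esf):
    """Return the normalized SFR (DC to Nyquist) of a 1-D edge spread function.

    Generic sketch only: fixed Hann window, no oversampling, no phase shift.
    """
    # Line spread function: first difference of the edge profile.
    lsf = [b - a for a, b in zip(esf, esf[1:])]
    n = len(lsf)
    # Hann window centered on the profile; it tapers the tails,
    # where noise tends to dominate over the edge signal.
    windowed = [
        v * 0.5 * (1.0 - math.cos(2.0 * math.pi * (i + 0.5) / n))
        for i, v in enumerate(lsf)
    ]
    # Plain DFT magnitude for frequency bins 0 .. n // 2.
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(
            v * cmath.exp(-2j * math.pi * k * i / n)
            for i, v in enumerate(windowed)
        )
        mags.append(abs(acc))
    # Normalize so that the response at zero frequency equals 1.
    return [m / mags[0] for m in mags]


# Synthetic Gaussian-blurred edge (sigma = 2 samples), used only as test input.
sigma = 2.0
esf = [0.5 * (1.0 + math.erf((i - 32) / (sigma * math.sqrt(2.0)))) for i in range(65)]
sfr = sfr_from_esf(esf)
```

The choice of window matters here: a wider window keeps more of the edge signal but admits more noise, which is the trade-off an adaptive window is meant to manage.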

With the development of image-based applications, assessing the quality of images has become increasingly important. Although our perception of image quality changes as we age, most existing image quality assessment (IQA) metrics make simplifying assumptions about the age of observers, thus limiting their use for age-specific applications. In this work, we propose a personalized IQA metric to assess the perceived image quality of observers from different age groups. First, we apply an age simulation algorithm to compute how an observer of a particular age would perceive a given image. More specifically, we process the input image according to an age-specific contrast sensitivity function (CSF), which predicts the reduction of contrast visibility associated with the aging eye. We then combine age simulation with existing IQA metrics to calculate the age-specific perceived image quality score. To validate the effectiveness of our combined model, we conducted a psychophysical experiment in a controlled laboratory environment with young (18-31 y.o.), middle-aged (32-52 y.o.), and older (53+ y.o.) adults, measuring their image quality preferences for 84 test images. Our analysis shows that the predictions of our age-specific IQA metric correlate well with the subjective IQA results collected in our psychophysical experiment.

With the development of various autofocusing (AF) technologies, sensor manufacturers are required to evaluate their performance accurately. The basic method of evaluating AF performance is to measure the time taken to obtain the refocused image, and the sharpness of that image, while repeatedly inducing the refocusing process. Traditionally, this process was conducted manually by repeatedly covering and uncovering an object or the sensor, which can lead to unreliable results due to human error and the light-blocking method itself. To deal with this problem, we propose a new device and solutions using a transparent display. Our method can provide more reliable results than the existing method by modulating the opacity, pattern, and repetition cycle of the target on the transparent display.

In recent years, several Image Quality Metrics (IQMs) have been introduced that compare the feature maps extracted from different pre-trained deep learning models [1-3]. While such objective IQMs have shown a high correlation with subjective scores, little attention has been paid to how they could be used to better understand the Human Visual System (HVS) and how observers evaluate the quality of images. In this study, using different pre-trained Convolutional Neural Network (CNN) models, we identify the most relevant features in Image Quality Assessment (IQA). By visualizing these feature maps, we aim to better understand which features play a dominant role when evaluating the quality of images. Experimental results on four benchmark datasets show that the most important feature maps represent repeated textures such as stripes or checkers, and that feature maps linked to the colors blue or orange also play a crucial role. Additionally, when the quality of an image is calculated from a comparison of feature maps, a higher accuracy can be reached when only the most relevant feature maps are used instead of all the feature maps extracted from a CNN model.

[1] Amirshahi, Seyed Ali, Marius Pedersen, and Stella X. Yu. "Image quality assessment by comparing CNN features between images." Journal of Imaging Science and Technology 60.6 (2016): 60410-1.
[2] Amirshahi, Seyed Ali, Marius Pedersen, and Azeddine Beghdadi. "Reviving traditional image quality metrics using CNNs." Color and Imaging Conference. Vol. 2018. No. 1. Society for Imaging Science and Technology, 2018.
[3] Gao, Fei, et al. "DeepSim: Deep similarity for image quality assessment." Neurocomputing 257 (2017): 104-114.

The wide use of cameras by the public has raised interest in image quality evaluation and ranking. Current cameras embed complex processing pipelines that adapt strongly to the scene content by implementing, for instance, advanced noise reduction or local adjustments on faces. However, current methods of image quality assessment are based on static geometric charts, which are not representative of common camera usage, where portraits are the main target. Moreover, on non-synthetic content the most relevant features, such as detail preservation or noisiness, are often intractable. To overcome this situation, we propose to mix classical measurements and machine learning (ML) based methods: we reproduce realistic content that triggers these complex processing pipelines under controlled lab conditions, which allows for rigorous quality assessment. ML-based methods can then reproduce previously annotated perceptual quality. In this paper, we focus on noise quality evaluation and test on two different setups: close-up and distant portraits. These setups provide flexibility in scene capture conditions and, above all, allow the evaluation of the full range of camera quality, from high-quality DSLRs to poor-quality video conferencing cameras. Our numerical results show the relevance of our solution compared to geometric charts and the importance of adapting to realistic content.

360-degree image quality assessment using deep neural networks is usually designed using a multi-channel paradigm exploiting possible viewports. This is mainly due to the high resolution of such images and the unavailability of ground truth labels (subjective quality scores) for individual viewports. The multi-channel model is hence trained to predict the score of the whole 360-degree image. However, this comes at a high complexity cost, as multiple neural networks run in parallel. In this paper, patch-based training is proposed instead. To account for the non-uniform distribution of quality across a scene, a weighted pooling of the patches’ scores is applied. The weighting relies on natural scene statistics in addition to perceptual properties related to immersive environments.
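
The weighted pooling step can be illustrated with a short sketch. The abstract does not specify how the weights are computed, so the cosine-of-latitude weight below (commonly used for equirectangular images) is only a stand-in assumption, and the names `weighted_pool` and `latitude_weight` are hypothetical.

```python
import math


def weighted_pool(scores, weights):
    """Pool per-patch quality scores into one image score by weighted average."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(s * w for s, w in zip(scores, weights)) / total


def latitude_weight(row, height):
    """Hypothetical weight: cosine of the latitude of a patch's center row
    in an equirectangular image (1 near the equator, smaller near the poles).
    This is an illustrative choice, not the weighting from the abstract.
    """
    return math.cos((row + 0.5 - height / 2.0) * math.pi / height)


# Three patches at different rows of a 4-row grid (illustrative values only).
patch_scores = [2.0, 4.0, 3.0]
patch_rows = [0, 1, 3]
weights = [latitude_weight(r, 4) for r in patch_rows]
image_score = weighted_pool(patch_scores, weights)
```

Patches near the poles of an equirectangular projection are stretched and viewed less often, which is why a pooling of this kind gives them less influence than a plain mean would.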

Image quality assessment (IQA) is an effective way to evaluate image signal processors (ISPs). Here, we present a singular value decomposition (SVD)-based IQA method to quantitatively evaluate the morphological distortion of chessboard patterns. Incorrect ISP tuning parameters can create suboptimal images with artifacts on the edges of small text or high-frequency patterns. We reproduced those artifacts using a small chessboard pattern and quantitatively evaluated the morphological distortion in the pattern. We then verified our method through a qualitative evaluation survey and Pearson correlation. The score of the proposed method was in good agreement with the qualitative evaluation result, with a Pearson correlation coefficient (PCC) of 0.97.
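
As a reminder of how the verification statistic is defined, the Pearson correlation coefficient between objective scores and survey ratings can be computed as in the sketch below. The function name `pearson` and the input values are placeholders for illustration, not data from the study.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Covariance numerator and the two standard-deviation numerators;
    # the common 1/n factors cancel in the ratio.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Placeholder inputs: perfectly linear and perfectly inverse relationships.
r_positive = pearson([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
r_negative = pearson([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
```

A value close to 1 means the objective score ranks and spaces the images almost exactly as the survey participants did.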

In this paper, we propose a novel and standardized approach to the problem of camera-quality assessment on portrait scenes. Our goal is to evaluate the capacity of smartphone front cameras to preserve texture details on faces. We introduce a new portrait setup and an automated texture measurement. The setup includes two custom-built lifelike mannequin heads, shot in a controlled lab environment. The automated texture measurement includes region-of-interest (ROI) detection and a deep neural network. To this end, we create a realistic mannequin database, which contains images from different cameras, shot in several lighting conditions. The ground truth is based on a novel pairwise comparison technology in which the scores are expressed in just-noticeable differences (JND). In terms of methodology, we propose a multi-scale CNN architecture with random crop augmentation to overcome overfitting and to extract low-level features. We validate our approach by comparing its performance with several baselines inspired by the image quality assessment (IQA) literature.

Banding has been regarded as one of the most severe defects affecting overall image quality in the printing industry. There has been a lot of research on it, but most of it has focused on uniform pages or specific test images. Aiming at detecting banding on customer content pages, this paper proposes a banding processing pipeline that can automatically detect banding, distinguish periodic from isolated banding, and estimate the periodic interval. In addition, based on the detected banding characteristics, the pipeline predicts the overall quality of printed customer content pages and obtains predictions similar to human perceptual assessment.
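
One common way to estimate the interval of a periodic defect, shown here only as an illustration, is to look for the strongest peak in the autocorrelation of a 1-D profile taken across the bands. The abstract does not describe how the pipeline estimates the interval, so the function `estimate_period`, its `min_lag` parameter, and the synthetic profile are all assumptions.

```python
def estimate_period(profile, min_lag=2):
    """Return (lag, correlation) of the strongest positive autocorrelation peak.

    `profile` is a 1-D sequence, e.g. the mean intensity of each scan line.
    Returns (None, 0.0) when no positive correlation is found.
    """
    n = len(profile)
    mean = sum(profile) / n
    centered = [v - mean for v in profile]
    energy = sum(v * v for v in centered)
    if energy == 0:
        return None, 0.0  # flat profile: nothing periodic to detect
    best_lag, best_corr = None, 0.0
    for lag in range(min_lag, n // 2 + 1):
        # Biased autocorrelation estimate, normalized by the lag-0 energy.
        # It decays with lag, so the fundamental period beats its multiples.
        corr = sum(centered[i] * centered[i + lag] for i in range(n - lag)) / energy
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr


# Synthetic profile with a band every 10 lines (illustrative input only).
profile = [1.0 if i % 10 == 0 else 0.0 for i in range(100)]
lag, corr = estimate_period(profile)
```

Under this sketch, a high peak correlation suggests periodic banding, while a detected band with no strong peak would be treated as isolated; any threshold separating the two cases would have to be tuned on real data.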

Assessing the quality of images is a challenging task. To achieve this goal, either the images must be evaluated by a pool of subjects following a well-defined assessment protocol, or an objective quality metric must be defined. In this contribution, an objective metric based on neural networks is proposed. The model takes the human visual system into account by computing a saliency map of the image under test. The system is based on two modules. The first is trained using normalized distorted images; it learns features from the original and distorted images and from the estimated saliency map, and it also estimates the prediction error. The second module (a non-linear regression module) is trained with the available subjective scores. The performance of the proposed metric has been evaluated using state-of-the-art quality assessment datasets. The results show the effectiveness of the proposed system in matching the subjective quality scores.