The National Gallery of Art developed a systematic approach to evaluate and categorize its extensive digital image collection spanning 20 years of technological evolution. This study addresses the challenge of inconsistent image quality resulting from varying capture technologies and methodologies over time. A four-tier rating system was created based on comprehensive analysis of capture devices, technical specifications, and workflow documentation. The system enables efficient assessment of image suitability for different applications while providing clear guidance for re-digitization decisions. The implementation includes integration with the institution's digital asset management system, offering a practical framework that other cultural heritage institutions can adapt for managing legacy digital collections while maintaining current quality standards.

This research investigated the influence of lightness, lightness contrast, observer characteristics, and display types on image preference and perception. Previous studies have emphasized the importance of color attributes in shaping image quality; in this study, we explored lightness attributes using CIECAM16 color space. Four experiments were conducted on OLED and QLED displays, during which participants adjusted color attributes of images to their preference and rated their preferred images relative to reference images. The results indicated that lightness attributes significantly impact image preference, and that observer characteristics and image content influence lightness preference on each display type.

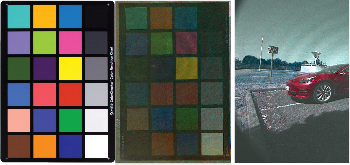

Quantification of the image sensor signal and noise is essential to derive key image quality performance indicators. Image sensors in automotive cameras are predominantly operated in high dynamic range (HDR) mode; however, legacy procedures to quantify image sensor noise were optimized for operation in standard dynamic range mode. This work discusses the theoretical background and the workflow of the photon-transfer curve (PTC) test. It then presents example implementations of the PTC test and its derivatives, both according to legacy procedures and according to procedures optimized for image sensors in HDR mode.
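
As a rough illustration of the PTC idea, the sketch below covers only the textbook linear case, not the HDR-optimized procedures the abstract refers to. It assumes dark-offset-corrected flat-field frame pairs and a shot-noise-limited sensor, so that temporal variance grows linearly with mean signal. The function names `pair_stats` and `fit_ptc` are hypothetical.

```python
from statistics import fmean, pvariance


def pair_stats(frame_a, frame_b):
    """Mean signal and temporal noise variance from two flat-field frames.

    Differencing the two frames cancels fixed-pattern noise; the variance
    of the difference is twice the temporal variance of a single frame.
    Frames are flat lists of pixel values in digital numbers (DN).
    """
    mean_signal = (fmean(frame_a) + fmean(frame_b)) / 2.0
    diff = [a - b for a, b in zip(frame_a, frame_b)]
    return mean_signal, pvariance(diff) / 2.0


def fit_ptc(means, variances):
    """Least-squares fit of variance = gain * mean + offset.

    Under the linear-model assumption, the slope estimates the system
    gain (DN per electron) and the offset the signal-independent
    (read) noise variance in DN^2.
    """
    mx, my = fmean(means), fmean(variances)
    sxx = sum((x - mx) ** 2 for x in means)
    sxy = sum((x - mx) * (y - my) for x, y in zip(means, variances))
    gain = sxy / sxx
    return gain, my - gain * mx
```

In use, `pair_stats` would be evaluated at several exposure levels and the resulting (mean, variance) points passed to `fit_ptc`. A sensor in HDR mode generally violates the single-line assumption, which is the limitation of legacy procedures that the abstract points to.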

Conventional image quality metrics (IQMs), such as PSNR and SSIM, are designed for perceptually uniform gamma-encoded pixel values and cannot be directly applied to perceptually non-uniform linear high-dynamic-range (HDR) colors. Similarly, most of the available datasets consist of standard-dynamic-range (SDR) images collected in standard and possibly uncontrolled viewing conditions. Popular pre-trained neural networks are likewise intended for SDR inputs, restricting their direct application to HDR content. On the other hand, training HDR models from scratch is challenging due to limited available HDR data. In this work, we explore more effective approaches for training deep learning-based models for image quality assessment (IQA) on HDR data. We leverage networks pre-trained on SDR data (source domain) and re-target these models to HDR (target domain) with additional fine-tuning and domain adaptation. We validate our methods on the available HDR IQA datasets, demonstrating that models trained with our combined recipe outperform previous baselines, converge much faster, and reliably generalize to HDR inputs.

This paper investigates the relationship between image quality and computer vision performance. Two image quality metrics, as defined in the IEEE P2020 draft Standard for Image quality in automotive systems, are used to determine the impact of image quality on object detection. The IQ metrics used are (i) Modulation Transfer Function (MTF), the most commonly utilized metric for measuring the sharpness of a camera; and (ii) Modulation and Contrast Transfer Accuracy (CTA), a newly defined, state-of-the-art metric for measuring image contrast. The results show that the MTF and CTA of an optical system are impacted by ISP tuning. Some correlation is shown to exist between MTF and object detection (OD) performance: in some models, AP5095 improves as MTF50 increases. Scenes with similar CTA scores, however, can have widely varying object detection performance; for example, Gaussian noise and edge enhancement produce similar CTA scores but different AP5095 scores. For this reason, CTA is shown to be limited in its ability to predict object detection performance. The results suggest that MTF is a better predictor of ML performance than CTA.
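
To make the MTF50 figure concrete, the sketch below is a simplified illustration, not the IEEE P2020 or slanted-edge measurement procedure. It derives an MTF curve from a one-dimensional edge spread function (ESF) and locates the frequency at which the MTF falls to 0.5. It assumes a noise-free ESF sampled at pixel pitch and omits the oversampling and windowing used in practice. The function names are hypothetical.

```python
import cmath


def mtf_from_esf(esf, n_freqs=257):
    """MTF sampled from 0 to 0.5 cycles/pixel, computed from an ESF.

    The line spread function (LSF) is the discrete derivative of the ESF;
    the MTF is the magnitude of its Fourier transform, normalized to 1 at
    zero frequency.
    """
    lsf = [b - a for a, b in zip(esf, esf[1:])]
    area = abs(sum(lsf))
    freqs = [0.5 * k / (n_freqs - 1) for k in range(n_freqs)]
    mtf = []
    for f in freqs:
        s = sum(v * cmath.exp(-2j * cmath.pi * f * n) for n, v in enumerate(lsf))
        mtf.append(abs(s) / area)
    return freqs, mtf


def mtf50(freqs, mtf):
    """First frequency where the MTF drops below 0.5 (linear interpolation).

    Returns None if the curve never falls below 0.5 up to Nyquist.
    """
    for i in range(1, len(mtf)):
        if mtf[i] < 0.5 <= mtf[i - 1]:
            t = (mtf[i - 1] - 0.5) / (mtf[i - 1] - mtf[i])
            return freqs[i - 1] + t * (freqs[i] - freqs[i - 1])
    return None
```

For an edge blurred over two pixels, such as `[0, 0, 0, 0.5, 1, 1, 1, 1]`, the MTF is |cos(πf)| and MTF50 falls at about one third of a cycle per pixel. A perfectly sharp step never drops below 0.5, so `mtf50` returns `None`.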

This paper presents the design of an accurate rain model for the commercially available Anyverse automotive simulation environment. The model incorporates the physical properties of rain, and a process to validate the model against real rain is proposed. Because path tracing through a particle-based model is computationally expensive, a second, more computationally efficient model is also proposed. In the second model, the rain is represented by a combination of a particle-based model and an attenuation field. The attenuation field is fine-tuned against the particle-only model to minimize the difference between the two models.
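
The abstract does not specify the form of the attenuation field or the fitting procedure. As one hedged illustration of tuning such a field against a reference model, the sketch below assumes a spatially uniform extinction coefficient under the Beer-Lambert law and fits it to transmittance values that, in this hypothetical setup, would come from the particle-only renders. The names `transmittance` and `fit_extinction` are illustrative.

```python
import math


def transmittance(sigma, distance):
    """Beer-Lambert transmittance through a uniform medium.

    sigma is the extinction coefficient (1/m), distance is the path
    length (m). Assumes a homogeneous attenuation field.
    """
    return math.exp(-sigma * distance)


def fit_extinction(distances, transmittances):
    """Least-squares extinction coefficient in the log domain.

    Minimizes sum((ln T_i + sigma * d_i)^2), whose closed-form solution
    is sigma = -sum(d_i * ln T_i) / sum(d_i^2).
    """
    num = sum(-d * math.log(t) for d, t in zip(distances, transmittances))
    den = sum(d * d for d in distances)
    return num / den
```

A spatially varying field would need a per-voxel fit rather than a single coefficient, but the objective of minimizing the mismatch against the reference model would have the same shape.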

VCX, or Valued Camera eXperience, is a nonprofit organization dedicated to the objective and transparent evaluation of mobile phone cameras. Its members continuously work on the development of a test scheme that can provide an objective score for camera performance. Every device is tested for a variety of image quality factors, which are typically based on existing standards. This paper presents the latest development, the newly released version 2023, and the process behind it. New metrics include extended tests on video dynamics, AE, and AWB; dedicated tests on ultra-wide modules; and adjustments to the metric system based on a large-scale subjective study.

The exploration of the Solar System using unmanned probes and rovers has improved the understanding of our planetary neighbors. Despite a large variety of instruments, optical and near-infrared cameras remain critical for these missions, both to understand a planet’s surroundings and geology and to communicate easily with the general public. However, missions on planetary bodies must satisfy strong constraints in terms of robustness, data size, and amount of onboard computing power. Although this trend is evolving, commercial image-processing software cannot be integrated. Still, as the optical and spectral information of planetary surfaces is a key science objective, spectral filter arrays (SFAs) provide an elegant, compact, and cost-efficient solution for rovers. In this contribution, we provide ways to process multi-spectral images on the ground to obtain the best image quality, while remaining as generic as possible. This study is performed on a prototype SFA. Demosaicing algorithms and ways to correct the spectral and color information in these images are also detailed. An application of these methods to a custom-built SFA is shown, demonstrating that this technology represents a promising solution for rovers.

Print margin and skew errors describe an image that is offset or placed crookedly on the printed page. They are among the most common defects in electrophotographic printers and dramatically affect print quality. They occur primarily when using a two-sided printing module on the printer. To correct print margin and skew errors, we must first accurately detect them on the printed page. This paper proposes a method to accurately detect print margin and skew based on the Hough Lines Detection algorithm. The method has three steps. First, we project the digital master image onto the scanned test image, together with the master image edges. The second step was the most challenging part of the design, because all of our scanned test pages showed only two or three edges of the printed paper, and these were barely visible to the naked eye. We use an image processing method and the Hough Lines Detection algorithm to extract the paper edges. The third step uses the projected master image edges and the extracted paper edges to calculate the print margin and skew. Our algorithm is an efficient and accurate method for detecting print margin and skew errors, as verified on actual scanned images.
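
The line-extraction step can be sketched as a minimal Hough transform. This is an illustrative stand-in, not the authors' pipeline, whose preprocessing and parameters are not given in the abstract. It assumes edge points have already been extracted from the scan, and it searches only near-horizontal lines (normal angles of 80° to 100°) to estimate the skew of a top or bottom paper edge. The function name and default bin sizes are hypothetical.

```python
import math
from collections import Counter


def hough_skew(points, theta_min=80.0, theta_max=100.0,
               theta_step=0.1, rho_step=1.0):
    """Estimate the skew of a near-horizontal edge by Hough voting.

    Each point (x, y) votes for every line x*cos(theta) + y*sin(theta) = rho
    passing through it. The accumulator cell with the most votes gives the
    dominant line. Returns (skew_degrees, rho, vote_count), where the skew
    is the line's angle from horizontal in the coordinate frame of the
    input points. Angular accuracy is limited by the bin sizes.
    """
    n_theta = int(round((theta_max - theta_min) / theta_step)) + 1
    votes = Counter()
    for k in range(n_theta):
        theta = math.radians(theta_min + k * theta_step)
        c, s = math.cos(theta), math.sin(theta)
        for x, y in points:
            rho_bin = round((x * c + y * s) / rho_step)
            votes[(k, rho_bin)] += 1
    (k_best, rho_bin), count = votes.most_common(1)[0]
    theta_best = theta_min + k_best * theta_step
    return theta_best - 90.0, rho_bin * rho_step, count
```

Comparing the skew and offset of the detected paper edge with those of the projected master image edge would then yield the margin and skew error. With the default bins, several neighboring angles can tie on short edges, so the estimate should be read as accurate to a few tenths of a degree.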

Many objective quality metrics have been developed during the last decade. A simple way to improve the efficiency of assessing the visual quality of images is to fuse several metrics into a combined one. The goal of the fusion approach is to exploit the advantages of the metrics used and diminish the influence of their drawbacks. In this paper, a symbolic regression technique using an evolutionary algorithm known as multi-gene genetic programming (MGGP) is applied to predict the subjective scores of images in datasets by combining the objective scores of a set of image quality metrics (IQMs). By learning from image datasets, the MGGP determines the appropriate image quality metrics, out of the 21 metrics used, whose objective scores are employed as predictors in the symbolic regression model. It does so by simultaneously optimizing two competing objectives: the model's ‘goodness of fit’ to the data and the model's ‘complexity’. Six of the largest publicly available image databases (namely LIVE, CSIQ, TID2008, TID2013, IVC, and MDID) are used for learning and testing the predictive models, according to the k-fold cross-validation and cross-dataset strategies. The proposed approach is compared against state-of-the-art objective image quality assessment approaches. The comparison shows that the proposed approach outperforms other recently developed state-of-the-art fusion approaches.