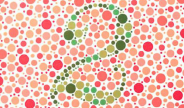

This paper describes a colour image enhancement method for observers with colour-vision deficiencies. The proposed method has three stages. First, a conversion relation was established between the wavelength shift (measured in nanometres) of a colour-deficient observer (CDO) and the severity of the colour deficiency. Second, the perceived colour gamut was built by applying this conversion relation. Finally, the original images were re-coloured using a gamut mapping algorithm that maps colours from the gamut of a colour-normal observer (CNO) to that of a CDO. Psychophysical experiments were then conducted to show the effectiveness of the method.
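The final stage can be illustrated with a minimal sketch. The abstract does not say which gamut mapping algorithm is used, so the example below assumes one common strategy, linear chroma compression in an opponent-colour plane such as CIELAB (a*, b*). The function name `compress_chroma` and the two gamut-boundary parameters are hypothetical and are not taken from the paper.

```python
import math


def compress_chroma(a, b, cno_max_chroma, cdo_max_chroma):
    """Scale a colour's chroma from the CNO gamut into the CDO gamut.

    Assumption: both gamut boundaries along this hue are summarised by a
    single maximum chroma value. Hue (the angle of (a, b)) is preserved;
    only the distance from the neutral axis is scaled.
    """
    if cno_max_chroma <= 0.0:
        raise ValueError("cno_max_chroma must be positive")
    scale = cdo_max_chroma / cno_max_chroma
    return a * scale, b * scale


# Hypothetical example: the CDO gamut reaches half the CNO chroma at this hue.
a_in, b_in = 30.0, 40.0
a_out, b_out = compress_chroma(a_in, b_in, cno_max_chroma=100.0, cdo_max_chroma=50.0)
```

Linear compression keeps the relative ordering of colours along a hue line, which is one reason it is a frequent baseline; a clipping or non-linear mapping would be an equally plausible reading of the abstract.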

Diffraction X-ray images provide molecular-level information in tissue under hydrated, physiological conditions at the physiologically relevant millisecond time scale. Before measurements can be made on diffraction X-ray images, the background produced during the capture process must be subtracted. This background is non-uniform: it is strongest at the diffraction center and decays with increasing distance from the center. Existing methods require careful parameter selection or assume a specific background model. In this paper we propose a novel approach to background subtraction in which we learn to subtract the background from labeled examples. The labeled examples are image pairs in which one image contains the diffraction background and the other has the background removed. Using a deep convolutional neural network (CNN), we learn to map an image with background to an image without it. Experimental results demonstrate that the proposed approach is capable of learning background removal with results close to ground truth data (PSNR > 68, SSIM > 0.99) and without having to manually select background parameters.
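The structure of a labeled pair and the PSNR metric quoted above can be sketched as follows. This is an illustration only: the exponential radial background, the helper names, and all numeric values are assumptions chosen for the example, not the paper's data or background model. PSNR is computed with its standard definition, 10·log10(MAX² / MSE).

```python
import math


def radial_background(size, peak=100.0, decay=0.2):
    """Hypothetical background: strongest at the image center and
    decaying exponentially with distance from it."""
    c = (size - 1) / 2.0
    return [
        [peak * math.exp(-decay * math.hypot(x - c, y - c)) for x in range(size)]
        for y in range(size)
    ]


def add_images(first, second):
    """Pixel-wise sum of two images of equal shape."""
    return [[p + q for p, q in zip(r1, r2)] for r1, r2 in zip(first, second)]


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    count = sum(len(row) for row in reference)
    mse = sum(
        (p - q) ** 2
        for ref_row, est_row in zip(reference, estimate)
        for p, q in zip(ref_row, est_row)
    ) / count
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Build one labeled pair: (image with background, image without background).
size = 9
clean = [[0.0] * size for _ in range(size)]
clean[2][6] = 200.0  # a single hypothetical diffraction spot
background = radial_background(size)
with_background = add_images(clean, background)
training_pair = (with_background, clean)

# PSNR of the uncorrected image against ground truth; a trained network's
# output would be scored against `clean` in the same way.
baseline_psnr = psnr(clean, with_background)
```

A network trained on many such pairs takes the first element as input and is penalised for deviating from the second, so no background parameters need to be chosen by hand at inference time.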