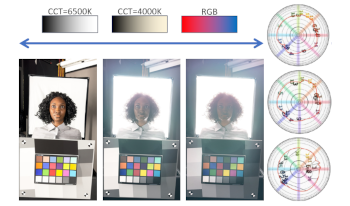

This article provides elements toward answering the question: how can the general stylistic color-rendering choices made by imaging devices capable of recording HDR formats be judged objectively? The goal of our work is to build a framework for analyzing color-rendering behavior in targeted regions of any scene, supporting both HDR and SDR content. To this end, we discuss the modeling of camera behavior and visualization methods based on the ICtCp/ITP color spaces, alongside examples of lab and real scenes that showcase common issues and ambiguities in HDR rendering.
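As a concrete reference for the ICtCp/ITP representation mentioned above, here is a minimal Python sketch of the conversion defined in ITU-R BT.2100 (linear BT.2020 RGB → LMS → PQ → ICtCp) and of the ΔE_ITP color difference from ITU-R BT.2124, in which T = 0.5·Ct. It assumes the input is linear BT.2020 RGB expressed in absolute luminance (cd/m², up to 10000). The function names are illustrative, and this is not the analysis framework described in the article.

```python
import math

# SMPTE ST 2084 (PQ) constants, as used by ITU-R BT.2100
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_inverse_eotf(luminance_cd_m2):
    """Map absolute luminance (cd/m^2, 0..10000) to a PQ-encoded value in [0, 1]."""
    y = max(luminance_cd_m2, 0.0) / 10000.0
    ym = y ** M1
    return ((C1 + C2 * ym) / (1 + C3 * ym)) ** M2


def rgb2020_to_ictcp(r, g, b):
    """Convert linear BT.2020 RGB (in cd/m^2) to ICtCp following BT.2100."""
    # Each LMS row sums to 4096, so neutral inputs give L = M = S
    l = (1688 * r + 2146 * g + 262 * b) / 4096
    m = (683 * r + 2951 * g + 462 * b) / 4096
    s = (99 * r + 309 * g + 3688 * b) / 4096
    lp, mp, sp = (pq_inverse_eotf(v) for v in (l, m, s))
    i = 0.5 * lp + 0.5 * mp
    ct = (6610 * lp - 13613 * mp + 7003 * sp) / 4096
    cp = (17933 * lp - 17390 * mp - 543 * sp) / 4096
    return i, ct, cp


def ictcp_to_itp(i, ct, cp):
    """ITP (BT.2124) halves the Ct axis to make distances more uniform."""
    return i, 0.5 * ct, cp


def delta_e_itp(rgb_a, rgb_b):
    """Delta E_ITP between two linear BT.2020 RGB triplets (cd/m^2)."""
    ia, ta, pa = ictcp_to_itp(*rgb2020_to_ictcp(*rgb_a))
    ib, tb, pb = ictcp_to_itp(*rgb2020_to_ictcp(*rgb_b))
    return 720 * math.sqrt((ia - ib) ** 2 + (ta - tb) ** 2 + (pa - pb) ** 2)
```

Because ITP is defined on absolute luminance, the same function can compare an SDR and an HDR rendering of a region once both are expressed in cd/m², e.g. `delta_e_itp(reference_rgb, rendered_rgb)` for a patch of a lab chart.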

Images captured in low light suffer from underexposure and noise. Such poor-quality images hinder computer vision algorithms as well as human viewers. Increasing the exposure time addresses this problem but introduces new ones: in applications such as ADAS, where the scene contains fast-moving objects, longer exposures cause motion blur, and in applications that demand high frame rates, longer exposures are not an option at all. Increasing the gain instead amplifies noise and saturates pixels at the high end. A real-time, scene-adaptive algorithm is therefore required to enhance low-light images. We propose a real-time low-light enhancement algorithm for low-cost embedded platforms that preserves more detail than existing global enhancement algorithms. The algorithm is integrated into the image signal processing pipeline of TI's TDA3x and achieves ~50 fps on the C66x DSP for HD-resolution video captured with OmniVision's OV10640 Bayer image sensor.
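The abstract does not spell out the proposed algorithm, so the following only illustrates the kind of baseline it is compared against: a hypothetical scene-adaptive *global* enhancement in Python, in which one gamma value is estimated per frame from the mean luminance and the same curve is applied to every pixel. Because a single curve must serve dark and bright regions alike, this is the class of method the proposed algorithm is reported to improve on in detail preservation. The function name and the mid-gray target of 0.5 are assumptions for illustration.

```python
import math


def adaptive_gamma(pixels, target_mean=0.5, eps=1e-6):
    """Hypothetical scene-adaptive global enhancement (not the paper's method).

    `pixels` is a flat list of luminance values normalised to [0, 1].
    One gamma is chosen so that the frame's mean luminance value maps to
    `target_mean`; the same curve is then applied to every pixel.
    """
    mean = sum(pixels) / len(pixels)
    mean = min(max(mean, eps), 1 - eps)  # keep both logarithms finite and nonzero
    # Solve mean ** gamma == target_mean for gamma
    gamma = math.log(target_mean) / math.log(mean)
    return [p ** gamma for p in pixels], gamma
```

At the reported ~50 fps, all per-frame processing, including a step like this one, must fit within 1000 / 50 = 20 ms.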

A system-on-chip (SoC) platform that combines a dual-core microprocessor (μP) and a field-programmable gate array (FPGA) with interfaces for sensors and networking is a promising architecture for edge-computing applications in computer vision. In this paper, we consider a case study involving the low-cost Zynq-7000 SoC, which is used to implement a three-stage image signal processor (ISP) for a nonlinear CMOS image sensor (CIS) and to interface the imaging system to a network. The high-definition imaging system operates efficiently in hard real time by exploiting an FPGA implementation, while it sends information over the network only on demand by exploiting a Linux-based μP implementation. In the case study, the Zynq-7000 SoC is configured in a novel way: to guarantee hard real-time performance, the FPGA is always the master, communicating with the μP through interrupt service routines and direct memory access (DMA) channels. Results include a validation of the overall system, using a simulated CIS, and an analysis of the system complexity. On this low-cost SoC, resources remain available for significant additional complexity, so that a computer vision application can be integrated with the nonlinear CMOS imaging system in the future.
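The FPGA-as-master arrangement can be pictured with a small Python model. It assumes, for illustration only, a ring of frame buffers in shared memory: the real-time side writes every frame and flags the newest slot (standing in for the DMA transfer and the interrupt) without ever waiting for the μP, while the Linux side reads the newest frame only when a network request arrives. The class and method names are hypothetical, and this is a behavioral sketch rather than the authors' hardware design or driver code.

```python
class FrameRing:
    """Hypothetical model of an FPGA-master frame ring in shared memory."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.slots = [None] * capacity
        self.frames_written = 0
        self.latest_slot = -1  # stands in for an interrupt/status register

    def fpga_write(self, frame):
        """Real-time side: always succeeds, overwriting the oldest slot."""
        slot = self.frames_written % self.capacity
        self.slots[slot] = frame    # models the DMA transfer into memory
        self.frames_written += 1
        self.latest_slot = slot     # models raising the "frame ready" interrupt

    def cpu_read_latest(self):
        """On-demand side: return the newest frame, or None if none exists yet."""
        if self.latest_slot < 0:
            return None
        return self.slots[self.latest_slot]


if __name__ == "__main__":
    ring = FrameRing(capacity=3)
    for n in range(5):              # FPGA produces 5 frames while the μP is idle
        ring.fpga_write(f"frame-{n}")
    print(ring.cpu_read_latest())   # prints "frame-4"
```

The overwrite-on-full policy is what keeps the producer non-blocking: if the μP falls behind, it loses old frames rather than stalling the ISP, which matches the hard real-time requirement.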