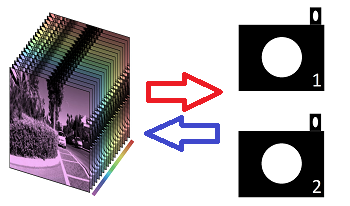

Spectral Reconstruction (SR) algorithms seek to map RGB images to their hyperspectral image counterparts. Statistical methods such as regression, sparse coding, and deep neural networks are used to determine the SR mapping. All these algorithms are optimized ‘blindly’: the provenance of the RGBs is not considered. In this paper, we benchmark the performance of SR methods (in order of increasing complexity: regression, sparse coding, and deep neural network) when different RGB camera spectral sensitivity functions are used. In effect, we ask: “Are some cameras better able to recover spectra from RGBs than others?” In our experiments, RGB images are generated by numerical integration for a fixed set of hyperspectral images using 9 different camera response functions (each from a different camera manufacturer) plus the CIE 1964 color matching functions. Then, we train SR methods on the respective RGB image sets. Our experiments show three important results. First, different cameras <strong>do</strong> support slightly better or worse spectral reconstruction. Second, changing the spectral sensitivities alone does not change the ranking of the algorithms. Finally, switching the camera used for SR can sometimes give a greater performance boost than switching to a more complex SR method.
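The numerical integration used to generate the RGB images can be sketched as a discrete sum over wavelength. This is a minimal illustration, not the paper's pipeline: the 31-band sampling (400 to 700 nm in 10 nm steps), the Gaussian sensitivity curves, and the names `render_rgb`, `camera_a`, and `camera_b` are all assumptions made for the example.

```python
import numpy as np

def render_rgb(hyperspectral, sensitivities, step_nm=10.0):
    """Integrate each pixel's spectrum against three sensitivity curves.

    hyperspectral: (H, W, B) cube sampled at B wavelengths.
    sensitivities: (B, 3) camera response sampled at the same wavelengths.
    Returns an (H, W, 3) image via a Riemann-sum approximation.
    """
    return np.tensordot(hyperspectral, sensitivities, axes=([2], [0])) * step_nm

# Hypothetical sampling grid: 31 bands from 400 nm to 700 nm.
wavelengths = np.arange(400, 701, 10, dtype=float)

def gaussian(center, width=30.0):
    # Toy stand-in for a measured sensitivity curve (assumption).
    return np.exp(-0.5 * ((wavelengths - center) / width) ** 2)

# Two made-up cameras with slightly different R, G, B peak positions.
camera_a = np.stack([gaussian(600), gaussian(540), gaussian(450)], axis=1)
camera_b = np.stack([gaussian(620), gaussian(530), gaussian(470)], axis=1)

# The same hyperspectral cube rendered through each camera.
rng = np.random.default_rng(0)
cube = rng.random((4, 5, wavelengths.size))
rgb_a = render_rgb(cube, camera_a)
rgb_b = render_rgb(cube, camera_b)
```

Rendering one fixed cube through each set of sensitivities yields the per-camera RGB sets on which an SR method would then be trained and compared.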

Drawing materials, such as soft graphite or charcoal applied on paper, may be prone to smearing during transport and handling. To mitigate this effect, it was common practice among 19th-century artists to apply a fixative to drawings made in friable media. In many cases, the fixative has been imperative in preserving the drawings, although it may also have altered the appearance of the paper and/or the media. It is rarely possible to identify the type of fixative used without analytical techniques that require sample taking. As the fixative layer is very thin, any sample will often also contain small fragments of the paper. In this article, we propose a non-invasive approach for recognizing fixatives based on their spectral signatures in the visible and near-infrared range, collected with a hyperspectral imaging device. Our approach is tested on mock-up samples designed to contain fixatives of animal and vegetal origin, and on two drawings by the Norwegian artist Thomas Fearnley.

In Spectral Reconstruction (SR), we recover hyperspectral images from their RGB counterparts. Most recent approaches are based on Deep Neural Networks (DNN), where millions of parameters are trained mainly to extract and utilize the contextual features in large image patches as part of the SR process. On the other hand, the leading Sparse Coding method ‘A+’, which is among the strongest point-based baselines against the DNNs, seeks to divide the RGB space into neighborhoods, where locally a simple linear regression (comprising roughly 10² parameters) suffices for SR. In this paper, we explore how the performance of Sparse Coding can be further advanced. We point out that in the original A+, the sparse dictionary used for neighborhood separation is optimized for the spectral data but used in the projected RGB space. In turn, we demonstrate that if the local linear mapping is trained for each spectral neighborhood instead of each RGB neighborhood (and, theoretically, if we could recover each spectrum based on where it lies in the spectral space), the Sparse Coding algorithm can actually perform much better than the leading DNN method. In effect, our result defines one potential (and very appealing) upper-bound performance of point-based SR.
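The idea of neighborhood-wise linear regression can be sketched as follows. This is a simplified illustration under stated assumptions, not the A+ algorithm itself: neighborhoods are assigned by Euclidean nearest anchor rather than through a learned sparse dictionary, the data are synthetic, and the names `nearest_anchor`, `fit_local_maps`, and `reconstruct` are hypothetical. With 3 RGB channels and an assumed 31 spectral bands, each local map has 3 × 31 = 93 parameters, which is on the order of 10².

```python
import numpy as np

def nearest_anchor(features, anchors):
    """Return, for each row of features, the index of the closest anchor."""
    d = ((features[:, None, :] - anchors[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)

def fit_local_maps(labels, rgb, spectra, n_anchors, ridge=1e-6):
    """Fit one ridge-regularized linear map (3 x B) per neighborhood."""
    maps = np.zeros((n_anchors, rgb.shape[1], spectra.shape[1]))
    for k in range(n_anchors):
        X, Y = rgb[labels == k], spectra[labels == k]
        if len(X) == 0:
            continue  # empty neighborhood: leave its map at zero
        gram = X.T @ X + ridge * np.eye(X.shape[1])
        maps[k] = np.linalg.solve(gram, X.T @ Y)
    return maps

def reconstruct(labels, rgb, maps):
    """Apply to each RGB the linear map of its assigned neighborhood."""
    return np.einsum("nc,ncb->nb", rgb, maps[labels])

# Synthetic toy data (assumption): 4 RGB anchors, 31 spectral bands.
rng = np.random.default_rng(0)
rgb = rng.random((400, 3))
rgb_anchors = np.array([[0.25, 0.25, 0.25], [0.75, 0.75, 0.75],
                        [0.25, 0.75, 0.50], [0.75, 0.25, 0.50]])
labels_rgb = nearest_anchor(rgb, rgb_anchors)
true_maps = rng.random((4, 3, 31))
spectra = np.einsum("nc,ncb->nb", rgb, true_maps[labels_rgb])

maps = fit_local_maps(labels_rgb, rgb, spectra, n_anchors=4)
recovered = reconstruct(labels_rgb, rgb, maps)
```

Here the labels come from the RGB values, which is the practical setting. An oracle variant in the spirit of the abstract would instead compute the labels from the ground-truth spectra against spectral anchors, for example `nearest_anchor(spectra, spectral_anchors)` with a hypothetical `spectral_anchors` array, and pass those labels to the same two functions.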

Pigment classification of paintings is considered an important task in the field of cultural heritage. It helps to analyze the object and to establish its historical value. This information is also essential for curators and conservators. Hyperspectral imaging technology has been used for pigment characterization for many years and has potential for the scientific analysis of pigments. Despite its advantages, there are several challenges linked with hyperspectral image acquisition. The quality of the acquired hyperspectral data can be influenced by different parameters such as focus, signal-to-noise ratio, illumination geometry, etc. Among these, we investigated the effect of four key parameters, namely focus distance, signal-to-noise ratio, integration time, and illumination geometry, on pigment classification accuracy for a mockup using hyperspectral imaging in the visible and near-infrared regions. The results show that the classification accuracy is influenced by variation in these parameters. Focus distance and illumination angle have a significant effect on the classification accuracy compared to signal-to-noise ratio and integration time.