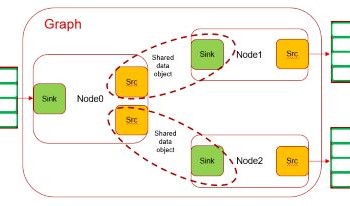

OpenVX is an open standard for accelerating computer vision applications on a heterogeneous platform with multiple processing elements. OpenVX is accepted by Automotive industry as a go-to framework for developing performance-critical, power-optimized and safety compliant computer vision processing pipelines on real-time heterogeneous embedded SoCs. Optimizing OpenVX development flow becomes a necessity with ever growing demand for variety of vision applications required in both Automotive and Industrial market. Although OpenVX works great when all the elements in the pipeline is implemented with OpenVX, it lacks utilities to effectively interact with other frameworks. We propose a software design to make OpenVX development faster by adding a thin layer on top of OpenVX which simplifies construction of an OpenVX pipeline and exposes simple interface to enable seamless interaction with other frameworks like v4l2, OpenMax, DRM etc....

A typical edge compute SoC capable of handling deep learning workloads at low power is usually heterogeneous by design. It typically comprises multiple initiators such as real-time IPs for capture and display, hardware accelerators for ISP, computer vision, deep learning engines, codecs, DSP or ARM cores for general compute, GPU for 2D/3D visualization. Every participating initiator transacts with common resources such as L3/L4/DDR memory systems to seamlessly exchange data between them. A careful orchestration of this dataflow is important to keep every producer/consumer at full utilization without causing any drop in real-time performance which is critical for automotive applications. The software stack for such complex workflows can be quite intimidating for customers to bring-up and more often act as an entry barrier for many to even evaluate the device for performance. In this paper we propose techniques developed on TI’s latest TDA4V-Mid SoC, targeted for ADAS and autonomous applications, which is designed around ease-of-use but ensuring device entitlement class of performance using open standards such as DL runtimes, OpenVx and GStreamer.