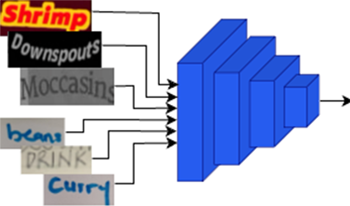

In this paper, we introduce a unified handwriting and scene-text recognition model that recognizes both printed and handwritten text images. Our primary contribution is the incorporation of self-attention, the core mechanism of the transformer architecture. This yields two significant advantages: 1) a substantial improvement in recognition accuracy for both scene text and handwritten text, and 2) a notable decrease in inference time, addressing a prevalent bottleneck in modern recognizers that rely on sequence-based decoding with attention.
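To illustrate the mechanism named above, the following is a minimal sketch of scaled dot-product self-attention in plain Python. It is not the model described in this paper: the function names are hypothetical, and the learned query, key, and value projections are replaced by the identity (Q = K = V = x) to keep the example short. It shows that each output position is computed from the whole input sequence independently of the other outputs, which is what allows all positions to be processed in parallel instead of one step at a time.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention over a sequence of feature vectors.

    Assumption for illustration: identity projections, so Q = K = V = x.
    A real transformer layer would apply learned weight matrices first.
    """
    d = len(x[0])
    scale = math.sqrt(d)
    out = []
    for q in x:
        # Similarity of this position to every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in x]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out


# Three toy feature vectors standing in for image-column features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(features)
```

Each row of `attended` depends only on `features`, not on previously produced rows, so the loop over positions could run in any order or concurrently.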

Mobile devices typically support input from virtual keyboards or pen-based technologies, making handwriting a potentially viable text input method for programming on touchscreen devices. The major problem, however, is that handwriting recognition systems are built to exploit the rules of natural languages rather than programming languages. In addition, mobile devices are inherently limited by small screens and the inconvenience of virtual keyboards. In this work, we create a novel handwriting-to-code transformation system on a mobile platform to recognize and analyze source code written directly on a whiteboard or a piece of paper. First, the system recognizes the handwritten source code and compiles it into an executable program. Second, a friendly graphical user interface (GUI) visualizes how manipulating different sections of code affects the program output. Finally, the system supports automatic error detection and correction to help address common syntax and spelling errors that arise during whiteboard coding. The mobile application provides a flexible and user-friendly solution for real-time handwriting-based programming for learners in environments where keyboard or touchscreen input is not preferred.
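The abstract does not say how the spelling correction is implemented. As one possible illustration, the sketch below snaps misrecognized identifiers to the closest keyword using the standard library's `difflib.get_close_matches`. The keyword list, the function names, and the 0.75 similarity cutoff are all assumptions made for this example, not details from the system described.

```python
import difflib
import re

# Hypothetical keyword list; the target programming language is an assumption.
KEYWORDS = ["def", "return", "while", "for", "if", "else", "print", "import"]


def correct_token(token, vocabulary=KEYWORDS, cutoff=0.75):
    """Return the closest keyword if the token is a near miss, else the token."""
    if token in vocabulary:
        return token
    matches = difflib.get_close_matches(token, vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else token


def correct_line(line):
    """Correct identifier-like tokens in a recognized line of code.

    Whitespace, operators, and punctuation are left untouched so that
    indentation survives the correction pass.
    """
    return re.sub(r"[A-Za-z_]\w*", lambda m: correct_token(m.group(0)), line)


recognized = "whlie x > 0:"
fixed = correct_line(recognized)
```

A purely lexical pass like this would also rewrite a user-defined identifier that happens to resemble a keyword, so a practical system would likely need syntactic context (for example, compiler diagnostics) to decide when a correction is appropriate.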