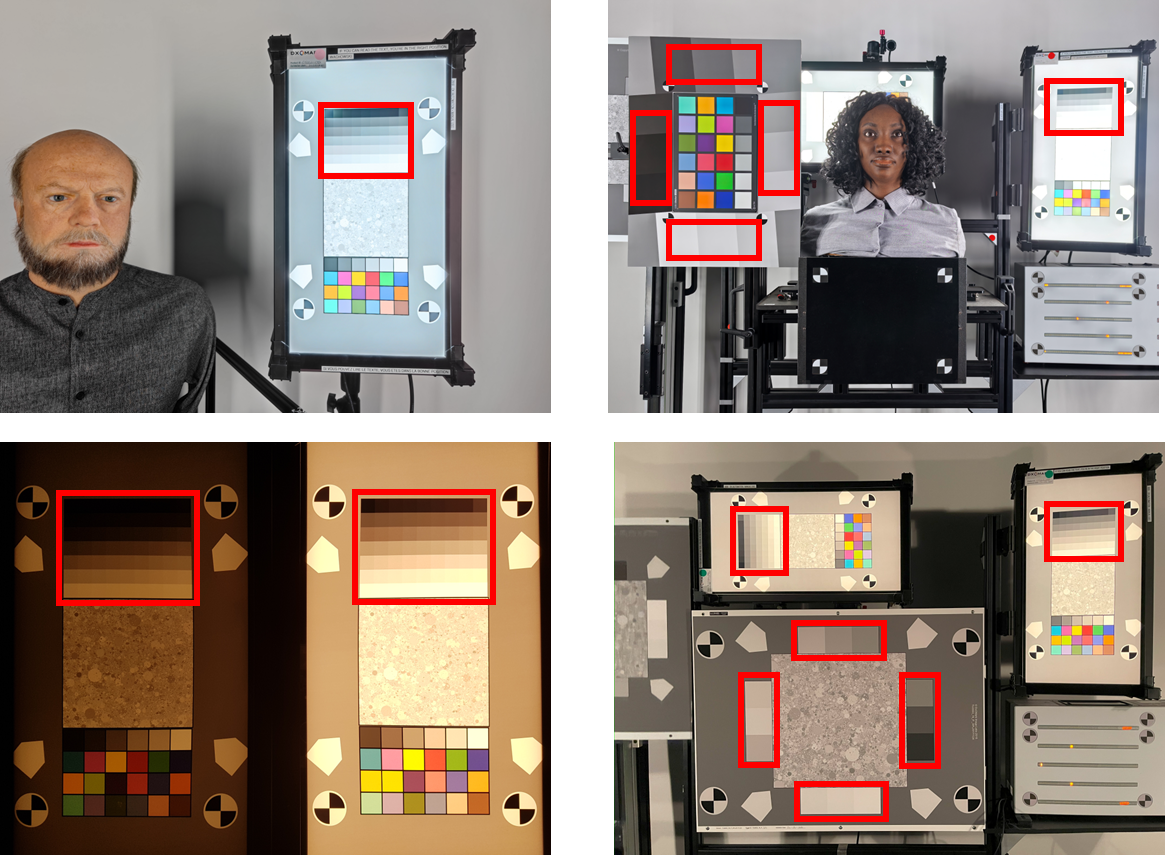

This work provides a novel glass-to-glass metric of local contrast, useful in the context of image quality evaluation of HDR content. This metric, called Local-Contrast Gain (LCG), uses the opto-optical transfer function (OOTF) of the imaging system and its first derivative to compute the incremental ratio between contrast in the scene and contrast on the display. In order to be perceptually meaningful, we chose Weber’s definition of contrast. In order to know the OOTF in analytical form and to make the measurement robust to uncertainty in the ground-truth measurements, we rely on a model that we propose and that expands upon our previously published work. We provide experimental validation of our metric on a variety of target charts, both reflective and transmissive, both in isolation and within complex setups spanning more than six EVs.
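
As a rough illustration of the idea, the sketch below computes an incremental contrast ratio from an OOTF and its first derivative using Weber contrast. It is not the authors' model: the power-law OOTF, the `GAMMA` and `GAIN` values, the function names, and the display-over-scene direction of the ratio are all assumptions made for the example.

```python
def weber_contrast(target, background):
    """Weber contrast: (L_target - L_background) / L_background."""
    return (target - background) / background


def local_contrast_gain(ootf, ootf_prime, scene_luminance):
    """Incremental display-to-scene Weber contrast ratio at one luminance.

    For a small scene increment dL, display contrast is approximately
    ootf'(L) * dL / ootf(L) and scene contrast is dL / L, so their
    ratio reduces to L * ootf'(L) / ootf(L).
    """
    return scene_luminance * ootf_prime(scene_luminance) / ootf(scene_luminance)


# Hypothetical analytical OOTF: a pure power law (illustrative values only).
GAMMA = 1.2
GAIN = 2.0


def ootf(luminance):
    return GAIN * luminance ** GAMMA


def ootf_prime(luminance):
    return GAIN * GAMMA * luminance ** (GAMMA - 1.0)


# Analytical value at a scene luminance of 50 (arbitrary units).
lcg = local_contrast_gain(ootf, ootf_prime, 50.0)

# Cross-check with a small finite patch: 1 % Weber contrast in the scene.
background, target = 50.0, 50.5
scene_contrast = weber_contrast(target, background)
display_contrast = weber_contrast(ootf(target), ootf(background))
finite_ratio = display_contrast / scene_contrast

print(f"analytical gain: {lcg:.4f}, finite-difference ratio: {finite_ratio:.4f}")
```

For a pure power law the ratio equals the exponent at every luminance, which makes this toy case easy to check; a measured or modeled OOTF would in general give a gain that varies with scene luminance.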

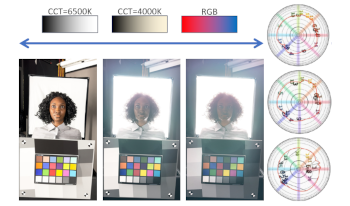

This article provides elements to answer the question: how can the general stylistic color rendering choices made by imaging devices capable of recording HDR formats be judged objectively? The goal of our work is to build a framework to analyze color rendering behaviors in targeted regions of any scene, supporting both HDR and SDR content. To this end, we discuss modeling of camera behavior and visualization methods based on the IC<sub>T</sub>C<sub>P</sub>/ITP color spaces, along with examples of lab and real scenes showcasing common issues and ambiguities in HDR rendering.

The use of biosensors in audiovisual media is motivated by highlighting the problem of signal loss caused by the wide variability of playback devices. We describe a metadata system that allows creatives to steer signal modifications as a function of audience emotion and cognition, as determined by biosensor analysis.

With the release of the Apple iPhone 12 Pro in 2020, various features were integrated that make it attractive as a recording device for scene-referred computer graphics pipelines. The captured Apple RAW images have a much higher dynamic range than standard 8-bit images. Since a scene-referred workflow naturally has an extended dynamic range (HDR), the Apple RAW recordings can be integrated well. Another feature is Dolby Vision HDR recording, which is primarily adapted to the display of the source device. However, these recordings can also be used in the CG workflow, since at least the basic HLG transfer function is integrated. The iPhone 12 Pro's two laser scanners can produce complex 3D models and textures for the CG pipeline. A scanner on the back is primarily intended for capturing the surroundings for AR purposes, and another scanner on the front is used for facial recognition. In addition, external software can read out the scanning data for integration in 3D applications. To correctly integrate the iPhone 12 Pro Apple RAW data into a scene-referred workflow, two command-line-based software solutions can be used, among others: dcraw and rawtoaces. Dcraw offers the possibility to export RAW images directly to ACES2065-1. Unfortunately, the modifiers for the four RAW color channels to address the different white points are unavailable. Experimental test series are performed under controlled studio conditions to retrieve these modifier values. Subsequently, these RAW-derived images are imported into the computer graphics pipelines of various CG software applications (SideFX Houdini, The Foundry Nuke, Autodesk Maya) with the help of OpenColorIO (OCIO) and ACES. Finally, it is determined whether they can improve the overall color quality. Dolby Vision content can be captured using the native Camera app on an iPhone 12.
It captures HDR video using Dolby Vision Profile 8.4, which contains a cross-compatible HLG Rec.2020 base layer and Dolby Vision dynamic metadata. Only the HLG base layer is passed on when exporting the Dolby Vision iPhone video without the corresponding metadata. It is investigated whether iPhone 12 videos transferred this way can increase the quality of the computer graphics pipeline. The 3D Scanner App software controls the two integrated laser scanners and provides a large number of export formats, so integrating the OBJ 3D data into industry-standard software like Maya and Houdini is unproblematic. Unfortunately, the models and the corresponding UV maps are more or less only machine-readable, so manually improving the 3D geometry (filling holes, refining the geometry, setting up new topology) is cumbersome and time-consuming. It is investigated whether standard techniques such as the ZRemesher in ZBrush, texture and UV projection in Maya, and VEX snippets in Houdini can prepare these models and textures for manual editing.

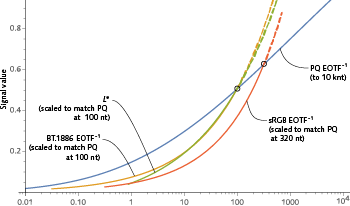

High dynamic range (HDR) technology enables a much wider range of luminances – both relative and absolute – than standard dynamic range (SDR). HDR extends black to lower levels, and white to higher levels, than SDR. HDR enables higher absolute luminance at the display to be used to portray specular highlights and direct light sources, a capability that was not available in SDR. In addition, HDR programming is mastered with a wider color gamut, usually DCI P3, wider than the BT.1886 (“BT.709”) gamut of SDR. The capabilities of HDR strain the usual SDR methods of specifying color range. New methods are needed. A proposal has been made to use CIELAB to quantify HDR gamut. We argue that CIE L* is only appropriate for applications having a contrast range not exceeding 100:1, so CIELAB is not appropriate for HDR. In practice, L* cannot accurately represent lightness that significantly exceeds diffuse white – that is, L* cannot reasonably represent specular reflections and direct light sources. In brief: L* is inappropriate for HDR. We suggest using metrics based upon ST 2084/BT.2100 PQ and its associated color encoding, IC<sub>T</sub>C<sub>P</sub>.
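
To make the comparison concrete, the following minimal sketch evaluates the ST 2084 PQ encoding and CIE L* side by side over a range of absolute luminances. The PQ constants and the L* formula are the standard published ones; the function names and the choice of 100 cd/m² as diffuse white (`DIFFUSE_WHITE`) are assumptions for this example, not values taken from the abstract.

```python
# SMPTE ST 2084 (PQ) constants, as rational numbers from the standard.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(luminance_cd_m2):
    """PQ inverse EOTF: absolute luminance (0..10000 cd/m^2) to signal 0..1."""
    y = max(luminance_cd_m2, 0.0) / 10000.0
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def cie_lightness(y_relative):
    """CIE L* for relative luminance Y/Yn, where 1.0 is diffuse white."""
    delta = 6 / 29
    if y_relative > delta ** 3:
        f = y_relative ** (1 / 3)
    else:
        f = y_relative / (3 * delta ** 2) + 4 / 29
    return 116 * f - 16


# Assumed diffuse white level for the L* normalisation (illustrative only).
DIFFUSE_WHITE = 100.0

for luminance in (0.01, 1.0, 100.0, 1000.0, 10000.0):
    signal = pq_encode(luminance)
    lightness = cie_lightness(luminance / DIFFUSE_WHITE)
    print(f"{luminance:>8} cd/m^2  PQ = {signal:.4f}  L* = {lightness:.1f}")
```

PQ is defined on absolute luminance up to 10000 cd/m² and stays within 0 to 1, whereas L* is normalised to diffuse white and is simply extrapolated by its cube-root formula for anything brighter, which is the regime the abstract argues it was not designed for.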

Hue linearity is critically important to uniform color spaces (UCSs) and color appearance models. Past studies investigating hue linearity only covered relatively small color gamuts, which was generally acceptable for conventional display technologies. The recent development of HDR and WCG display technologies has motivated the development of new color spaces (e.g., IC<sub>T</sub>C<sub>P</sub> and J<sub>z</sub>a<sub>z</sub>b<sub>z</sub>). The hue linearity of these new color spaces, however, was not verified for the claimed HDR and WCG conditions, due to the lack of constant hue loci data. In this study, an experimental setup was carefully developed to produce HDR and WCG conditions, with a stimulus luminance of 3400 cd/m², a diffuse white luminance of 1000 cd/m², and stimulus chromaticities almost covering the Rec. 2020 gamut. Human observers performed a hue matching task, adjusting the hue of the test stimulus, with a hue angle step of 0.2°, at various chroma levels to match that of the reference stimulus at 21 different hues. The derived constant hue loci were used to test the various UCSs and suggested the need to improve the hue linearity of these spaces.

A Triple Conversion Gain (TCG) sensor with all-pixel autofocus based on 2PD with a 1.4 µm pitch has been demonstrated for mobile applications. TCG was implemented by sharing adjacent Floating Diffusion (FD) nodes without adding another capacitor. TCG helps to reduce the noise gap or slow the noise increase as user gain increases. An image with a Dynamic Range (DR) of 82.4 dB can be obtained from a single exposure through intra-scene TCG (i-TCG), so a wider range of illuminance environments can be captured in one image. In addition, a more natural image can be obtained because TCG reduces the SNR dip within a single image.
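
For readers more used to photographic stops, the short sketch below converts a dynamic range in decibels to a linear ratio and to stops. It assumes the common image-sensor convention DR = 20·log10(max signal / noise floor); the abstract does not state which convention was used, and the function names are hypothetical.

```python
import math


def db_to_ratio(dynamic_range_db):
    """Linear signal ratio for a dynamic range in dB (20*log10 convention)."""
    return 10 ** (dynamic_range_db / 20)


def db_to_stops(dynamic_range_db):
    """Dynamic range in stops (EV), i.e. log2 of the linear ratio."""
    return math.log2(db_to_ratio(dynamic_range_db))


dr_db = 82.4  # value quoted in the abstract
print(f"{dr_db} dB -> ratio {db_to_ratio(dr_db):.0f}:1, {db_to_stops(dr_db):.2f} stops")
```

Under that assumption, each stop corresponds to about 6.02 dB.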

An experiment was carried out to investigate the change of color appearance for 13 surface stimuli viewed under a wide range of illuminance levels (15-32000 lux) using an asymmetric matching method. In addition, observers viewed colours at a dark (10 lux) and a bright (200000 lux) illuminance level in the same visual field at the same time, to simulate an HDR viewing condition. The results were used to understand the relationship between the color changes under HDR conditions, to generate a corresponding color dataset, and to verify color appearance models such as CIECAM16.

Two UCSs based on colour appearance models, CAM16-UCS and ZCAM-QMh, were tested using HDR, WCG and COMBVD datasets. As a comparison, two widely used UCSs, CIELAB and IC<sub>T</sub>C<sub>P</sub>, were also tested. The STRESS index and the correlation coefficient between predicted colour differences and visual differences, together with local and global uniformity based on chromatic discrimination ellipses, were used to test the models' performance. The two UCSs give similar performance. A luminance parametric factor, k<sub>L</sub>, and a power factor, γ, were introduced to optimize the colour-difference models. Factors k<sub>L</sub> and γ of 0.75 and 0.5, respectively, gave a marked improvement in predicting the HDR dataset. A factor k<sub>L</sub> of 0.3 gave a significant improvement on the WCG dataset. On the COMBVD dataset, optimization provided very limited improvement.
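
The STRESS index mentioned above can be sketched as follows, using its usual definition from the colour-difference literature (0 means perfect agreement up to a scale factor). The `color_difference` helper shows one plausible way a parametric factor and a power factor could enter a Euclidean colour difference; that particular form, the function names, and the sample data are assumptions for illustration and are not taken from the abstract.

```python
import math


def stress(predicted, visual):
    """STRESS index in percent between predicted and visual differences."""
    sum_ee = sum(e * e for e in predicted)
    sum_ev = sum(e * v for e, v in zip(predicted, visual))
    scale = sum_ee / sum_ev  # scaling factor F that aligns the two scales
    numerator = sum((e - scale * v) ** 2 for e, v in zip(predicted, visual))
    denominator = sum((scale * v) ** 2 for v in visual)
    return 100 * math.sqrt(numerator / denominator)


def color_difference(d_lightness, d_a, d_b, k_l=1.0, gamma=1.0):
    """Euclidean difference with lightness scaled by k_l, raised to gamma.

    Assumed form for illustration: (sqrt((dL/k_l)^2 + da^2 + db^2)) ** gamma.
    """
    distance = math.sqrt((d_lightness / k_l) ** 2 + d_a ** 2 + d_b ** 2)
    return distance ** gamma


# Made-up sample pairs: (dL, da, db) and a visual difference for each pair.
pairs = [(1.0, 0.5, 0.2), (2.0, 1.0, 0.0), (0.5, 2.0, 1.5), (3.0, 0.1, 0.4)]
visual = [1.1, 1.9, 2.4, 2.6]

baseline = [color_difference(*p) for p in pairs]
adjusted = [color_difference(*p, k_l=0.75, gamma=0.5) for p in pairs]
print(f"STRESS baseline: {stress(baseline, visual):.1f}")
print(f"STRESS with k_l=0.75, gamma=0.5: {stress(adjusted, visual):.1f}")
```

Because STRESS is invariant to a common scale factor on either set of differences, it can compare spaces whose units differ; the values printed here come from invented data and say nothing about the datasets in the study.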

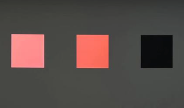

Exposure problems, due to standard camera sensor limitations, often lead to image quality degradations such as loss of details and change in color appearance. These quality degradations further hinder the performance of imaging and computer vision applications. Therefore, the reconstruction and enhancement of under- and over-exposed images is essential for various applications. Accordingly, an increasing number of conventional and deep learning reconstruction approaches have been introduced in recent years. Most conventional methods follow the color imaging pipeline, which strongly emphasizes the accuracy of the reconstructed color and content. Deep learning (DL) approaches, conversely, have shown a stronger capability for recovering lost details. However, the design of most DL architectures and objective functions does not take color fidelity into consideration; hence, an analysis of existing DL methods with respect to color and content fidelity is pertinent. Accordingly, this work presents a performance evaluation of recent DL-based overexposure reconstruction solutions. For the evaluation, various datasets from related research domains were merged, and two generative adversarial network (GAN) based models were additionally adopted for the tone mapping application scenario. Overall results show various limitations, mainly for severely over-exposed content, and a promising potential for DL approaches, particularly GANs, to reconstruct details and appearance.