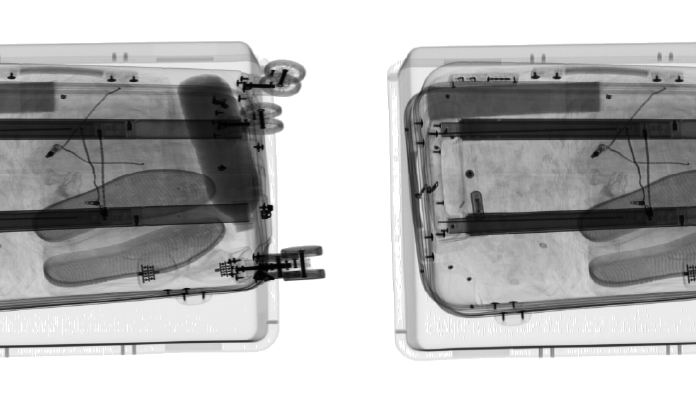

Dual-energy X-ray detection technology plays a crucial role in security inspection, helping to ensure public safety and prevent crime. However, the X-ray images generated in such security checks often suffer from substantial noise introduced during capture. This noise significantly degrades the quality of the displayed image and impairs the performance of the automatic threat detection pipeline. While deep learning-based image denoising methods have shown remarkable progress, most existing approaches rely on large training datasets and clean reference images, which are not readily available in security inspection scenarios. This limitation hampers the widespread application of these methods in the field. In this paper, we address the denoising of X-ray images whose noise follows a Poisson-Gaussian distribution. Importantly, our method does not require clean reference images for training. Our denoising approach is built upon the Blindspot neural network, which effectively addresses the challenges associated with noise removal. To evaluate the proposed approach, we conducted experiments on a real X-ray image dataset. The results indicate that our method achieves favorable BRISQUE scores across different baggage scenes.
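
To make the Poisson-Gaussian noise model concrete, the following is a minimal simulation sketch rather than the authors' pipeline. It assumes the common parameterization in which a clean intensity `x` is observed as `gain * Poisson(x / gain) + Normal(0, sigma^2)`; the function names and the `gain` and `sigma` values are illustrative assumptions, not taken from the paper.

```python
import math
import random


def sample_poisson(lam, rng):
    """Draw one Poisson(lam) sample with Knuth's algorithm (suits small rates)."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def add_poisson_gaussian_noise(clean, gain=0.05, sigma=0.02, seed=0):
    """Corrupt a 2D list of non-negative intensities with Poisson-Gaussian noise.

    Assumed model (hypothetical parameters): y = gain * Poisson(x / gain) + N(0, sigma^2),
    so that E[y] = x and Var[y] = gain * x + sigma^2.
    """
    rng = random.Random(seed)
    return [
        [gain * sample_poisson(x / gain, rng) + rng.gauss(0.0, sigma) for x in row]
        for row in clean
    ]
```

Under this assumed model the noise variance grows linearly with the signal, which is the property that separates it from purely additive Gaussian noise.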

Coordinate-based neural networks have made significant strides in image processing, especially in tasks such as 3D reconstruction, pose estimation, and traditional image/video processing. However, these Multi-Layer Perceptron (MLP) models often face high computational and memory costs. To address this, this study introduces an approach based on the Tensor-Product B-Spline (TPB), which lessens computational demands without sacrificing accuracy. The central objective was to harness TPB for image denoising and super-resolution while sidestepping the computational burden of neural fields. This was achieved by replacing iterative processes with deterministic TPB solutions, improving performance and reducing load. The developed framework handles both super-resolution and denoising, using layered implicit TPB functions to optimize image reconstruction. Evaluation on the Set14 and Kodak datasets showed the TPB-based approach to be comparable to established methods in both quantitative metrics and visual evaluations. The methodology offers a new perspective on image processing and a promising direction for future work.
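
As an illustration of what evaluating a tensor-product B-spline involves, here is a small sketch that assumes uniform cubic B-splines on an integer knot grid. The abstract does not state the spline degree, the knot layout, or how coefficients are fitted, so `cubic_bspline_weights`, `evaluate_tpb`, and the coefficient grid `coeffs` are hypothetical names chosen for this example only.

```python
import math


def cubic_bspline_weights(t):
    """Uniform cubic B-spline basis values for a local offset t in [0, 1]."""
    return (
        (1 - t) ** 3 / 6,
        (3 * t**3 - 6 * t**2 + 4) / 6,
        (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6,
        t**3 / 6,
    )


def evaluate_tpb(coeffs, x, y):
    """Evaluate the surface sum_ab B_a(x) * B_b(y) * coeffs[i + a][j + b].

    coeffs is a 2D list of control coefficients (at least 4 x 4).
    Valid inputs: 0 <= x <= rows - 3 and 0 <= y <= cols - 3.
    """
    rows, cols = len(coeffs), len(coeffs[0])
    # Pick the 4 x 4 coefficient window that influences the point (x, y).
    i = min(int(math.floor(x)), rows - 4)
    j = min(int(math.floor(y)), cols - 4)
    wx = cubic_bspline_weights(x - i)
    wy = cubic_bspline_weights(y - j)
    return sum(
        wx[a] * wy[b] * coeffs[i + a][j + b]
        for a in range(4)
        for b in range(4)
    )
```

Each evaluation touches only a fixed 4 x 4 window of coefficients and involves no iteration, which hints at why a spline representation can be cheaper to query than an MLP. Because the coordinates are continuous, the same function can be sampled on a finer grid for super-resolution.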

Recently, many deep learning applications have been deployed on mobile platforms. To run on such platforms, the networks should be quantized. Quantization of computer vision networks has been studied well, but there have been few studies on the quantization of image restoration networks. In a previous study, we examined the effect of quantizing the activations and weights of a deep learning network on image quality, following earlier work on weight quantization. In this paper, we apply adaptive bit-depth control to the input patch, maintaining image quality similar to that of the floating-point network while achieving a greater reduction in quantization bits than previous work. The bit depth is adapted to the maximum pixel value of the input data block. This preserves the linearity of the values within the block, so the deep neural network does not need to be retrained for the change in data distribution. With the proposed method, we achieved a 5 percent reduction in hardware area and power consumption for our custom deep network hardware while maintaining image quality in subjective and objective measurements. This is an important achievement for mobile platform hardware.
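
One plausible reading of block-adaptive bit-depth control is sketched below. It is not the authors' hardware design. It assumes unsigned integer pixels and a power-of-two scale chosen from the block maximum; `reduce_block_bit_depth`, `restore_block`, and `target_bits` are hypothetical names introduced for illustration.

```python
def reduce_block_bit_depth(block, target_bits=8):
    """Shift a block of unsigned integers so its maximum fits in target_bits.

    The same right shift is applied to every pixel, so values within the
    block stay linearly related (up to truncation of the dropped low bits).
    Returns the reduced block and the shift needed to undo the scaling.
    """
    peak = max(max(row) for row in block)
    shift = max(0, peak.bit_length() - target_bits)
    reduced = [[value >> shift for value in row] for row in block]
    return reduced, shift


def restore_block(block, shift):
    """Undo the power-of-two scaling applied by reduce_block_bit_depth."""
    return [[value << shift for value in row] for row in block]
```

In this sketch a bright 12-bit block is shifted down by four bits, while a dark block whose maximum already fits in eight bits passes through unchanged, so dark regions keep their full precision.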

Imaging through scattering media finds applications in diverse fields from biomedicine to autonomous driving. However, interpreting the resulting images is difficult due to blur caused by the scattering of photons within the medium. Transient information, captured with fast temporal sensors, can be used to significantly improve the quality of images acquired in scattering conditions. Photon scattering within a highly scattering medium is well modeled by the diffusion approximation of the Radiative Transport Equation (RTE). Its solution is easily derived and can be interpreted as a Spatio-Temporal Point Spread Function (ST-PSF). In this paper, we first discuss the properties of the ST-PSF and subsequently use this knowledge to simulate transient imaging through highly scattering media. We then propose a framework to invert the forward model, which assumes Poisson noise, to recover a noise-free, unblurred image by solving an optimization problem.

We consider hyperspectral phase/amplitude imaging from hyperspectral complex-valued noisy observations. Block-matching and grouping of similar patches are the main instruments of the proposed algorithms. The search neighborhood for similar patches spans both the spectral and 2D spatial dimensions. SVD analysis of the 3D grouped patches is used for the design of adaptive nonlocal bases. Simulation experiments demonstrate the high efficiency of the developed, state-of-the-art algorithms.

The non-stationary nature of image characteristics calls for adaptive processing based on the local image content. We propose a simple and flexible method to learn local tuning of parameters in adaptive image processing: we extract simple local features from an image and learn the relation between these features and the optimal filtering parameters. Learning is performed by optimizing a user-defined cost function (any image quality metric) on a training set. We apply our method to three classical problems (denoising, demosaicing, and deblurring) and show the effectiveness of the learned parameter modulation strategies. We also show that these strategies are consistent with theoretical results from the literature.