Video streaming accounts for more than 80% of the carbon emissions generated by the worldwide consumption of digital technologies, which in turn account for 5% of worldwide carbon emissions. Hence, green video encoding has emerged as a research field devoted to reducing the size of video streams and the complexity of the decoding/encoding operations while maintaining a preestablished visual quality. With the specific aim of tracking green-encoded video streams, the present paper studies the possibility of identifying the last video encoder applied in distribution scenarios involving multiple re-encodings. To this end, classification solutions built on backbones from the VGG, ResNet and MobileNet families are considered to discriminate among MPEG-4 AVC stream syntax elements, such as luma/chroma coefficients or intra prediction modes. The video content totals 2 hours and is structured in two databases. Three encoders are studied in turn, namely a proprietary green-encoder solution and the two default encoders available on a large video sharing platform and on a popular social media platform, respectively. The quantitative results show classification accuracy ranging from 75% to 100%, depending on the specific architecture, subset of classified elements, and dataset.

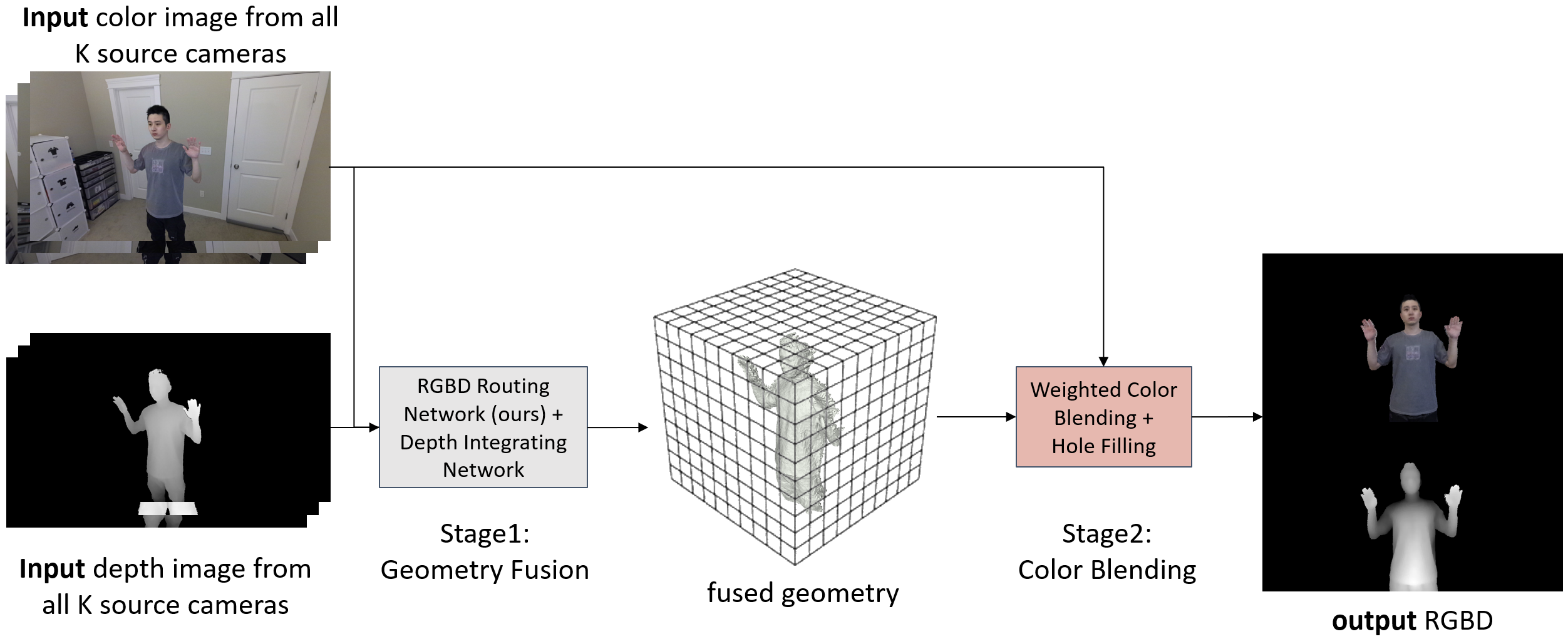

With the widespread use of video conferencing technology for remote communication in the workforce, there is an increasing demand for face-to-face communication between the two parties. To address the difficulty of acquiring frontal face images, multiple RGB-D cameras have been used to capture and render the frontal faces of target objects. However, the noise of depth cameras can lead to geometry and color errors in the reconstructed 3D surfaces. In this paper, we propose RGBD Routed Blending, a novel two-stage pipeline for video conferencing that fuses multiple noisy RGB-D images in 3D space and renders virtual color and depth output images from a new camera viewpoint. The first stage is the geometry fusion stage, consisting of an RGBD Routing Network followed by a Depth Integrating Network, which fuses the RGB-D input images into a 3D volumetric geometry. As an intermediate product, this fused geometry is sent to the second stage, the color blending stage, along with the input color images to render a new color image from the target viewpoint. We quantitatively evaluate our method on two datasets, a synthetic dataset (DeformingThings4D) and a newly collected real dataset, and show that our proposed method outperforms the state-of-the-art baseline methods in both geometry accuracy and color quality.

Recently, many deep learning applications have been deployed on mobile platforms. To deploy them on a mobile platform, the networks should be quantized. The quantization of computer vision networks has been studied well, but there have been few studies on the quantization of image restoration networks. In a previous study, we examined the effect of quantizing the activations and weights of a deep learning network on image quality, following earlier work on weight quantization for deep learning networks. In this paper, we apply adaptive bit-depth control to the input patch while maintaining image quality similar to that of the floating-point network, achieving a greater reduction in quantization bits than previous work. The bit depth is controlled adaptively according to the maximum pixel value of the input data block. This preserves the linearity of the values in the block, so the deep neural network does not need to be retrained to account for a change in data distribution. With the proposed method, we achieve a 5 percent reduction in hardware area and power consumption for our custom deep network hardware while maintaining image quality in subjective and objective measurements. This is an important achievement for mobile platform hardware.
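
The abstract does not spell out the bit-depth control rule, so the following is only a minimal sketch of one plausible reading: each block is scaled by a power of two chosen from its maximum pixel value, which keeps the values in the block linearly related. The function names, the 8-bit target width, and the sample pixel values are assumptions made for illustration, not details from the paper.

```python
def adaptive_bit_depth(block, target_bits=8):
    """Reduce a block of non-negative integer pixels to target_bits.

    Assumption: the scale is a power of two derived from the block maximum,
    so every pixel in the block is divided by the same factor (linear scaling).
    """
    peak = max(block)
    # Number of bits needed for the largest pixel, minus the bits we may keep.
    shift = max(0, peak.bit_length() - target_bits)
    reduced = [p >> shift for p in block]
    return reduced, shift


def restore(block, shift):
    """Undo the scaling (up to the precision dropped by the shift)."""
    return [p << shift for p in block]


# A dark block already fits in 8 bits, so it is left untouched.
dark, dark_shift = adaptive_bit_depth([12, 100, 255])
# A bright 10-bit block is shifted right by 2 bits.
bright, bright_shift = adaptive_bit_depth([1023, 512, 4])
print(dark, dark_shift)
print(bright, bright_shift, restore(bright, bright_shift))
```

In this sketch, dark blocks keep their full precision while bright blocks lose only their least significant bits, which is one way a block maximum could drive the bit depth.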

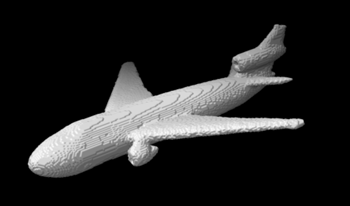

In this paper, we introduce silhouette tomography, a novel formulation of X-ray computed tomography that relies only on the geometry of the imaging system. We formulate silhouette tomography mathematically and provide a simple method for obtaining a particular solution to the problem, assuming that any solution exists. We then propose a supervised reconstruction approach that uses a deep neural network to solve the silhouette tomography problem. We present experimental results on a synthetic dataset that demonstrate the effectiveness of the proposed method.

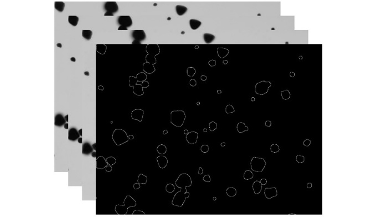

Starch plays a pivotal role in human society, serving as a vital component of our food sources and finding widespread applications in various industries. Microscopic imaging offers a straightforward, efficient, and precise approach to examining the distribution, morphology, and dimensions of starch granules. Quantitative analysis through the segmentation of starch granules from the background aids researchers in exploring their physicochemical properties. This article presents a novel deep learning approach that uses a modified U-Net model to segment starch granule microscope images with high accuracy. The mean values of the evaluation metrics JS, Dice, Accuracy, Precision, Sensitivity, and Specificity reach 89.67%, 94.55%, 99.40%, 94.89%, 94.23%, and 99.70%, respectively.
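
For readers unfamiliar with these metrics, the sketch below shows how they are commonly computed from a predicted and a ground-truth binary mask. It assumes that JS denotes the Jaccard similarity and that masks are flat lists of 0/1 labels; the function name and the toy masks are illustrative and not taken from the article.

```python
def segmentation_metrics(pred, truth):
    """Compute common binary segmentation metrics from two 0/1 masks."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)

    def ratio(num, den):
        # Return 0.0 for an empty denominator instead of raising.
        return num / den if den else 0.0

    return {
        "jaccard": ratio(tp, tp + fp + fn),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


# Toy masks: 1 marks a granule pixel, 0 marks background.
pred = [1, 1, 0, 0, 1, 0]
truth = [1, 0, 0, 0, 1, 1]
for name, value in segmentation_metrics(pred, truth).items():
    print(f"{name}: {value:.4f}")
```

Accuracy and Specificity count true background pixels, so they tend to be high whenever the background dominates the image, whereas the Jaccard similarity and Dice ignore true negatives.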

This manuscript presents a new CNN-based visual localization method that estimates the camera location of an input RGB image with respect to a pre-collected database of RGB-D images. To determine an accurate camera pose, we employ a coarse-to-fine localization scheme that first finds coarse location candidates via image retrieval, then refines them using the local 3D structure represented by each retrieved RGB-D image. For coarse prediction, we use a CNN feature extractor and a relative pose estimator that do not necessarily require scene-specific training. Furthermore, we propose a new pose refinement-verification module that simultaneously evaluates and refines camera poses using a differentiable renderer. Experimental results on public datasets show that our proposed pipeline achieves accurate localization on both trained and unknown scenes.
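
The coarse retrieval step can be pictured as a nearest-neighbor search over image descriptors. The sketch below is a generic illustration under assumptions not stated in the abstract: descriptors are plain float vectors, similarity is cosine similarity, and the names `retrieve_candidates` and `database` are hypothetical. The refinement stage with the differentiable renderer is not modeled.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_candidates(query, database, k=2):
    """Return the names of the k database images most similar to the query."""
    ranked = sorted(
        database.items(),
        key=lambda item: cosine(query, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:k]]


# Toy descriptors standing in for CNN features of database images.
database = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.9, 0.1]}
print(retrieve_candidates([1.0, 0.0], database, k=2))
```

Each retrieved candidate would then be handed to a pose refinement step that uses the depth channel of the corresponding RGB-D image.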

Deep learning (DL)-based algorithms are used in many integral modules of ADAS and automated driving systems. Camera-based perception, driver monitoring, driving policy, and radar and lidar perception are a few examples of modules built using DL algorithms in such systems. These real-time DL applications require substantial compute, up to 250 TOPS, to be realized on an edge device. To meet these needs efficiently in terms of cost and power, silicon vendors provide complex SoCs with multiple DL engines. These SoCs also come with the system resources, such as L2/L3 on-chip memory, a high-speed DDR interface, and a PMIC, needed to feed data and power so that the compute of these DL engines is used efficiently. These system resources would scale linearly with the number of DL engines in the system. This paper proposes solutions that optimize these system resources to provide a cost- and power-efficient solution: (1) cooperative and adaptive asynchronous scheduling of DL engines to optimize peak resource usage along multiple vectors such as memory size, throughput, and power/current; (2) orchestration of cooperative and adaptive multi-core DL engines to achieve synchronous execution and maximum utilization of all resources. The proposed solution achieves up to 30% power saving or reduces overhead by 75% in a 4-core configuration consisting of 32 TOPS.
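
To see why asynchronous scheduling can lower peak resource usage, consider a toy model in which every engine repeats the same periodic load profile. The sketch below is an illustration only: the profile values, the offsets, and the function name `peak_load` are assumptions, and it does not reproduce the scheduler proposed in the paper.

```python
def peak_load(profile, offsets):
    """Peak of the summed load when each engine runs `profile` cyclically,
    delayed by its own start offset (in time steps)."""
    period = len(profile)
    totals = [
        sum(profile[(t - off) % period] for off in offsets)
        for t in range(period)
    ]
    return max(totals)


# Hypothetical per-engine load (e.g. current draw) over one 4-step period:
# a short burst followed by a low-activity phase.
profile = [3, 1, 1, 1]

aligned = peak_load(profile, [0, 0, 0, 0])    # all four engines burst together
staggered = peak_load(profile, [0, 1, 2, 3])  # bursts spread across the period
print(aligned, staggered)
```

In this toy case the aligned schedule peaks at 12 units while the staggered one peaks at 6, even though the total work is identical, which is the kind of effect that lets shared resources be sized below a linear scaling with the number of engines.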

A typical edge compute SoC capable of handling deep learning workloads at low power is usually heterogeneous by design. It typically comprises multiple initiators such as real-time IPs for capture and display, hardware accelerators for ISP, computer vision, deep learning engines, codecs, DSP or ARM cores for general compute, and a GPU for 2D/3D visualization. Every participating initiator transacts with common resources such as L3/L4/DDR memory systems to seamlessly exchange data with the others. Careful orchestration of this dataflow is important to keep every producer/consumer at full utilization without causing any drop in real-time performance, which is critical for automotive applications. The software stack for such complex workflows can be quite intimidating for customers to bring up and often acts as an entry barrier that keeps many from even evaluating the device for performance. In this paper, we propose techniques developed on TI’s latest TDA4V-Mid SoC, targeted at ADAS and autonomous applications, that are designed around ease of use while still ensuring device-entitlement-class performance using open standards such as DL runtimes, OpenVX and GStreamer.

The COVID-19 epidemic has been a significant healthcare challenge in the United States. COVID-19 is transmitted predominantly by respiratory droplets generated when people breathe, talk, cough, or sneeze. Wearing a mask is the primary, effective, and convenient method of blocking 80% of respiratory infections. Therefore, many face mask detection systems have been developed to supervise hospitals, airports, public transportation, sports venues, and retail locations. However, the current commercial solutions are typically bundled with software or hardware, impeding public accessibility. In this paper, we propose an in-browser serverless edge-computing-based face mask detection solution, called Web-based efficient AI recognition of masks (WearMask), which can be deployed on common devices (e.g., cell phones, tablets, computers) with internet connections using web browsers. The serverless edge-computing design minimizes hardware costs (e.g., specific devices or cloud computing servers). It provides a holistic edge-computing framework that integrates (1) deep learning models (YOLO), (2) a high-performance neural network inference computing framework (NCNN), and (3) a stack-based virtual machine (WebAssembly). For end users, our solution offers the advantages of (1) a serverless edge-computing design with minimal device limitations and privacy risk, (2) installation-free deployment, (3) low computing requirements, and (4) high detection speed. Our application has been launched with public access at facemask-detection.com.

Recently, many deep learning applications have been deployed on mobile platforms. To deploy them on a mobile platform, the networks should be quantized. The quantization of computer vision networks has been studied well, but there have been few studies on the quantization of image restoration networks. In this paper, we study the effect of quantizing the activations of a deep learning network on image quality, following a previous study on weight quantization for deep learning networks. This study also addresses quantization for raw RGBW image demosaicing of 10-bit images with the weight bit depth fixed at 8 bits. Experimental results show that 11-bit activation quantization can sustain image quality at a level similar to that of the floating-point network. Although the activation bit depth can be very small in computer vision applications, image restoration tasks such as demosaicing require many more bits than those applications. An 11-bit width may not fit general-purpose hardware such as an NPU, GPU or CPU, but for custom hardware it is very important for reducing hardware area and power as well as memory size.
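
The effect of the activation bit depth can be illustrated with a plain uniform quantizer. The sketch below assumes non-negative activations clipped to a known range `[0, max_val]`; the function names and the sample values are hypothetical, and the quantization scheme used in the study may differ.

```python
def quantize(values, bits, max_val):
    """Uniformly quantize non-negative values in [0, max_val] to `bits` bits."""
    levels = (1 << bits) - 1
    scale = max_val / levels
    codes = [min(levels, max(0, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate real values."""
    return [c * scale for c in codes]


# Hypothetical activations, compared at 8-bit and 11-bit precision.
activations = [0.0, 0.1234, 0.5, 0.987, 1.0]
for bits in (8, 11):
    codes, scale = quantize(activations, bits, max_val=1.0)
    restored = dequantize(codes, scale)
    worst = max(abs(a - r) for a, r in zip(activations, restored))
    print(f"{bits}-bit: step={scale:.6f}, worst error={worst:.6f}")
```

Going from 8 to 11 bits shrinks the quantization step by roughly a factor of eight, which is consistent with restoration tasks needing more activation bits to avoid visible errors in pixel values.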