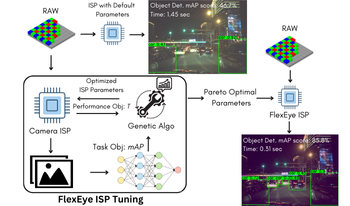

As AI becomes more prevalent, edge devices face challenges due to their limited resources and the high demands of deep learning (DL) applications. In such cases, quality scalability can offer significant benefits by adjusting the computational load to the available resources. Traditional Image Signal Processor (ISP) tuning methods prioritize maximizing vision-task performance, such as classification accuracy, while neglecting critical system constraints such as latency and power dissipation. To address this gap, we introduce FlexEye, an application-specific, quality-scalable ISP tuning framework that uses ISP parameters as a control knob for quality of service (QoS), enabling a trade-off between quality and performance. Experimental results demonstrate up to a 6% improvement in object detection accuracy and a 22.5% reduction in ISP latency compared to the state of the art. We also evaluate instance segmentation, where a 1.2% accuracy improvement is attained with a 73% latency reduction.
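
To make the quality-versus-latency trade-off concrete, the sketch below treats each candidate ISP parameter setting as a point with a measured task accuracy and ISP latency, keeps only the Pareto-optimal settings, and picks the most accurate one that fits the current latency budget. This is an illustration under stated assumptions, not FlexEye's actual tuning procedure: the `IspConfig` fields, the function names, and all numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class IspConfig:
    """One candidate ISP parameter setting (hypothetical fields)."""
    name: str
    accuracy: float    # task accuracy measured offline on a validation set
    latency_ms: float  # measured ISP latency for this setting


def dominates(a: IspConfig, b: IspConfig) -> bool:
    """True if a is at least as good as b on both axes and strictly better on one."""
    return (a.accuracy >= b.accuracy and a.latency_ms <= b.latency_ms
            and (a.accuracy > b.accuracy or a.latency_ms < b.latency_ms))


def pareto_front(configs: List[IspConfig]) -> List[IspConfig]:
    """Keep only non-dominated settings, ordered from fastest to slowest."""
    front = [c for c in configs if not any(dominates(o, c) for o in configs)]
    return sorted(front, key=lambda c: c.latency_ms)


def select_config(configs: List[IspConfig], latency_budget_ms: float) -> Optional[IspConfig]:
    """Most accurate Pareto-optimal setting within the latency budget, or None."""
    feasible = [c for c in pareto_front(configs) if c.latency_ms <= latency_budget_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Illustrative numbers only, not measurements from the paper
candidates = [
    IspConfig("full_pipeline", accuracy=0.62, latency_ms=9.0),
    IspConfig("skip_denoise", accuracy=0.60, latency_ms=5.5),
    IspConfig("fast_demosaic", accuracy=0.55, latency_ms=3.0),
    IspConfig("wasteful", accuracy=0.54, latency_ms=7.0),  # dominated by skip_denoise
]
print(select_config(candidates, latency_budget_ms=6.0).name)  # skip_denoise
```

Precomputing the Pareto front offline means that, at run time, turning the control knob reduces to a lookup against whatever latency budget is currently available.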

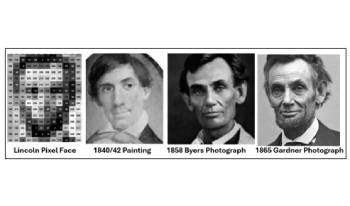

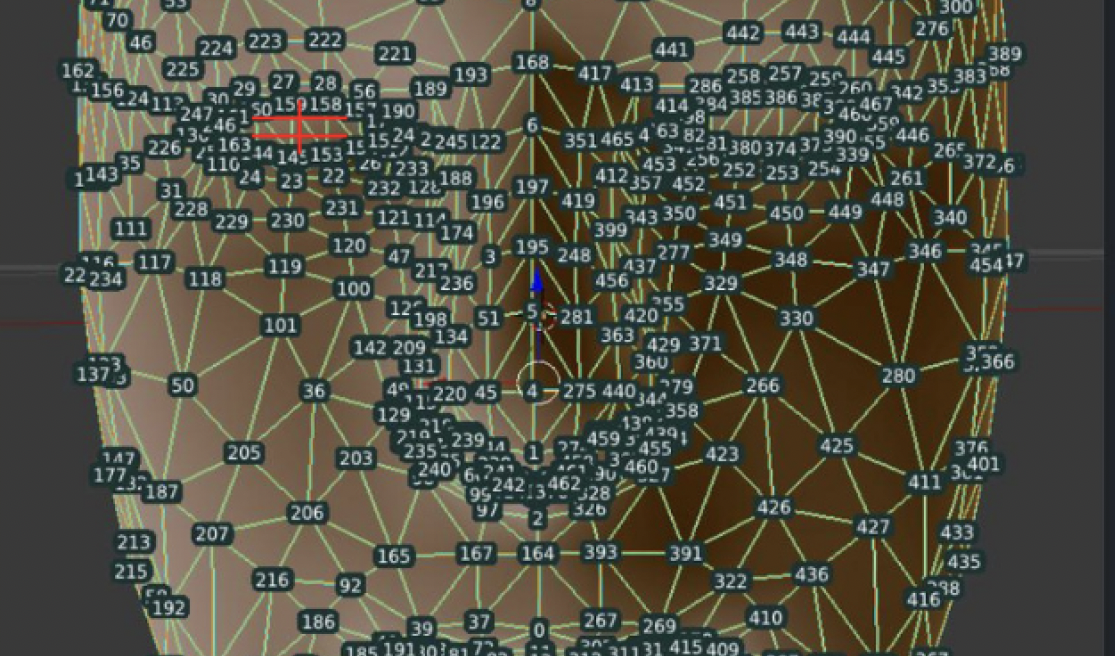

Are we there yet? All the puzzle pieces are here: a 2-inch miniature portrait on ivory dated circa 1840–1842, discovered alongside a letter detailing the owner’s familial ties to Mary Todd Lincoln. The portrait’s distinctive features echo President Lincoln’s characteristic facial asymmetry. However, despite intensive investigation, no historical document has been found that definitively links this miniature to Lincoln. This research aims to bridge art and science to determine whether this painting is the earliest image of Abraham Lincoln, potentially opening avenues for future collaborations in identifying faces from the past. A key contributor to this effort is Dr. David Stork, an Adjunct Professor at Stanford University and a leading expert in computer-based image analysis. Dr. Stork holds 64 U.S. patents and has authored over 220 peer-reviewed publications in fields such as machine learning, pattern recognition, computational optics, and the image analysis of art. His recent book, *Pixels and Paintings: Foundations of Computer-Assisted Connoisseurship*¹, fosters a dialogue between art scholars and the computer vision community.

Clothing is a lens through which a society expresses its culture and history. Its stylized portrayal in painting adds an immensely rich layer of cultural self-introspection, revealing how artists see themselves and their contemporaries. Of particular interest in this study is color: how has the color of costumes in portrait painting changed over time, across art styles, and between genders? We apply computational methods drawn from computer vision, machine learning, economics, and statistics to a large corpus of over 12,000 portrait paintings to analyze trends in clothing color in Western art over the past 600 years. For each painting, we obtained clothing segmentation masks using a fine-tuned SegFormer model, performed gender classification using CLIP (Contrastive Language-Image Pre-Training), extracted dominant colors via clustering analysis, and computed a Color Contrast Index (CI) and a Diversity Index (DI). To our knowledge, this study is the most comprehensive large-scale analysis of clothing colors in paintings. We share our methodology to make state-of-the-art computational tools more widely accessible to scholars studying the history and development of style in fine art painting. Our tools enable analyses of major trends in costume colors as well as specialized, domain-specific searches across databases of tens of thousands of paintings, far more than can be efficiently analyzed without computational methods. They also support comparisons between painters and reveal trends within individual artists’ careers. The tools could be extended to enable more refined analyses, for instance of the social status of the portrait subject, or of other visual criteria.
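
As a rough illustration of the dominant-color step, the following sketch runs plain k-means over the RGB values of pixels inside a clothing mask and reports each cluster's centroid and share of pixels. The abstract does not define DI, so `diversity_index` here uses the Shannon entropy of the color shares as a hypothetical stand-in; the clustering method, color space (CIELAB is a common alternative to RGB), and index definitions actually used in the study may differ.

```python
import math
import random
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def _sq_dist(a: RGB, b: RGB) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def dominant_colors(pixels: Sequence[RGB], k: int, iters: int = 20,
                    seed: int = 0) -> List[Tuple[RGB, float]]:
    """Plain k-means over masked clothing pixels; returns (centroid, pixel share), largest share first."""
    distinct = sorted(set(pixels))
    if len(distinct) < k:
        raise ValueError("need at least k distinct colors")
    centroids = random.Random(seed).sample(distinct, k)  # distinct initial centroids
    for _ in range(iters):
        clusters: List[List[RGB]] = [[] for _ in range(k)]
        for p in pixels:
            nearest = min(range(k), key=lambda i: _sq_dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members; keep it in place if its cluster is empty
        centroids = [
            tuple(sum(px[d] for px in members) / len(members) for d in range(3))
            if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    shares = [len(members) / len(pixels) for members in clusters]
    return sorted(zip(centroids, shares), key=lambda cs: cs[1], reverse=True)


def diversity_index(shares: Sequence[float]) -> float:
    """Hypothetical DI stand-in: Shannon entropy (bits) of dominant-color shares."""
    return -sum(s * math.log2(s) for s in shares if s > 0)


pixels = [(255, 0, 0)] * 6 + [(0, 0, 255)] * 4  # toy pixels from one clothing mask
colors = dominant_colors(pixels, k=2)
print(colors)  # [((255.0, 0.0, 0.0), 0.6), ((0.0, 0.0, 255.0), 0.4)]
print(diversity_index([share for _, share in colors]))
```

Pure Python keeps the sketch dependency-free; for real images a vectorized implementation over the masked pixel array would be far faster.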

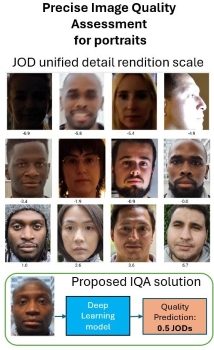

Portraits are one of the most common use cases in photography, especially smartphone photography. However, evaluating the quality of real portraits is costly and difficult to reproduce. We propose a new method to evaluate a wide range of detail-preservation renderings on real portrait images. Our approach is based on 1) annotating a set of portrait images, grouped by semantic content, using pairwise comparison; 2) exploiting the fact that all images are portraits to align the quality scales through cross-content annotations; and 3) training a machine learning model on the resulting global quality scale. Besides providing fine-grained, wide-range detail-preservation quality scores, the proposed method correlates highly with the perceptual evaluation of image quality experts, as numerical experiments show.
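
The abstract does not say how pairwise judgments are turned into a quality scale. One standard choice is the Bradley-Terry model; the sketch below fits it with the classic minorization-maximization (MM) update and returns log-strength scores centered at zero. Treat it as an assumed stand-in (Thurstone Case V is another common option), not the authors' procedure. The maximum-likelihood fit only exists when every image wins and loses at least one comparison and the comparison graph is connected.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Tuple


def bradley_terry(comparisons: List[Tuple[Hashable, Hashable]],
                  iters: int = 200) -> Dict[Hashable, float]:
    """Fit Bradley-Terry strengths from (winner, loser) pairs with the MM update.

    Returns log-strengths with mean zero; higher means preferred
    (here: better perceived detail preservation).
    """
    items = sorted({x for pair in comparisons for x in pair}, key=str)
    wins = Counter(w for w, _ in comparisons)
    pair_counts = Counter(frozenset(pair) for pair in comparisons)
    if any(wins[i] == 0 for i in items):
        raise ValueError("every item must win at least once for the MLE to exist")
    strength = {i: 1.0 for i in items}
    for _ in range(iters):
        updated = {}
        for i in items:
            # MM update: p_i <- W_i / sum_j n_ij / (p_i + p_j)
            denom = sum(
                pair_counts[frozenset((i, j))] / (strength[i] + strength[j])
                for j in items
                if j != i
            )
            updated[i] = wins[i] / denom
        # The scale is only identified up to a constant factor, so fix the geometric mean to 1
        log_mean = sum(math.log(v) for v in updated.values()) / len(updated)
        strength = {i: v / math.exp(log_mean) for i, v in updated.items()}
    return {i: math.log(v) for i, v in strength.items()}


# Toy votes: A is usually preferred to B, and B to C
votes = ([("A", "B")] * 3 + [("B", "A")] + [("B", "C")] * 3 + [("C", "B")]
         + [("A", "C")] * 3 + [("C", "A")])
scores = bradley_terry(votes)
print(scores)  # A ranks above B, which ranks above C
```

Cross-content annotations, as described above, would simply add comparisons between images from different semantic groups, which connects the groups into a single graph and hence a single scale.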

Artificial Intelligence (AI) is contributing significantly to the development of autonomous vehicles. This paper outlines techniques and algorithms for implementing Intelligent Autonomous Vehicles (IAV) that leverage AI for traffic perception, decision-making, and control by combining traffic scenario detection, traffic lane detection, semantic segmentation, pedestrian detection, and traffic sign classification and detection. Modern computer vision and deep neural network-based algorithms enable real-time analysis of diverse vehicle data. In vehicle control systems, AI is used to model vehicle dynamics, improving safety and efficiency and allowing the system to be optimized over time. The paper also discusses challenges and possible future directions, underscoring AI's potential to steer autonomous vehicles toward safer, more reliable, and more intelligent transportation systems. Combining AI with autonomous vehicles holds the promise of a future in which mobility is intelligent, sustainable, and accessible.

Deep learning has enabled rapid advances in image processing. Learning-based approaches have achieved remarkable success over their traditional signal-processing-based counterparts in a variety of applications, such as object detection and semantic segmentation. This has driven the parallel development of hardware architectures that accelerate deep learning inference in real time. Embedded devices tend to have hard constraints on internal memory and must rely on larger but much slower external DDR memory to store the large volumes of data generated while running deep learning algorithms. The associated system software must therefore evolve to make full use of the optimized hardware, balancing compute time against data movement. We propose a generalized framework that, given a set of compute elements and a memory arrangement, devises an efficient scheme for processing multidimensional data that minimizes the inference time of deep learning algorithms for vision applications.
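
To illustrate the kind of decision such a framework makes, here is a minimal tiling planner for a single k x k "same"-padded convolution: it picks the tallest horizontal strip whose input (including halo rows) and output buffers fit in on-chip memory, then estimates the resulting DDR traffic. The cost model (weights ignored, tiling along height only, halo rows re-read from DDR for every strip) and all names are hypothetical simplifications, not the proposed framework.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TilePlan:
    rows_per_tile: int
    num_tiles: int
    ddr_bytes: int  # estimated activation traffic between DDR and on-chip memory


def plan_row_tiles(height: int, width: int, in_ch: int, out_ch: int,
                   kernel: int, bytes_per_elem: int, sram_bytes: int) -> TilePlan:
    """Choose the tallest output strip whose buffers fit on chip (hypothetical cost model)."""
    halo = kernel - 1  # extra input rows needed by a k x k 'same' convolution
    in_row = width * in_ch * bytes_per_elem
    out_row = width * out_ch * bytes_per_elem
    # Buffer for a strip of r output rows: (r + halo) input rows + r output rows
    rows = min(height, (sram_bytes - halo * in_row) // (in_row + out_row))
    if rows < 1:
        raise ValueError("a single row does not fit; tile along width or channels as well")
    num_tiles = math.ceil(height / rows)
    # Neighboring strips overlap by `halo` input rows, which are fetched from DDR twice
    in_bytes = (height + halo * (num_tiles - 1)) * in_row
    out_bytes = height * out_row
    return TilePlan(rows, num_tiles, in_bytes + out_bytes)


print(plan_row_tiles(224, 224, 64, 64, kernel=3, bytes_per_elem=1, sram_bytes=1 << 20))
```

For a 224 x 224 x 64 input with a 3 x 3 kernel, 64 output channels, 8-bit data, and 1 MiB of on-chip memory, this model picks 35-row strips (7 tiles), and the halo re-reads add 12 extra input rows to the DDR traffic. A real planner would also search over width and channel tiling and account for weight traffic.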

This paper investigates the relationship between image quality and computer vision performance. Two image quality (IQ) metrics defined in the IEEE P2020 draft Standard for Image Quality in Automotive Systems are used to determine the impact of image quality on object detection: (i) the Modulation Transfer Function (MTF), the most commonly used metric for measuring camera sharpness; and (ii) Contrast Transfer Accuracy (CTA), a newly defined, state-of-the-art metric for measuring image contrast. The results show that the MTF and CTA of an imaging system are affected by ISP tuning. Some correlation is found between MTF and object detection (OD) performance: in some models, AP50:95 improves as MTF50 increases. By contrast, scenes with similar CTA scores can have widely varying object detection performance; for example, Gaussian noise and edge enhancement produce similar CTA scores but different AP50:95 scores. CTA is therefore limited in its ability to predict object detection performance, and the results suggest that MTF is a better predictor of ML performance than CTA.
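
MTF50 is the spatial frequency at which the MTF falls to 50% of its low-frequency value. Given a sampled, normalized MTF curve (for example from a slanted-edge measurement), it can be read off by linear interpolation, and its relationship with per-condition AP50:95 can then be summarized with a Pearson correlation. A minimal sketch, assuming the MTF is normalized to 1 at zero frequency and sampled in increasing frequency order; the function names and sample values are illustrative, not taken from the study.

```python
import math
from typing import Sequence


def mtf50(freqs: Sequence[float], mtf: Sequence[float]) -> float:
    """Frequency at which a normalized MTF first drops to 0.5 (linear interpolation)."""
    for f0, m0, f1, m1 in zip(freqs, mtf, freqs[1:], mtf[1:]):
        if m0 >= 0.5 >= m1 and m0 > m1:
            return f0 + (m0 - 0.5) * (f1 - f0) / (m0 - m1)
    raise ValueError("MTF does not cross 0.5 within the sampled range")


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient, e.g. between per-condition MTF50 and AP50:95."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical MTF samples (frequency in cycles/pixel)
freqs = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]
mtf = [1.0, 0.8, 0.4, 0.2, 0.1, 0.05]
print(mtf50(freqs, mtf))  # about 0.175 cycles/pixel
```

A correlation computed this way only captures a linear trend; the "some models" caveat in the results suggests checking it per detector rather than pooling all models.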

Portraits are one of the most common use cases in photography, especially smartphone photography. However, evaluating the quality of real portraits is costly, inconvenient, and difficult to reproduce. We propose a new method to evaluate a wide range of detail-preservation renditions on realistic mannequins. This laboratory setup can cover all commercial cameras, from videoconferencing devices to high-end DSLRs. Our method is based on 1) training a machine learning model on a perceptual-scale target; 2) using two different regions of interest per mannequin, selected according to the quality of the input portrait image; and 3) merging the two quality scales to produce the final wide-range scale. Besides providing fine-grained, wide-range detail-preservation quality scores, the proposed method is shown in numerical experiments to be robust to noise and sharpening, unlike commonly used methods such as texture acutance measured on the Dead Leaves chart.

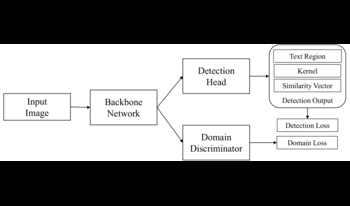

In this paper, we present a deep-learning approach that unifies handwriting and scene-text detection in images. Specifically, we adopt adversarial domain generalization to improve text detection across different domains and extend the conventional dice loss to provide extra training guidance. Furthermore, we build a new benchmark dataset that comprehensively captures various handwritten and scene text scenarios in images. Our extensive experimental results demonstrate the effectiveness of our approach in generalizing detection across both handwriting and scene text.
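
For reference, one common form of the conventional soft dice loss that the method extends is 1 - (2·Σpg + ε) / (Σp + Σg + ε), where p are predicted text probabilities, g is the binary ground-truth mask, and ε is a small smoothing constant. The sketch below implements only this standard form over flattened per-pixel values; the paper's extension, which adds extra training guidance, is not described in the abstract and is not reproduced here.

```python
from typing import Sequence


def soft_dice_loss(pred: Sequence[float], target: Sequence[float], eps: float = 1.0) -> float:
    """Conventional soft dice loss over flattened per-pixel values.

    pred: predicted text probabilities in [0, 1]; target: binary ground-truth mask.
    eps smooths the ratio so that empty masks do not divide by zero.
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    intersection = sum(p * g for p, g in zip(pred, target))
    total = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (total + eps)


print(soft_dice_loss([0.9, 0.8, 0.1, 0.0], [1, 1, 0, 0]))  # about 0.083
```

Because the loss depends on overlap ratios rather than per-pixel counts, it is less sensitive than plain cross-entropy to the heavy foreground/background imbalance typical of text masks.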

The Driver Monitoring System (DMS) presented in this work aims to enhance road safety by continuously monitoring a driver's behavior and emotional state during vehicle operation. The system uses computer vision and machine learning techniques to analyze the driver's face and actions, providing real-time alerts to mitigate potential hazards. The primary components of the DMS are gaze detection, emotion analysis, and phone usage detection. The system tracks the driver's eye movements to detect drowsiness and distraction through blink patterns and eye-closure durations. The DMS employs deep learning models to analyze the driver's facial expressions and extract dominant emotional states. When emotional distress is detected, the system offers calming verbal prompts to help the driver stay composed. Detected phone usage triggers visual and auditory alerts to discourage distracted driving. Integrating these features yields a comprehensive driver monitoring solution that helps prevent accidents caused by drowsiness, distraction, and emotional instability. The system's effectiveness is demonstrated through real-time test scenarios, and its potential impact on road safety is discussed.
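
The abstract does not specify how blink patterns and eye-closure durations are measured. One widely used technique, substituted here as an assumption, is the eye aspect ratio (EAR) of Soukupová and Čech, computed from six eye landmarks: it drops toward zero as the eye closes. The sketch below counts short closures as blinks and flags drowsiness when the eye stays closed for many consecutive frames; the 0.2 threshold and 15-frame limit are hypothetical values that would need tuning to the camera's frame rate.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks p1..p6: p1/p4 are the eye corners, p2/p3 upper lid, p6/p5 lower lid."""
    p1, p2, p3, p4, p5, p6 = eye
    return (math.dist(p2, p6) + math.dist(p3, p5)) / (2.0 * math.dist(p1, p4))


def analyze_eye_closures(ears: Sequence[float], closed_thresh: float = 0.2,
                         drowsy_frames: int = 15) -> Tuple[int, bool]:
    """Count blinks (closures shorter than drowsy_frames) and flag any longer closure as drowsiness."""
    blinks, run, drowsy = 0, 0, False
    for ear in list(ears) + [math.inf]:  # sentinel "open" frame closes a trailing run
        if ear < closed_thresh:
            run += 1
            continue
        if run >= drowsy_frames:
            drowsy = True
        elif run > 0:
            blinks += 1
        run = 0
    return blinks, drowsy


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))  # about 0.667
ears = [0.3] * 5 + [0.1] * 3 + [0.3] * 5 + [0.1] * 20  # one blink, then a long closure
print(analyze_eye_closures(ears))  # (1, True)
```

In practice the landmarks would come from a face-landmark detector run on each frame, and the EAR of both eyes is often averaged before thresholding to reduce noise.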