High dynamic range (HDR) scenes are known to be challenging for most cameras. The most common artifacts of poor HDR scene rendition are clipped bright areas and noisy dark regions, which make images look unnatural and unpleasing. This paper introduces a novel methodology for automating the perceptual evaluation of detail rendition in these extreme regions of the histogram, for images that portray natural scenes. The key contributions are 1) the construction of a robust database annotated in Just Objectionable Difference (JOD) scores, incorporating annotator outlier detection, and 2) the introduction of a Multitask Convolutional Neural Network (CNN) model that addresses the diverse context and region-of-interest challenges inherent in natural scenes. Our experimental evaluation shows that the predictions of our approach align strongly with human evaluations. The adaptability of our model makes it a valuable tool for consistent camera performance evaluation, contributing to the continued evolution of smartphone technologies.
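JOD scores are conventionally scaled so that a difference of 1 JOD means that 75% of observers prefer one condition over the other (Thurstone Case V scaling). The snippet below is a minimal sketch that assumes this convention and converts pairwise preference data into a JOD difference. The function names are hypothetical. The sketch reproduces neither the joint scaling of all conditions nor the annotator outlier detection used to build the database.

```python
from statistics import NormalDist

# Standard normal distribution used for Thurstone Case V scaling.
_STD_NORMAL = NormalDist(mu=0.0, sigma=1.0)

# Assumed convention: 1 JOD is the difference at which 75% of observers prefer
# one image, so the scale factor is 1 / Phi^-1(0.75) (about 1.4826).
_JOD_SCALE = 1.0 / _STD_NORMAL.inv_cdf(0.75)


def prob_to_jod(p_prefer: float) -> float:
    """Convert P(image A preferred over image B) into a JOD difference (A minus B)."""
    if not 0.0 < p_prefer < 1.0:
        raise ValueError("probability must lie strictly between 0 and 1")
    return _JOD_SCALE * _STD_NORMAL.inv_cdf(p_prefer)


def jod_from_counts(a_wins: int, b_wins: int) -> float:
    """JOD difference from raw comparison counts; +0.5 smoothing keeps p away from 0 and 1."""
    p = (a_wins + 0.5) / (a_wins + b_wins + 1.0)
    return prob_to_jod(p)
```

Full JOD scaling typically fits all conditions jointly by maximum likelihood over the complete comparison matrix. This pairwise formula only shows how the unit is defined.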

Retinex is a theory of colour vision and also a well-known image enhancement algorithm. The Retinex algorithms reported in the literature are usually classified as path-based or centre-surround. In the path-based approach, an image is processed by computing (reintegrating along) paths through neighbouring image regions and averaging over the paths. Centre-surround algorithms convolve an image (in log units) with a large-scale centre-surround-type operator. Both types of Retinex algorithm map a high dynamic range image to a lower-range counterpart suitable for display, and both have been proposed as a means of simultaneously enhancing the image for preference. In this paper, we reformulate one of the most common variants of the path-based approach and show that it can be recast as a centre-surround algorithm at multiple scales. Significantly, our new method processes images more quickly and is potentially biologically plausible. To the extent that path-based Retinex produces pleasing images, our reformulation produces equivalent outputs. Experiments validate our method.
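Centre-surround Retinex is commonly implemented by subtracting a blurred (surround) version of the log image from the log image itself, then averaging the result over several scales. The sketch below rests on several assumptions: Gaussian surrounds, equal weights across scales, and the hypothetical scales `sigmas=(1.0, 4.0, 16.0)`. It is a generic multi-scale centre-surround operator, not the reformulated path-based algorithm described above.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalised 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array with reflected borders (the 'surround')."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="reflect")
    # A 'valid' convolution trims exactly the r padded samples on each side.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def multiscale_centre_surround(img: np.ndarray, sigmas=(1.0, 4.0, 16.0), eps=1e-6) -> np.ndarray:
    """Average over scales of log(image) minus its Gaussian-blurred log (centre minus surround)."""
    log_img = np.log(np.asarray(img, dtype=float) + eps)
    out = np.zeros_like(log_img)
    for sigma in sigmas:
        out += log_img - gaussian_blur(log_img, sigma)
    return out / len(sigmas)
```

Because the operator works in log units, a global intensity scale factor cancels between centre and surround. This cancellation is what lets such operators compress dynamic range while preserving local contrast.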

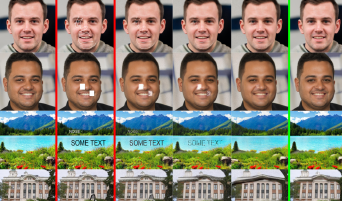

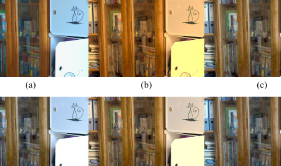

This paper introduces a blind in-painting technique for image quality enhancement and noise removal. Using Monte Carlo simulations, the proposed method approximates the optimal mask for automatic image in-painting. The noise removal mask is constructed progressively, starting from a mask sampled randomly from a binomial distribution. A confidence map is generated iteratively; it serves as a pixel-wise indicator of whether a given pixel lies within the dataset domain. Notably, the method removes the need to create an image mask manually to eliminate noise, a time-consuming process, especially when noise is dispersed across the entire image. The method also simplifies the identification of pixels to be in-painted, excluding normal (uncorrupted) pixels and thereby preserving the integrity of the original image content. Computer simulations demonstrate that the method removes various types of noise, including brush-painting and random salt-and-pepper noise. The proposed technique restores similarity between the original and normalized datasets, yielding a binary cross-entropy (BCE) of 0.69 and a peak signal-to-noise ratio (PSNR) of 20.069 dB. The method is applicable in diverse industrial and medical contexts.
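The abstract reports BCE and PSNR but does not define them or state the image value range. The minimal sketch below assumes the standard definitions and 8-bit images (so the peak value is 255 for PSNR). It also shows one reading of an initial mask "sampled randomly from a binomial distribution": one Bernoulli draw per pixel, that is, a binomial with n = 1. The function names and the default `p` are hypothetical.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; assumes 8-bit data unless max_value is changed."""
    mse = np.mean((reference.astype(float) - test.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))


def binary_cross_entropy(target: np.ndarray, prediction: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    p = np.clip(prediction.astype(float), eps, 1.0 - eps)  # avoid log(0)
    t = target.astype(float)
    return float(-np.mean(t * np.log(p) + (1.0 - t) * np.log(1.0 - p)))


def random_initial_mask(shape, p: float = 0.1, seed=None) -> np.ndarray:
    """Hypothetical initial mask: each pixel is flagged independently with probability p."""
    rng = np.random.default_rng(seed)
    return rng.binomial(1, p, size=shape).astype(bool)
```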

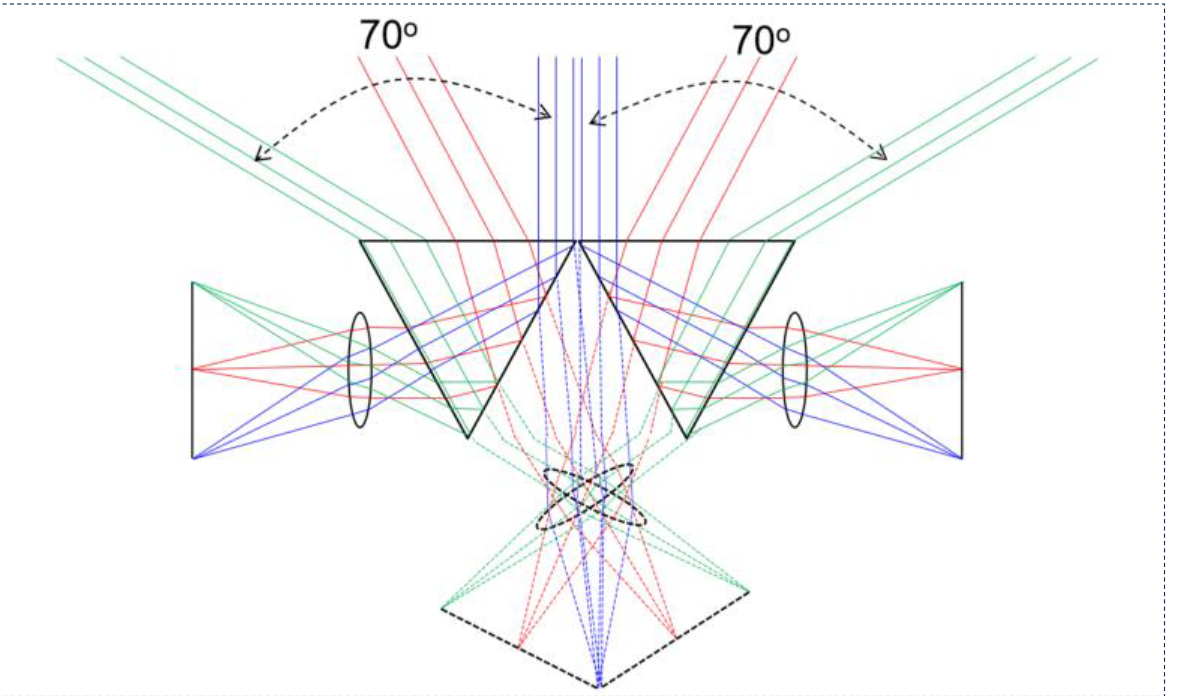

We introduce a true panoramic camera (TPC) designed for mobile smartphone applications. Using prism optics and well-known image processing algorithms, the camera achieves parallax-free, seamless stitching of images captured by two cameras pointing in different directions on opposite sides of the normal. The result is an ultra-wide (140° × 53°) panoramic field of view (FOV) without the optical distortions typically associated with ultra-wide-angle lenses. Packed into a compact camera module measuring 22 mm (length) × 11 mm (width) × 9 mm (height) and integrated into a mobile test platform with a Qualcomm Snapdragon® 8 Gen 1 processor, the TPC can capture panoramic pictures in a single shot and record panoramic videos.

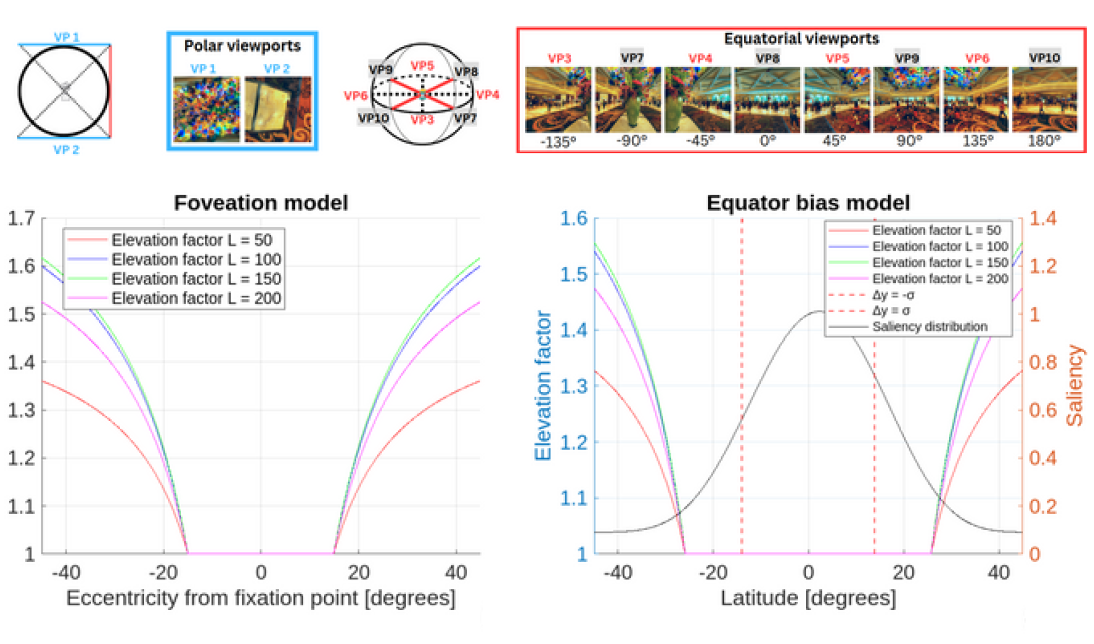

Spatial just noticeable difference (JND) refers to the smallest amplitude of variation that the human visual system (HVS) can reliably detect. Several studies have defined models based on thresholds obtained in controlled experiments for conventional 2D or 3D imaging. While JND is well understood for these types of content, it is legitimate to question whether the results remain valid for extended reality (XR), where the viewing conditions are significantly different. In this paper, we investigate the performance of well-known 2D-JND models on 360-degree images. These models are integrated into basic quality assessment metrics to study their ability to improve quality prediction with regard to human judgment; here, the metrics serve as tools to assess the effectiveness of the JND models. In addition, to mimic 360-degree viewing conditions, the equator bias is used to balance the JND thresholds. Overall, the results suggest that 2D-JND models are not well adapted to XR viewing conditions and require in-depth improvement or redefinition before they can be applied. The slight improvement obtained with the equator bias demonstrates the potential of taking XR characteristics into account and opens the way for further investigation.
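The abstract does not say how the JND models are embedded in the metrics or how the equator bias is applied. One common way to embed a JND model is in the spirit of the peak signal-to-perceptible-noise ratio (PSPNR): only the part of the error that exceeds the per-pixel JND threshold is counted. The sketch below combines that scheme with a hypothetical equator-bias adjustment. It assumes a Gaussian weight over latitude on an equirectangular image and thresholds that grow away from the equator. The bias form and the parameters `sigma_deg` and `alpha` are illustrative assumptions, not the paper's method.

```python
import numpy as np


def row_latitudes(height: int) -> np.ndarray:
    """Latitude (degrees) at the centre of each equirectangular row: +90 top, -90 bottom."""
    return 90.0 - (np.arange(height) + 0.5) * 180.0 / height


def equator_bias(height: int, sigma_deg: float = 20.0) -> np.ndarray:
    """Hypothetical Gaussian equator-bias weight per row: near 1 at the equator, lower at the poles."""
    lat = row_latitudes(height)
    return np.exp(-0.5 * (lat / sigma_deg) ** 2)


def jnd_masked_psnr(ref, dist, jnd, alpha: float = 1.0, sigma_deg: float = 20.0,
                    max_value: float = 255.0) -> float:
    """PSPNR-style score in dB: only error above the (bias-adjusted) JND threshold counts.

    ref, dist: (H, W) equirectangular luminance images; jnd: (H, W) thresholds from any
    2-D JND model. alpha = 0 disables the hypothetical equator-bias adjustment.
    """
    ref = np.asarray(ref, dtype=float)
    dist = np.asarray(dist, dtype=float)
    w = equator_bias(ref.shape[0], sigma_deg)[:, None]  # (H, 1), broadcasts over columns
    # Thresholds are raised where viewers are assumed to look less (away from the equator).
    jnd_eff = np.asarray(jnd, dtype=float) * (1.0 + alpha * (1.0 - w))
    perceptible = np.maximum(np.abs(ref - dist) - jnd_eff, 0.0)
    mse = np.mean(perceptible ** 2)
    if mse == 0.0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))
```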

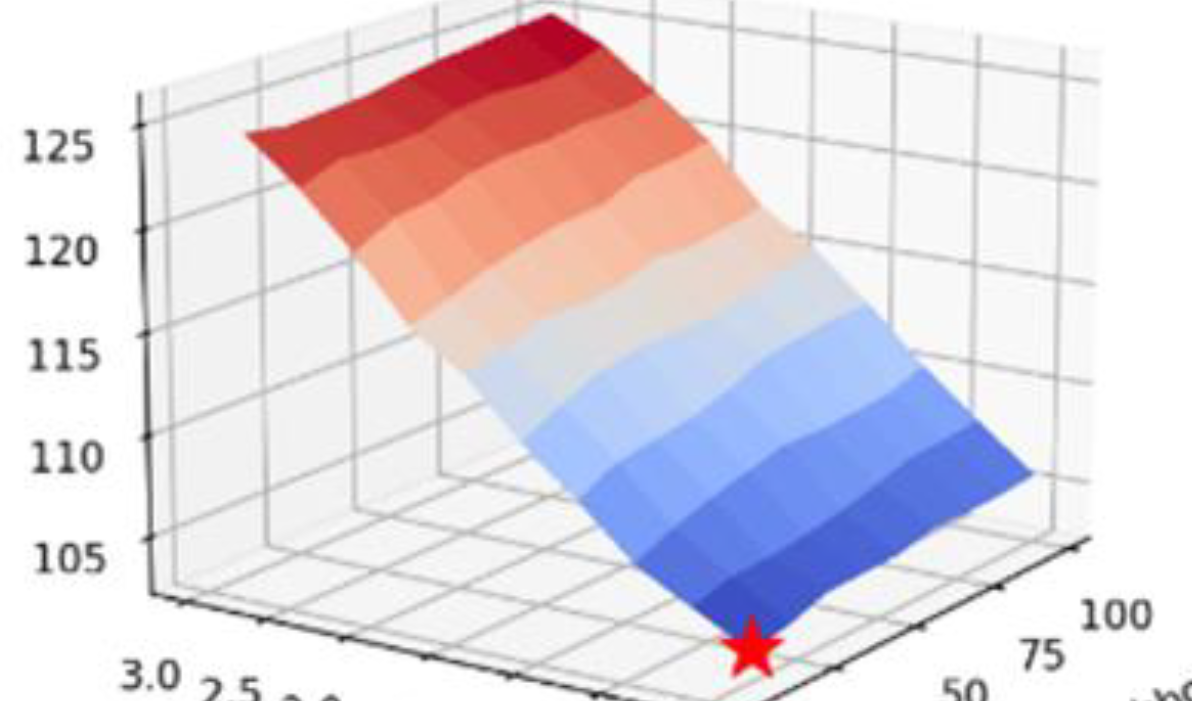

Point clouds generated from 3D scans of part surfaces consist of discrete points, some of which may be outliers. Filtering techniques to remove these outliers from point clouds frequently require a “guess and check” method to determine proper filter parameters. This paper presents two novel approaches to automatically determine proper filter parameters using the relationships between point cloud outlier removal, principal component variance, and the average nearest neighbor distance. Two post-processing workflows were developed that reduce outlier frequency in point clouds using these relationships. These post-processing workflows were applied to point clouds with artificially generated noise and outliers, as well as a real-world point cloud. Analysis of the results showed the approaches effectively reduced outlier frequency while preserving the ground truth surface, without requiring user input.
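Statistical outlier removal based on the average nearest-neighbour distance is a standard filter of the kind described above. Its two parameters, the neighbour count `k` and the threshold multiplier `n_std`, are the ones that usually need guess-and-check tuning. The minimal sketch below uses brute-force O(n²) distances, so it suits only small clouds. It also includes a helper that returns principal component variances. The paper's automatic parameter selection is not reproduced, and the default parameter values are hypothetical.

```python
import numpy as np


def mean_knn_distance(points: np.ndarray, k: int = 8) -> np.ndarray:
    """Average distance from each point to its k nearest neighbours (brute force)."""
    diff = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    # After sorting each row, column 0 is the point's zero distance to itself.
    knn = np.sort(dist, axis=1)[:, 1:k + 1]
    return knn.mean(axis=1)


def statistical_outlier_removal(points: np.ndarray, k: int = 8, n_std: float = 2.0):
    """Keep points whose mean k-NN distance is at most mean + n_std * std over the cloud."""
    d = mean_knn_distance(points, k)
    threshold = d.mean() + n_std * d.std()
    keep = d <= threshold
    return points[keep], keep


def principal_variances(points: np.ndarray) -> np.ndarray:
    """Variances along the principal axes (covariance eigenvalues), largest first."""
    centred = points - points.mean(axis=0)
    cov = centred.T @ centred / (len(points) - 1)
    return np.sort(np.linalg.eigvalsh(cov))[::-1]
```

For a nearly planar patch, the smallest principal variance measures the spread normal to the surface. This makes it one plausible indicator of whether outliers remain after filtering.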

Low-light image enhancement is an active research topic because low-light images do not accurately reflect the content of objects. Low-light image enhancement techniques can effectively restore color and texture information. Unlike traditional low-light enhancement methods, which map directly from low-light to normal-light images, we propose a low-light enhancement method based on multispectral reconstruction. The key idea is that the low-light image is first transformed into the spectral reflectance space: a deep learning model learns an end-to-end mapping from a low-light image to a normal-light multispectral image. The corresponding normal-light color image is then rendered from the reconstructed multispectral image, completing the enhancement. The motivation is to test whether enhancing low-light images through multispectral reconstruction improves enhancement performance. Verification on the commonly used LOL dataset showed that the proposed method outperforms traditional direct enhancement methods; however, the underlying mechanism requires further study.
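The rendering step, from a reconstructed multispectral image to a color image, is typically done by integrating reflectance × illuminant × colour-matching functions to obtain CIE XYZ, then converting to a display space. The minimal sketch below assumes that colorimetric pipeline. The caller supplies the illuminant and colour-matching functions sampled at the same wavelengths as the reconstruction; no spectral data is bundled. The sRGB conversion uses the standard IEC 61966-2-1 matrix and transfer function, which presuppose a D65 rendering illuminant. The paper's actual rendering settings are not stated.

```python
import numpy as np

# Standard linear XYZ -> sRGB matrix (IEC 61966-2-1, D65 white point).
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
])


def reflectance_to_xyz(reflectance, illuminant, cmfs) -> np.ndarray:
    """Render spectral reflectance (..., N) to XYZ (..., 3) under an illuminant.

    illuminant: (N,) relative spectral power; cmfs: (N, 3) colour-matching functions
    at the same N wavelengths. Normalised so that a perfect reflector has Y = 1.
    """
    weights = np.asarray(illuminant, dtype=float)[:, None] * np.asarray(cmfs, dtype=float)
    k = 1.0 / weights[:, 1].sum()  # uniform wavelength spacing cancels in this ratio
    return k * (np.asarray(reflectance, dtype=float) @ weights)


def xyz_to_srgb(xyz) -> np.ndarray:
    """XYZ (..., 3) -> gamma-encoded sRGB in [0, 1]; out-of-gamut values are clipped."""
    linear = np.clip(np.asarray(xyz, dtype=float) @ XYZ_TO_LINEAR_SRGB.T, 0.0, 1.0)
    return np.where(linear <= 0.0031308,
                    12.92 * linear,
                    1.055 * np.power(linear, 1.0 / 2.4) - 0.055)
```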

In order to improve traffic conditions and reduce carbon emissions in urban areas, smart mobility and smart city measures are becoming increasingly important. The cameras these measures require must be cheap and compact enough for widespread use, which necessitates low-cost standard dynamic range (SDR) cameras. However, these cameras do not provide sufficient image quality for reliable classification of road users, especially at night. In this paper, we present a data-driven approach to optimise image quality and improve the night-time classification accuracy of a given vehicle classifier. Our approach combines image inpainting and high dynamic range (HDR) image reconstruction to reconstruct and optimise critical image areas. To this end, we introduce a large HDR traffic dataset with time-synchronised SDR images. We also present an approach to automatically degrade the HDR traffic data to generate relevant and challenging training pairs. We show that our approach significantly improves the classification of road users at night without retraining the underlying vehicle classifier. Supplementary information and the dataset are published at https://www.mt.hs-rm.de/nighttime-traffic-reconstruction/.
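The abstract does not describe the degradation model used to create training pairs. As a purely hypothetical sketch, the code below simulates an SDR capture from linear HDR data in five steps: random under-exposure, additive Gaussian noise, clipping, power-law encoding and 8-bit quantisation. The function names, the exposure range and the noise level are assumptions chosen for illustration.

```python
import numpy as np


def degrade_hdr_to_sdr(hdr, exposure=1.0, noise_sigma=0.01, gamma=2.2, rng=None) -> np.ndarray:
    """Hypothetical HDR -> 8-bit SDR degradation: expose, add noise, clip, encode, quantise.

    hdr: linear HDR radiance (float array of any shape); after multiplying by
    `exposure`, a value of 1.0 maps to SDR white.
    """
    rng = np.random.default_rng() if rng is None else rng
    linear = np.asarray(hdr, dtype=float) * exposure
    if noise_sigma > 0:
        # Additive Gaussian noise, a simplification of real sensor noise.
        linear = linear + rng.normal(0.0, noise_sigma, size=linear.shape)
    clipped = np.clip(linear, 0.0, 1.0)               # highlights saturate, as in an SDR sensor
    encoded = clipped ** (1.0 / gamma)                # simple power-law display encoding
    return np.round(encoded * 255.0).astype(np.uint8)  # 8-bit quantisation


def make_training_pair(hdr, rng=None, log2_exposure_range=(-4.0, 0.0)):
    """Sample a random exposure (in stops) and return (degraded SDR input, HDR target)."""
    rng = np.random.default_rng() if rng is None else rng
    exposure = 2.0 ** rng.uniform(*log2_exposure_range)
    return degrade_hdr_to_sdr(hdr, exposure=exposure, rng=rng), hdr
```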

In the convolutional retinex approach to image lightness processing, a captured image is processed by a centre/surround filter designed to mitigate the effects of shading (illumination gradients), which in turn compresses the dynamic range. Recently, an optimisation approach to convolutional retinex was introduced that outputs a convolution filter that is optimal in the least-squares sense when the shading and albedo autocorrelation statistics are known or can be estimated. Although the method uses closed-form expressions for the autocorrelation matrices, the optimal filter has so far been computed numerically. In this paper, we parameterise the filter and show that, for a simple shading model, the optimal filter takes the form of a cosine function. This finding suggests that, in general, the optimal filter shape depends directly on the functional form assumed for the shading.
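A minimal sketch of the numerical route rests on four assumptions: the log image is albedo plus shading, both are zero-mean, they are mutually uncorrelated, and both are stationary on a periodic 1-D domain. Under these assumptions, the least-squares (Wiener) estimator of the albedo is `F = R_a (R_a + R_s)^-1`, where `R_a` and `R_s` are the albedo and shading autocorrelation matrices. Because stationary statistics give circulant matrices, `F` is itself circulant, and its first row is a convolution kernel. This is the textbook linear estimator, not necessarily the exact formulation of the method described above, and the function names are hypothetical.

```python
import numpy as np


def circulant(r: np.ndarray) -> np.ndarray:
    """Circulant matrix with R[i, j] = r[(j - i) mod n]; r must satisfy r[k] == r[n - k]."""
    n = len(r)
    return np.array([np.roll(r, i) for i in range(n)])


def optimal_filter_matrix(r_albedo, r_shading) -> np.ndarray:
    """Least-squares albedo estimator F = R_a (R_a + R_s)^-1 from autocorrelation functions."""
    R_a = circulant(np.asarray(r_albedo, dtype=float))
    R_s = circulant(np.asarray(r_shading, dtype=float))
    # Both matrices are symmetric, so F^T = (R_a + R_s)^-1 R_a, which solve() computes
    # without forming an explicit inverse.
    return np.linalg.solve(R_a + R_s, R_a).T


def optimal_kernel(r_albedo, r_shading) -> np.ndarray:
    """First row of the circulant F: a symmetric kernel applied by circular convolution."""
    return optimal_filter_matrix(r_albedo, r_shading)[0]
```

With white albedo (an impulse autocorrelation) and a purely sinusoidal shading autocorrelation, the computed kernel is an impulse minus a scaled cosine, which is a centre/surround shape. This toy case is not claimed to be the parameterisation used in the paper.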

When colors are displayed on websites or in apps, color calibration is not feasible for consumer smartphones and tablets: the vast majority of consumers do not have the time, equipment or expertise to calibrate their displays. For such situations, we recently developed MDCIM (Mobile Display Characterization and Illumination Model). MDCIM uses optics-based image processing to improve digital color representation as assessed by human observers, and it takes into account display-specific parameters and local lighting conditions. In previous publications, we determined model parameters for four mobile displays: one OLED display (Samsung Galaxy S4) and three LCD displays (iPad Air 2 and the iPad models from 2017 and 2018). Here, we investigate the performance of another OLED display, that of the iPhone XS Max. In a psychophysical experiment, we show that colors generated by MDCIM are perceived as a much better match to physical samples than colors generated by the default method, which is based on the sRGB color space and the color management system implemented by the smartphone manufacturer. With MDCIM, the percentage of reasonable-to-good color matches rises from 3.1% to 85.9%, while the percentage of incorrect color matches drops from 83.8% to 3.6%.